Additive quantizers

An additive quantizer approximates a vector

where

As usual with data-adaptive quantizers, the tables

The product quantization can be considered as a special case of additive quantization where only a sub-vector of size

A good introduction to additive quantization is Additive quantization for extreme vector compression, Babenko and Lempitsky, CVPR'14.

The Faiss implementation is based on the AdditiveQuantizer object.

The Faiss implementation imposes that

The decoding of an additive quantizer is unambiguous. However there is no simple method to (1) train an additive quantizer and (2) perform the encoding. Therefore, Faiss contains two implementations of additive quantizers: the Residual Quantizer and the Local Search Quantizer.

The Faiss residual quantizer is in the ResidualQuantizer object.

In a residual quantizer, the encoding is sequential.

At stage

This is a greedy approach, which tends to get trapped in local minima.

To avoid the local minima, the encoder maintains a beam of possible codes and picks the best code at stage M.

The beam size is given by the max_beam_size field of ResidualQuantizer: the larger the more accurate, but also the slower.

At training time, the tables are trained sequentially by k-means at each step. The max_beam_size is also used for that.

The ResidualQuantizer supports a version of k-means that starts training in a lower dimension, as described in "Improved Residual Vector Quantization for High-dimensional Approximate Nearest Neighbor Search", Shicong et al, AAAI'15.

We found that this k-means implementation was more useful for residual quantizer training than for k-means used in non-recursive settings (like IVF training).

The alternative k-means is implemented in the ProgressiveDimClustering object.

The LSQ quantizer is from "LSQ++: Lower running time and higher recall in multi-codebook quantization", Julieta Martinez, et al. ECCV 2018.

It is implemented in the LocalSearchQuantizer object.

At encoding time, LSQ starts from a random encoding of the vector and proceeds to a simulated annealing optimization to optimize the codes.

The parameter that determines the speed vs. accuracy tradeoff is the number of optimization steps encode_ils_iters.

The Faiss LSQ implementation is limited to constant codebook sizes

The simplest usage of the additive quantizers is as codecs.

Like other Faiss codecs, they have a compute_codes function and a decode function.

Since encoding is approximate and iterative, the tradeoff between them is mainly on encoding time vs. accuracy.

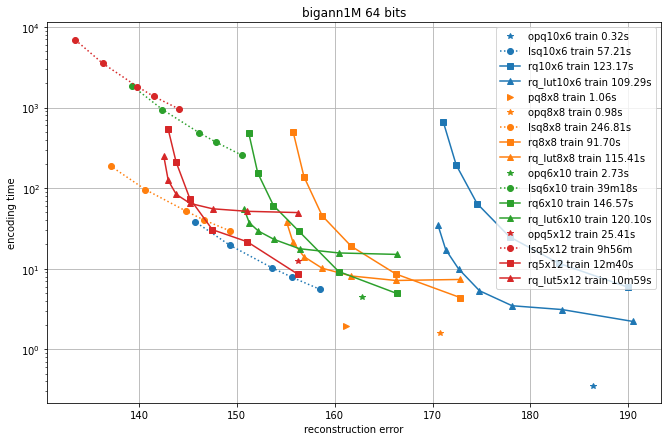

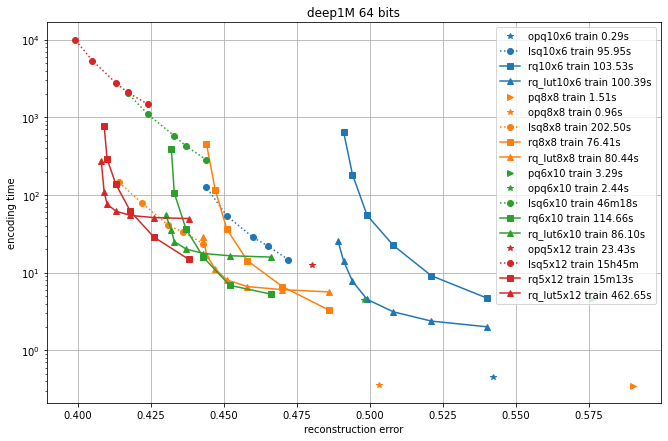

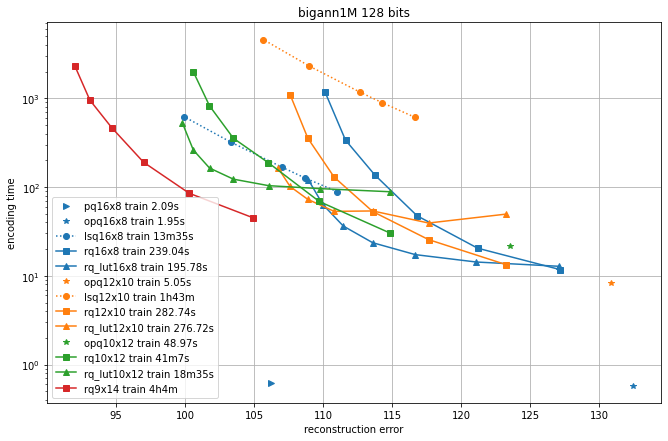

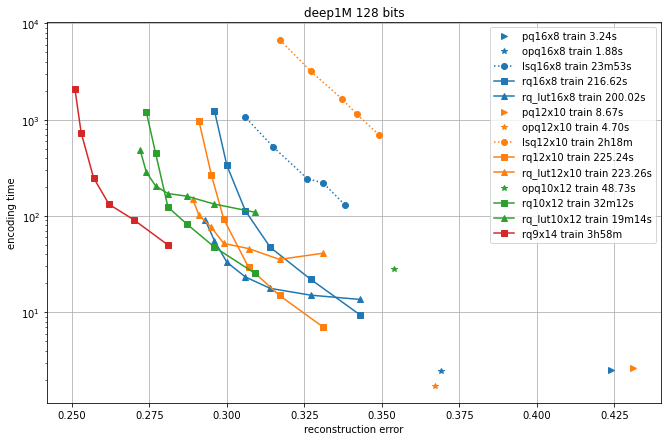

In the following, we compare different quantizers in terms of reconstruction error and recall for symmetric comparison.

We compare the additive quantizer options on the Deep1M and Bigann1M datasets (bigann rather than SIFT1M because we need more than 100k training vectors).

The results are in terms of average symmetric L2 encoding error, to be comparable with the Codec benchmarks.

The regimes are in 64 bits and 128 bits.

Since the limiting factor of additive quantizers is often the encoding time, we plot the accuracy vs. encoding time.

The different operating points for a given codec are obtained by varying the max_beam_size parameters (for the residual quantizer) or the encode_ils_iters parameter (for the LSQ).

One can observe that:

-

the PQ variants (PQ and OPQ) are the fastest options by far

-

it is worthwhile to experiment with other sizes than the traditional 8-bit elementary quantizers. Many interesting operating points are obtained with laerger vocabularies (although training time can be prohibitive)

-

for most use cases, the residual quantizer is better than LSQ

-

for the residual quantizer, the encoding with look-up tables (rq_lut) is interesting for high values of max_beam_size (and also when the data dimensionality increases, but this is not shown in the plots). This is enabled by setting

use_beam_LUTto 1 in the quantizer object.

How to generate

Code: benchs/bench_quantizer.py

Driver script:

for dsname in deep1M bigann1M ; do

for q in pq rq lsq rq_lut; do

for sz in 8x8 6x10 5x12 10x6 16x8 12x10 10x12 9x14 8x16; do

run_on_half_machine BQ.$dsname.$q.$sz.j \

/usr/bin/time -v python -X faulthandler -u $code/benchs/bench_quantizer.py $dsname $sz $q

done

done

done

Plot with: this notebook.

At each encoding step

Instead of computing the residuals explicitly, use_beam_LUT decomposes this distance into

The four terms are computed as follows:

-

$|| T_m(j) ||^2$ is stored in thecent_normstable -

$|| x - T_1[i_1] - ... - T_{m-1}[i_{m-1}] ||^2$ is the encoding distance of step$m -1$ -

$\langle T_m(j), x \rangle$ is computed on entry to the function (it is the only component whose computation complexity depends on$d$ ). -

$\Sigma_{\ell=1}^{m-1} \langle T_m(j), T_\ell(i_\ell) \rangle$ is stored in thecodebook_cross_productstable.

It appears that this approach is more interesting in high dimension (

Additive quantizers can be used to do compressed-domain distance comparisons to do efficient comparisons between one query and many encoded vectors.

The flat indexes are implemented in IndexAdditiveQuantizer, parent class of IndexResidualQuantizer and IndexLocalSearchQuantizer.

The search function depends only on the additive quantization decoder, therefore it is in common between the two child classes.

For a given query q, it is possible to precompute look-up tables

Then the dot product with x' encoded as

LUTs cannot be used directly to compute L2 distances. However,

Therefore if the norm of search_type field in the AdditiveQuantizer object.

It can take the following values:

-

ST_decompress: no norm stored, decompress database vector at runtime -

ST_LUT_nonorm: just treat ||x'|| = 0 (may be OK for normalized vectors) -

ST_norm_float,ST_norm_qint8,ST_norm_qint4: use various levels of compression

TODO

The additive quantizers can be used as a drop-in replacement for product or scalar quantization: the database vectors x are then stored in different buckets, as determined by a first-level coarse quantizer.

This is implemented in the objects IndexIVFAdditiveQuantizer, IndexIVFResidualQuantizer and IndexIVFLocalSearchQuantizer.

In addition, each bucket is associated to a centroid by_residual determines whether the vector is stored directly or by residual.

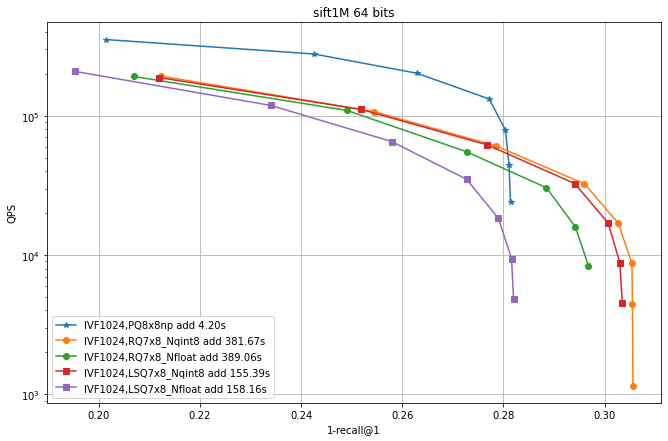

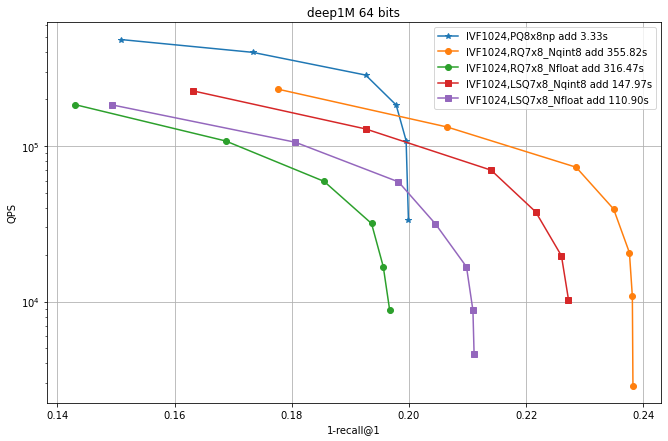

For datasets of scale 1M elements, we keep the coarse quantizer the same (Flat, 1024 centroids).

Then we compare the speed vs. accuracy tradeoff of the IVF variants, varying the nprobe parameter.

We also indicate the addition time, because as before the encoding time can be a limiting factor.

See The index factory for an explanation of the quantizer strings.

Observations:

-

IVF PQ is faster than the additive quantizer variants. This is mainly because the precomputed tables options is not implemented for the additive quantizers yet.

-

for the additive quantizers we fixed the size to 7x8 bits plus 8 bits of norm (

qint8), so that the total size is 64 bits like PQ8x8. Suprisingly, the exact encoding of the vectors (_Nfloat) is not necessarily better than quantizing it to 8 bits (_Nqint8) -

The additive quantizer options have a significant advantage in terms of accuracy.

-

LSQ addition is faster than for the residual quantizers.

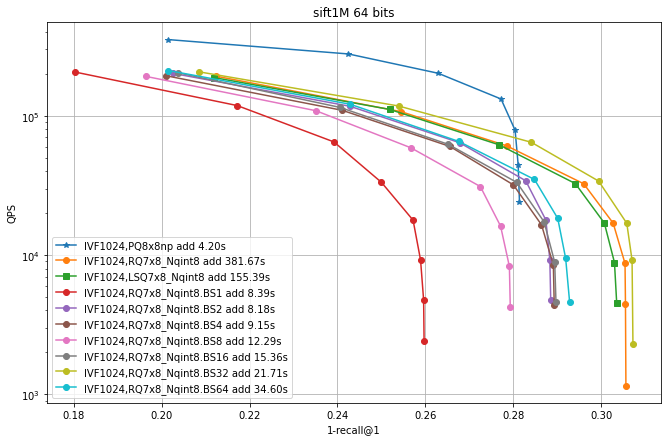

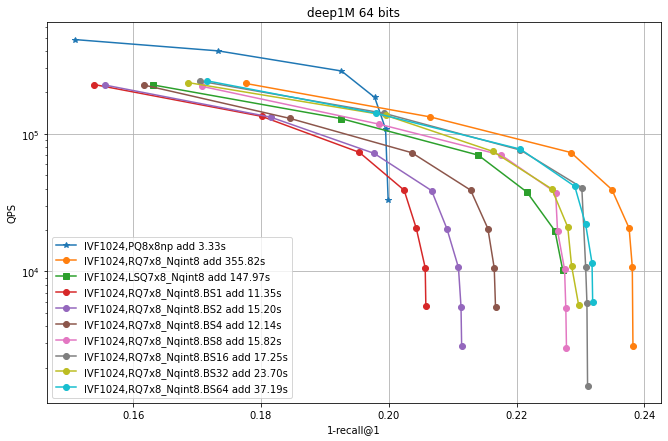

In this experiment the residual quantizer beam size was fixed at 30. In the following we vary this parameter.

Here we enable LUTs, with use_beam_LUT=1.

Then we vary the beam size (hence the BSx suffix).

Observations:

-

enabling look-up tables makes RQ encoding much faster

-

the largest beam is not necessarily the most accurate.

How to generate

Code: benchs/bench_all_ivf/bench_all_ivf.py

Driver script:

for dsname in sift1M deep1M; do

for indexkey in \

IVF1024,RQ7x8_Nqint8 IVF1024,RQ7x8_Nfloat IVF1024,PQ8x8 \

IVF1024,LSQ7x8_Nqint8 IVF1024,LSQ7x8_Nfloat

do

run_on_half_machine IVFQ.$dsname.$indexkey.f \

python -u $code/benchs/bench_all_ivf/bench_all_ivf.py --db $dsname --indexkey $indexkey

done

for indexkey in IVF1024,PQ8x8np

do

run_on_half_machine IVFQ.$dsname.$indexkey.d \

python -u $code/benchs/bench_all_ivf/bench_all_ivf.py --db $dsname --indexkey $indexkey --autotune_range ht:64

done

run_on_half_machine IVFQ.$dsname.IVF1024,RQ8x8_Nnone.c \

python -u $code/benchs/bench_all_ivf/bench_all_ivf.py --db $dsname --indexkey IVF1024,RQ8x8_Nnone

run_on_half_machine IVFQ.$dsname.IP.IVF1024,RQ8x8_Nnone.c \

python -u $code/benchs/bench_all_ivf/bench_all_ivf.py --force_IP --db $dsname --indexkey IVF1024,RQ8x8_Nnone

done

for beam_size in 1 2 4 8 16 32 64; do

for dsname in sift1M deep1M; do

for indexkey in IVF1024,RQ7x8_Nqint8 IVF1024,RQ7x8_Nfloat

do

run_on_half_machine IVFQ.$dsname.$indexkey.BS$beam_size.a \

python -u $code/benchs/bench_all_ivf/bench_all_ivf.py \

--db $dsname --indexkey $indexkey --RQ_use_beam_LUT 1 \

--RQ_beam_size $beam_size

done

done

done

Plot with: this notebook.

Every quantizer has a set of reproduction values, ie. the span of elements that it can decode. If the number of values is not too large, they can be considered as a set of centroids. By performing nearest-neighbor search in the set of centroids, we obtain a coarse quantizer for an IVF index.

Visiting nprobe > 1 buckets is supported naturally by searching for the nprobe nearest centroids.

Compared to a "flat" coarse quantizer, the accuracy is a bit lower. However, the assignment is more efficient and the coarse quantizer codebook is much more compact.

The coarse quantizer versions are AdditiveCoarseQuantizer and its children ResidualCoarseQuantizer and LocalSearchCoarseQuantizer.

For coarse quantizers with L2 distance, the norms of all centroids are stored by default for efficiency in the centroids_norms.

To disable this storage, set the ResidualQuantizer::Skip_codebook_tables bit in rcq.rq.train_type.

There are two ways of searching the nearest centroids, which are selected using the beam_factor field of ResidualCoarseQuantizer:

-

the

ResidualCoarseQuantizeruses beam search by default to find the nearest centroids. The size of the beam isbeam_factor*nprobe. -

exhaustive search (

beam_factoris set to a negative value): the distances to all centroids are computed and thenprobenearest centroids are kept. Here the norms are required soSkip_codebook_tablescannot be set.LocalSearchCoarseQuantizeralways uses exhaustive search.

The set_beam_factor method sets the beam_factor field, does some checks and computes the tables if required.

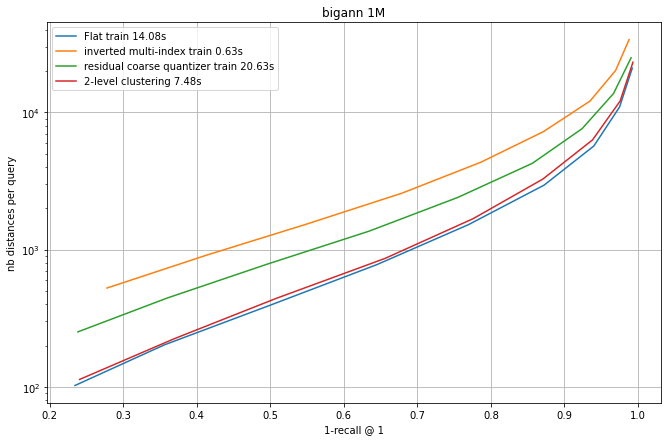

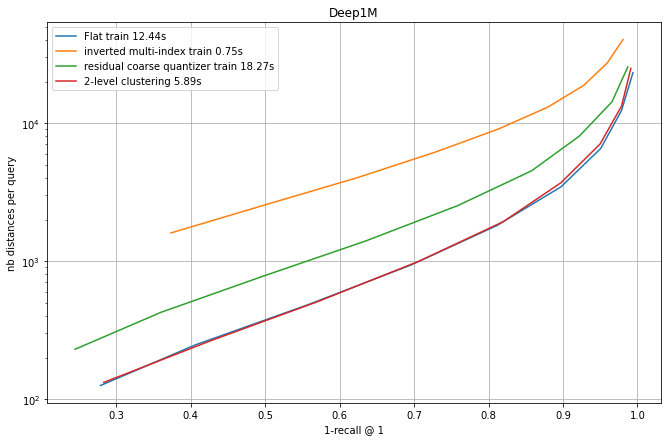

We compare four coarse quantization options: a flat quantizer, an IMI (inverted multi-index) quantizer and the residual coarse quantizer.

The fourth option is a 2-level quantization. This is one very simple approach was suggested by Harsha Simhadri, from Microsoft, who organizes the billion-scale ANN challenge. It simply consists in doing a two-level hierachical clustering to obtain the centroids. However, the centroids are then considered in a full table (ie. there is no hierarchical routing at assignment time).

Each experiment runs an IndexIVFFlat with different quantizers that all produce nlist = 128^2 = 16384 centroids. At search time, different settings of nprobe obtain a tradeoff between the 1-recall@1 and the the number of distances computed (a reproducible proxy for search time).

Observations:

-

flat quantization and 2-level clustering the best options, but they require to store all centroids. Among the "compact" options, RCQ is significantly more accurate than IMI.

-

The training times are indicated. This is a small scale experiment, the training time grows quadratically for the flat index (that is already the slowest).

-

For the 2-level clustering and the flat options the full set of centroids needs to be searched at addition time. It is usual to do this with a HNSW index on top of the centroids. However, in this small-scale case, the HNSW index is so accurate that the difference with the curves is not visible.

Evaluation code: large_coarse_quantizer.ipynb

There is a special property of residual quantizers: a flat IndexResidual can be converted without re-encoding into an IndexIVFResidual. This works as follows:

-

codes are split into a prefix and a suffix, for example

$[i_1,..,i_3]$ and$[i_4,...,i_M]$ -

the residual codec is split into a

ResidualCoarseQuantizerfor the first 3 codebooks and an IndexIVFResidual for the remaining M-3 ones. -

the codes [i_4, ... , i_M] are stored in the inverted lists

At search time, the distances computed are equivalent to the flat IndexResidual, except that the search can be performed in a non-exhaustive way.

This is tested in test_residual_quantizer.TestIndexResidualQuantizer.test_equiv_rq

Faiss building blocks: clustering, PCA, quantization

Index IO, cloning and hyper parameter tuning

Threads and asynchronous calls

Inverted list objects and scanners

Indexes that do not fit in RAM

Brute force search without an index

Fast accumulation of PQ and AQ codes (FastScan)

Setting search parameters for one query

Binary hashing index benchmark