uTensor significantly reduces the cost of AI inference, bringing AI to Cortex-M devices.

This tutorial discusses at a high level what TensorFlow graphs are and how to start using uTensor to build a handwriting recognition application. This involves training a fully-connected neural network on the MNIST dataset on a host machine, generating embedded code, and building an mbed application that classifies handwritten digits based on user input.

TensorFlow is an open-source software library for numerical computation using data flow graphs. Nodes in the graph represent mathematical operations, and the graph edges represent tensors, a type of multidimensional data array.
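To make the graph idea concrete, here is a minimal sketch of a data flow graph in plain Python. It does not use TensorFlow's API: the `Node` class and the `constant`, `add`, and `multiply` helpers are hypothetical names invented for this illustration, and tensors are simplified to flat lists of floats.

```python
# Toy data flow graph. This is NOT TensorFlow's API; all names are hypothetical.
from dataclasses import dataclass, field
from typing import Callable, List, Sequence


@dataclass
class Node:
    """A graph node: one operation plus the nodes whose outputs feed it."""
    name: str
    op: Callable[..., List[float]]
    inputs: Sequence["Node"] = field(default_factory=tuple)

    def evaluate(self) -> List[float]:
        # Each edge carries a tensor (here, a flat list of floats)
        # from an input node to this node.
        args = [node.evaluate() for node in self.inputs]
        return self.op(*args)


def constant(name: str, values: List[float]) -> Node:
    """A node with no inputs that always produces the same tensor."""
    return Node(name, lambda: list(values))


def add(name: str, a: Node, b: Node) -> Node:
    """Element-wise addition of two tensors."""
    return Node(name, lambda x, y: [p + q for p, q in zip(x, y)], (a, b))


def multiply(name: str, a: Node, b: Node) -> Node:
    """Element-wise multiplication of two tensors."""
    return Node(name, lambda x, y: [p * q for p, q in zip(x, y)], (a, b))


# Build the graph y = x * w + b, then evaluate it.
x = constant("x", [1.0, 2.0, 3.0])
w = constant("w", [0.5, 0.5, 0.5])
b = constant("b", [1.0, 1.0, 1.0])
y = add("y", multiply("xw", x, w), b)

result = y.evaluate()
print(result)  # [1.5, 2.0, 2.5]
```

Evaluating `y` pulls values along the edges from `x`, `w`, and `b`, which mirrors how a graph runtime resolves a node's inputs before running its operation.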

uTensor translates these TensorFlow graphs into C++ code, which you can run on embedded devices.

Running artificial intelligence on embedded systems involves three main steps:

- Construct a machine learning model, either offline on your local machine or in the cloud.

- Feed this model into code generation tools. These tools output uTensor kernels, which you can run on your devices. These kernels are functions that output predictions based on their inputs.

- Build embedded applications that call these functions to make informed decisions.

You will need the following:

- DISCO_F413ZH

- mbed-cli

- uTensor-cli (install with: pip install utensor-cgen)

- TensorFlow 1.6+

- Jupyter Notebook (optional; install with: pip install jupyter)

NOTE: Windows is not currently supported; here are two workarounds:

- If you are running Windows, you must have both Python 2 and Python 3 installed. TensorFlow 1.2+ does not support Python 2.7 on Windows and officially supports only Python 3.5 and 3.6. Meanwhile, mbed officially supports Python 2 and has limited support for Python 3. You can get the demo working by running the IPython notebook with the Python 3 kernel and building the mbed project with Python 2.

- Use the Cloud9 environment.

- Import the project:

$ mbed import https://github.com/uTensor/utensor-mnist-demo

Note

-

The repository contains reference model files. You may choose to skip to the Prepare the mbed project section.

-

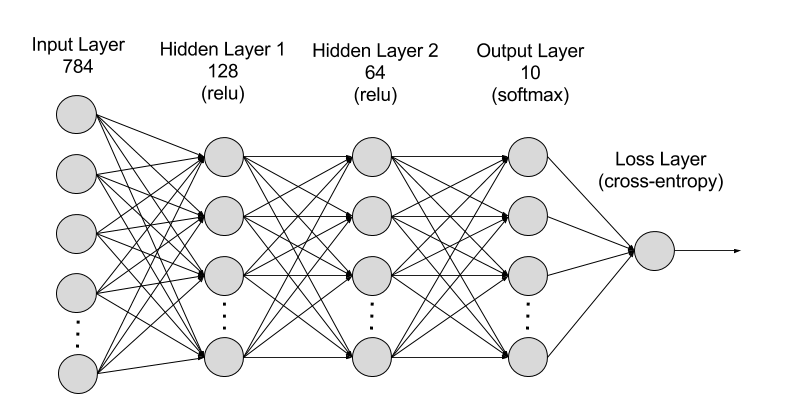

If you prefer traditional Python scripts rather than notebooks, check out tensorflow-models/deep_mlp.py. This script contains all the same code as the notebook. The process of training and validating the model is exactly the same as in traditional machine learning workflows. This example trains a fully connected neural net with two hidden layers to recognize handwritten digits from the MNIST dataset, but you can apply the concepts to an application of your choice.
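As a rough illustration of what a fully connected network with two hidden layers computes at inference time, the sketch below runs a forward pass in plain Python. It is not the code from the notebook: the `dense`, `relu`, and `forward` helpers are hypothetical, the ReLU activation is an assumption, and the tiny 2-2-2-2 layer sizes and weights are made up so the arithmetic can be checked by hand.

```python
# Forward pass of a small fully connected network (illustrative sketch only).
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # one row of weights per output unit


def dense(x: Vector, weights: Matrix, bias: Vector) -> Vector:
    """Compute weights @ x + bias for one fully connected layer."""
    return [
        sum(w_i * x_i for w_i, x_i in zip(row, x)) + b
        for row, b in zip(weights, bias)
    ]


def relu(x: Vector) -> Vector:
    """Assumed activation: clamp negative values to zero."""
    return [max(0.0, v) for v in x]


def forward(x: Vector, layers: List[Tuple[Matrix, Vector]]) -> Vector:
    """Apply each layer in turn, with ReLU after every layer but the last."""
    for index, (weights, bias) in enumerate(layers):
        x = dense(x, weights, bias)
        if index < len(layers) - 1:
            x = relu(x)
    return x


# Two hidden layers plus an output layer, with made-up parameters.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),   # hidden layer 1
    ([[1.0, 0.0], [0.0, 1.0]], [0.0, -1.0]),   # hidden layer 2
    ([[2.0, 1.0], [-1.0, 1.0]], [0.5, 0.0]),   # output layer
]

logits = forward([2.0, 1.0], layers)
print(logits)  # [3.0, -0.5]
```

For MNIST the input would be the flattened pixels of a digit image and the output layer would have ten units, one per digit; training is what finds useful values for the weights and biases.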

In preparation for code generation, you must freeze the TensorFlow model. Freezing a model stores learned graph parameters in a protobuf file.

To train the TensorFlow model:

- Launch IPython:

$ jupyter notebook

- Open tensorflow-models/deep_mlp.ipynb.

- Select Kernel/Restart & Run All. This builds a neural network with two hidden layers, then trains, quantizes, and saves the model in tensorflow-models/mnist_model/deep_mlp.pb.

- Go back to the project root directory.
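The notebook quantizes the model before saving it. This tutorial does not describe the scheme it uses, so the sketch below shows only the general idea with a generic linear 8-bit quantizer: floats are mapped onto integer codes, which take less memory at the price of a small rounding error. The `quantize` and `dequantize` helpers and the sample weights are hypothetical.

```python
# Generic linear quantization sketch; not necessarily the notebook's scheme.
from typing import List, Tuple


def quantize(values: List[float], num_bits: int = 8) -> Tuple[List[int], float, float]:
    """Map floats onto integer codes in the range [0, 2**num_bits - 1]."""
    lowest, highest = min(values), max(values)
    levels = (1 << num_bits) - 1
    # Guard against a constant tensor, where the value range would be zero.
    scale = (highest - lowest) / levels if highest > lowest else 1.0
    codes = [round((v - lowest) / scale) for v in values]
    return codes, lowest, scale


def dequantize(codes: List[int], lowest: float, scale: float) -> List[float]:
    """Recover approximate floats from the integer codes."""
    return [lowest + code * scale for code in codes]


weights = [-1.0, -0.25, 0.0, 0.5, 1.0]
codes, lowest, scale = quantize(weights)
restored = dequantize(codes, lowest, scale)

# The round trip is lossy, but the error stays within half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(codes, max_error)
```

Storing one byte per weight instead of a 32-bit float is the kind of saving that matters on a microcontroller with limited flash and RAM.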

Next, use utensor-cli to generate embedded code from the frozen model. You can get the output node names from the IPython notebook. The command below creates a models directory, which contains the embedded code interface for making inferences in your applications, along with the C++ source code associated with the learned weights and architecture of the neural net. Please refer to the uTensor-cli documentation for installation and usage instructions.

# from project root, run:

$ utensor-cli convert tensorflow-models/mnist_model/deep_mlp.pb --output-nodes=y_pred
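If you would rather drive the conversion from a Python build script than type the command by hand, something like the following sketch could work. Only the command line itself comes from this tutorial; the `build_convert_command` and `run_convert` helpers are hypothetical names, and the sketch assumes `utensor-cli` is on your PATH and that you run it from the project root.

```python
# Hypothetical wrapper around the utensor-cli command shown above.
import shutil
import subprocess
from typing import List


def build_convert_command(model_path: str, output_node: str) -> List[str]:
    """Assemble the argument list for the convert command from this tutorial."""
    return ["utensor-cli", "convert", model_path, "--output-nodes=" + output_node]


def run_convert(model_path: str, output_node: str) -> None:
    """Run the conversion, failing early if the tool is not installed."""
    if shutil.which("utensor-cli") is None:
        raise RuntimeError(
            "utensor-cli not found on PATH; install it with: pip install utensor-cgen"
        )
    # check=True raises CalledProcessError if the tool exits with an error.
    subprocess.run(build_convert_command(model_path, output_node), check=True)


command = build_convert_command(
    "tensorflow-models/mnist_model/deep_mlp.pb", "y_pred"
)
print(" ".join(command))
```

Call `run_convert(...)` with the same arguments to actually generate the code. Passing the arguments as a list avoids shell quoting problems if the model path contains spaces.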

This example builds a handwriting recognition application using Mbed and the generated model, but you can apply these concepts to your own projects and platforms. This example uses the ST-Discovery-F413H because it has a touch screen and SD card built in, but you could just as easily build the application using plug-in components.

- Run mbed deploy. This fetches the necessary libraries, such as uTensor.

- Build the mbed project:

$ mbed compile -m DISCO_F413ZH -t GCC_ARM

- Finally, flash your device by dragging and dropping the binary from BUILD/DISCO_F413ZH/GCC_ARM/utensor-mnist-demo.bin to your device.

After drawing a number on the screen, press the blue button to run inference. uTensor should output its prediction in the middle of the screen. Then press the reset button.
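This tutorial does not describe how the generated model exposes its output. Assuming a classifier that yields one score per digit, the displayed prediction would be the index of the largest score. The sketch below shows that final step in Python; `predict_digit` and the sample scores are hypothetical.

```python
# Picking the predicted digit from per-class scores (illustrative sketch).
from typing import Sequence


def predict_digit(scores: Sequence[float]) -> int:
    """Return the digit (0-9) whose score is highest; ties go to the lower digit."""
    if len(scores) != 10:
        raise ValueError("expected one score per digit, got %d" % len(scores))
    return max(range(10), key=lambda digit: scores[digit])


# Made-up scores in which digit 7 has the highest value.
scores = [0.01, 0.02, 0.05, 0.03, 0.01, 0.02, 0.04, 0.75, 0.04, 0.03]
prediction = predict_digit(scores)
print(prediction)  # 7
```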

Note: The model used in training is very simple and has suboptimal accuracy in practice.