SummerOfCodeIdeas

Google Summer of Code 2024 - Program Page

This is a list of ideas compiled by mlpack developers. They range from simpler code maintenance tasks to difficult machine learning algorithm implementations, so there are suitable ideas for a wide range of student abilities and interests. The "necessary knowledge" sections can often be replaced with "willing to learn" for the easier projects, and for some of the more difficult problems, a full understanding of the description statement and some coding knowledge is sufficient.

In addition, these are not all of the possible projects which could be undertaken; new, interesting ideas are also suitable.

A list of projects from 2013, 2014, 2015, 2016, 2017, 2018, 2019, 2020, 2021, 2022 and 2023 that aren't really applicable to this year can be found here.

For more information on any of these projects, visit #mlpack:matrix.org on Matrix, mlpack/mlpack on Gitter, mlpack.slack.com on Slack or email mlpack@lists.mlpack.org (see also http://knife.lugatgt.org/cgi-bin/mailman/listinfo/mlpack). All channels are synchronized so that you can select the one you are most familiar with.

It is probably worth consulting the mailing list archives first; many of these projects have been discussed extensively in previous years. Also, it is probably not a great idea to contact mentors directly (this is why their emails are not given here); a public discussion on the mailing list is preferred if you need help or more information. Please also do not expect the mentors to respond to individual emails for this very reason.

If you are looking to get started with mlpack, see the GSoC page, the community page, and the tutorials, and try to compile and run simple mlpack programs. Then, you could look at the list of issues on GitHub; maybe you can find an easy and interesting bug to solve.

For an application template, see the Application Guide.

- Example Zoo

- Enhance CMA-ES

- Reinforcement learning

- Algorithm optimization

- Multi-objective Optimization

- Adding MobileNet SSD in mlpack models repository

- Adding Yolo v3-Tiny in mlpack models repository

- XGBoost implementation in mlpack

- Procedural Generated Reinforcement Learning Environments

- ONNX to mlpack converter

Example Zoo

Over the past several years, various machine learning algorithms have been implemented as part of mlpack. This project will focus on applying some of these algorithms to interesting datasets to see how they fare. This will allow us to show (off!) the potential uses of mlpack across various real-world domains. But like string theory, there are extra dimensions to this project:

- 1 - When I am new to a library, I learn a great deal more about its API by seeing and running example code than by reading documentation.

- 2 - A project such as this would help establish robustness. We will be able to provide sample learning curves and code snippets through blog posts.

- 3 - Cool graphs will keep the summer enjoyable. Pictures, sounds, Shakespearean English, playing games with the RL framework: everything is welcome here.

The project should tie directly into the examples infrastructure we already use, which is a mixture of Jupyter notebooks (C++, Python, Go, R, Julia) and separate executables (C++).

deliverables: new examples in the examples repository, but the details are up to the proposer

difficulty: mlpack-complete, at least as "hard" as any other GSoC project. Just kidding... The difficulty will depend on the algorithms selected for implementation.

necessary knowledge: Familiarity with several of the machine learning algorithms implemented in mlpack and with analyzing results using plotting tools. Being able to hack around mlpack.

potential mentor(s): Marcus Edel

project size: medium (~175 hours) and large project (~350 hours)

Enhance CMA-ES

The Covariance Matrix Adaptation Evolution Strategy (CMA-ES) is an evolutionary strategy that adapts the covariance matrix of a normal search distribution. Compared to many other evolutionary algorithms, an important property of the CMA-ES is its invariance against linear transformations of the search space.

CMA-ES is one of the most robust algorithms for real-world problems. In recent years, several methods have been developed to improve the performance of CMA-ES, e.g.:

- saACM-ES: Self-adaptive surrogate-assisted covariance matrix adaptation evolution strategy

- IPOP-CMA-ES: A Restart CMA Evolution Strategy with increasing population size

- Active-CMA-ES: Improving Evolution Strategies through Active Covariance Matrix Adaptation

This project entails the investigation of methods to improve the original CMA-ES method and the implementation of these methods in a flexible manner.
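As a small, concrete illustration of one of the variants above, the sketch below computes the population sizes an IPOP-CMA-ES run would use across restarts. It assumes the standard CMA-ES default population size lambda = 4 + floor(3 ln n) for an n-dimensional problem and the increase factor of 2 used in the IPOP paper. The function names are hypothetical; this is not ensmallen's CMAES API.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Default CMA-ES population size: lambda = 4 + floor(3 * ln(n)),
// where n is the dimension of the search space (n = 0 is treated as 1).
std::size_t DefaultPopulationSize(std::size_t n)
{
  const double dim = (n > 0) ? static_cast<double>(n) : 1.0;
  return 4 + static_cast<std::size_t>(std::floor(3.0 * std::log(dim)));
}

// Population sizes for the initial run followed by `restarts` IPOP restarts.
// Each restart multiplies the previous population size by `increaseFactor`.
std::vector<std::size_t> IpopPopulationSchedule(std::size_t n,
                                                std::size_t restarts,
                                                std::size_t increaseFactor = 2)
{
  std::vector<std::size_t> sizes;
  std::size_t lambda = DefaultPopulationSize(n);
  for (std::size_t r = 0; r <= restarts; ++r)
  {
    sizes.push_back(lambda);
    lambda *= increaseFactor;
  }
  return sizes;
}
```

For n = 10 the default population size is 10, so an initial run plus two restarts would use populations of 10, 20 and 40. In a real optimizer each restart is triggered by a stopping criterion such as stagnation, which is omitted here.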

difficulty: 8/10

deliverable: working CMA-ES based optimizer

necessary knowledge: basic data science concepts, good familiarity with C++ and template metaprogramming, familiarity with mlpack/ensmallen API

relevant ticket(s): #70

recommendations for preparing an application: This project can't really be designed on-the-fly, so a good proposal will have already gone through the existing optimization codebase and identified what parts of the API will need to change (if any) and what the API for the optimization methods should look like.

potential mentor(s): Marcus Edel

project size: medium (~175 hours) and large project (~350 hours)

Reinforcement learning

Back in 2015, DeepMind published their landmark Nature article on training deep neural networks to learn to play Atari games using raw pixels and a score function as inputs. Since then there has been a surge of interest in the capabilities of reinforcement learning using deep neural network policies, and, honestly, who hasn't wanted to play around with RL on their own domain-specific automation tasks? This project revitalizes this desire and combines it with recent developments in reinforcement learning to train neural networks to play some of the unforgettable games. In more detail, this project involves implementing different reinforcement learning methods over the course of the summer. A good project will select one or two algorithms and implement them (with tests and documentation). Note that while, in principle, the implementation of an agent that can play one of the Atari games is quite simple, this project concentrates more on recent ideas, e.g.:

- Accelerated Methods for Deep Reinforcement Learning: investigates how to optimize existing deep RL algorithms for modern computers, specifically for a combination of CPUs and GPUs.

- Actor Critic using Kronecker-Factored Trust Region: ACKTR uses an approximation to compute the natural gradient update and applies the natural gradient update to both the actor and the critic.

- Rainbow: Combining Improvements in Deep Reinforcement Learning: this paper examines six extensions to the standard DQN algorithm (Double Q-learning, Prioritized replay, Dueling networks, Multi-step learning, Distributional RL, and Noisy Nets).

- Proximal Policy Optimization Algorithms: the PPO method was released by OpenAI in 2017, and it made a splash with its state-of-the-art performance while being much easier to implement.

- Persistent Advantage Learning DQN: Google DeepMind presented a novel RL exploration bonus based on an adaptation of count-based exploration for high-dimensional spaces. One of the main benefits is that the agent is able to recognize salient events and efficiently adjust its behavior to them.

The algorithms must be implemented according to mlpack's neural network interface and the existing reinforcement learning structure so that they work interchangeably. In addition, this project could contain a research component: benchmarking the runtimes of different algorithms against other existing implementations.
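To make one of the listed ideas concrete, here is a minimal standalone sketch of PPO's clipped surrogate objective for a single sample, L = min(r * A, clip(r, 1 - epsilon, 1 + epsilon) * A), where r is the probability ratio between the new and old policies and A is the advantage estimate. It does not use mlpack's reinforcement learning classes, and the function name is hypothetical; a real implementation would average this over a batch and negate it to obtain a loss to minimize.

```cpp
#include <algorithm>
#include <cassert>

// Clipped surrogate objective from PPO for one (state, action) sample.
//   ratio     = pi_new(a | s) / pi_old(a | s)
//   advantage = estimated advantage A(s, a)
//   epsilon   = clipping range (0.2 is a common choice)
// The objective is maximized; the training loss is its negation.
double PpoClippedObjective(double ratio, double advantage, double epsilon)
{
  // Clamp the ratio to [1 - epsilon, 1 + epsilon].
  const double clippedRatio =
      std::min(std::max(ratio, 1.0 - epsilon), 1.0 + epsilon);
  // Taking the minimum makes the objective a pessimistic bound, so the
  // policy gains nothing from moving the ratio far outside the clip range.
  return std::min(ratio * advantage, clippedRatio * advantage);
}
```

With a positive advantage, the clip caps how much the objective can grow as the ratio increases; with a negative advantage, the minimum keeps the more pessimistic (clipped) value when the ratio drops below 1 - epsilon.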

A Beginner's Guide to Deep Reinforcement Learning

Note: We have already written code to communicate with the OpenAI Gym toolkit. You can find the code here: https://github.com/zoq/gym_tcp_api

difficulty: 7/10

deliverable: Implemented algorithms and proof (via tests) that the algorithms work with mlpack's codebase.

necessary knowledge: a working knowledge of neural networks and reinforcement learning, willingness to dive into some literature on the topic, basic C++

recommendations for preparing an application: To be able to work on this, you should be familiar with the source code of mlpack, especially src/mlpack/methods/reinforcement_learning/. We suggest that everyone who would like to apply for this idea compile and explore the source code, including the tests in src/mlpack/tests/. If you have more time, try to review the documents linked below, and in your application provide comments/questions/ideas/tradeoffs/considerations based on your brainstorming.

relevant tickets: none are open at this time

references: Reinforcement learning reading list, Deep learning bibliography, Playing Atari with deep reinforcement learning

potential mentor(s): Manish Kumar, Marcus Edel

project size: medium (~175 hours) and large project (~350 hours)

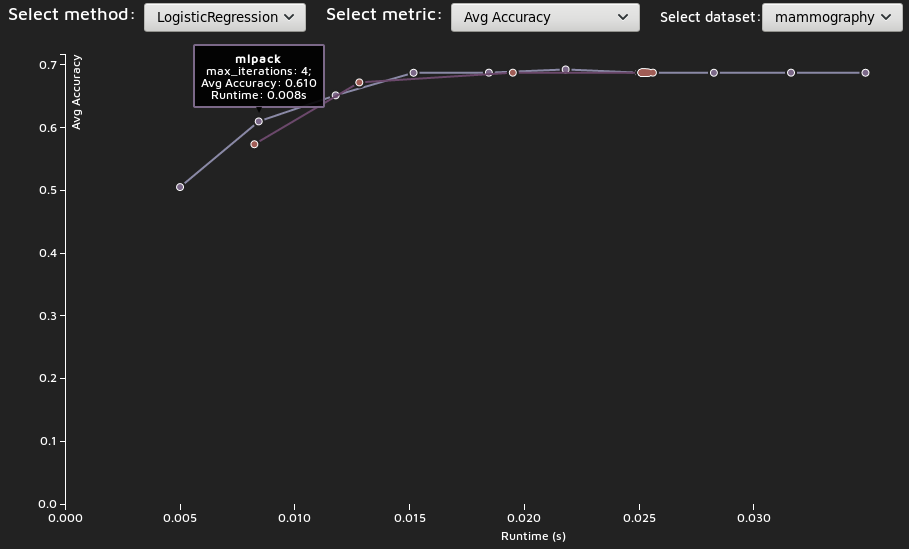

mlpack is renowned for having high-quality, fast implementations of machine learning algorithms. To this end, since 2013, we have maintained a benchmarking system that is able to help us show mlpack's efficient implementations. However, it is always possible to make these implementations a bit faster. Below is some example output from a recent run of the benchmarking system on some logistic regression problems.

Your goal in this project would be to choose a machine learning algorithm that you are interested in and knowledgeable about, then set up a series of datasets and configurations that allows you to provide a nice benchmarking view like the one above, and iteratively improve the mlpack implementation, hopefully until it is the fastest. This could involve high-level optimizations like simply avoiding unnecessary calculations or changing default parameters when needed, or lower-level optimizations like checking that SIMD autovectorization is happening and avoiding small memory allocations and copies, and even algorithm-level optimizations like clever approximations. Almost certainly any successful project here will involve a lot of time with a profiler.

The finished product should be mergeable code but, more importantly, the benchmarking output, which allows users to quickly see and understand that mlpack's implementation is good.
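The benchmarking system itself is not shown here, but when iterating on an optimization it helps to have a quick, repeatable timing check before and after each change. The sketch below is a hypothetical standalone helper (not part of mlpack or its benchmarking system) that runs a callable several times and reports the median wall-clock time; it complements a profiler rather than replacing it, since it shows whether something got faster but not where the time goes.

```cpp
#include <algorithm>
#include <cassert>
#include <chrono>
#include <cstddef>
#include <vector>

// Runs `f` `repetitions` times and returns the median wall-clock time in
// seconds (the upper median for an even number of runs). The median is less
// sensitive than the mean to one-off outliers such as a cold cache.
template<typename Func>
double MedianRuntimeSeconds(Func&& f, std::size_t repetitions)
{
  if (repetitions == 0)
    return 0.0;

  std::vector<double> timings;
  timings.reserve(repetitions);
  for (std::size_t i = 0; i < repetitions; ++i)
  {
    const auto start = std::chrono::steady_clock::now();
    f();
    const auto stop = std::chrono::steady_clock::now();
    timings.push_back(std::chrono::duration<double>(stop - start).count());
  }
  std::sort(timings.begin(), timings.end());
  return timings[timings.size() / 2];
}
```

A typical use would wrap one training call, e.g. MedianRuntimeSeconds([&]() { /* train the model */ }, 10), and compare the result before and after a change on the same dataset and configuration.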

difficulty: 3/10-10/10, depending on the algorithm chosen and the level of interest

deliverable: improved implementation and benchmarking configuration providing a relevant and clear comparison of mlpack to other implementations

necessary knowledge: good knowledge of C++ and Armadillo, knowledge of how to use a profiler, in-depth knowledge of the algorithm to be optimized, strong familiarity with the existing implementation of the algorithm to be optimized

recommendations for preparing an application: You should definitely be familiar with the source code of the algorithm you are interested in working with, and it would not be a bad idea to do some preliminary benchmarking and possibly submit a simple pull request with an improvement (if there is low-hanging fruit that could be easily optimized). When we evaluate these applications it will be important to see a clear plan for progress, so that we can avoid the project "getting stuck".

potential mentor(s): Ryan Curtin, Shikhar Jaiswal, Sangyeon Kim, Saksham Bansal

project size: medium (~175 hours) and large project (~350 hours)

Multi-objective Optimization

ensmallen, a header-only library for numerical optimization, recently added support for multi-objective optimization with the addition of the NSGA2 optimizer. This project would involve adding more multi-objective optimization algorithms to ensmallen. Optimizers to be implemented could include Directed Search Domain, Successive Pareto Optimization, PGEN, etc.
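NSGA2 ranks candidate solutions by Pareto dominance. As a hedged, standalone sketch (not ensmallen's internal code; the function names are hypothetical), the following computes the first non-dominated front of a set of objective vectors, assuming every objective is minimized. It uses a simple pairwise check rather than NSGA2's full fast non-dominated sort, which also assigns the later fronts.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Returns true if objective vector `a` Pareto-dominates `b` under
// minimization: `a` is no worse than `b` in every objective and strictly
// better in at least one. Both vectors must have the same length.
bool Dominates(const std::vector<double>& a, const std::vector<double>& b)
{
  bool strictlyBetter = false;
  for (std::size_t i = 0; i < a.size(); ++i)
  {
    if (a[i] > b[i])
      return false;
    if (a[i] < b[i])
      strictlyBetter = true;
  }
  return strictlyBetter;
}

// Indices of the points that no other point dominates (the first Pareto
// front), found by comparing every pair of points.
std::vector<std::size_t> FirstParetoFront(
    const std::vector<std::vector<double>>& points)
{
  std::vector<std::size_t> front;
  for (std::size_t i = 0; i < points.size(); ++i)
  {
    bool dominated = false;
    for (std::size_t j = 0; j < points.size() && !dominated; ++j)
      dominated = (j != i) && Dominates(points[j], points[i]);
    if (!dominated)
      front.push_back(i);
  }
  return front;
}
```

For the points (1, 2), (2, 1), (2, 2) and (3, 3), only the first two are non-dominated: (2, 2) is dominated by (1, 2), and (3, 3) by every other point.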

deliverable: Working multi-objective optimizer(s).

difficulty: 2/10-6/10, depending on the algorithm chosen and the level of interest.

necessary knowledge: Familiarity with C++ and template metaprogramming, machine learning methods, multi-objective optimization and ensmallen APIs is helpful.

relevant tickets: mlpack/ensmallen#149, mlpack/ensmallen#176

potential mentor(s): Sayan Goswami

project size: medium (~175 hours) and large project (~350 hours)

ONNX to mlpack converter

mlpack has its own model format based on the cereal library; mlpack models can be serialized to XML, JSON, or a simple binary format. However, this format is completely different from those of other well-known libraries such as TensorFlow or PyTorch, since each library has its own.

Although object detection models and LLMs can be trained locally, they are often downloaded pre-trained and then fine-tuned on a specific dataset. These common models exist in TensorFlow or PyTorch, and they can easily be converted to a common model format: ONNX.

The idea of this project is to build a generic ONNX converter that takes ONNX models and transforms them into the mlpack format. In the past, we made several attempts to build such a translator. However, since mlpack underwent a major refactoring a couple of years ago, these can no longer be used. They are also incomplete: they do not support all mlpack layers, and they are mostly tailored to specific models.

Therefore, this summer we are taking a different approach. The idea is to build the converter as follows:

- Make the converter as generic as possible.

- Make the converter support all existing mlpack layers and kernels

- Adapt some existing mlpack layers if necessary

Here are some ideas that you can start with for your proposal:

- Be able to load several object detection models and verbosely output their layers, kernels, and weights.

- Analyse all the internal layers and their order, and output an error if there is a layer that does not exist in mlpack.

- Create a MappingContainer that maps ONNX layers to mlpack layers.

- Automatically create an equivalent mlpack model using the MappingContainer, setting the size and the weights of each layer.

- Produce an mlpack model and save it as a binary.
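The MappingContainer idea from the list above could start as a simple lookup table. The sketch below is hypothetical: the keys (Conv, MaxPool, Gemm, ...) are standard ONNX operator types, but the values are just mlpack layer-name strings chosen for illustration, and both helper names are invented. It shows the "output an error if a layer does not exist in mlpack" step by collecting every operator in a graph that has no mapping.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

// Hypothetical, illustrative mapping from ONNX operator types to mlpack
// layer names. A real MappingContainer would also need to convert operator
// attributes (strides, padding, ...) and weight layouts.
const std::map<std::string, std::string>& OnnxToMlpackLayerMap()
{
  static const std::map<std::string, std::string> mapping = {
    {"Conv", "Convolution"},
    {"BatchNormalization", "BatchNorm"},
    {"MaxPool", "MaxPooling"},
    {"AveragePool", "MeanPooling"},
    {"Gemm", "Linear"},
    {"LeakyRelu", "LeakyReLU"},
    {"Softmax", "Softmax"},
  };
  return mapping;
}

// Returns the operator types in `graphOps` that have no mlpack equivalent,
// in the order they occur (duplicates included), so the converter can
// report them before trying to build a model.
std::vector<std::string> UnsupportedOps(const std::vector<std::string>& graphOps)
{
  std::vector<std::string> missing;
  const auto& mapping = OnnxToMlpackLayerMap();
  for (const std::string& op : graphOps)
    if (mapping.find(op) == mapping.end())
      missing.push_back(op);
  return missing;
}
```

In a real converter, the operator list would come from parsing the ONNX protobuf graph, and an empty result from this check would be the precondition for building the mlpack model.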

Note that, for the project to be judged successful, the converter should be able to translate MobileNet SSD and Tiny Yolo v3 from ONNX format to mlpack binary format; in addition, a set of integration tests would be nice.

deliverable: ONNX converter and two converted models.

difficulty: Medium to Hard, the difficulty depends largely on your programming skills and ANN knowledge.

necessary knowledge: Familiarity with general NN algorithms. Being able to hack around the mlpack ANN codebase.

relevant tickets: There is not one. However, we had a basic converter that can be found here

potential mentor(s): Omar Shrit, Kartik Dutt, Marcus Edel, TBD.

Recommendations for preparing an application: The best way to have a successful application is to start contributing as soon as possible and to be able to identify the missing layers.

project size: large project (~350 hours)

Adding MobileNet SSD in mlpack models repository

The models repository aims to showcase the power of mlpack with pre-trained, ready-to-use models written in mlpack. To that end, the goals of the project are listed below:

Currently, mlpack has a MobileNet class that can be used for image classification. However, the mlpack models repository does not yet contain any object detection model, that is, a model that detects objects within an image rather than classifying the whole image. SSD, the Single Shot Detector, is a very nice method that can be combined with MobileNet to create MobileNet SSD, extending its capabilities from image classification to object detection.
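SSD predicts boxes relative to a set of default boxes whose scales grow linearly across the feature maps used for detection. The sketch below computes those scales with the rule from the SSD paper, s_k = s_min + (s_max - s_min) * (k - 1) / (m - 1) for k = 1..m, using the paper's defaults s_min = 0.2 and s_max = 0.9. It is a standalone illustration with a hypothetical function name; MobileNet SSD implementations sometimes adjust these values, so the exact configuration should be checked against the model being ported.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Default-box scales (as fractions of the input image size) for m feature
// maps, spaced linearly between sMin and sMax as in the SSD paper.
std::vector<double> SsdDefaultBoxScales(std::size_t m,
                                        double sMin = 0.2,
                                        double sMax = 0.9)
{
  std::vector<double> scales;
  for (std::size_t k = 1; k <= m; ++k)
  {
    // With a single feature map, use sMin to avoid dividing by zero.
    const double t = (m > 1) ? double(k - 1) / double(m - 1) : 0.0;
    scales.push_back(sMin + (sMax - sMin) * t);
  }
  return scales;
}
```

With six feature maps this gives scales of approximately 0.2, 0.34, 0.48, 0.62, 0.76 and 0.9; each scale is then combined with several aspect ratios to produce the default boxes for that feature map.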

One of the interesting things about MobileNet is its ability to run on low-resource devices such as mobile phones while also leveraging hardware acceleration. Currently, we are working on integrating mlpack with the bandicoot library, which will allow us to run mlpack models on CUDA- or OpenCL-capable devices. It would be nice to be able to run MobileNet on an NVIDIA Jetson Nano or something similar by the end of the summer.

In this project, you will add MobileNet SSD to the models repository and make it usable on the COCO dataset. During this project, you might need to do the following:

- Read the SSD and MobileNet research articles to identify any missing kernels or layers that should be added to the mlpack library.

- Look at the existing implementations of object detection inference and training in TensorFlow and PyTorch.

- Explore with your mentors the best approach to implement the model.

- Add integration tests.

- Write documentation on how to use the model and integrate it into our new mlpack docs.

deliverable: MobileNet SSD model (not including label loading from JSON or COCO format).

difficulty: Easy to Medium, since MobileNet is mostly implemented, although it requires some refreshing because some layers need to be merged.

necessary knowledge: Familiarity with general NN algorithms and analyzing results with plotting tools. Being able to hack around mlpack ANN codebase.

relevant tickets: 3576

potential mentor(s): Omar Shrit, Kartik Dutt

Recommendations for preparing an application: The best way to have a successful application is to start contributing as soon as possible, identify the missing layers, and propose a strategy for adding SSD. Feel free to open relevant issues in the models repository.

project size: large project (~350 hours)

Adding Yolo v3-Tiny in mlpack models repository

The models repository aims to showcase the power of mlpack with pre-trained, ready-to-use models written in mlpack. To that end, the goals of the project are listed below:

Currently, mlpack has a MobileNet class that can be used for image classification. However, the mlpack models repository does not yet contain any object detection model, that is, a model that detects objects within an image rather than classifying the whole image. Yolo v3-Tiny is an object detection method designed for embedded systems and low-resource hardware that can also leverage hardware acceleration. Currently, we are working on integrating mlpack with the bandicoot library, which will allow us to run mlpack models on CUDA- or OpenCL-capable devices. It would be nice to be able to run Tiny Yolo v3 on an NVIDIA Jetson Nano or something similar by the end of the summer.
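Part of the post-processing a Tiny Yolo v3 model needs is decoding raw network outputs into boxes. The sketch below follows the YOLOv3 paper's parameterization, b_x = sigmoid(t_x) + c_x, b_y = sigmoid(t_y) + c_y, b_w = p_w * exp(t_w), b_h = p_h * exp(t_h), where (c_x, c_y) is the grid cell and (p_w, p_h) is the anchor size. The names are hypothetical; converting the center to pixels (multiplying by the stride), objectness and class scores, and non-maximum suppression are left out.

```cpp
#include <cassert>
#include <cmath>

// Box center (x, y), width and height.
struct Box { double x, y, w, h; };

double Sigmoid(double v) { return 1.0 / (1.0 + std::exp(-v)); }

// Decodes one raw prediction (tx, ty, tw, th) for the grid cell at column cx,
// row cy, using an anchor (prior) of size (pw, ph). The center is returned in
// grid-cell units; width and height are in the anchor's units.
Box DecodeYoloBox(double tx, double ty, double tw, double th,
                  int cx, int cy, double pw, double ph)
{
  // The sigmoid keeps the predicted center inside its own grid cell.
  return Box{Sigmoid(tx) + cx, Sigmoid(ty) + cy,
             pw * std::exp(tw), ph * std::exp(th)};
}
```

A prediction of all zeros decodes to a box centered in the middle of its grid cell with exactly the anchor's size, which is a convenient sanity check when comparing against a reference implementation.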

In this project, you will add Tiny Yolo v3 to the models repository and make it usable on the COCO dataset. During this project, you might need to do the following:

- Read the scientific article to identify any missing kernels or layers that should be added to the mlpack library

- Look at the existing implementations of object detection inference and training in TensorFlow and PyTorch.

- Explore with your mentors the best approach to implement the model.

- Add integration tests.

- Write documentation on how to use the model and integrate it into our new mlpack docs.

deliverable: Tiny Yolo v3 model (not including label loading from JSON or COCO format).

difficulty: Easy to Medium, since Yolo is mostly implemented, although it requires some refreshing because some layers need to be merged.

necessary knowledge: Familiarity with general NN algorithms and analyzing results with plotting tools. Being able to hack around mlpack ANN codebase.

relevant tickets: none

potential mentor(s): Omar Shrit, Kartik Dutt

Recommendations for preparing an application: The best way to have a successful application is to start contributing as soon as possible, identify the missing layers, and propose a strategy for adding Tiny Yolo v3. Feel free to open relevant issues in the models repository.

project size: large project (~350 hours)

XGBoost implementation in mlpack

Tree ensembles have regularly been shown to be among the best-performing machine learning models out there; they frequently win Kaggle competitions. Last year, the groundwork was laid for implementing the XGBoost algorithm inside mlpack (#3011, #3014, #3025, #3022 and others), but a ready-to-use implementation is not yet available.

The goal of this project is to pick up where we left off last year, and finish the XGBoost implementation inside of mlpack. Given that the basic algorithm is mostly ready, there will be a lot of time during the summer to tune, profile, and improve the implementation such that it is competitive with---or better than!---the reference implementation.
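For reference when working on the core training loop, the sketch below encodes the leaf-weight and split-gain formulas from the XGBoost paper. Given the summed gradients G and Hessians H of the samples in a leaf and an L2 penalty lambda, the optimal leaf weight is w* = -G / (H + lambda), and splitting a node into left and right children gains 1/2 [G_L^2 / (H_L + lambda) + G_R^2 / (H_R + lambda) - (G_L + G_R)^2 / (H_L + H_R + lambda)] - gamma. These are hypothetical standalone helpers, not functions from mlpack's existing XGBoost work. For squared-error loss 1/2 (y - yhat)^2, each sample contributes g = yhat - y and h = 1.

```cpp
#include <cassert>

// Optimal weight of a leaf with summed gradient G and summed Hessian H,
// under L2 regularization lambda: w* = -G / (H + lambda).
double LeafWeight(double G, double H, double lambda)
{
  return -G / (H + lambda);
}

// Structure-score term of one leaf: G^2 / (H + lambda).
double LeafScore(double G, double H, double lambda)
{
  return G * G / (H + lambda);
}

// Gain from splitting a node into a left child (GL, HL) and a right child
// (GR, HR); gamma is the penalty for adding a leaf.
double SplitGain(double GL, double HL, double GR, double HR,
                 double lambda, double gamma)
{
  return 0.5 * (LeafScore(GL, HL, lambda) + LeafScore(GR, HR, lambda)
                - LeafScore(GL + GR, HL + HR, lambda)) - gamma;
}
```

A split is only worth making when its gain is positive. For example, with G_L = G_R = 1, H_L = H_R = 1, lambda = 1 and gamma = 0 the gain is negative: both children push in the same direction as the parent, so splitting only adds regularization cost.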

The specific deliverables and goals of the project are up to the student, but here are a few ideas of things that could be included:

- A user-facing binding for XGBoost, like the random_forest and decision_tree bindings in src/mlpack/methods/random_forest_main.cpp and src/mlpack/methods/decision_tree_main.cpp, respectively.

- Comparisons with the reference implementation of XGBoost to ensure correctness of our implementation.

- Benchmarks against the reference implementation of XGBoost to identify places where our implementation is competitive with or better than the reference implementation.

- Deep investigation and profiling of core inner loops in decision tree training, along with associated optimizations and improvements to the code. (That may apply not just to the XGBoost implementation but also to our decision tree and random forest implementation.)

- Parallelization of the XGBoost, decision tree, and random forest implementations with OpenMP.

deliverable: completed XGBoost algorithm implementation, or other improvements to mlpack's tree ensemble implementations

difficulty: between 4/10 and 6/10

necessary knowledge: a deep understanding of decision trees, random forests, or gradient boosting algorithms. Familiarity with algorithms like XGBoost will definitely be needed.

potential mentor(s): Germán Lancioni, Ryan Curtin, TBD

project size: medium (~175 hours) and large project (~350 hours)

Procedural Generated Reinforcement Learning Environments

This project aims to demonstrate real-life applications of our current reinforcement learning algorithms. Often an agent focuses solely on memorizing the terrain of an environment rather than learning how to play in it, i.e., it overfits. This is common when the environment is limited and static. It would be nice to test our agents against an environment that is generated on the fly; this more closely mimics the real world, which tends to be non-static.
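The key property of a procedurally generated environment is that a seed fully determines a level: replaying a seed reproduces the level for debugging, while a fresh seed per episode prevents memorization. The sketch below is a hypothetical, minimal illustration (not tied to any game engine) that generates a 1-D terrain height profile as a bounded random walk. Note that std::mt19937 is fully specified by the C++ standard, but std::uniform_int_distribution is not, so the same seed can produce different levels with different standard library implementations; a hosted environment that must reproduce levels across platforms would need its own mapping from raw generator output.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <random>
#include <vector>

// Generates `length` terrain heights in [0, maxHeight] as a random walk that
// starts at maxHeight / 2 and moves by -1, 0 or +1 at each step. The same
// seed always yields the same terrain (for a given standard library).
std::vector<int> GenerateTerrain(std::uint32_t seed, std::size_t length,
                                 int maxHeight = 10)
{
  std::mt19937 rng(seed);
  std::uniform_int_distribution<int> step(-1, 1);

  std::vector<int> heights;
  heights.reserve(length);
  int h = maxHeight / 2;
  for (std::size_t i = 0; i < length; ++i)
  {
    h += step(rng);
    // Clamp the walk so the terrain stays inside the playable area.
    if (h < 0) h = 0;
    if (h > maxHeight) h = maxHeight;
    heights.push_back(h);
  }
  return heights;
}
```

An environment's reset step could call this with a new seed for every training episode and a fixed set of held-out seeds for evaluation, which makes overfitting to specific layouts easy to detect.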

An ideal candidate would have basic-to-intermediate knowledge of any popular game engine, be willing to learn about procedurally generated environments and, of course, be interested in mlpack!

deliverable:

- At least one xeus-cling notebook demonstrating current algorithm usage on the created environment

- C++ API to communicate with the game, similar to OpenAI Gym.

- Hosted game on lab.mlpack servers.

difficulty: 6.5/10 (Only a high-level understanding of how RL algorithms function is required, along with simple functionality provided by the game engine.)

necessary knowledge:

- C++ (Intermediate level)

- Familiarity with any Game Engine (Basic to intermediate level of knowledge).

- Socket Programming

- Familiarity with Reinforcement Learning codebase.

- Familiarity with the internal workings of pseudo-random number generation

relevant tickets: None so far

potential mentor(s): Nanubala Gnana Sai, Shaikh Mohd. Fauz, Marcus Edel

project size: medium (~175 hours) and large project (~350 hours)