Engine Design

This page describes the larger layers, components and features of the project as a whole. It only covers the main project, and skips over 'related but different' side projects such as TUI, VRBRIDGE, and so on.

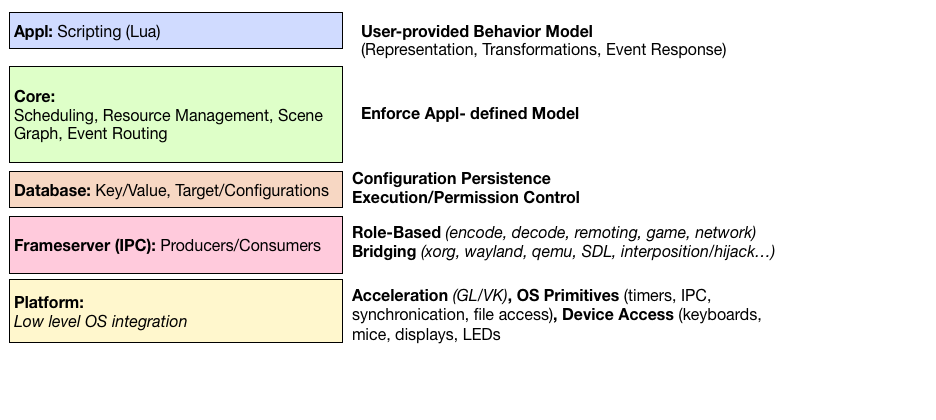

We start with the overview of the layers of the design shown above.

From top to bottom, we start with the Appl layer. This is the set of scripts and resources that the user provides, referred to as the running 'appl'. It controls what the engine will actually do, how inputs should be interpreted and translated, and what should be routed where and when. It also defines the current data model and how it is to be represented in terms of images, sounds, animations and so on.

Then we have the engine core, which looks very much like what would be used in a game engine. It is centered around a scene graph of different objects, their visual and aural transformations, and an event loop that continuously traverses this graph and transforms/maps it to output devices based on some synchronization profile. It also provides the support functions and setup for the other layers.

There is a small per-user database layer that provides for configuration and persistence. It is mostly used as a key/value store for each appl to track settings in, but is also used for tracking 'targets' and their (one or several) configurations. These are used to specify permitted execution targets and their environment for when the Appl layer wants to initiate the launch of an external client with a trusted chain of command (in contrast to a client-server model where the client first authenticates itself to a server, though that is also supported, if desired). Launch targets are also used to track custom parameters such as last known position or size.

The Frameserver layer uses an internal API referred to as 'SHMIF' for connecting external processes as both interactive and non-interactive producers and consumers. There are a number of frameserver archetypes that dictate how they interact with the different appl layer APIs and how they are expected to behave. The design and use of this API is covered in SHMIF.

The Platform layer specifies a combination of input, audio, video and accelerated graphics implementations, along with a small set of hand-picked 'generic system' functions needed to interact with file systems, as well as basic IPC primitives from which to build more advanced ones. It is statically configured and tuned once, at compile time.

The appl layer interface is the Lua scripting language and virtual machine. The programming model exposed by the API is event driven from a small set of entry points that match the states of the engine loop and special events (like device plug/unplug). The scripting perspective is covered in the scripting guide and in API Overview.

The Lua API is tied to Lua 5.1 in order to support both an interpreted Lua VM and the LuaJIT VM. The API model used is (almost) exclusively imperative/procedural, and if you look at the rather big and repetitive 'src/engine/arcan_lua.c' there is a rather quirky format for mapping between engine functions and scripting functions. The pattern is simply that of an opcode table and stack-based type validation and conversion when going from copy-in to exec to copy-out, with a hookable entry/exit macro in order to make specialized instrumentation builds for tracing test coverage, debugging and profiling.
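The copy-in, exec, copy-out pattern with hookable entry/exit can be sketched in plain Lua. This is only a model of the idea, not the actual bindings (those are C code in 'src/engine/arcan_lua.c'), and every name below (`bindings`, `hooks`, `call_binding`, `scale_pair`) is hypothetical.

```lua
-- Works on Lua 5.1/LuaJIT (global unpack) as well as newer versions.
local unpack = table.unpack or unpack

-- Hypothetical binding table: each entry lists the expected argument
-- types and the function that does the actual work.
local bindings = {
	scale_pair = {
		args = {"number", "number", "number"},
		exec = function(w, h, factor) return w * factor, h * factor end
	}
}

-- Hookable entry/exit points; an instrumented build would replace these.
local trace = {}
local hooks = {
	enter = function(name) trace[#trace + 1] = "enter:" .. name end,
	leave = function(name) trace[#trace + 1] = "leave:" .. name end
}

local function call_binding(name, ...)
	local entry = bindings[name]
	if not entry then
		error("unknown function: " .. tostring(name))
	end

	-- copy-in: validate each argument against the declared type
	local argv = {...}
	for i, expected in ipairs(entry.args) do
		if type(argv[i]) ~= expected then
			error(string.format("%s: argument %d should be %s, got %s",
				name, i, expected, type(argv[i])))
		end
	end

	hooks.enter(name)
	-- exec, then copy-out: collect the results before the exit hook runs
	local results = {entry.exec(unpack(argv, 1, #entry.args))}
	hooks.leave(name)
	return unpack(results)
end
```

With this model, `call_binding("scale_pair", 10, 5, 2)` returns 20 and 10, while passing a string as the second argument raises an error before the function body runs.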

For most cases, metatables and other 'exotic' language features are avoided. The reason for this is to be able to rework the stack operations so that the Lua bindings also work as a bytecode wrapper format, supporting an external driver (= other languages in separate processes) and context-bound rendering (= network transparency). In those settings, having an implicit context in the form of a table and having context-dependent symbol names makes things more difficult.

Four major points are still missing for the Lua layer:

- External REPL-like terminal interface to asynchronously query the Lua VM state.
- Coordinate concurrency with explicit copy-in-out between computation threads and main thread.
- Non-fatal+cleanup invocation of mapped functions; this is for the external-driver and network transparency parts.
- Debugger support/integration that survives context jump between engine, VM and VM-in-callback.

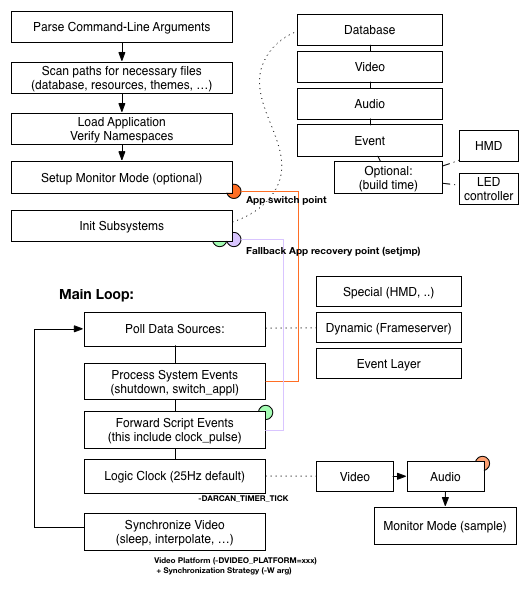

The following figure covers the engine layer main loop.

The following figure shows the basic flow of how events travel to the active scripts and onwards. What is not illustrated is the internal engine component event flow, but it is very limited.

Internally, the core is mainly enforcing the main event loop, polling the platform layer, maintaining the A/V updates and routing events to the consumer (scripting). The more complicated parts are (in order) frameserver coordination, graphics scene graph traversal and the audio pipeline. Scene graph traversal is split into two steps, necessary updates (monotonic ticks) and extraneous updates (interpolation), that can be called at different rates based on a synchronization strategy, allowing a controlled balance between latency, animation smoothness and energy consumption. Intermediate representations such as transformation matrices are resolved and cached every time an object is queried.
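The split between necessary and extraneous updates can be illustrated with a small fixed-tick loop in plain Lua. This is a sketch under stated assumptions rather than the engine's code: the object layout, the function names and the 40 ms tick length are all chosen for illustration.

```lua
-- Hypothetical object with a single animated property.
local obj = {prev_x = 0, x = 0, speed = 10}

-- Necessary update: advance the logical state by exactly one tick.
local function tick(o)
	o.prev_x = o.x
	o.x = o.x + o.speed
end

-- Extraneous update: blend between the last two ticks for presentation.
local function interpolate(o, fract)
	return o.prev_x + (o.x - o.prev_x) * fract
end

local TICK_MS = 40 -- assumed tick length, for illustration only
local accumulated = 0

-- Consume elapsed time in whole ticks and return the fraction left over,
-- which is what the interpolation step uses.
local function advance(o, elapsed_ms)
	accumulated = accumulated + elapsed_ms
	while accumulated >= TICK_MS do
		tick(o)
		accumulated = accumulated - TICK_MS
	end
	return accumulated / TICK_MS
end
```

With these numbers, `advance(obj, 100)` runs two ticks and returns 0.5, and `interpolate(obj, 0.5)` gives 15, halfway between the two most recent tick positions. A synchronization strategy then amounts to deciding how often the interpolation step is worth running between ticks.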

Most of the normal graphics (2D) processing and the frameserver coordination is considered stable and in a maintenance rather than development state. The same can't be said for the 3D support and for the audio. Audio needs to be split into a platform-like approach, and 3D, even though it has most of the groundwork from 2D graphics and most of the basic linear algebra math, is lacking in the more advanced culling, materials and physics departments.

The platform layer comprises an event provider, an accelerated graphics provider, output control and OS-specific glue. In a sense, there's a similar platform layer for the SHMIF API, but it is smaller and, often, the SHMIF build can cherry-pick functions from the normal platform layer.

It is kept as a static, build-time tunable for two reasons. One is to push towards being statically linked, signed and verified to the point that it can run without much else of a 'userspace' up and running, as an early component in a locked-down trusted boot environment.

The other is simple sanity: graphics, in particular, has such a rich history of completely broken drivers that virtually any choice shifts the set of platforms on which graphics will produce semi-reliable results. Tracking/dealing with this at any scale, when there are also one, two or several layers of dynamic driver selection and loading, takes more effort and resources than it is typically worth.

The accelerated graphics provider only really comes in one flavor and that is OpenGL 2.1. The reason for that particular choice is that it resides as the last cutoff point in terms of necessary features vs. chances of working drivers at scale. There is GLES2/3 "support", but it disables certain features, and the amount of outright broken implementations out there makes an idea of support a crap shoot - especially in inherently corrosive ecosystems like the embedded ARM space.

This limits what we can do in terms of certain optimizations, graphics features and graphics quality. To combat that, the future plans are to expand in two polarizing directions: software rendering and Vulkan. Software rendering is meant to push towards working with simpler, one day perhaps even open, graphics devices. Vulkan rendering is targeted in order to get access to some of the more recent GPU features that make sense in our rather narrow scope (primarily better feedback from on-GPU operations and better synchronization of transfers).

The event provider is used to populate event queues with data from input devices. The defining part here is to try and avoid as many OS specific tie-ins as possible, and to push abstract filtering, defer decisions and features to the appl layer, with higher-level filtering (acceleration, averaging, kalman, ...) as script-triggered opt-ins.

The database support fills a number of roles, some mature, some not. The major part is that it acts as a policy tracker of allowed execution targets and their configurations (variations in environments and arguments). This is to stop the appl layer from needlessly gaining hard-to-revoke commands like uncontrolled exec, but also to provide a coherent key-value store for user-bound configurations that can be reliably moved, split, replicated and backed up. This means that you can have separate/mobile databases for different permission contexts (e.g. users) and the per-application configuration will come with them, with no hunting for dot files.
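The two roles described above can be modelled in a few lines of plain Lua. This is a hedged sketch using an in-memory table in place of the real database; the table layout, the function names (`store`, `fetch`, `resolve_launch`) and the `--example-flag` argument are hypothetical and not part of the engine API.

```lua
-- Hypothetical in-memory stand-in for the database contents.
local db = {
	targets = {
		terminal = {
			default = {args = {}},
			large = {args = {"--example-flag"}} -- made-up argument
		}
	},
	keys = {}
}

-- Per-appl key/value storage.
local function store(appl, key, value)
	db.keys[appl] = db.keys[appl] or {}
	db.keys[appl][key] = value
end

local function fetch(appl, key)
	return db.keys[appl] and db.keys[appl][key]
end

-- Only target/configuration pairs present in the database may be launched;
-- the appl names a target and a configuration, never a raw command line.
local function resolve_launch(target, config)
	local cfgs = db.targets[target]
	if not cfgs or not cfgs[config] then
		return nil, "not permitted: " .. target .. "/" .. config
	end
	return cfgs[config].args
end
```

The point of the design is visible in `resolve_launch`: a request for anything outside the stored set, such as `resolve_launch("sh", "default")`, is refused rather than executed.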

The database is also, to a lesser extent, used to define platform configuration options, and moving from environment-variable based configuration to database key-values will be encouraged in the future.

The reason for going with sqlite rather than, say, normal configuration files or Lua scripts is partly its role as a modern, reliable "File" abstraction with most (all?) of the convoluted concurrency and reliability edge cases sorted, and partly its performance when it comes to high-frequency runtime fetch/updates. Sqlite also opens up the future possibility of easily splitting/merging tables with external clients as a way to get a sandboxed, controlled interface for configuration management, snapshot and sharing.

Frameservers are something of a rare design decision. The name was borrowed from the VirtualDub Windows software from the video/encoding era of the mid-to-late 90s, where a frameserver was an external provider of raw audio and video frames.

They were added to Arcan quite early in its lifecycle as a way to curb all the obvious and exploitable memory corruptions that ffmpeg libraries spawned as soon as you looked at them in a funny way. The approach worked well enough that it has expanded to be a centerpiece of the system as a whole.

The 'frameserver' concept in Arcan parlance refers to an external component that can work bidirectionally with audio, video and input. Frameservers are subdivided by the archetype that they embody, which is used to bin various tasks into distinct privilege models for fine-grained sandboxing. To get such sandboxing going, there's a chainloader that intercepts the connection primitives and the archetype that should be realized. The archetype that is likely to be most familiar to people is the external/non-authoritative one, which, in X11 and other display server technology, is simply considered 'a client'.

The least common denominator for all the different frameservers is that they are tied together through the API they are using, which is covered in greater detail here: SHMIF. A shmif connection is centered around a segment (video buffers, audio buffers, event queue and metadata) where there is one guaranteed 'primary' segment and negotiable subsegments. These are typed as a hint towards their intended use, like CURSOR or POPUP. These double as a feature discovery/negotiation mechanism because the running scripts can dynamically reject requests for additional subsegments on a per-client basis.
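How typed subsegment requests double as feature negotiation can be modelled in plain Lua. This sketch does not use the real SHMIF or appl API; the policy table, client names and function names are hypothetical, and only the CURSOR and POPUP type names come from the text above.

```lua
-- Hypothetical per-client policy: which subsegment types the scripts accept.
local policy = {
	trusted_client = {CURSOR = true, POPUP = true},
	untrusted_client = {CURSOR = true}
}

-- Script side: returns true if the request is accepted, false if rejected.
local function on_segment_request(client, segtype)
	local allowed = policy[client]
	return allowed ~= nil and allowed[segtype] == true
end

-- Client side: probe for features by requesting subsegments and
-- keeping track of which requests were granted.
local function negotiate(client, wanted)
	local granted = {}
	for _, segtype in ipairs(wanted) do
		if on_segment_request(client, segtype) then
			granted[#granted + 1] = segtype
		end
	end
	return granted
end
```

Here `negotiate("untrusted_client", {"CURSOR", "POPUP"})` yields only CURSOR, so that client learns from the rejection that it has to manage without popups.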

Three final transformations are still being worked on for shmif. One is a library version of the server-side routines used to manage a client. With that in place, the second part, a network proxy, can be built for much more efficient remote connection than going through a more naive protocol like VNC, and encompassing more data-specific compression than what X and friends would allow. Last comes the ability for shmif clients to chain and either share a connection with a subprocess, or to slice off and share parts of their negotiated connection with subprocesses.

A normal Arcan build has one frameserver for each archetype, but they are all separate binaries that can be switched out for different/custom sets in more tailored/customized systems.

The decode frameserver is responsible for all conversions from binary datastreams to representations that can be presented to and understood by a human. In the end, it will hopefully become the sole place for parsing and decoding contents from unknown and untrusted sources, and be reducible to a handful of syscalls and no file-system access. A criterion for decode is reproducibility: the same input data should yield the same output.

The encode frameserver covers (potentially) lossy translation of data provided by Arcan, and as such it has the rare behavior of its primary shmif segment being mapped for output use. This covers normal media encoding and streaming, but also less obvious transformations such as OCR and text to speech.

Game is more useful in embedded and gaming-console like contexts. The added assumptions for the 'game' archetype are that it is mostly self-contained: a single primary segment, soft-realtime updates, with latency and performance being a priority. In the default build, the game archetype frameserver is built around libretro, an API that neatly envelops the typical system interaction needed by 'retro' games. It also serves as a good target for performance measurements.

The network frameserver provides basic communication routing between Arcan instances and is currently in an experimental state, likely to be reworked a number of times. The use case is local-network service announcement and discovery, with an assumption that there are Arcan-capable systems on both ends that need to synchronize data for mobility or distributed functionality.

The remoting frameserver represents remote-client protocols for outbound connections (inbound is, confusingly enough, still handled by 'encode'). Though it could've been part of 'decode', there is some merit to being able to discern between this particular context of use and the related network behavior and management of authentication primitives.

The external/non-authoritative archetype is a catch-all for everything not covered by the others. A listening socket is allocated by the running appl scripts on a per-connection basis, with an appl-chosen name. The closest parallel is the DISPLAY environment variable in X, but since it is named, it can be used by the appl for other modularity purposes. It is, for instance, possible to have a 'use-once' connection point for 'BACKGROUND', 'STATUSBAR' or something else appl-specific and have the script trigger different behavior based on the connection point name alone.
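The idea of triggering behavior from the connection point name alone can be sketched in plain Lua. This is a simulation with no sockets involved, and it does not use the engine's own allocation function; `open_point`, `client_connect` and the `roles` table are hypothetical names for illustration.

```lua
-- Hypothetical registry of connection points opened by the appl.
local points = {}

local function open_point(name, handler, use_once)
	points[name] = {handler = handler, use_once = use_once}
end

-- Simulates a client connecting to a named connection point.
local function client_connect(name, client)
	local point = points[name]
	if not point then
		return false
	end
	if point.use_once then
		points[name] = nil -- a use-once point disappears after one connection
	end
	point.handler(client)
	return true
end

-- The role a client gets is decided purely by the name it connected to.
local roles = {}
open_point("BACKGROUND", function(c) roles[c] = "background" end, true)
open_point("STATUSBAR", function(c) roles[c] = "statusbar" end, true)
```

In this model the first client that connects to 'BACKGROUND' is treated as the background provider, and a second attempt on the same name fails until the appl chooses to open the point again.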

A short example on the internal flow to illustrate how these layers act together. Imagine the scenario that we want an appl that provides a full-screen terminal where the user can type in commands.

```lua
function example(args)
	-- launch the built-in terminal frameserver; the callback receives its events
	connection = launch_avfeed("", "terminal",
	function(source, status)
		if (status.kind == "preroll") then
			-- hint the initial size and display density before the first frame
			target_displayhint(source, VRESW, VRESH, 0,
				{ppcm = VPPCM, subpixel_layout = "rgb"});
		elseif (status.kind == "resized") then
			blend_image(source, 1.0, 20);
			resize_image(source, status.width, status.height);
		elseif (status.kind == "terminated") then
			shutdown("terminal exited");
		end
	end
	);
end

function example_input(iotbl)
	-- forward all input to the terminal
	target_input(connection, iotbl);
end
```

This script is saved as 'example.lua' in a folder named 'example'. This structure is enforced to make it easy to figure out what is actually running and to provide a traceable entry point for initialization. It should (hopefully) start with:

```
arcan -p $HOME /path/to/example
```

First the engine will initialize the various subsystems that connect core with platform and appl: database, event, audio, video and scripting VM. Then it will map and verify a number of namespaces, where the important ones right now are ARCAN_APPLBASEPATH (where the engine will look for appls), ARCAN_RESOURCEPATH (read-only, shared resources) and ARCAN_BINPATH (chainloader - arcan_frameserver and prefix for builtin frameservers, afsrv_terminal, afsrv_decode, ...), although there are a number of others that can be defined in order to restrict and sort how the underlying filesystem will be used. The most important ones can be changed via command line arguments, and all of them can also be overridden through environment variables. There is also an initial autodetection of namespace configurations that is built into the platform layer. If the current configuration passes inspection, the main engine loop will take over and the initialization function ('example') in the script above will be invoked.

The first call, launch_avfeed, is used to launch a frameserver based on archetype; see doc/launch_avfeed.lua in the source repository for more details on how it works. The specified archetype here is 'terminal'. The function that is defined as an argument to launch_avfeed is a callback that will be triggered with information about every event the frameserver forwards, where the most important ones right now are "preroll", "resized" and "terminated".

The preroll stage is entered when the connection has been set up, and the scripts have a final chance to provide some information about initial states, desired size, fonts and so on. The 'source' argument acts as both an identifier for a graphics resource and a unique connection identifier.

resized comes after each delivered frame that has a size different from the last one. It is up to the script to rearrange things, or do some graphics-related magic when that happens. In this example, we just resize the rendered object to match the new size.

terminated happens when the client exits for whatever reason, which in this case is our cue to exit.

The engine main loop will continuously check if clients or the platform layer has delivered any new events or buffers.

With the model covered here, some odd and possibly unique features have been implemented, and this section will be used to briefly enumerate them.

The Appl layer is set to aggressively fail on the first sign of invalid API use. The default error handler in this case first checks if there is another set of scripts that has been designated as the 'fallback appl' and if that script has a function entry point that matches applname_adopt. In that case, each video handle reference that has a frameserver mapping will be filtered through that function, allowing the new set of scripts to adopt external connections without loss of data. This feature allows us to recover from script errors without loss of data, essentially replicating the gains from the "X11 window manager as a normal client" model without having to expose sensitive features via the same protocol as clients. It also means that it is possible to do live updates of the script sets and switch without shutting down, but revert/fallback if the new set of scripts induces a crash.
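The adopt-on-failure flow can be modelled in plain Lua as follows. This is a hedged sketch, not the engine's error handler: the handle list, `fallback_adopt` and `run_with_fallback` are hypothetical, and the convention that returning true keeps a connection is an assumption made for the illustration.

```lua
-- Hypothetical video handles; only some are backed by a frameserver.
local handles = {
	{id = 1, frameserver = true, title = "terminal"},
	{id = 2, frameserver = false, title = "wallpaper image"},
	{id = 3, frameserver = true, title = "media player"}
}

-- Stand-in for the fallback appl's applname_adopt entry point.
-- Assumption: return true to keep the connection, false to drop it.
local function fallback_adopt(handle)
	return handle.title ~= "media player"
end

-- Run the appl entry point; on a script error, offer each
-- frameserver-backed handle to the fallback's adopt function.
local function run_with_fallback(entry, adopt)
	local ok = pcall(entry)
	if ok then
		return "running", {}
	end
	local kept = {}
	for _, h in ipairs(handles) do
		if h.frameserver and adopt(h) then
			kept[#kept + 1] = h.id
		end
	end
	return "fallback", kept
end
```

Calling `run_with_fallback` with an entry point that raises an error switches to the fallback and keeps only handle 1: handle 2 has no frameserver mapping and handle 3 is declined by the adopt function.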

As with X11, if it is the server itself that crashes rather than the window management code, clients are screwed. Shmif has two facilities to counter this problem. One is that the event model covers a 'RESET' state to indicate to a client that important state has been lost, or that the user explicitly requests a return to a previous safe state. The other is that the scripts can send a migration hint, telling of another connection point or a fallback connection point should something happen to the main server connection. If such a point has been provided (for an external connection, the default is reusing the same name as the initial connection was established under) and the server happens to crash, the client will enter a blocking/sleeping state on the next shmif call (typically trying to retrieve an event) that will periodically try to connect to the fallback connection point. When that succeeds, it injects a RESET event with a state indicating that subsegments were lost, but the primary connection has been restored (so the subsegments can subsequently be re-requested if they fit the new connection).
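The client-side recovery behavior described above can be sketched in plain Lua. This is a model only, under the assumption that connection attempts can be represented by a callback; the `client` table, `recover` and the `try_connect` parameter are hypothetical and do not correspond to actual shmif functions.

```lua
-- Hypothetical client-side state, for illustration only.
local client = {
	primary = "connected",
	subsegments = {"POPUP"},
	fallback_point = "example_point",
	events = {}
}

-- try_connect is a stand-in for the real connection attempt; it receives the
-- connection point name and the attempt number and returns true on success.
local function recover(c, try_connect, max_attempts)
	c.primary = "lost"
	for attempt = 1, max_attempts do
		-- the real client would sleep between attempts
		if try_connect(c.fallback_point, attempt) then
			c.primary = "connected"
			c.subsegments = {} -- subsegments do not survive the crash
			c.events[#c.events + 1] =
				{kind = "RESET", reason = "subsegments lost"}
			return true
		end
	end
	return false
end
```

After a successful `recover`, the client sees a single injected RESET event, has its primary connection back and holds no subsegments, which it can then request again from the new server.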

This feature opens up a number of possibilities, a big one being recovery from server crashes with no or minimal data loss, depending on how the client is written. Another one is application mobility when combined with network proxying servers, load balancing or having a LAN application server that automigrates when your laptop connects.

Lightweight Arcan (LWA) is the platform build where the underlying video and agp platform outputs to shmif, allowing one build of the engine to work as the display server, while the LWA build runs scripts for other purposes. This becomes more powerful when combined with network transparent rendering via the bytecode format mentioned as an emergent side effect of the appl layer, and the remoting possibilities from live migration and a proxy server.

As such, it allows for more rapid development of desktop environment related scripts, as ideas can be tested without shutting down or risking loss of work from a crash. Furthermore, since most of the engine infrastructure is re-used for secondary purposes, the expanding set of scripts contributes to exercising engine paths for different use cases, indirectly expanding the testing set.

Lastly, the engine can be set to snapshot and provide monitoring of itself in multiple forms. One is a Lua serialisation format of data structures and state that is both periodically generated and forwarded to an external target (which can be an Arcan appl running via LWA) as part of monitoring for performance and bugs, and used as a part of crash-dump formats for analysing malfunctions.

Since most of the important state for external processes is kept as part of the shared memory layout, it too can be analysed on an event trigger by an external tool (see tools/shmmon), recovered by sharing the connection point mapping, or extracted through some system-specific means like Linux /proc.