This is a TensorFlow implementation of asynchronous one-step Q-learning, with an improved weight-update process (minibatch updates) to speed up training. The algorithm is described in *Asynchronous Methods for Deep Reinforcement Learning* (Mnih et al., 2016).
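One-step Q-learning regresses Q(s, a) toward the target y = r for terminal transitions and y = r + γ max_a' Q_target(s', a') otherwise. The sketch below shows how such targets can be computed for a minibatch and applied as one accumulated update, mirroring how the paper's workers accumulate gradients over several steps before updating the shared parameters. It assumes a tabular Q-function (a dict of action-value lists) instead of the repository's TensorFlow network, and the names `one_step_targets` and `minibatch_update` are hypothetical.

```python
from typing import Dict, List, Tuple

# (state, action, reward, next_state, terminal); states are hashable ids here
Transition = Tuple[int, int, float, int, bool]
QTable = Dict[int, List[float]]


def one_step_targets(batch: List[Transition], q_target: QTable,
                     gamma: float) -> List[float]:
    """y = r if terminal, else r + gamma * max_a' Q_target(s', a')."""
    targets = []
    for _, _, reward, next_state, terminal in batch:
        if terminal:
            targets.append(reward)
        else:
            targets.append(reward + gamma * max(q_target[next_state]))
    return targets


def minibatch_update(q: QTable, q_target: QTable, batch: List[Transition],
                     gamma: float, lr: float) -> None:
    """Accumulate TD updates over the whole batch, then apply them once."""
    targets = one_step_targets(batch, q_target, gamma)
    deltas: Dict[Tuple[int, int], float] = {}
    for (state, action, _, _, _), y in zip(batch, targets):
        # Negative gradient of 0.5 * (y - Q(s, a))**2 w.r.t. Q(s, a) is the TD error
        td_error = y - q[state][action]
        deltas[(state, action)] = deltas.get((state, action), 0.0) + lr * td_error
    # Apply all accumulated changes in a single step
    for (state, action), delta in deltas.items():
        q[state][action] += delta
```

Passing the same dict as both `q` and `q_target` gives plain Q-learning without a separate target network, since all targets are computed before any value is changed.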

Requirements:

- Python
- TensorFlow
- gym (with the Atari environments)
- OpenCV-Python
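Before running the scripts, a small check like the one below can confirm that the packages above are importable. This script is an illustration, not part of the repository; it assumes the usual import names (`tensorflow`, `gym`, and `cv2` for OpenCV-Python).

```python
import importlib.util
from typing import Dict, List

# Import names for the packages listed above (OpenCV-Python is imported as cv2)
REQUIRED_MODULES: Dict[str, str] = {
    "TensorFlow": "tensorflow",
    "gym": "gym",
    "OpenCV-Python": "cv2",
}


def missing_modules(modules: Dict[str, str]) -> List[str]:
    """Return the display names of packages whose import name cannot be found."""
    return [name for name, module in modules.items()
            if importlib.util.find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("All dependencies found.")
```

This only checks that the packages can be located; it does not verify that the Atari environments (for example `Breakout-v0`) are installed in gym.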

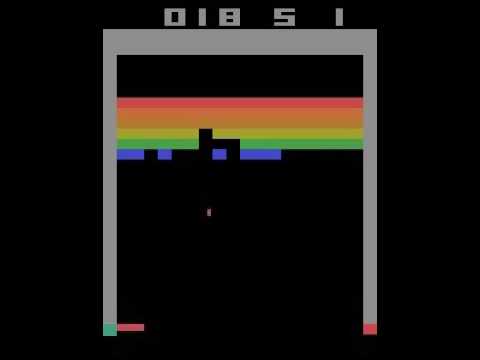

Run `play.py` to let the trained network play an Atari game (the default is `Breakout-v0`).
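When a trained Q-network plays, it typically picks the action with the highest predicted Q-value, sometimes with a small exploration rate ε (such as 0.05) so the agent does not get stuck. The sketch below shows epsilon-greedy selection over a list of Q-values; the function name `select_action` is hypothetical, and whether `play.py` uses a nonzero ε is an assumption not stated here.

```python
import random
from typing import Optional, Sequence


def select_action(q_values: Sequence[float], epsilon: float,
                  rng: Optional[random.Random] = None) -> int:
    """Epsilon-greedy: random action with probability epsilon, else argmax Q."""
    rng = rng or random.Random()
    if rng.random() < epsilon:
        # Explore: uniformly random action index
        return rng.randrange(len(q_values))
    # Exploit: greedy choice; max() keeps the first of tied maxima (lowest index)
    return max(range(len(q_values)), key=lambda a: q_values[a])
```

With `epsilon=0.0` this is purely greedy, which is the simplest way to watch what the network has learned.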

Run `train.py` to train the network on your own machine.
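In the paper's one-step Q-learning, several actor-learner threads run in parallel, each with its own environment copy, sharing the network parameters and a global step counter T; each thread applies its accumulated updates periodically, and the target network is refreshed every fixed number of global steps. The skeleton below shows only that threading structure. The names `SharedCounter`, `actor_learner` and `train` and all default values are hypothetical and not taken from `train.py`; environment and network calls are left as comments.

```python
import threading
from typing import List, Tuple


class SharedCounter:
    """Global step counter T shared by all actor-learner threads."""

    def __init__(self) -> None:
        self._value = 0
        self._lock = threading.Lock()

    def increment(self) -> int:
        """Atomically increment T and return the new value."""
        with self._lock:
            self._value += 1
            return self._value


def actor_learner(thread_id: int, counter: SharedCounter, max_steps: int,
                  batch_size: int, target_sync_every: int,
                  log: List[str], steps_done: List[int]) -> None:
    """One worker: claim global steps, buffer transitions, update per minibatch."""
    local_steps = 0
    pending = 0  # transitions buffered since this thread's last update
    while True:
        t = counter.increment()
        if t > max_steps:
            break
        local_steps += 1
        # (hypothetical) act in this thread's own environment copy and
        # compute the one-step target for the new transition here
        pending += 1
        if pending == batch_size:
            # (hypothetical) apply the accumulated minibatch update to the
            # shared network parameters here
            pending = 0
        if t % target_sync_every == 0:
            # (hypothetical) copy shared parameters into the target network
            log.append(f"thread {thread_id}: target sync at T={t}")
    steps_done.append(local_steps)


def train(num_threads: int = 4, max_steps: int = 1000, batch_size: int = 32,
          target_sync_every: int = 100) -> Tuple[List[str], List[int]]:
    """Start the workers, wait for them, and return the sync log and step counts."""
    counter = SharedCounter()
    log: List[str] = []
    steps_done: List[int] = []
    threads = [
        threading.Thread(target=actor_learner,
                         args=(i, counter, max_steps, batch_size,
                               target_sync_every, log, steps_done))
        for i in range(num_threads)
    ]
    for th in threads:
        th.start()
    for th in threads:
        th.join()
    return log, steps_done
```

Because each global step is claimed under the lock, every value of T from 1 to `max_steps` is processed by exactly one thread, so the target network is synced exactly `max_steps // target_sync_every` times regardless of thread scheduling.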

You should get a reasonably good result (a score of 40+) once `t` exceeds 2,000,000. On my computer (i5-4590 / 16 GB RAM / GTX 1060 6 GB), reaching that point takes at least 2-3 hours of training.

The OpenAI Gym evaluation at https://gym.openai.com/evaluations/eval_03aUUz45Sc6TBg0vifljwA comes from a network trained for 40 hours.