This repo has moved to princewang1994/RFCN_CoupleNet.pytorch and will no longer be updated here.

This project is a PyTorch implementation of R-FCN and CoupleNet. A large part of the code is adapted from jwyang/faster-rcnn.pytorch. The R-FCN structure follows Caffe R-FCN and Py-R-FCN.

- For R-FCN, mAP@0.5 reaches 73.2 when trained on the VOC2007 trainval set

- For CoupleNet, mAP@0.5 reaches 75.2 when trained on the VOC2007 trainval set

arXiv:1605.06409: R-FCN: Object Detection via Region-based Fully Convolutional Networks

Compared to jwyang/faster-rcnn.pytorch, this repo makes the following modifications:

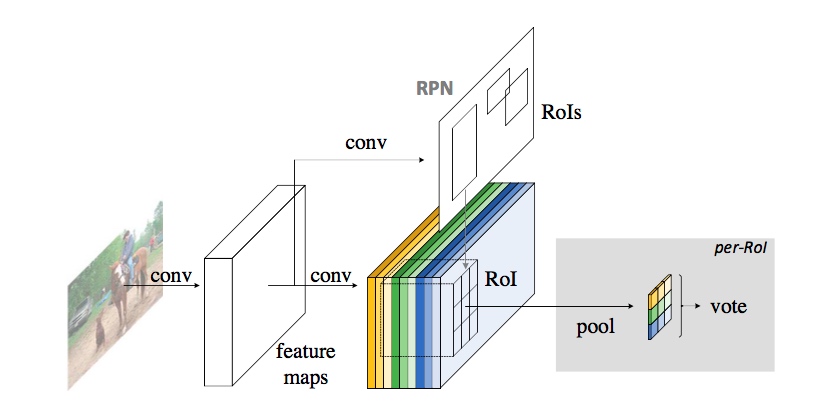

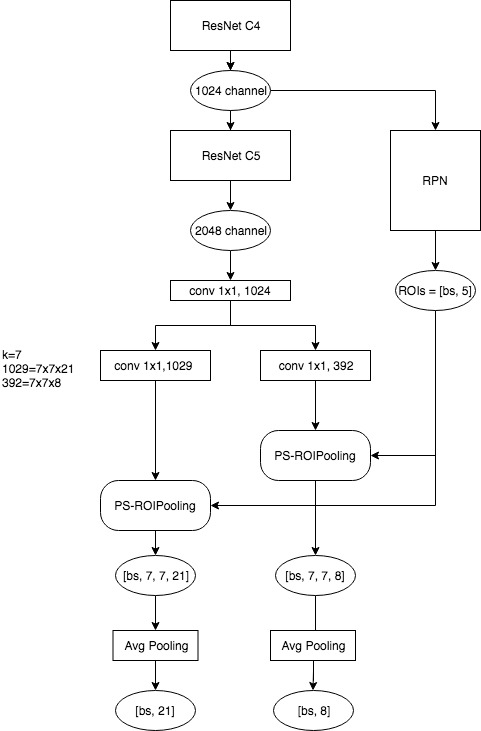

- R-FCN architecture: We referred to the original [Caffe version] of R-FCN. The main structure of R-FCN is shown in the following figure.

- PS-RoIPooling with CUDA: adapted from another PyTorch implementation of R-FCN, pytorch_RFCN. I have modified it to support multi-image training (batch sizes larger than 1 are supported).

- Multi-scale training: as in the original paper, each image is randomly resized to one of several resolutions (400, 500, 600, 700, 800) during training, and a fixed input size (600) is used at test time. This gives a 1.2 mAP gain in our experiments.

- OHEM: this repo implements the Online Hard Example Mining (OHEM) method from the paper. It is controlled by the `OHEM` option (`OHEM: False`) in `cfgs/res101.yml`. Unfortunately, it caused a slight performance degradation in my experiments.
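The multi-scale and OHEM items above can be sketched in a few lines of plain Python. This is only an illustration, not the code used in this repo: the names `TRAIN_SCALES`, `TEST_SCALE`, `pick_scale`, `resize_factor` and `select_hard_examples` are hypothetical, treating each scale as the target length of the shorter image side and capping the longer side at 1000 pixels are assumptions borrowed from common Faster R-CNN practice, and OHEM is reduced to its core idea of keeping only the RoIs with the highest loss.

```python
import random

# Scales listed above; assumed here to be the target length of the shorter side.
TRAIN_SCALES = (400, 500, 600, 700, 800)
TEST_SCALE = 600


def pick_scale(training, rng=random):
    """Pick a random scale per image during training, a fixed one at test time."""
    return rng.choice(TRAIN_SCALES) if training else TEST_SCALE


def resize_factor(height, width, scale, max_long_side=1000):
    """Return the factor that brings the shorter side to `scale`.

    The cap on the longer side (max_long_side) is an assumption,
    not a value taken from this repo.
    """
    factor = scale / min(height, width)
    if factor * max(height, width) > max_long_side:
        factor = max_long_side / max(height, width)
    return factor


def select_hard_examples(roi_losses, keep):
    """Core idea of OHEM: keep the indices of the `keep` highest-loss RoIs."""
    ranked = sorted(range(len(roi_losses)),
                    key=lambda i: roi_losses[i], reverse=True)
    return sorted(ranked[:keep])
```

In a real training loop only the selected RoIs would contribute to the backward pass; the sketch stops at index selection.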

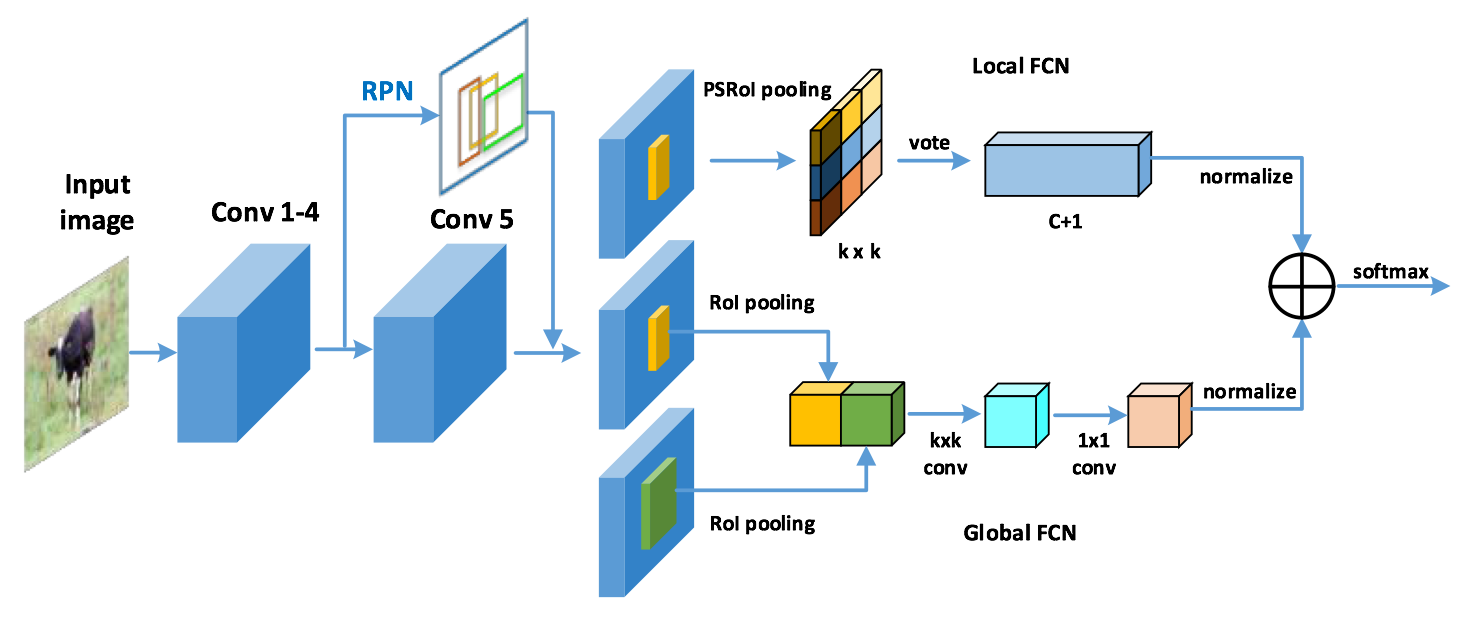

arXiv:1708.02863: CoupleNet: Coupling Global Structure with Local Parts for Object Detection

- Making changes based on R-FCN

- Implement local/global FCN in CoupleNet

We benchmark our code on PASCAL VOC using two architectures: R-FCN and CoupleNet. The results are as follows:

1). PASCAL VOC 2007 (Train: 07_trainval - Test: 07_test, scale=400, 500, 600, 700, 800)

| model | #GPUs | batch size | lr | lr_decay | max_epoch | time/epoch | mem/GPU | mAP |
|---|---|---|---|---|---|---|---|---|
| R-FCN | 1 | 2 | 4e-3 | 8 | 20 | 0.88 hr | 3000 MB | 73.8 |
| CoupleNet | 1 | 2 | 4e-3 | 8 | 20 | 0.60 hr | 8900 MB | 75.2 |

- A pretrained model for R-FCN (VOC2007) has been released; see the Test part below.

First of all, clone the code

$ git clone https://github.com/princewang1994/R-FCN.pytorch.git

Then, create a folder:

$ cd R-FCN.pytorch && mkdir data

$ cd data

$ ln -s $VOC_DEVKIT_ROOT .

- Python 3.6

- PyTorch 0.3.0 (0.4.0 is NOT supported because of some errors)

- CUDA 8.0 or higher

- PASCAL_VOC 07+12: Please follow the instructions in py-faster-rcnn to prepare the VOC datasets; any other guide works as well. After downloading the data, create softlinks in the folder data/.

- Pretrained ResNet: download from here and put it at `$RFCN_ROOT/data/pretrained_model/resnet101_caffe.pth`.

As pointed out by ruotianluo/pytorch-faster-rcnn, choose the right `-arch` in the make.sh file to compile the CUDA code:

| GPU model | Architecture |
|---|---|
| TitanX (Maxwell/Pascal) | sm_52 |
| GTX 960M | sm_50 |
| GTX 1080 (Ti) | sm_61 |
| Grid K520 (AWS g2.2xlarge) | sm_30 |
| Tesla K80 (AWS p2.xlarge) | sm_37 |

More details about setting the architecture can be found here or here
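If you script your setup, the table above can be kept as a small lookup. The sketch below is only a convenience, assuming you know your GPU's marketing name; `GPU_ARCH` and `arch_for` are hypothetical names, and the values are copied from the table rather than queried from the driver.

```python
# GPU model -> architecture, copied from the table above.
GPU_ARCH = {
    "TitanX (Maxwell/Pascal)": "sm_52",
    "GTX 960M": "sm_50",
    "GTX 1080 (Ti)": "sm_61",
    "Grid K520 (AWS g2.2xlarge)": "sm_30",
    "Tesla K80 (AWS p2.xlarge)": "sm_37",
}


def arch_for(gpu_name):
    """Return the architecture of the first table entry that mentions `gpu_name`."""
    needle = gpu_name.lower()
    for model, arch in GPU_ARCH.items():
        if needle in model.lower():
            return arch
    raise KeyError("GPU not in the table: {}".format(gpu_name))
```

For a GPU that is not listed, look up its compute capability in the resources mentioned above and set the architecture by hand.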

Install all the python dependencies using pip:

$ pip install -r requirements.txt

Compile the cuda dependencies using following simple commands:

$ cd lib

$ sh make.sh

It will compile all the modules you need, including NMS, ROI_Pooling, ROI_Align and ROI_Crop. The default version is compiled with Python 2.7; please compile it yourself if you are using a different Python version.

To train an R-FCN model with ResNet101 on pascal_voc, simply run:

$ CUDA_VISIBLE_DEVICES=$GPU_ID python trainval_net.py \

--arch rfcn \

--dataset pascal_voc --net res101 \

--bs $BATCH_SIZE --nw $WORKER_NUMBER \

--lr $LEARNING_RATE --lr_decay_step $DECAY_STEP \

--cuda

- Set `--s` to identify different experiments.

- For CoupleNet training, replace `--arch rfcn` with `--arch couplenet`. Other arguments should be adjusted to your machine (e.g. a larger learning rate for a bigger batch size).

- Models are saved to `$RFCN_ROOT/save`.
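As a rough sketch of how these arguments fit together, the helper below assembles the training command from the flags shown above and scales the learning rate linearly with the batch size. The linear scaling rule is a common heuristic and an assumption here, not something this repo prescribes; `scaled_lr` and `build_train_command` are hypothetical names, and the base values (lr 4e-3 at batch size 2) are taken from the benchmark table.

```python
BASE_LR = 4e-3        # learning rate from the benchmark table
BASE_BATCH_SIZE = 2   # batch size from the benchmark table


def scaled_lr(batch_size):
    """Linear scaling heuristic (an assumption, not a rule from this repo)."""
    return BASE_LR * batch_size / BASE_BATCH_SIZE


def build_train_command(arch, batch_size, workers, decay_step, lr=None):
    """Assemble the trainval_net.py command line shown above as an argument list."""
    if arch not in ("rfcn", "couplenet"):
        raise ValueError("arch must be 'rfcn' or 'couplenet'")
    if lr is None:
        lr = scaled_lr(batch_size)
    return [
        "python", "trainval_net.py",
        "--arch", arch,
        "--dataset", "pascal_voc", "--net", "res101",
        "--bs", str(batch_size), "--nw", str(workers),
        "--lr", str(lr), "--lr_decay_step", str(decay_step),
        "--cuda",
    ]
```

The returned list can be passed to `subprocess.run`, with `CUDA_VISIBLE_DEVICES` set in the environment as in the command above.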

If you want to evaluate the detection performance of a pre-trained model on the pascal_voc test set, simply run

$ python test_net.py --dataset pascal_voc --arch rfcn \

--net res101 \

--checksession $SESSION \

--checkepoch $EPOCH \

--checkpoint $CHECKPOINT \

--cuda

- Specify the model session (`--s` in the training phase), checkepoch and checkpoint, e.g., SESSION=1, EPOCH=6, CHECKPOINT=5010.

- R-FCN VOC2007: faster_rcnn_2_12_5010.pth

Download it from the link above and put it at save/rfcn/res101/pascal_voc/faster_rcnn_2_12_5010.pth. Then set SESSION=2, EPOCH=12, CHECKPOINT=5010 in the test command. It should reach 73.2 mAP.
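The relation between the three values and the file name can be sketched as below. The naming pattern is inferred from the single example path in this section, so treat it as an assumption; `checkpoint_path` is a hypothetical helper, not a function from this repo.

```python
def checkpoint_path(arch, net, dataset, session, epoch, checkpoint, save_dir="save"):
    """Build the checkpoint location, following the pattern of the example path above."""
    filename = "faster_rcnn_{}_{}_{}.pth".format(session, epoch, checkpoint)
    return "/".join([save_dir, arch, net, dataset, filename])


# Values from the pretrained R-FCN model mentioned above.
path = checkpoint_path("rfcn", "res101", "pascal_voc", session=2, epoch=12, checkpoint=5010)
```

Checking that this file exists before launching the test command gives a quick sanity check on the session, epoch and checkpoint values.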

Below are some detection results:

- Keep updating structures to reach the state of the art

- More benchmarking in VOC0712/COCO

- RFCN pretrained model for VOC07

- CoupleNet pretrained model for VOC07

- Adapt to fit PyTorch 0.4.0

This project is written by Prince Wang. Thanks to jwyang, the author of the faster-rcnn.pytorch code.