August 2020

tl;dr: Use deep reinforcement learning to refine mono3D results.

The proposed RAR-Net is a plug-and-play refinement module and can be used with any mono3D pipeline. This paper comes from the same authors as FQNet. Instead of passively scoring densely generated 3D proposals, RAR-Net uses an DRL agent to actively refine the coarse prediction. Similarly, shift RCNN actively learns to regress the differene.

RAR-Net encodes the 3D results as a 2D rendering with color coding. The idea is very similar to that of FQNet which encodes the 2D projection of 3D bbox as a wireframe and directly rendered on top of the input patch. This is the "direct projection" baseline in RAR-Net. Instead, RAR-Net uses a parameter aware data enhancement. and encodes semantic meaning of the surfaces as well (each surface of the box is painted in a specific color).

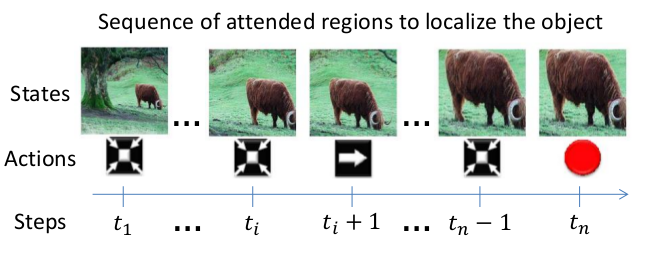

The idea of training a DRL agent to do object detection or refinement is not new. It is very similar to the idea of Active Object Localization with Deep Reinforcement Learning ICCV 2015.

- The curse of sampling in the 3D space. The probability to generate a good sample is lower than in 2D space.

- Single action: moving in one direction at a time is the most efficient, as the training data collected in this way is the most concentrated, instead of scattered throughout 3D space.

- One stage vs Two stage vs MDP

- one stage is not good enough. Two stages are hard to train separately. Thus MDP via DRL, as there is no optimal path to supervise the agent.

- DQN

- input: cropped image patch + parameter-aware data enhancement (color coded cuboid projection)

- output: Q-values for 15 actions. (2 * 7 DoF adjustment + one STOP/None action)

- Each action is discrete during each iteration, and it is in allocentric coordinate system instead of global system. This helps to learn the DQN same action for the same appearance.

- Reward is +1 if the 3D IoU increase, -1 if decreases.

- The training data is generated from jittering the GT.

- There are two commonly used split for KITTI validation dataset, one from mono3D from UToronto, and one from Savarese from Stanford. It should be checked to perform apple-to-apple comparison. Similarly, there are different validation split for monocular depth estimation.

- What is the alternative to RL? There is one baseline with all the features as RAR-Net but without RL in Table 5.