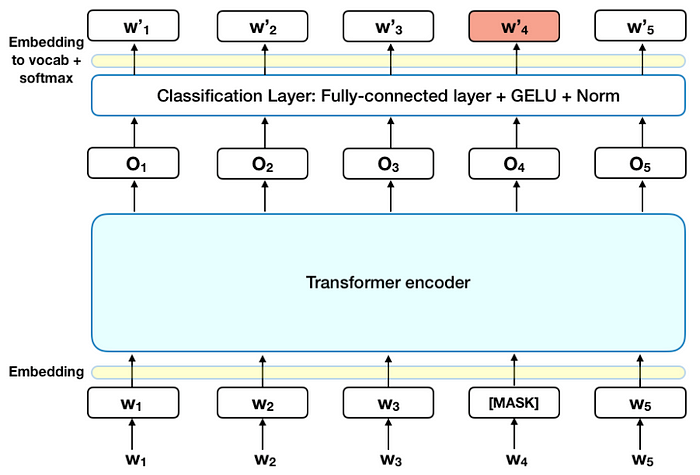

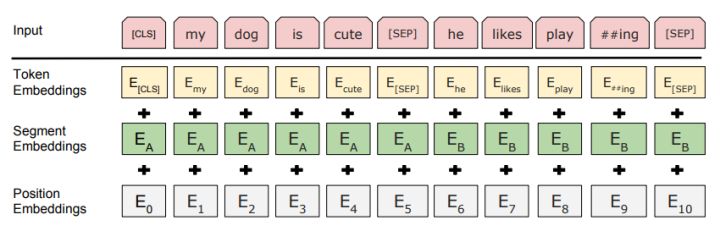

Bidirectional Encoder Representations from Transformers (BERT) is a Transformer-based machine learning technique for natural language processing (NLP) pre-training developed by Google. BERT is a deeply bidirectional, unsupervised language representation, pre-trained using only a plain text corpus. Context-free models such as word2vec or GloVe generate a single embedding for each word in the vocabulary, whereas BERT takes into account the context of each occurrence of a given word.

BERT has inspired many variants: RoBERTa, XLNet, MT-DNN, SpanBERT, VisualBERT, K-BERT, HUBERT and more. Some variants attempt to compress the model: TinyBERT, ALBERT, DistilBERT and more. We describe a few of the variants that outperform BERT in many tasks.

RoBERTa: Showed that the original BERT was undertrained. RoBERTa is trained longer, on more data, with bigger batches and longer sequences, without the next sentence prediction (NSP) objective, and with a masking pattern that changes dynamically during training.
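To make the difference between a fixed and a dynamic masking pattern concrete, here is a minimal sketch in plain Python. It is an illustration only, not RoBERTa's actual preprocessing: the helper `mask_tokens` is hypothetical, the sentence is made up, and the sketch always substitutes `[MASK]`, which simplifies the real replacement scheme.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, mask_prob=0.15, rng=None):
    """Return a copy of `tokens` with about `mask_prob` of positions masked.

    Hypothetical helper for illustration; at least one position is masked.
    """
    rng = rng or random
    n_to_mask = max(1, round(len(tokens) * mask_prob))
    positions = set(rng.sample(range(len(tokens)), n_to_mask))
    return [MASK if i in positions else tok for i, tok in enumerate(tokens)]


tokens = "the quick brown fox jumps over the lazy dog".split()

# Static masking: the pattern is sampled once and reused in every epoch.
static_view = mask_tokens(tokens, rng=random.Random(0))
static_epochs = [static_view for _ in range(3)]

# Dynamic masking: a new pattern is sampled each time the sequence is served.
rng = random.Random(0)
dynamic_epochs = [mask_tokens(tokens, rng=rng) for _ in range(3)]

for epoch, (s, d) in enumerate(zip(static_epochs, dynamic_epochs), start=1):
    print(f"epoch {epoch} static : {' '.join(s)}")
    print(f"epoch {epoch} dynamic: {' '.join(d)}")
```

With dynamic masking the model is likely to see different masked positions for the same sentence across epochs, which matters more the longer training runs.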

ALBERT: Uses parameter reduction techniques to yield a smaller model. To utilize inter-sentence coherence, ALBERT uses Sentence-Order Prediction (SOP) instead of NSP.

XLNet: Doesn't do masking but uses permutation to capture bidirectional context. It combines the best of denoising autoencoding of BERT and autoregressive language modelling of Transformer-XL.
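The permutation idea behind XLNet can be sketched in a few lines. The function below is a hypothetical illustration: given a factorization order (a permutation of token positions), it lists which tokens are visible when each target is predicted. It does not reproduce how XLNet implements this internally with attention masks; it only shows why a permuted order exposes context from both sides.

```python
def factorization_contexts(tokens, order):
    """List (target, visible_context) pairs for one factorization order.

    `order` is a permutation of token positions. The token at order[t] is
    predicted from the tokens at order[:t], wherever those positions fall
    in the original sentence.
    """
    steps = []
    for t, target_pos in enumerate(order):
        visible = sorted(order[:t])  # positions already seen in this order
        steps.append((tokens[target_pos], [tokens[p] for p in visible]))
    return steps


tokens = ["New", "York", "is", "a", "city"]

# Left-to-right order: each token only sees what precedes it.
print("order 0,1,2,3,4")
for target, context in factorization_contexts(tokens, [0, 1, 2, 3, 4]):
    print(f"  predict {target!r:8} from {context}")

# One possible permuted order: "York" is predicted last, so its context
# contains words to its left and to its right.
print("order 2,4,0,3,1")
for target, context in factorization_contexts(tokens, [2, 4, 0, 3, 1]):
    print(f"  predict {target!r:8} from {context}")
```

Averaged over many sampled orders, every token ends up being predicted from contexts on both sides, while each individual prediction remains autoregressive.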

MT-DNN: Uses BERT with additional multi-task training on NLU tasks. Cross-task data leads to regularization and more general representations.

We will use the SMILE Twitter dataset.

1️⃣ Understand what sentiment analysis is and how to approach the problem from a neural network perspective.

2️⃣ Load a pretrained BERT with a custom output layer.

3️⃣ Train and evaluate the finetuned BERT architecture on sentiment analysis.

- BERT improves on RNNs in that it can parallelize processing and training. For example, an RNN has to process the words of a sentence one at a time, whereas BERT processes them in parallel.

- BERT is a large-scale transformer-based Language Model that can be finetuned for a variety of tasks.

We will be using the Hugging Face Transformers library, which provides a high-level API to state-of-the-art transformer-based models such as BERT, GPT-2, ALBERT, RoBERTa, and many more. The Hugging Face team also maintains Tokenizers, a fast and efficient library for text tokenization.

- Bidirectional: BERT is naturally bidirectional.

- Generalizable: A pre-trained BERT model can be fine-tuned easily for downstream NLP tasks.

- High performance: Fine-tuned BERT models beat state-of-the-art results on many NLP tasks.

- Universal: The framework is pre-trained on a large corpus of unlabelled text that includes the entire Wikipedia (2,500 million words) and Book Corpus (800 million words).

Use the package manager pip to install the dependencies:

pip install torch torchvision

pip install tqdm

pip install transformers

0️⃣1️⃣ An introduction to some basic theory behind BERT, and the problem we will be using it to solve

0️⃣2️⃣ Explore dataset distribution and some basic preprocessing

0️⃣3️⃣ Split dataset into training and validation using stratified approach

0️⃣4️⃣ Load a pretrained tokenizer to encode our text data into numerical values (tensors)

0️⃣5️⃣ Load in pretrained BERT with custom final layer

0️⃣6️⃣ Create dataloaders to facilitate batch processing

0️⃣7️⃣ Choose an optimizer and scheduler to control training of the model

0️⃣8️⃣ Design performance metrics for our problem

0️⃣9️⃣ Create a training loop to control PyTorch finetuning of BERT using CPU or GPU acceleration

1️⃣0️⃣ Load the finetuned BERT model and evaluate its performance

1️⃣1️⃣ Other resources
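Step 03 of the outline above calls for a stratified train/validation split, which keeps each class's share roughly equal in both subsets. The sketch below shows the idea with the standard library only. The function `stratified_split`, the 15% validation fraction, the seed, and the label names and counts are all assumptions made for illustration; they are not taken from the project or from the SMILE data.

```python
import random
from collections import Counter, defaultdict


def stratified_split(labels, val_fraction=0.15, seed=17):
    """Return (train_indices, val_indices) with class proportions preserved."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)
    train_idx, val_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_val = round(len(indices) * val_fraction)
        # Keep at least one validation example for any class with 2+ items.
        if len(indices) > 1:
            n_val = max(1, n_val)
        val_idx.extend(indices[:n_val])
        train_idx.extend(indices[n_val:])
    return sorted(train_idx), sorted(val_idx)


# Hypothetical, imbalanced labels standing in for the real dataset.
labels = ["happy"] * 80 + ["angry"] * 12 + ["sad"] * 8
train_idx, val_idx = stratified_split(labels)

print("train:", Counter(labels[i] for i in train_idx))
print("val:  ", Counter(labels[i] for i in val_idx))
```

Splitting per class matters for imbalanced data: a purely random split could leave a rare class with no validation examples at all. In practice, scikit-learn's `train_test_split` with its `stratify` argument serves the same purpose.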