Riax is an Elixir wrapper for Riak Core. Riak Core is a building block for distributed and scalable systems in the form of an Erlang framework.

To learn more about Riak, check the Riak Core and Useful links sections. To learn more about Riax, check the setup, the tutorial or the API Reference.

If you want to set up Riak Core with Erlang instead, we also have an up-to-date (OTP 25) tutorial.

```elixir
iex(dev1@127.0.0.1)1> #### Check the Ring Status
iex(dev1@127.0.0.1)2> Riax.ring_status
==================================== Nodes ====================================
Node a: 64 (100.0%) dev1@127.0.0.1
==================================== Ring =====================================
aaaa|aaaa|aaaa|aaaa|aaaa|aaaa|aaaa|aaaa|aaaa|aaaa|aaaa|aaaa|aaaa|aaaa|aaaa|aaaa|
:ok
iex(dev1@127.0.0.1)3> #### Join an already running Node
iex(dev1@127.0.0.1)4> Riax.join('dev2@127.0.0.1')
13:51:21.258 [debug] Handoff starting with target: {:hinted, {913438523331814323877303020447676887284957839360, :"dev2@127.0.0.1"}}
iex(dev1@127.0.0.1)5> #### Send a command to a VNode
iex(dev1@127.0.0.1)6> Riax.sync_command(1, "riax", {:ping, 1})
13:13:08.004 [debug] Received ping command!
{:pong, 2, :"dev1@127.0.0.1", 822094670998632891489572718402909198556462055424}
```

Add Riax to your dependencies:

```elixir
defp deps do
  [
    {:riax, ">= 0.1.0", github: "lambdaclass/elixir_riak_core", branch: "main"}
  ]
end
```

Then follow the setup.

Riak Core is based on the Dynamo architecture. This means it is easy to scale horizontally and it distributes work in a decentralized manner. The great thing about Riak is that it provides this architecture as a reusable Erlang library. It can therefore be used in any context that benefits from a decentralized distribution of work.

You might be thinking: "OK, fine, this is an Erlang lib, I'll use it directly." However, setting up Riak Core can be tricky, especially from Elixir. This library takes care of all the gory details for you: we suffered so you don't have to.

The key here is that Riak Core provides Consistent Hashing and Virtual Nodes (VNodes). Work is distributed among the Virtual Nodes, and Consistent Hashing lets us route each command to the right Virtual Node. Many Virtual Nodes can run on a single Physical Node (i.e. a physical server), and they can easily be set up or taken down. Plus, with this library all you have to do is give them names and implement a behaviour; Riak handles the rest for you.

The most intuitive and straightforward use case is an in-memory key-value store; we've actually implemented one here for our tests.

A game server that handles requests from players could use this hashing to partition players and spread the load. It could also ensure that each player's requests are always handled by the same Virtual Node, which preserves data locality.

A distributed batch job handling system could also use consistent hashing and routing to ensure that jobs from the same batch are always handled by the same node. Alternatively, it could distribute the jobs across several partitions and then use distributed map-reduce queries to gather the results.
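The batch-job idea can be simulated locally to show the two steps: routing by batch, then map-reduce over partitions. This is a minimal sketch under stated assumptions, not Riax's API. The `BatchSketch` module and its functions are hypothetical. `:erlang.phash2/2` stands in for Riak Core's consistent hashing, and `Task.async_stream/2` stands in for Virtual Nodes working in parallel.

```elixir
defmodule BatchSketch do
  # Hypothetical local simulation (no Riak Core involved) of the batch-job idea.
  # :erlang.phash2/2 stands in for the ring's consistent hash.

  # Every job of a batch hashes to the same partition.
  def partition(batch_id, num_partitions), do: :erlang.phash2(batch_id, num_partitions)

  # Group {batch_id, payload} jobs by the partition of their batch.
  def assign(jobs, num_partitions) do
    Enum.group_by(jobs, fn {batch_id, _payload} -> partition(batch_id, num_partitions) end)
  end

  # Map step: each partition's jobs are processed concurrently.
  # Reduce step: per-partition results are folded into a single value.
  def run(jobs, num_partitions, map_fun, reduce_fun, initial) do
    jobs
    |> assign(num_partitions)
    |> Task.async_stream(fn {_partition, part_jobs} -> Enum.map(part_jobs, map_fun) end)
    |> Enum.reduce(initial, fn {:ok, results}, total ->
      Enum.reduce(results, total, reduce_fun)
    end)
  end
end

# Usage: square every payload and sum the results across all partitions.
jobs = [{:batch_a, 1}, {:batch_a, 2}, {:batch_b, 3}, {:batch_c, 4}]
BatchSketch.run(jobs, 8, fn {_batch, n} -> n * n end, &+/2, 0)
# => 30
```

Because the batch id alone decides the partition, both `:batch_a` jobs always land in the same group. The final sum does not depend on the order in which partitions finish.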

Another example: think about serving a dataset that you want quick access to, but that is too big to fit in memory. We could distribute the files (or a single file) among Virtual Nodes. Each piece would take an identifier (say, an index), hash it and be assigned to a Virtual Node. Riak fits really well here because it scales horizontally with ease. This last use case is explained below.

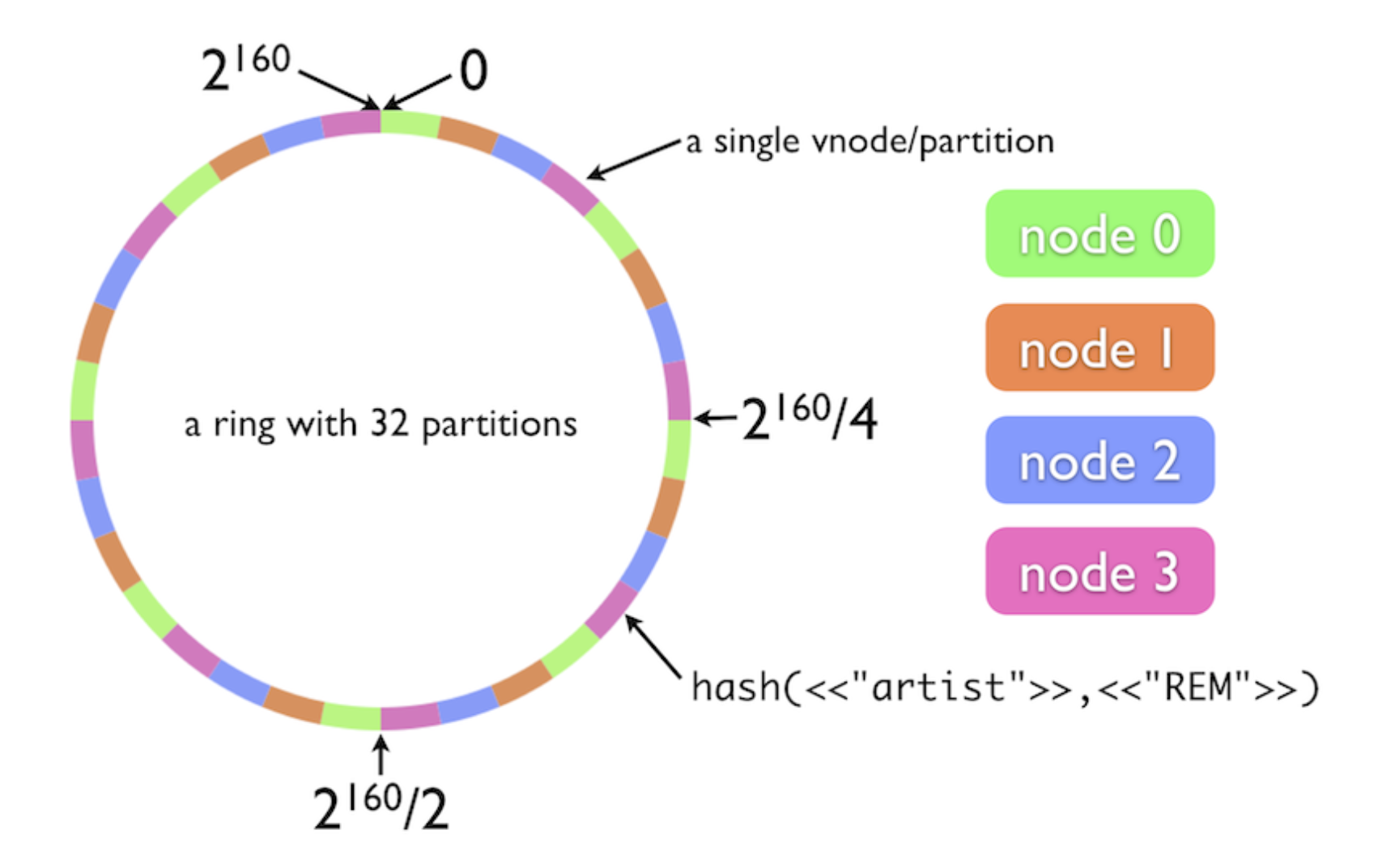

Before an operation is performed, a hashing function is applied to some piece of data, the key. The key's hash decides which node in the cluster is responsible for executing the operation. The range of possible hash values is called the keyspace and is usually depicted as a ring. It is partitioned into equally sized buckets, which are assigned to Virtual Nodes.
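As a worked example, assume riak_core's 160-bit SHA-1 keyspace and the 64-partition ring shown in the `Riax.ring_status` output. Each partition then covers an equal slice of the keyspace. The large integers in the `Riax.join` and `Riax.sync_command` examples are exact multiples of that slice, which is consistent with them being partition indexes:

$$
\begin{aligned}
\text{keyspace size} &= 2^{160}, \qquad N = 64 = 2^{6} \text{ partitions} \\
\text{partition width} &= 2^{160} / 2^{6} = 2^{154} \approx 2.28 \times 10^{46} \\
822094670998632891489572718402909198556462055424 &= 36 \cdot 2^{154} \\
913438523331814323877303020447676887284957839360 &= 40 \cdot 2^{154}
\end{aligned}
$$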

Virtual Nodes share what is called a keyspace. The number of VNodes is fixed at cluster creation, so a given hash value will always belong to the same partition (i.e. the same VNode). The VNodes, in turn, are evenly distributed across all available physical nodes. Unlike the keyspace partitioning, this distribution isn't fixed: it can change when a physical node is added to the cluster or goes down.
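The two mappings can be made concrete with a minimal, self-contained sketch in plain Elixir. It is not Riax's or riak_core's API, and the `RingSketch` module is hypothetical. It assumes a 160-bit SHA-1 keyspace like riak_core's and a power-of-two partition count. It assigns VNodes to physical nodes with a naive round-robin rather than riak_core's claim algorithm. The point it illustrates is that key-to-partition depends only on the key, while partition-to-node depends on cluster membership.

```elixir
defmodule RingSketch do
  # Hypothetical, simplified consistent-hashing ring (not Riax's API).
  # Assumes a 160-bit SHA-1 keyspace; does not reproduce riak_core's
  # exact partition-index or ownership-claim algorithms.
  @keyspace Integer.pow(2, 160)

  # Hash any term to an integer in the range 0..2^160 - 1.
  def hash(key) do
    <<int::unsigned-integer-size(160)>> =
      :crypto.hash(:sha, :erlang.term_to_binary(key))

    int
  end

  # Partition (VNode) for a key: depends only on the key and the fixed
  # partition count (assumed a power of two, so it divides the keyspace evenly).
  def partition(key, num_partitions) do
    div(hash(key), div(@keyspace, num_partitions))
  end

  # Assign partitions round-robin to physical nodes. Unlike partition/2,
  # this mapping changes whenever a node joins or leaves.
  def owner(partition, nodes) do
    Enum.at(nodes, rem(partition, length(nodes)))
  end
end

# Usage with a hypothetical key modelled on the ping example's bucket and key.
p = RingSketch.partition({"riax", 1}, 64)

# A single-node cluster owns every partition.
RingSketch.owner(p, [:"dev1@127.0.0.1"])
# => :"dev1@127.0.0.1"

# After a second node joins, p is unchanged, but its owner may move.
RingSketch.owner(p, [:"dev1@127.0.0.1", :"dev2@127.0.0.1"])
```

With plain `rem/2`, adding a node can reassign many partitions at once. In Riak Core, moving a VNode's data to its new owner is the job of handoff, which is what the debug line in the `Riax.join` example reports.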

After this, be sure to check the tutorial to see this in action.

- Introducing Riak Core
- Riak Core Wiki
- Masterless Distributed Computing with Riak Core
- Ryan Zezeski's "working" blog: First, multinode and The vnode
- Little Riak Core Book
- riak_core in Elixir: Part I, Part II, Part III, Part IV and Part V
- A Gentle Introduction to Riak Core
- Understanding Riak Core: Handoff, Building Handoff and The visit fun
- udon_ng example application