Assignments for the course CSCI 599: 3D Vision

Repo template here

git clone https://github.com/jingyangcarl/CSCI599.git

cd CSCI599

ls ./ # you should see index.html and README.md showup in the terminal

code ./ # open this folder via vscode locally

# open and right click on the index.html

# select "Open With Live Server" to run the code over localhost.In this assignment, you will implement surface subdivision and simplification using Loop Subdivision and Quadric Error Metrics, respectively. The task requires the construction of a data structure with adjacency, such as half-edge or incidence matrices, to facilitate quick traversal of mesh regions for computations. You can find the algorithms in the class lectures. The outcome will be an upsampled or downsampled version of a given mesh.

The following files are used:

assignments/assignment1.pyhtml/assignment1.htmljs/assignment1.js

- +40 pts: Implement loop subdivision.

- +40 pts: Implement Quadratic Error based mesh decimation.

- +20 pts: Write up your project, detials of data structure, algorithms, reporting runtime and visualizations of results with different parameters.

- +10 pts: Extra credit (see below)

- -5*n pts: Lose 5 points for every time (after the first) you do not follow the instructions for the hand in format

Forbidden You are not allowed to call subdivision or simplification functions directly. Reading, visualization and saving of meshes are provided in the start code.

Extract Credit You are free to complete any extra credit:

- up to 5 pts:Analyze corner cases (failure cases) and find solutions to prevent them.

- up to 10 pts: Using and compare two different data structures.

- up to 10 pts: Implement another subdivision or simplification algorithm.

- up to 10 pts: Can we preserve the original vertices after decimation (the vertices of the new meshes are a subset of the original vertices) ? Give it a try.

For all extra credit, be sure to demonstrate in your write up cases where your extra credit.

In this assignment, you will implement structure from motion in computer vision. Structure from motion (SFM) is a technique used to reconstruct the 3D structure of a scene from a sequence of 2D images or video frames. It involves estimating the camera poses and the 3D positions of the scene points.

The goal of SFM is to recover the 3D structure of the scene and the camera motion from a set of 2D image correspondences. This can be achieved by solving a bundle adjustment problem, which involves minimizing the reprojection error between the observed 2D points and the projected 3D points.

To implement SFM, you will need to perform the following steps:

- Feature extraction: Extract distinctive features from the input images.

- Feature matching: Match the features across different images to establish correspondences.

- Camera pose estimation: Estimate the camera poses for each image.

- Triangulation: Compute the 3D positions of the scene points using the camera poses and the corresponding image points.

- Bundle adjustment: Refine the camera poses and the 3D points to minimize the reprojection error.

By implementing SFM, you will gain hands-on experience with fundamental computer vision techniques and learn how to reconstruct 3D scenes from 2D images. This assignment will provide you with a solid foundation for further studies in computer vision and related fields.

The following files are used:

assignments/assignment2/assignment2.pyassignments/assignment2/feat_match.pyassignments/assignment2/sfm.pyassignments/assignment2/utils.pyhtml/assignment2.htmljs/assignment2.js

- +80 pts: Implement the structure-from-motion algorithm with the start code.

- +20 pts: Write up your project, algorithms, reporting results (reprojection error) and visualisations (point cloud and camera pose), compare your reconstruction with open source software Colmap.

- +10 pts: Extra credit (see below)

- -5*n pts: Lose 5 points for every time (after the first) you do not follow the instructions for the hand in format

Extract Credit You are free to complete any extra credit:

- up to 5 pts: Present results with your own captured data.

- up to 10 pts: Implement Bundle Adjustment in incremental SFM.

- up to 10 pts: Implement multi-view stereo (dense reconstruction).

- up to 20 pts: Create mobile apps to turn your SFM to a scanner.

- up to 10 pts: Any extra efforts you build on top of basic SFM.

For all extra credit, be sure to demonstrate in your write up cases where your extra credit.

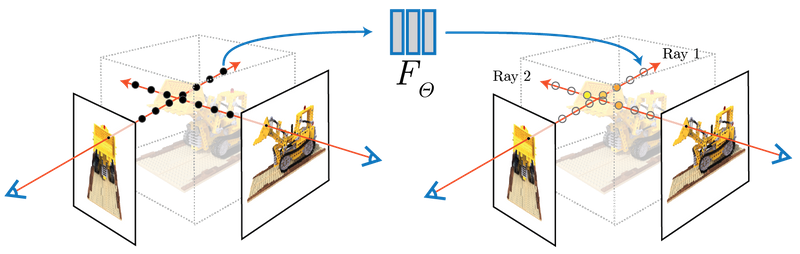

A Neural Radiance Field (NeRF) is a computer vision technique for constructing highly detailed 3D scenes from sets of 2D images. It uses a deep neural network to map spatial coordinates and viewing angles to color and density, enabling the rendering of new viewpoints in complex environments. This makes NeRF valuable for applications in virtual reality and visual effects

Recent advancements in novel-view synthesis have introduced a method using 3D Gaussians to improve visual quality while maintaining real-time display rates. This technique employs sparse points from camera calibration to represent scenes with 3D Gaussians, preserving the characteristics of volumetric radiance fields. It includes an optimization and density control mechanism, along with a rapid, visibility-aware rendering algorithm that supports anisotropic splatting. This method effectively generates high-quality 3D scenes from 2D images in real-time.

In this assignment, you'll play around with these neural renderings and train your own NeRF/3DGS.

The following files are used:

community colabs or reposhtml/assignment3.htmljs/assignment3.js

- +80 pts: Complete the training using your own data.

- +20 pts: Write up your project, algorithms, reporting results (reprojection error) and visualisations, compare your reconstruction with open source software Colmap.

- +10 pts: Extra credit (see below)

- -5*n pts: Lose 5 points for every time (after the first) you do not follow the instructions for the hand in format

Extract Credit You are free to complete any extra credit:

- up to 5 pts: Present results with your own captured data.

- up to 10 pts: Train your models with both Nerf and 3DGS.

- up to 20 pts: Train your model with language embedding (e.g., LERF).

For all extra credit, be sure to demonstrate in your write up cases where your extra credit.