
Polling khstate from K8s API-server every 5sec for khcheck leading to client side throttling and high latency #1061

Comments


This makes sense; repeatedly polling the API server is not optimal. It seems like Kuberhealthy needs a refactor to a reflector cache for … The reason that … Implementing a reflector would stop the API spam, improve the speed of Kuberhealthy, and prevent the issue you are seeing here.
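A reflector keeps a local copy of resources up to date from a watch stream, so reads are served from memory instead of issuing a GET per check every 5 seconds. The stdlib-only sketch below shows the idea under stated assumptions: `KHState`, `Event`, and `StateCache` are hypothetical stand-ins, not Kuberhealthy or client-go types (in client-go this role is played by a reflector feeding a cache store, usually wrapped in an informer).

```go
package main

import (
	"fmt"
	"sync"
)

// EventType mirrors the kinds of notifications a watch delivers (hypothetical names).
type EventType int

const (
	Added EventType = iota
	Modified
	Deleted
)

// KHState is a hypothetical, trimmed-down stand-in for a khstate resource.
type KHState struct {
	Name string
	OK   bool
}

// Event is one change notification, as a watch stream would deliver it.
type Event struct {
	Type  EventType
	State KHState
}

// StateCache holds the latest known state per check; reads never hit the API server.
type StateCache struct {
	mu     sync.RWMutex
	states map[string]KHState
}

func NewStateCache() *StateCache {
	return &StateCache{states: make(map[string]KHState)}
}

// Run applies watch events to the local map until the channel is closed.
func (c *StateCache) Run(events <-chan Event) {
	for ev := range events {
		c.mu.Lock()
		switch ev.Type {
		case Added, Modified:
			c.states[ev.State.Name] = ev.State
		case Deleted:
			delete(c.states, ev.State.Name)
		}
		c.mu.Unlock()
	}
}

// Get returns the cached state for a check, if present.
func (c *StateCache) Get(name string) (KHState, bool) {
	c.mu.RLock()
	defer c.mu.RUnlock()
	s, ok := c.states[name]
	return s, ok
}

func main() {
	cache := NewStateCache()
	events := make(chan Event, 3)
	events <- Event{Type: Added, State: KHState{Name: "dns", OK: true}}
	events <- Event{Type: Modified, State: KHState{Name: "dns", OK: false}}
	events <- Event{Type: Added, State: KHState{Name: "pod-restarts", OK: true}}
	close(events)
	cache.Run(events) // a real reflector would run this in its own goroutine
	s, _ := cache.Get("dns")
	fmt.Println(s.OK) // false
}
```

The API server is contacted only to establish and maintain the watch; every subsequent lookup is a map read under a read lock.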


I just did some work on this. I am refactoring this to use the existing cache for …

@integrii When can we expect a release for this? Also, I would like to contribute to this change if anything is still pending.


I'm trying to understand the code base. Could the StateReflector be passed into external.Check, either instead of or along with the khstate client? I'm looking at this line. That way the …
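Passing the reflector's store into the check, instead of or alongside the khstate client, is simplest if the check depends on a small interface rather than a concrete client. A hypothetical sketch: `StateGetter`, `Check`, `apiGetter`, and `storeGetter` are illustrative names, not Kuberhealthy's actual `external.Check` API.

```go
package main

import "fmt"

// KHState is a hypothetical, trimmed-down stand-in for a khstate resource.
type KHState struct {
	Name string
	OK   bool
}

// StateGetter is a hypothetical interface: anything that can look up a check's state.
type StateGetter interface {
	GetState(name string) (KHState, error)
}

// apiGetter stands in for the khstate API client; each call models one API request.
type apiGetter struct {
	requests int
	backing  map[string]KHState
}

func (a *apiGetter) GetState(name string) (KHState, error) {
	a.requests++ // simulate one round trip to the API server
	s, ok := a.backing[name]
	if !ok {
		return KHState{}, fmt.Errorf("khstate %q not found", name)
	}
	return s, nil
}

// storeGetter stands in for the reflector's local store; lookups are in-memory.
type storeGetter struct {
	store map[string]KHState
}

func (s storeGetter) GetState(name string) (KHState, error) {
	st, ok := s.store[name]
	if !ok {
		return KHState{}, fmt.Errorf("khstate %q not in cache", name)
	}
	return st, nil
}

// Check is a simplified, hypothetical check that depends only on the interface.
type Check struct {
	Name  string
	State StateGetter
}

func (c Check) IsHealthy() bool {
	s, err := c.State.GetState(c.Name)
	return err == nil && s.OK
}

func main() {
	data := map[string]KHState{"dns": {Name: "dns", OK: true}}
	api := &apiGetter{backing: data}
	healthyAPI := Check{Name: "dns", State: api}.IsHealthy()
	healthyCache := Check{Name: "dns", State: storeGetter{store: data}}.IsHealthy()
	fmt.Println(healthyAPI, healthyCache, api.requests) // true true 1
}
```

Because the check only sees `StateGetter`, switching from the API client to the reflector store is a one-line change at construction time, and tests can inject a fake.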


@integrii I did a quick-and-dirty pass at using the state reflector store to cut down on some API requests. It improved throughput a little, but I don't have the numbers handy.


This issue is stale because it has been open 30 days with no activity. Remove stale label or comment on the issue or this will be closed in 15 days.

Currently, kuberhealthy-operator creates a khstate resource for every khcheck, and it polls the K8s API server for the khstate of each khcheck every 5 seconds (see here). This results in a large number of requests to the K8s API server.

We are seeing many `Waited for Xs due to client-side throttling, not priority and fairness` messages in the kuberhealthy-operator pods.

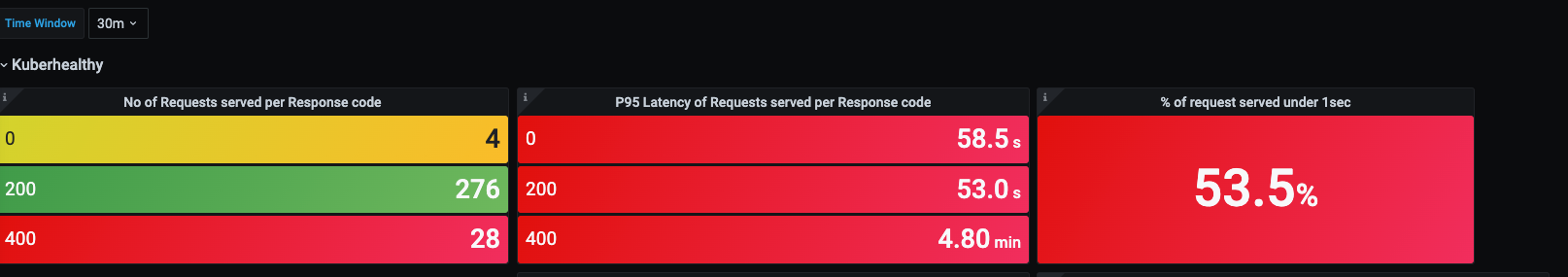

We are also seeing high latency for requests served by the Kuberhealthy server (P95 ≈ 53 s for 2xx responses; only 50% of requests are served in under 1 s). These numbers come from the standard metrics exposed by the Istio sidecar.

Note: fewer than 50 checks are running in the cluster. All of the snapshots above are for the master pod.

@integrii What's the motivation behind storing khstate as a CRD instead of in memory (e.g., as a concurrent hash map)?
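For comparison, an in-memory store built on Go's `sync.Map` (the standard library's concurrent map) might look like the sketch below. `KHState` and `MemoryStateStore` are hypothetical names for illustration, not part of Kuberhealthy.

```go
package main

import (
	"fmt"
	"sync"
)

// KHState is a hypothetical, trimmed-down stand-in for a khstate resource.
type KHState struct {
	Name string
	OK   bool
}

// MemoryStateStore keeps check state only in this process's memory.
type MemoryStateStore struct {
	m sync.Map // name -> KHState; safe for concurrent use
}

func (s *MemoryStateStore) Set(st KHState) { s.m.Store(st.Name, st) }

func (s *MemoryStateStore) Get(name string) (KHState, bool) {
	v, ok := s.m.Load(name)
	if !ok {
		return KHState{}, false
	}
	return v.(KHState), true
}

func main() {
	store := &MemoryStateStore{}
	var wg sync.WaitGroup
	// Many checks report concurrently; no API server round trips are involved.
	for i := 0; i < 50; i++ {
		wg.Add(1)
		go func(i int) {
			defer wg.Done()
			store.Set(KHState{Name: fmt.Sprintf("check-%d", i), OK: i%2 == 0})
		}(i)
	}
	wg.Wait()
	st, ok := store.Get("check-4")
	fmt.Println(st.OK, ok) // true true
}
```

The trade-off is that this state lives in a single process: it is lost when the pod restarts and is not visible to other Kuberhealthy pods, whereas a khstate CRD is persisted in the cluster.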
