Commit


ref(sdk): Remove excessive json.loads spans (#64883)

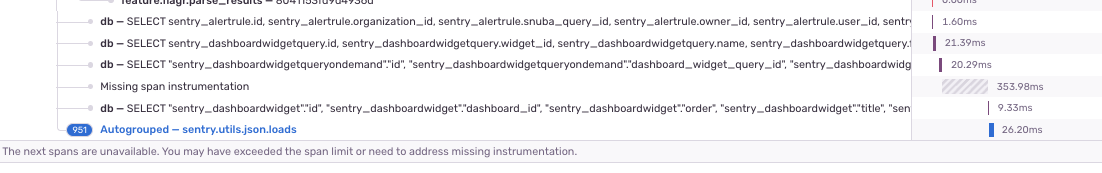

### Summary

Many of our transactions use up their span limit immediately because `json.loads` creates a span for every field call, etc.

I ran the devserver, tried a couple of endpoints, and removed spans for anything I saw spamming. Feel free to re-add a span if you find a Python profile for your transaction that shows `json.loads` taking up a significant amount of time. I'm just trying to get most of the ones that concern me at the moment without opening a bunch of PRs one by one.

In the future we should probably consider moving these over to a metric, since the span offers no additional information, so we can avoid this altogether. At the moment, because json can be called at startup, there is an issue with config not being loaded, so metrics can't be used here; that would need to be resolved first.
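To illustrate the problem described above, here is a minimal, self-contained sketch of how per-call `json.loads` spans can exhaust a transaction's span budget, and how an opt-out avoids it. Everything here is an assumption made for illustration: `start_span` is a toy stand-in for a tracing backend, `SPAN_LIMIT` is an arbitrary small number, and the `skip_trace` flag is a hypothetical name, not necessarily what this commit changes.

```python
import json
from contextlib import contextmanager, nullcontext

# Toy stand-in for a tracing backend (assumption): keeps span names
# until a fixed per-transaction limit is reached, then drops the rest.
SPAN_LIMIT = 5
recorded_spans: list[str] = []


@contextmanager
def start_span(op: str):
    """Hypothetical span helper; silently drops spans past SPAN_LIMIT."""
    if len(recorded_spans) < SPAN_LIMIT:
        recorded_spans.append(op)
    yield


def loads(value: str, skip_trace: bool = False):
    """Parse JSON, optionally without opening a span (hypothetical flag)."""
    span = nullcontext() if skip_trace else start_span("json.loads")
    with span:
        return json.loads(value)


def handle_request(rows: list[str], skip_trace: bool) -> list[str]:
    """Simulate a transaction that decodes many fields, then does real work."""
    recorded_spans.clear()
    for row in rows:
        loads(row, skip_trace=skip_trace)
    # The span we actually care about comes after all the decoding.
    with start_span("db.query"):
        pass
    return list(recorded_spans)


rows = ['{"id": %d}' % i for i in range(10)]
noisy = handle_request(rows, skip_trace=False)  # budget spent on json.loads
quiet = handle_request(rows, skip_trace=True)   # only the useful span remains
```

In this sketch the traced run fills the whole budget with `json.loads` spans and the later `db.query` span is dropped, while the untraced run records only `db.query`. Callers that do see `json.loads` dominating a profile can keep the default and retain the span.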


Showing 6 changed files with 10 additions and 8 deletions.
