Dealing with toxic content is one of the problems websites face today. Quora wants to tackle this problem so that users feel safe sharing knowledge on the platform. In this competition, we are challenged to develop models that identify and flag insincere questions, which would help create more scalable methods for detecting toxic and misleading content.

- A 2-hour kernel run-time limit, so the key is to make models converge quickly while keeping them robust.

- Without further text preprocessing, only about 25% of the vocabulary has a corresponding pretrained embedding on average. Many words are not present during the training phase.

- The "real" (private) test set is about 6 times larger than the public one, so I cannot fully trust the public LB score and should focus more on local performance.

Training Samples: 1,306,122

- Sincere questions: 1,225,312 (93.81%)

- Insincere questions: 80,810 (6.19%)

Test Samples: 375,806 (28.77% of train samples)

- Public Test: 56,000 (13.30%)

- Private Test: 325,806 (86.70%)

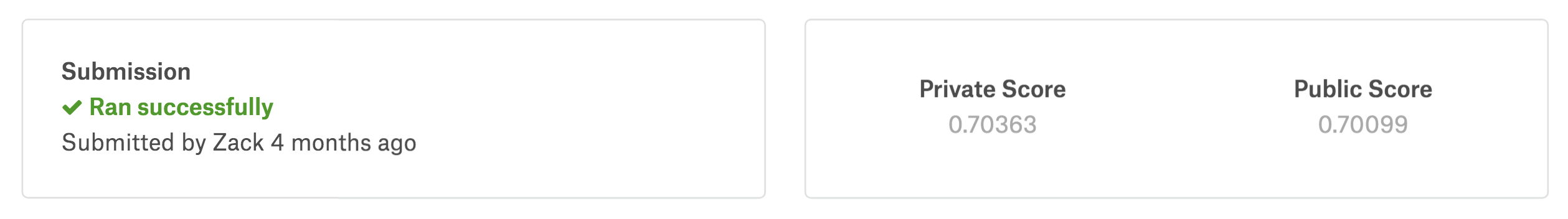

Predictions were evaluated by F1 score. Only 56,000 test samples were used to compute the public LB score, versus 325,806 for the private LB score. Avoiding a large leaderboard shake-up was therefore an important consideration.
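F1 is computed on hard 0/1 predictions, so the probability cut-off matters. A common practice, assumed here and not described in this write-up, is to choose that cut-off on a local validation fold rather than on the public LB. Below is a minimal standard-library sketch. `f1_score` and `best_threshold` are hypothetical helpers, and the 0.01-step grid is an arbitrary choice.

```python
def f1_score(y_true, y_pred):
    """F1 = 2 * precision * recall / (precision + recall) for the positive (insincere) class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def best_threshold(y_true, probs, candidates=None):
    """Scan candidate cut-offs on a validation set and keep the first one with the highest F1."""
    if candidates is None:
        candidates = [i / 100 for i in range(1, 100)]
    best_t, best_f1 = 0.5, -1.0
    for t in candidates:
        preds = [1 if p >= t else 0 for p in probs]
        score = f1_score(y_true, preds)
        if score > best_f1:
            best_t, best_f1 = t, score
    return best_t, best_f1
```

The threshold found on local validation would then be applied to the test probabilities. A finer or coarser grid is a speed/precision trade-off.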

- Lowercase Letters and Remove Punctuation

- Clean Numbers

- Fix Misspellings

- Clean Contractions
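The four cleaning steps can be sketched with plain regex and dictionary lookups. `CONTRACTIONS` and `MISSPELLINGS` are tiny hypothetical tables (real ones are far larger), and mapping every digit to `#` is one assumed way to "clean numbers". Contractions are expanded before punctuation is stripped, because removing apostrophes first would break the lookup.

```python
import re

# Hypothetical lookup tables, kept tiny for illustration only.
CONTRACTIONS = {"can't": "cannot", "won't": "will not", "it's": "it is", "don't": "do not"}
MISSPELLINGS = {"colour": "color", "qoura": "quora", "recieve": "receive"}


def clean_text(text):
    # 1. Lowercase everything.
    text = text.lower()
    # 2. Expand contractions while apostrophes are still present (whole words only).
    for short, full in CONTRACTIONS.items():
        text = re.sub(r"\b" + re.escape(short) + r"\b", full, text)
    # 3. Clean numbers: replace every digit with '#', so "2019" becomes "####".
    text = re.sub(r"\d", "#", text)
    # 4. Remove punctuation: anything that is not a word character, whitespace or '#'.
    text = re.sub(r"[^\w\s#]", " ", text)
    # 5. Fix misspellings word by word; split/join also collapses extra whitespace.
    return " ".join(MISSPELLINGS.get(w, w) for w in text.split())
```

For example, `clean_text("I can't recieve 2019 Qoura updates!!")` gives `"i cannot receive #### quora updates"`.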

After these steps, the proportion of the vocabulary with a pretrained embedding increased from 25% to 64%.
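One way to measure that proportion is sketched below. It assumes `embeddings_index` is a dict mapping each word to its vector, for example loaded from one of the embedding files, and the helper name is hypothetical. The function reports both the share of distinct words found and the share of all tokens found.

```python
from collections import Counter


def vocab_coverage(sentences, embeddings_index):
    """Return (share of distinct words with an embedding, share of all tokens with an embedding)."""
    vocab = Counter(word for s in sentences for word in s.split())
    found = {w: c for w, c in vocab.items() if w in embeddings_index}
    vocab_cov = len(found) / len(vocab)
    text_cov = sum(found.values()) / sum(vocab.values())
    return vocab_cov, text_cov
```

Running it before and after cleaning is a quick way to check whether a preprocessing step actually helps embedding lookup.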

- Sentence Length

- Number of Capital Letters

- Number of Capital Letters/Sentence Length

- Number of Words

- Number of Unique Words

- Number of Unique Words/Number of Words

- Number of Sensitive Words

- Number of Toxic Words

- Standardize Features

- Tokenize Sentences and Pad Sequences

- Shuffling
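The feature and input-preparation steps can be sketched in plain Python. This is an illustration, not the code used in the competition. `SENSITIVE_WORDS` and `TOXIC_WORDS` are hypothetical placeholder lists, and `texts_to_padded_ids` pads and truncates at the front, mirroring the defaults of Keras' `pad_sequences`.

```python
import random
import statistics
from collections import Counter

# Hypothetical word lists: the actual sensitive/toxic vocabularies are not given in this write-up.
SENSITIVE_WORDS = {"religion", "race", "gender"}
TOXIC_WORDS = {"stupid", "idiot", "hate"}


def extract_features(sentence):
    """Hand-crafted features, computed on the raw (not lowercased) question."""
    words = sentence.split()
    n_chars, n_words, n_unique = len(sentence), len(words), len(set(words))
    n_caps = sum(1 for ch in sentence if ch.isupper())
    lowered = [w.lower().strip("?!.,") for w in words]
    return [
        n_chars,                                    # sentence length (characters)
        n_caps,                                     # number of capital letters
        n_caps / n_chars if n_chars else 0.0,       # capitals / sentence length
        n_words,                                    # number of words
        n_unique,                                   # number of unique words
        n_unique / n_words if n_words else 0.0,     # unique words / words
        sum(w in SENSITIVE_WORDS for w in lowered), # number of sensitive words
        sum(w in TOXIC_WORDS for w in lowered),     # number of toxic words
    ]


def standardize(rows):
    """Z-score every feature column: (x - mean) / std, using the population std."""
    cols = list(zip(*rows))
    # `or 1.0` avoids dividing by zero for constant columns.
    stats = [(statistics.mean(c), statistics.pstdev(c) or 1.0) for c in cols]
    return [[(x - mu) / sd for x, (mu, sd) in zip(row, stats)] for row in rows]


def build_word_index(sentences):
    """Map words to integer ids, most frequent first; id 0 is reserved for padding."""
    counts = Counter(w for s in sentences for w in s.split())
    return {w: i + 1 for i, (w, _) in enumerate(counts.most_common())}


def texts_to_padded_ids(sentences, word_index, maxlen):
    """Words -> ids (unknown words dropped), then pad/truncate at the front to maxlen."""
    out = []
    for s in sentences:
        ids = [word_index[w] for w in s.split() if w in word_index][-maxlen:]
        out.append([0] * (maxlen - len(ids)) + ids)
    return out


def shuffle_together(X, y, seed=2019):
    """Shuffle samples and labels with the same permutation."""
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    return [X[i] for i in idx], [y[i] for i in idx]
```

In practice, the standardization mean and std would be computed on the training set only and reused for the test set.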

4 types of pretrained embeddings were provided:

- GoogleNews-vectors-negative300

- glove.840B.300d

- paragram_300_sl999

- wiki-news-300d-1M

Note: Words without pretrained embeddings are randomly initialized using the mean and standard deviation of that embedding matrix.
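That initialization can be sketched in plain Python. The sketch assumes `embeddings_index` maps words to vectors and `word_index` maps words to ids starting at 1. Every row is first drawn from a normal distribution with the mean and standard deviation of all pretrained values, and rows for known words are then overwritten with their pretrained vectors. Row 0 (padding) therefore also stays random here. The function name and seed are illustrative.

```python
import random
import statistics


def build_embedding_matrix(word_index, embeddings_index, dim, seed=0):
    """Random-normal rows (matching the pretrained mean/std), overwritten for words that have a vector."""
    all_values = [v for vec in embeddings_index.values() for v in vec]
    mu = statistics.mean(all_values)
    sd = statistics.pstdev(all_values)
    rng = random.Random(seed)
    n_rows = len(word_index) + 1  # +1 because id 0 is reserved for padding
    matrix = [[rng.gauss(mu, sd) for _ in range(dim)] for _ in range(n_rows)]
    for word, i in word_index.items():
        vec = embeddings_index.get(word)
        if vec is not None:
            matrix[i] = list(vec)  # copy the pretrained vector
    return matrix
```

Matching the pretrained statistics keeps the random vectors on the same scale as the real ones, so they do not dominate early training.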

After several experiments, I found that combining GloVe and Paragram achieved the best performance. I used 2 kinds of combination in this competition:

- Weighted Average

(Ensembling embeddings is a feasible way to improve performance: https://arxiv.org/pdf/1804.07983.pdf)

- Concatenation

(This gives 600 dimensions, so training takes much longer, but it is good for training diverse models.)
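Both combinations work row by row on two embedding matrices built with the same word index. Here is a minimal sketch. The 0.7/0.3 weighting is a hypothetical value, since the weights actually used are not stated in this write-up.

```python
def weighted_average(vec_a, vec_b, weight_a=0.7):
    """Element-wise weight_a * a + (1 - weight_a) * b; dimensionality is unchanged (e.g. 300)."""
    if len(vec_a) != len(vec_b):
        raise ValueError("weighted average needs vectors of equal length")
    return [weight_a * a + (1 - weight_a) * b for a, b in zip(vec_a, vec_b)]


def concatenate(vec_a, vec_b):
    """Place the two vectors end to end (e.g. 300 + 300 = 600 dimensions)."""
    return list(vec_a) + list(vec_b)


def combine_matrices(glove, paragram, mode="average", weight_a=0.7):
    """Combine two embedding matrices whose rows are aligned by the same word index."""
    if mode == "average":
        return [weighted_average(g, p, weight_a) for g, p in zip(glove, paragram)]
    if mode == "concat":
        return [concatenate(g, p) for g, p in zip(glove, paragram)]
    raise ValueError(f"unknown mode: {mode}")
```

The average keeps the model input at 300 dimensions. Concatenation doubles it, which is why training takes longer.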

I adopted 4 different architectures:

- LSTM + GRU + CapsNet

- GRU + 2 Poolings (Max and Avg)

- LSTM + Attention + 2 Poolings (Max and Avg)

- CNN with filter sizes 3, 4, 5, 10
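The pooling heads used by the second and third architectures can be sketched in plain Python. They operate on a list of per-timestep hidden vectors from an RNN. This covers only the pooling/attention step, not the full networks; the recurrent layers, CapsNet and CNN are omitted. The attention shown is a simple tanh-scored variant without bias, assumed here for illustration. In a real model, `w` would be a learned parameter.

```python
import math


def max_avg_pooling(hidden_states):
    """hidden_states: T vectors of size H. Returns [max over time] + [mean over time] (2H values)."""
    T = len(hidden_states)
    max_pool = [max(col) for col in zip(*hidden_states)]
    avg_pool = [sum(col) / T for col in zip(*hidden_states)]
    return max_pool + avg_pool


def attention_pooling(hidden_states, w):
    """score_t = tanh(h_t . w); weights = softmax(scores); output = sum_t weight_t * h_t."""
    scores = [math.tanh(sum(h_i * w_i for h_i, w_i in zip(h, w))) for h in hidden_states]
    m = max(scores)                          # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    weights = [e / z for e in exps]
    dim = len(hidden_states[0])
    return [sum(weights[t] * hidden_states[t][d] for t in range(len(hidden_states)))
            for d in range(dim)]
```

In the third architecture, the attention output and the two pooled vectors would typically be concatenated before the dense layers.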