
WIP: Add Gmail takeout mbox import #5


Also, @simonw I created a test based off the existing tests. I think it's working correctly.

I noticed that @simonw is using black for formatting. I ran black on my additions in this PR.

Thanks! I requested my Gmail export from Takeout - once that arrives I'll test it against this and then merge the PR.

Wow, my mbox is a 10.35 GB download!

The command takes quite a while to start running, presumably because this line causes it to have to scan the WHOLE file in order to generate a count: google-takeout-to-sqlite/google_takeout_to_sqlite/utils.py Lines 66 to 67 in a3de045

I'm fine with waiting though. It's not like this is a command people run every day - and without that count we can't show a progress bar, which seems pretty important for a process that takes this long.

I'm not sure if it would work, but there is an alternative pattern for showing a progress bar against a really large file that I've used in https://github.com/dogsheep/healthkit-to-sqlite/blob/3eb2b06bfe3b4faaf10e9cf9dfcb28e3d16c14ff/healthkit_to_sqlite/cli.py#L24-L57 and https://github.com/dogsheep/healthkit-to-sqlite/blob/3eb2b06bfe3b4faaf10e9cf9dfcb28e3d16c14ff/healthkit_to_sqlite/utils.py#L4-L19 (the It can be a bit of a convoluted pattern, and I'm not at all sure it would work for
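A minimal sketch of that kind of pattern, assuming the idea is to size the progress bar by bytes in the file rather than by message count, so nothing has to be scanned up front. `ProgressFile` and `iter_lines_with_progress` are hypothetical names for illustration, not code from either repository:

```python
class ProgressFile:
    """Wrap a binary file object and report how many bytes each read consumed."""

    def __init__(self, fileobj, on_progress):
        self._fileobj = fileobj
        self._on_progress = on_progress  # called with a byte count after each read

    def read(self, size=-1):
        chunk = self._fileobj.read(size)
        self._on_progress(len(chunk))
        return chunk

    def readline(self, size=-1):
        line = self._fileobj.readline(size)
        self._on_progress(len(line))
        return line

    def __iter__(self):
        return self

    def __next__(self):
        line = self.readline()
        if not line:
            raise StopIteration
        return line


def iter_lines_with_progress(path, on_progress):
    """Yield raw lines from path, reporting bytes consumed as they are read."""
    with open(path, "rb") as fp:
        for line in ProgressFile(fp, on_progress):
            yield line
```

The callback could be the `update` method of a `click.progressbar` created with `length=os.path.getsize(path)`. This would not plug straight into `mailbox.mbox`, which takes a path rather than a file object, so it only helps if the file is parsed by hand.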

I got 9 warnings that look like this: It would be useful if those warnings told me the message ID (or similar) of the affected message so I could grep for it in the |

|

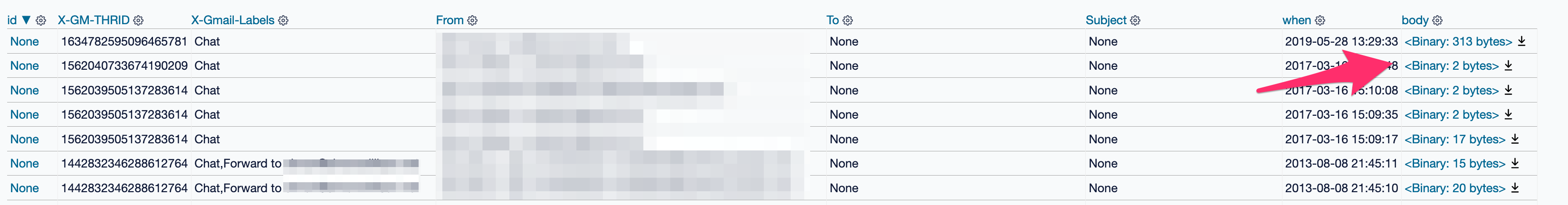

It looks like the If I It would be great if we could store the |

|

Confirmed: removing the |

|

Looks like you're doing this:

    elif message.get_content_type() == "text/plain":
        body = message.get_payload(decode=True)

So presumably that decodes to a unicode string? I imagine the reason the column is a

    """
    Import Gmail mbox from google takeout
    """
    db["mbox_emails"].upsert_all(


A fix for the problem I had where my body column ended up being a BLOB rather than text would be to explicitly create the table first.

You can do that like so:

    if not db["mbox_emails"].exists():
        db["mbox_emails"].create({
            "id": str,
            "X-GM-THRID": str,
            "X-Gmail-Labels": str,
            "From": str,
            "To": str,
            "Subject": str,
            "when": str,
            "body": str,
        }, pk="id")

I had to upgrade to the latest sqlite-utils for this to work because prior to sqlite-utils 2.0 the table.exists property was a boolean not a method.
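A complementary option, sketched here under the assumption that the BLOB column comes from `get_payload(decode=True)` returning `bytes`: decode the payload to `str` before inserting it. `body_as_text` is a hypothetical helper, not part of this PR, and falling back to UTF-8 with replacement characters is an illustrative choice rather than a recommendation:

```python
from email import message_from_bytes


def body_as_text(message):
    """Return the payload of a non-multipart message as str."""
    payload = message.get_payload(decode=True)  # bytes, or None for multipart
    if payload is None:
        return ""
    # Use the charset declared in Content-Type, if any.
    charset = message.get_content_charset() or "utf-8"
    try:
        return payload.decode(charset, errors="replace")
    except LookupError:
        # The message declared a charset name Python does not know.
        return payload.decode("utf-8", errors="replace")


if __name__ == "__main__":
    raw = b'Content-Type: text/plain; charset="utf-8"\n\nhello\n'
    print(type(body_as_text(message_from_bytes(raw))))
```

With the body stored as `str`, the explicit `"body": str` column above and the inserted values would agree on type.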

The wait is from Python loading the mbox file. This happens regardless of whether you're getting the length of the mbox. The mbox module is on the slow side. It is possible to do one's own parsing of the mbox, but I kind of wanted to avoid doing that.

Ah, that's good to know. I think explicitly creating the tables will be a great improvement. I'll add that. Also, I noticed after I opened this PR that the Thanks for the feedback. I should have time tomorrow to put together some improvements.

I added this code to output a message ID on errors:

      print("Errors: {}".format(num_errors))
      print(traceback.format_exc())
    + print("Message-Id: {}".format(email.get("Message-Id", "None")))
      continue

Having found a message ID that had an error, I ran this command to see the context: This was for the following error: Here's what I spotted in the So could it be that

Solution could be to pre-process that string by splitting on

I imported my 10GB mbox with 750,000 emails in it, ran this tool (with a hacked fix for the blob column problem) - and now a search that returns 92 results takes 25.37ms! This is fantastic.

I just tried to run this on a small VPS instance with 2GB of memory and it crashed out of memory while processing a 12GB mbox from Takeout. Is it possible to stream the emails to sqlite instead of loading it all into memory and upserting at once?

@maxhawkins a limitation of the Python mbox module is it loads the entire mbox into memory. I did find another approach to this problem that didn't use the builtin Python mbox module and created a generator so that it didn't have to load the whole mbox into memory. I was hoping to use standard library modules, but this might be a good reason to investigate that approach a bit more. My worry is making sure a custom processor handles all the ins and outs of the mbox format correctly.

Hm. As I'm writing this, I thought of something. I think I can parse each message one at a time, and then use an mbox function to load each message using the Python mbox module. That way the mbox module can still deal with the specifics of the mbox format, but I can use a generator.

I'll give that a try. Thanks for the feedback @maxhawkins and @simonw. @simonw can we hold off on merging this until I can test this new approach?

Any updates?

How does this commit look? maxhawkins@72802a8 It seems that Takeout's mbox format is pretty simple, so we can get away with just splitting the file on lines beginning with I was able to load a 12GB takeout mbox without the program using more than a couple hundred MB of memory during the import process. It does make us lose the progress bar, but maybe I can add that back in a later commit.
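A rough sketch of a streaming reader in that spirit, not the code from the linked commit. It assumes the conventional mbox separator, a line starting with `From ` (with a trailing space), and hands each buffered message to the standard library `email` parser. `iter_mbox_messages` is a hypothetical name:

```python
import email


def iter_mbox_messages(path):
    """Yield one email.message.Message per mbox entry, reading line by line.

    Only the lines of the current message are held in memory. Assumption:
    body lines are never left as a bare "From " line (some mbox variants
    escape them as ">From "); this sketch does not undo that escaping.
    """
    buffer = []
    with open(path, "rb") as fp:
        for line in fp:
            if line.startswith(b"From "):
                # Separator line: flush the previous message, if any.
                if buffer:
                    yield email.message_from_bytes(b"".join(buffer))
                    buffer = []
                continue
            buffer.append(line)
    # Flush the final message, which has no separator after it.
    if buffer:
        yield email.message_from_bytes(b"".join(buffer))
```

Because this reads raw lines from an open file, a byte-based progress bar could in principle be layered on top by counting `len(line)` as each line is consumed.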

One thing I noticed is this importer doesn't save attachments along with the body of the emails. It would be nice if those got stored as blobs in a separate attachments table so attachments can be included while fetching search results.

I added a follow-up commit that deals with emails that don't have a

Hi @maxhawkins, I'm sorry, I haven't had any time to work on this. I'll have some time tomorrow to test your commits. I think they look great. I'm great with your commits superseding my initial attempt here.

I did some investigation into this issue and made a fix here. The problem was that some messages (like gchat logs) don't have a @simonw While looking into this I found something unexpected about how sqlite_utils handles upserts if the pkey column is

WIP

This PR adds the ability to import emails from a Gmail mbox export from Google Takeout.

This is my first PR to a datasette/dogsheep repo. I've tested this on my personal Google Takeout mbox with ~520,000 emails going back to 2004. This took around 20 minutes to process.

To provide some feedback on the progress of the import I added the "rich" Python module. I'm happy to remove that if adding a dependency is discouraged. However, I think it makes a nice addition to give feedback on the progress of a long import.

Do we want to log emails that have errors when trying to import them?

Dealing with encodings in emails is a bit tricky. I'm very open to feedback on how to deal with those better, as well as any other feedback for improvements.