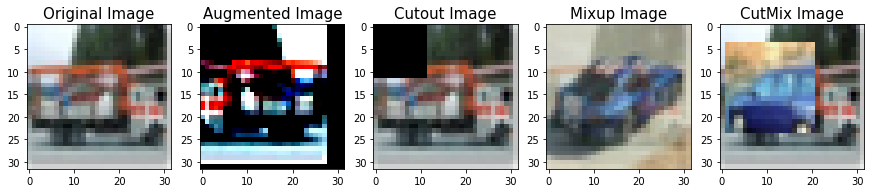

This project analyses how various VRM techniques (applied to teacher models) affect the generalization performance of a student model. The VRM techniques being analysed here are:

Work accepted at the ICML-UDL Workshop, 2020

conda create -n ml

conda install --name ml --file spec-file.txt

conda activate ml

Train a set of teacher models with these VRM techniques.
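As an illustration of what a VRM-style augmentation does to a training example, here is a minimal sketch of mixup, one well-known VRM technique. This section does not say whether mixup is among the techniques analysed in this project, so treat the choice as an assumption; the helper names (`one_hot`, `mixup_pair`) and the Beta parameter `0.4` are hypothetical and not taken from this repository.

```python
import random


def one_hot(label, num_classes):
    """Return a one-hot label vector as a list of floats."""
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


def mixup_pair(x_a, y_a, x_b, y_b, lam):
    """Convex combination of two inputs and of their label vectors."""
    x = [lam * a + (1.0 - lam) * b for a, b in zip(x_a, x_b)]
    y = [lam * a + (1.0 - lam) * b for a, b in zip(y_a, y_b)]
    return x, y


# Mixing coefficient drawn from a symmetric Beta distribution.
# The value 0.4 is an illustrative assumption, not a project setting.
rng = random.Random(0)
lam = rng.betavariate(0.4, 0.4)

# Toy "images" with three pixels each and ten classes.
x_mix, y_mix = mixup_pair(
    [0.0, 1.0, 0.5], one_hot(2, 10),
    [1.0, 0.0, 0.5], one_hot(7, 10),
    lam,
)
```

The mixed label `y_mix` is a soft label that still sums to 1, so the teacher is trained on points in the vicinity of the original examples rather than on the examples alone.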

Train a student model using the dark knowledge (the softened output distributions) from the teacher models trained in Step 2.
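The distillation step can be sketched as follows. This is a minimal pure-Python version of the standard temperature-scaled distillation loss, written for a single example; it is not necessarily the loss used in this repository, and the function names and the default `temperature` and `alpha` values are assumptions chosen for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax, shifted by the max for numerical stability."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions given as lists."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def distillation_loss(student_logits, teacher_logits, label,
                      temperature=4.0, alpha=0.9):
    """Weighted sum of a soft (teacher) term and a hard (ground-truth) term."""
    soft_teacher = softmax(teacher_logits, temperature)
    soft_student = softmax(student_logits, temperature)
    # The T^2 factor keeps the soft-term gradient scale comparable across temperatures.
    soft_term = (temperature ** 2) * kl_divergence(soft_teacher, soft_student)
    # Ordinary cross-entropy against the ground-truth class at temperature 1.
    hard_term = -math.log(softmax(student_logits)[label] + 1e-12)
    return alpha * soft_term + (1.0 - alpha) * hard_term


# Toy 3-class example: the teacher prefers class 2, the student prefers class 0.
loss = distillation_loss([3.0, 2.0, 1.0], [1.0, 2.0, 3.0], label=2)
```

A higher temperature flattens the teacher distribution, which exposes more of the relative probabilities the teacher assigns to incorrect classes.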

Use different datasets and performance metrics to analyse the generalization performance of the different student models. To measure generalization, we evaluate the models on the unseen CIFAR test set. We also consider the following datasets:

- CIFAR 10.1 v6: Small natural variations in the dataset

- CINIC (ImageNet Fold): Distributional shift in images

- CIFAR 10H: The CIFAR test set with human labels, which can help us analyse prediction structure.
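The evaluation on the datasets above can be sketched with two simple metrics: top-1 accuracy against hard labels, and cross-entropy against per-image label distributions such as the human labels of CIFAR 10H. This is a hedged sketch on toy data; the metric choice, the function names, and the 3-class numbers are assumptions for illustration, not the project's actual evaluation code or results.

```python
import math


def accuracy(pred_probs, labels):
    """Fraction of examples whose most probable class matches the hard label."""
    correct = 0
    for probs, label in zip(pred_probs, labels):
        predicted = max(range(len(probs)), key=probs.__getitem__)
        correct += int(predicted == label)
    return correct / len(labels)


def soft_cross_entropy(pred_probs, target_dists, eps=1e-12):
    """Mean cross-entropy between predictions and soft target distributions."""
    total = 0.0
    for probs, target in zip(pred_probs, target_dists):
        total += -sum(t * math.log(p + eps) for p, t in zip(probs, target))
    return total / len(pred_probs)


# Toy 3-class predictions from a hypothetical student model.
preds = [[0.7, 0.2, 0.1], [0.1, 0.6, 0.3], [0.3, 0.3, 0.4]]
hard_labels = [0, 1, 0]
# Toy stand-in for per-image human label distributions.
human_dists = [[0.9, 0.1, 0.0], [0.0, 0.8, 0.2], [0.5, 0.1, 0.4]]

acc = accuracy(preds, hard_labels)
human_ce = soft_cross_entropy(preds, human_dists)
```

Accuracy only checks the top prediction, while the cross-entropy against human label distributions also rewards a model for spreading its remaining probability mass over the classes that people find plausible.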