Simple, working PyTorch implementation of StyleGAN2, based on https://arxiv.org/abs/1912.04958

Below are some flowers that do not exist.

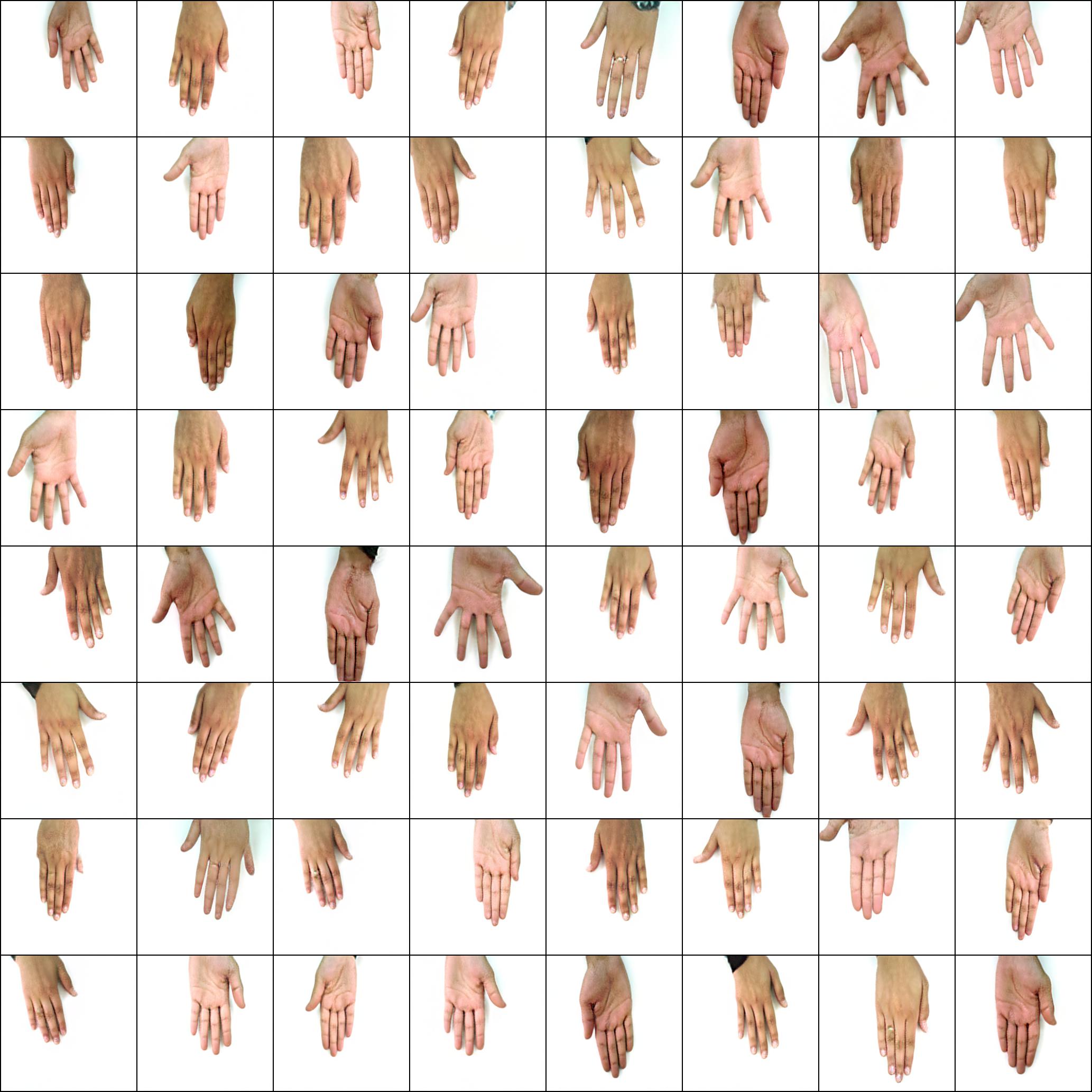

Neither do these hands.

Nor these cities.

Check GPU

!nvidia-smi

Mount Google Drive

from google.colab import drive

drive.mount('/content/drive')

!pip install torch==1.6.0

!pip install torchvision

!pip install stylegan2_pytorch

# !git clone https://github.com/lucidrains/stylegan2-pytorch

!git clone https://github.com/cwvisuals/StyleGAN2

cd /content/stylegan2-pytorch

cd /content/stylegan2-pytorch/stylegan2_pytorch

!pwd

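%ls

Then start training by pointing the CLI at a folder of images:

$ stylegan2_pytorch --data /path/to/images

That's it. Sample images will be saved to `results/default` and models will be saved periodically to `models/default`.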
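To see which checkpoints exist (for example, before choosing a number for `--load-from`), a small helper can scan the models folder. This is a minimal sketch: the `model_<number>.pt` naming inside `models/<project name>` is an assumption about the library's layout, so check your own `models` folder first, and `list_checkpoints` is a hypothetical helper, not part of `stylegan2_pytorch`.

```python
import re
from pathlib import Path
from typing import List

# Assumed naming scheme: models/<name>/model_<number>.pt (verify against your folder)
CHECKPOINT_PATTERN = re.compile(r"^model_(\d+)\.pt$")


def list_checkpoints(models_dir: str = "models", name: str = "default") -> List[int]:
    """Return the checkpoint numbers found for a project, sorted ascending.

    Hypothetical helper; it only reads file names and never loads a model.
    """
    folder = Path(models_dir) / name
    if not folder.is_dir():
        return []
    numbers = []
    for path in folder.iterdir():
        match = CHECKPOINT_PATTERN.match(path.name)
        if match:
            numbers.append(int(match.group(1)))
    # Sort numerically so that 10 comes after 2
    return sorted(numbers)


if __name__ == "__main__":
    found = list_checkpoints()
    print("checkpoints:", found)
    if found:
        print("latest:", found[-1])
```

The numeric sort matters: sorting the file names as strings would put `model_10.pt` before `model_2.pt`.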

To continue training, drop the `.pt` model file into `/content/stylegan2-pytorch/stylegan2_pytorch/models/sg2_ga`.

Edit `/content/stylegan2-pytorch/bin/stylegan2_pytorch` to change the output file size, number of runs, etc.

You can specify the name of your project with `--name`:

$ stylegan2_pytorch --data /path/to/images --name my-project-name

You can also specify where intermediate results and model checkpoints should be stored:

$ stylegan2_pytorch --data /path/to/images --name my-project-name --results_dir /path/to/results/dir --models_dir /path/to/models/dir

By default, if training gets cut off, it will automatically resume from the last checkpoint file. If you want to restart with new settings, just add the `--new` flag:

$ stylegan2_pytorch --new --data /path/to/images --name my-project-name --image-size 512 --batch-size 1 --gradient-accumulate-every 16 --network-capacity 10

Once you have finished training, you can generate images from your latest checkpoint like so:

$ stylegan2_pytorch --generate

To generate a video of an interpolation between two random points in latent space:

$ stylegan2_pytorch --generate-interpolation

To save each individual frame of the interpolation:

$ stylegan2_pytorch --generate-interpolation --save-frames

If a previous checkpoint contained a better generator (which often happens, as generators start degrading towards the end of training), you can load from that checkpoint with another flag:

$ stylegan2_pytorch --generate --load-from {checkpoint number}

In the past, GANs needed a lot of data to learn to generate well. For example, the faces model was trained on 70k high-quality images from Flickr.

However, in May 2020, researchers all across the world independently converged on a simple technique that reduces that number to as low as 1-2k. That simple idea was to differentiably augment all images, generated or real, going into the discriminator during training.

If the augmentation probability is low enough, the augmentations will not 'leak' into the generated images.
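The key detail is that the same random augmentation policy is applied to real and generated images alike before the discriminator sees them. The sketch below shows only that control flow in pure Python, on toy grayscale images stored as lists of rows; the two transforms (horizontal flip and a one-pixel shift) are illustrative choices, not necessarily the augmentations the library uses. Both only rearrange or pad pixels, which is why a tensor version of them lets gradients flow back to the generator.

```python
import random
from typing import Callable, List

Image = List[List[float]]  # toy grayscale image: a list of pixel rows


def flip_horizontal(img: Image) -> Image:
    # Reverse each row: a pure rearrangement of pixels
    return [list(reversed(row)) for row in img]


def translate_right(img: Image, shift: int = 1) -> Image:
    # Shift pixels to the right, padding with zeros on the left
    width = len(img[0])
    shift = min(shift, width)
    return [[0.0] * shift + row[: width - shift] for row in img]


AUGMENTATIONS: List[Callable[[Image], Image]] = [flip_horizontal, translate_right]


def maybe_augment(img: Image, prob: float, rng: random.Random) -> Image:
    """With probability `prob`, apply one randomly chosen augmentation."""
    if rng.random() < prob:
        return rng.choice(AUGMENTATIONS)(img)
    return img


def discriminator_inputs(real: List[Image], fake: List[Image], prob: float, seed: int = 0):
    # The same policy is applied to BOTH real and generated images, so the
    # discriminator cannot separate them by the presence of augmentation alone.
    rng = random.Random(seed)
    real_aug = [maybe_augment(img, prob, rng) for img in real]
    fake_aug = [maybe_augment(img, prob, rng) for img in fake]
    return real_aug, fake_aug
```

With `prob=0.0` the discriminator sees the images unchanged; with a moderate value such as the `0.25` used below, most images still pass through untouched, which is what keeps the augmentations from leaking into the generator's output.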

In a low-data setting, you can enable this feature with a simple flag:

# find a suitable probability between 0 and 0.7 at most

$ stylegan2_pytorch --data ./data --aug-prob 0.25

This framework also lets you add an efficient form of self-attention to designated layers of the discriminator (and the symmetric layers of the generator), which can greatly improve results. The more attention you can afford, the better!
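For intuition about what an attention layer computes, here is plain scaled dot-product self-attention over spatial positions in pure Python. This is the standard quadratic-cost baseline, not the efficient variant the text refers to, and it simplifies by using the features themselves as queries, keys and values (real layers learn separate projections). The quadratic loop over all pairs of positions is exactly why an efficient form matters at higher resolutions.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(features: List[Vector]) -> List[Vector]:
    """Scaled dot-product self-attention with identity projections (a simplification).

    `features` holds one feature vector per spatial position.
    """
    dim = len(features[0])
    scale = 1.0 / math.sqrt(dim)
    outputs = []
    for query in features:
        # Similarity of this position to every position (including itself)
        scores = [scale * sum(q * k for q, k in zip(query, key)) for key in features]
        weights = softmax(scores)
        # Each output is a weighted average of all positions' values
        outputs.append([sum(w * value[d] for w, value in zip(weights, features)) for d in range(dim)])
    return outputs
```

Because every output is a weighted average over all positions, each location can draw on information from anywhere in the feature map, something a single convolution cannot do.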

# add self attention after the output of layer 1

$ stylegan2_pytorch --data ./data --attn-layers 1

# add self attention after the output of layers 1 and 2

# do not put a space after the comma in the list!

$ stylegan2_pytorch --data ./data --attn-layers [1,2]

Training on transparent images

$ stylegan2_pytorch --data ./transparent/images/path --transparent

Using half precision for greater memory savings

$ stylegan2_pytorch --data ./data --image-size 256 --fp16

The more GPU memory you have, the bigger and better the image generation will be. Nvidia recommended having up to 16GB for training 1024x1024 images. If you have less than that, there are a couple of settings you can play with so that the model fits:

$ stylegan2_pytorch --data /path/to/data \
--batch-size 3 \
--gradient-accumulate-every 5 \
--network-capacity 16

- Batch size - You can decrease the `batch-size` down to 1, but you should increase the `gradient-accumulate-every` correspondingly so that the mini-batch the network sees is not too small. This may be confusing to a layperson, so I'll think about how I would automate the choice of `gradient-accumulate-every` going forward.

- Network capacity - You can decrease the neural network capacity to lessen the memory requirements. Just be aware that this has been shown to degrade generation performance.
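Why trading `--batch-size` for `--gradient-accumulate-every` works can be checked on a toy problem. The sketch below uses a one-parameter linear model with a mean-squared-error loss as a stand-in for the network (all names are hypothetical, and the library's real training loop is more involved). It shows that summing the gradients of equal-sized micro-batches, each scaled by `1 / gradient-accumulate-every`, reproduces the gradient of one large batch of size `batch-size * gradient-accumulate-every`.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (input x, target y)


def mse_grad(w: float, batch: List[Sample]) -> float:
    # Derivative with respect to w of mean((w * x - y) ** 2) over the batch
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def accumulated_grad(w: float, data: List[Sample], batch_size: int, accumulate_every: int) -> float:
    """Accumulate gradients over `accumulate_every` micro-batches of `batch_size` samples."""
    assert len(data) == batch_size * accumulate_every
    total = 0.0
    for i in range(accumulate_every):
        micro = data[i * batch_size:(i + 1) * batch_size]
        # Scale each micro-batch gradient so the sum equals the full-batch mean
        total += mse_grad(w, micro) / accumulate_every
    return total


def effective_batch_size(batch_size: int, accumulate_every: int) -> int:
    return batch_size * accumulate_every


if __name__ == "__main__":
    data = [(float(i), 2.0 * i + 1.0) for i in range(15)]
    print(mse_grad(0.5, data), accumulated_grad(0.5, data, batch_size=3, accumulate_every=5))
```

By this arithmetic, `--batch-size 3 --gradient-accumulate-every 5` behaves like an effective batch of 15 and `--batch-size 1 --gradient-accumulate-every 16` like a batch of 16, while only one micro-batch needs to be held in GPU memory at a time.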

Below are some steps which may be helpful for deployment using Amazon Web Services. To use them, you will have to provision a GPU-backed EC2 instance. An appropriate instance type would be from the p2 or p3 series. I (iboates) tried a p2.xlarge (the cheapest option) and it was quite slow, slower in fact than using Google Colab. More powerful instance types may be better, but they are more expensive. You can read more about them here.

- Archive your training data and upload it to an S3 bucket

- Provision your EC2 instance (I used an Ubuntu AMI)

- Log into your EC2 instance via SSH

- Install the AWS CLI client and configure it:

sudo snap install aws-cli --classic

aws configure

You will then have to enter your AWS access keys, which you can retrieve from the AWS Management Console under Profile > My Security Credentials > Access Keys.

Then, run these commands, or maybe put them in a shell script and execute that:

mkdir data

curl -O https://bootstrap.pypa.io/get-pip.py

sudo apt-get install python3-distutils

python3 get-pip.py

pip3 install stylegan2_pytorch

export PATH=$PATH:/home/ubuntu/.local/bin

aws s3 sync s3://<Your bucket name> ~/data

cd data

tar -xf train.tar.gz

Now you should be able to train by simply calling `stylegan2_pytorch [args]`.

Notes:

- If you have a lot of training data, you may need to provision extra block storage via EBS.

- Also, you may need to spread your data across multiple archives.

- You should run this in a `screen` window so it won't terminate once you log out of the SSH session.
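If you do spread your data across several archives, extracting them one by one gets tedious. The sketch below uses Python's standard `tarfile` module to extract every `*.tar.gz` in one folder into a destination folder. The helper name `extract_all_archives` and the folder layout are hypothetical; adjust them to wherever `aws s3 sync` put your files. It applies the stdlib `"data"` extraction filter when the running Python supports it, since tar members can otherwise contain paths that escape the destination.

```python
import tarfile
from pathlib import Path
from typing import List


def extract_all_archives(archive_dir: str, dest_dir: str) -> List[str]:
    """Extract every *.tar.gz in archive_dir into dest_dir; return the archive names processed."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    processed = []
    # Sorted so the extraction order is predictable (part1, part2, ...)
    for archive in sorted(Path(archive_dir).glob("*.tar.gz")):
        with tarfile.open(archive, "r:gz") as tar:
            if hasattr(tarfile, "data_filter"):
                # Newer Pythons: reject absolute paths, "../" members, device files, etc.
                tar.extractall(dest, filter="data")
            else:
                # Older Pythons have no filters: only extract archives you trust
                tar.extractall(dest)
        processed.append(archive.name)
    return processed


if __name__ == "__main__":
    print(extract_all_archives(str(Path.home() / "data"), str(Path.home() / "data" / "images")))
```

You would then pass the destination folder to `stylegan2_pytorch --data`.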

A recent paper reported improved results when intermediate representations of the discriminator are vector quantized. Although I have not noticed any dramatic changes, I have decided to add this as a feature so that other minds out there can investigate. To use it, specify which layer(s) you would like to vector quantize. The default dictionary size is 256, and it is also tunable.
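The core of vector quantization is a nearest-neighbour lookup: each feature vector is replaced by the closest entry of a learned dictionary (codebook), whose size is what `--fq-dict-size` controls. The pure-Python sketch below shows only that lookup with a fixed, hand-written codebook; how the library learns and updates its dictionary, and how gradients pass through the discrete choice, are not shown and may differ from any particular scheme.

```python
from typing import List, Tuple

Vector = List[float]


def squared_distance(a: Vector, b: Vector) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def vector_quantize(features: List[Vector], codebook: List[Vector]) -> Tuple[List[Vector], List[int]]:
    """Replace each feature vector with its nearest codebook entry (Euclidean distance).

    Returns the quantized vectors and the index of the chosen entry for each one.
    """
    quantized, indices = [], []
    for f in features:
        best = min(range(len(codebook)), key=lambda i: squared_distance(f, codebook[i]))
        indices.append(best)
        quantized.append(list(codebook[best]))
    return quantized, indices
```

With a dictionary of size 512, every quantized layer output can take only one of 512 vector values per position, which acts as a bottleneck on the discriminator's intermediate representations.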

# feature quantize layers 1 and 2, with a dictionary size of 512 each

# do not put a space after the comma in the list!

$ stylegan2_pytorch --data ./data --fq-layers [1,2] --fq-dict-size 512

I have tried contrastive learning on the discriminator (in step with the usual GAN training) and possibly observed improved stability and quality of final results. You can turn on this experimental feature with a simple flag as shown below.

$ stylegan2_pytorch --data ./data --cl-reg

By default, the StyleGAN architecture styles a constant, learned 4x4 block as it is progressively upsampled. This experimental feature instead derives the 4x4 block from the style vector w.

$ stylegan2_pytorch --data ./data --no-const
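The difference between the two starting points can be sketched in pure Python. In the default case the 4x4 block is a single learned tensor that ignores w; with `--no-const` it becomes a function of w. The sketch assumes a plain linear map from w to a `channels x 4 x 4` block (randomly initialised here, learned in practice); whether the library uses exactly this mapping is an assumption, and both class names are hypothetical.

```python
import random
from typing import List

Block = List[List[List[float]]]  # channels x 4 x 4


class ConstInput:
    """Default: one learned 4x4 block, identical for every style vector w."""

    def __init__(self, channels: int, seed: int = 0):
        rng = random.Random(seed)
        self.block = [[[rng.gauss(0, 1) for _ in range(4)] for _ in range(4)] for _ in range(channels)]

    def __call__(self, w: List[float]) -> Block:
        # w is ignored here; styles only enter through the later layers
        return self.block


class StyleToInput:
    """--no-const idea: compute the 4x4 block from w with a linear map (assumed)."""

    def __init__(self, w_dim: int, channels: int, seed: int = 0):
        rng = random.Random(seed)
        self.channels = channels
        out_dim = channels * 16
        # Weight matrix of shape (channels * 16, w_dim), scaled by 1/sqrt(w_dim)
        self.weight = [[rng.gauss(0, 1) / w_dim ** 0.5 for _ in range(w_dim)] for _ in range(out_dim)]

    def __call__(self, w: List[float]) -> Block:
        flat = [sum(a * b for a, b in zip(row, w)) for row in self.weight]
        # Reshape the flat vector into channels x 4 x 4
        return [[flat[c * 16 + r * 4: c * 16 + r * 4 + 4] for r in range(4)] for c in range(self.channels)]
```

With `ConstInput`, two different style vectors start from the same block; with `StyleToInput`, the starting block itself already varies with w.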