A useful list of Deep Model Compression related research papers, articles, tutorials, libraries, tools and more.

Repos are currently tagged with their framework, either [Pytorch] or [TF]. To quickly find hands-on repos for the framework you use, press Ctrl+F and search for the tag 😃

- Pruning deep neural networks to make them fast and small [Pytorch] - Pruning makes a VGG-16 based Dogs-vs-Cats classifier 3x faster and 4x smaller.

- All The Ways You Can Compress BERT - An overview of compression methods for large NLP models (BERT), grouped by their characteristics, with a comparison of their results.

- Deep Learning Model Compression methods.

- Do We Really Need Model Compression in the future?
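For readers new to the topic, here is a minimal sketch of unstructured magnitude pruning, one common pruning criterion and not necessarily the one used in the articles above. It is written in plain Python on a flat list of weights; the function names `magnitude_prune` and `sparsity_of` and the sample weights are hypothetical and chosen only for illustration.

```python
def magnitude_prune(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude entries set to zero.

    `sparsity` is the fraction of entries to zero out (0.0 keeps everything).
    Illustrative helper only, not an API of any library listed here.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value (stable for ties).
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


def sparsity_of(weights):
    """Fraction of entries that are exactly zero."""
    return sum(1 for w in weights if w == 0.0) / len(weights)


# Made-up weights standing in for one layer of a network.
layer = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02, 0.4, -0.1]
pruned = magnitude_prune(layer, sparsity=0.5)
print(pruned)               # [0.8, 0.0, 0.3, 0.0, -0.6, 0.0, 0.4, 0.0]
print(sparsity_of(pruned))  # 0.5
```

Zeroing weights like this does not by itself make a model smaller or faster. The gains come from sparse storage, sparse kernels, or structured pruning that removes whole filters or channels, which is what the tools listed below help with.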

- torch.nn.utils.prune [Pytorch]

PyTorch's official support for sparsifying neural networks and writing custom pruning techniques.

- Neural Network Intelligence [Pytorch/TF]

NNI has several popular model compression algorithms built in. Users can also use NNI’s auto-tuning to search for the best compressed model, as detailed in Auto Model Compression.

- Condensa [Pytorch]

A Programming System for Neural Network Compression. | paper

- IntelLabs distiller [Pytorch]

Neural Network Distiller by Intel AI Lab: a Python package for neural network compression research. | Documentation

- Torch-Pruning [Pytorch]

A PyTorch toolkit for structured neural network pruning with layer dependency handling.

- CompressAI [Pytorch]

A PyTorch library and evaluation platform for end-to-end compression research.

- Model Compression [Pytorch]

A one-stop PyTorch model compression repo. | Reposhub

- TensorFlow Model Optimization Toolkit [TF]

Accompanying blog post: TensorFlow Model Optimization Toolkit — Pruning API

- XNNPACK

XNNPACK is a highly optimized library of floating-point neural network inference operators for ARM, WebAssembly, and x86 (SSE2 level) platforms. It is based on the QNNPACK library. However, unlike QNNPACK, XNNPACK focuses entirely on floating-point operators.

- Loading a TorchScript Model in C++ [Pytorch x C++]

Takes an existing Python model to a serialized representation that can be loaded and executed purely from C++, with no dependency on Python.

- Open Neural Network Exchange (ONNX) [Pytorch, TF, Keras ...etc]

An open standard format for representing machine learning models.

- TensorRT (NVIDIA) [Pytorch, TF, Keras ...etc]

- torch2trt [Pytorch]

An easy-to-use PyTorch to TensorRT converter.

- How to Convert a Model from PyTorch to TensorRT and Speed Up Inference [Pytorch]

-

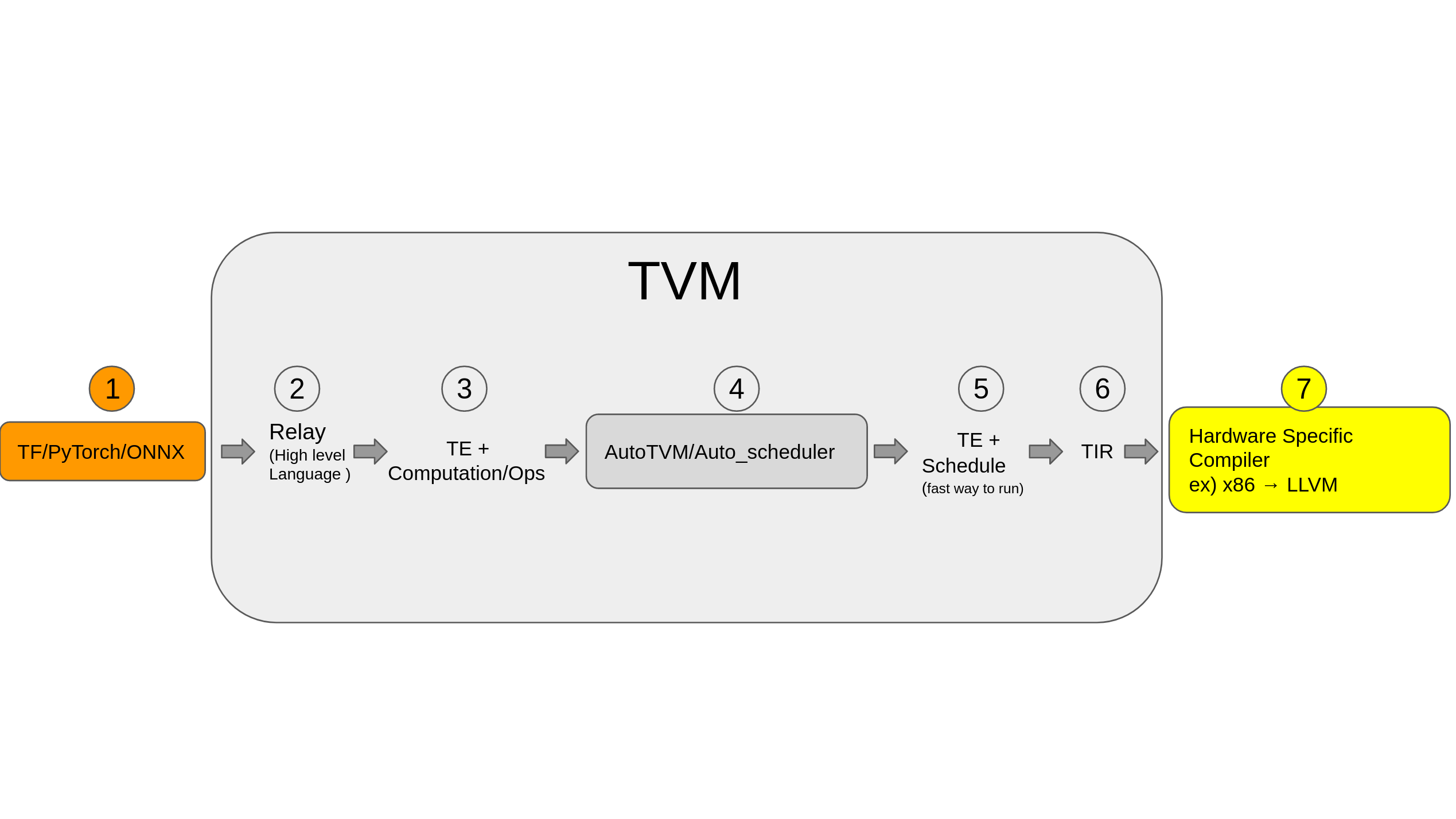

TVM (Apache) [Pytorch, TF]

An open deep learning compiler stack for CPUs, GPUs, and specialized accelerators.

- Pytorch Glow

Glow is a machine learning compiler and execution engine for hardware accelerators. It is designed to be used as a backend for high-level machine learning frameworks. The compiler is designed to allow state-of-the-art compiler optimizations and code generation of neural network graphs.

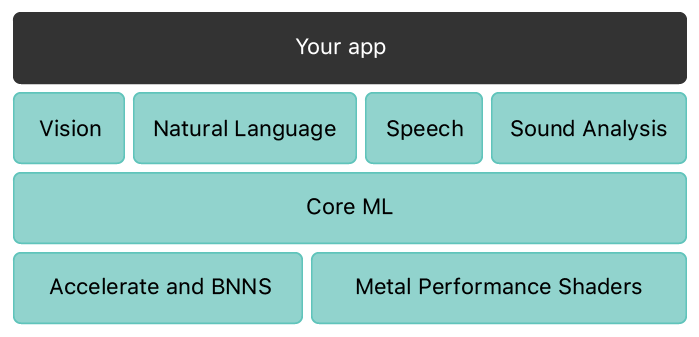

- CoreML (Apple) [Pytorch,TF,Keras,SKLearn ...etc]

Core ML provides a unified representation for all models. Your app uses Core ML APIs and user data to make predictions, and to train or fine-tune models, all on the user’s device.

Introduction

- TensorFlow Lite (Google) [TF]

An open source deep learning framework for on-device inference.

- Intel Nervana Neon [Deprecated]