This project implements an information retrieval-based question answering (IR QA) system on the JaQuAD dataset. For details about JaQuAD (Japanese Question Answering Dataset), see its GitHub repository and the paper from Skelter Labs.

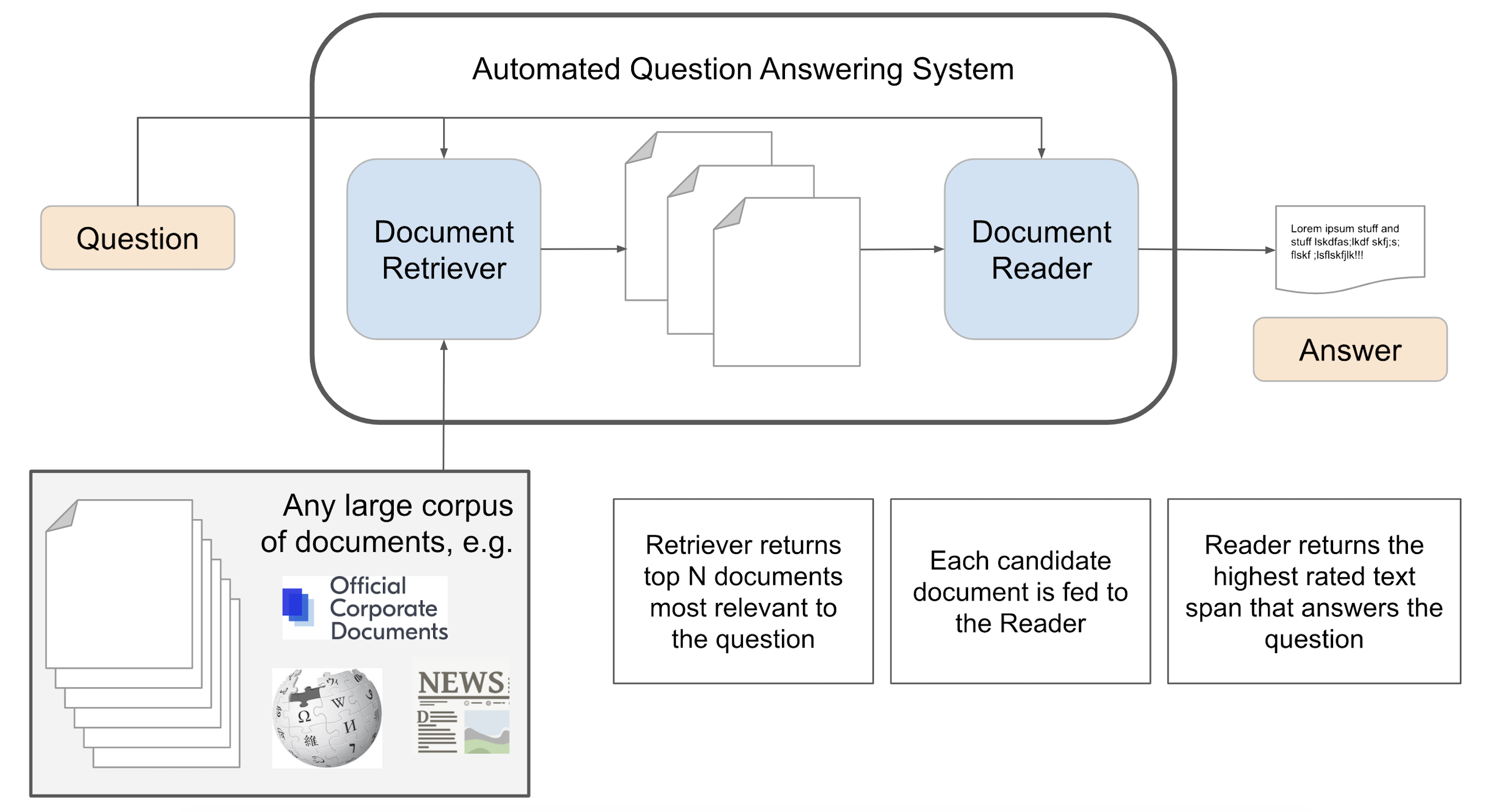

QA is one of the most important and popular NLP tasks of recent years. However, existing QA system tutorials are mainly designed for English datasets such as SQuAD and WebQA; QA datasets in other languages are rare, and so are the corresponding QA models. Note that this project does not aim to match state-of-the-art QA systems. Instead, its purpose is to explore how existing language models and NLP tools can be used to implement a Japanese QA system. As the main and components files show, the QA system comprises three parts: a document retriever, a paragraph retriever, and a document reader. The overall architecture is shown in the figure from the article. The following sections describe how each component was implemented on the JaQuAD dataset.

In the document retrieval (DR) part, the question and the contexts (Japanese text) were first tokenized with spaCy, and a stopword list was used to filter out unnecessary stopwords. TF-IDF vectors and cosine similarity were then used to find the top-5 tokenized contexts most similar to the tokenized question. Despite using conventional TF-IDF rather than more advanced methods such as BM25 or Dense Passage Retrieval (DPR), the document retriever reaches an accuracy of 99.95% on the training set, as shown in train document retrieval.ipynb.
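The retrieval step described above can be sketched in plain Python as follows. This is a minimal illustration, not the project's code: it assumes the question and contexts have already been tokenized (in the project, by spaCy), uses a small hypothetical `STOPWORDS` set in place of the project's stopword list, and computes a smoothed IDF of the form log((1 + n) / (1 + df)) + 1, which matches the default of scikit-learn's `TfidfVectorizer`. All function names are illustrative.

```python
import math
from collections import Counter

# Hypothetical stopword list; the project uses its own list with spaCy tokens.
STOPWORDS = {"の", "は", "が", "を", "に", "で", "と", "です", "か"}


def remove_stopwords(tokens):
    """Drop stopwords from an already-tokenized sentence."""
    return [t for t in tokens if t not in STOPWORDS]


def fit_idf(docs):
    """Smoothed IDF over tokenized documents: log((1 + n) / (1 + df)) + 1."""
    n = len(docs)
    df = Counter()
    for tokens in docs:
        df.update(set(tokens))  # count each term once per document
    return {term: math.log((1 + n) / (1 + count)) + 1 for term, count in df.items()}


def tfidf_vector(tokens, idf):
    """L2-normalized sparse TF-IDF vector; terms unseen during fitting are ignored."""
    tf = Counter(t for t in tokens if t in idf)
    vec = {term: count * idf[term] for term, count in tf.items()}
    norm = math.sqrt(sum(w * w for w in vec.values()))
    return {term: w / norm for term, w in vec.items()} if norm else {}


def retrieve_top_k(question_tokens, docs, k=5):
    """Return indices of the k documents most cosine-similar to the question."""
    docs = [remove_stopwords(d) for d in docs]
    idf = fit_idf(docs)
    doc_vecs = [tfidf_vector(d, idf) for d in docs]
    q_vec = tfidf_vector(remove_stopwords(question_tokens), idf)
    # Vectors are unit length, so the dot product equals the cosine similarity.
    scores = [sum(w * dv.get(t, 0.0) for t, w in q_vec.items()) for dv in doc_vecs]
    # sorted() is stable, so ties keep their original document order.
    return sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)[:k]
```

For example, with the documents `["東京", "は", "日本", "の", "首都", "です"]`, `["大阪", "は", "食べ物", "が", "有名"]` and `["富士山", "は", "日本", "で", "高い", "山"]`, the question `["日本", "の", "首都", "は", "どこ", "です", "か"]` ranks the first document highest and the third second. In practice a library implementation such as scikit-learn would replace these hand-written helpers.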

For the paragraph retriever, the contexts returned by the document retriever were split into multiple paragraphs, and a sentence transformer was used to embed each paragraph. The key in this step is choosing an appropriate sentence transformer to embed the question and the paragraphs, since the quality of the similarity computation depends heavily on the model. Here, the multilingual sentence transformer model "paraphrase-multilingual-mpnet-base-v2" is used; for details, see its model card on Hugging Face.
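How the contexts were split into paragraphs is not specified here. As one hypothetical option, the sketch below cuts a Japanese context after each full stop "。" and groups consecutive sentences into fixed-size chunks. `split_into_paragraphs` and `sentences_per_chunk` are illustrative names, and the chunk size is an assumption to tune, not a value from the project.

```python
import re


def split_into_paragraphs(context, sentences_per_chunk=3):
    """Split a Japanese context into chunks of consecutive sentences.

    Sentences are cut right after each full stop "。" (the delimiter is kept);
    trailing text without a full stop becomes its own final sentence.
    """
    # Zero-width split after "。" (supported by re.split since Python 3.7).
    sentences = [s for s in re.split(r"(?<=。)", context) if s.strip()]
    return [
        "".join(sentences[i:i + sentences_per_chunk])
        for i in range(0, len(sentences), sentences_per_chunk)
    ]
```

Smaller chunks give the sentence transformer shorter inputs, which matters because, as noted in the improvements section, embedding quality drops on long paragraphs.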

After obtaining the embedding vector of each paragraph, cosine similarity was again used to compute the similarity between the question and each paragraph. Based on observation, the top-5 most similar paragraphs were passed on to the next step. The underlying idea can be found in the paper.
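A minimal sketch of this ranking step: embed the question together with the paragraphs, score each paragraph by cosine similarity to the question, and keep the top k (5 in this project). The `embed` argument is an assumption of this sketch: any callable that maps a list of strings to a list of vectors. With the sentence-transformers library, the `encode` method of `SentenceTransformer("paraphrase-multilingual-mpnet-base-v2")` is such a callable; a toy embedding function is enough to exercise the logic without downloading a model.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def top_k_paragraphs(question, paragraphs, embed, k=5):
    """Return the k (paragraph, score) pairs most similar to the question.

    `embed` is a hypothetical callable: list[str] -> sequence of vectors.
    """
    vectors = embed([question] + list(paragraphs))
    q_vec, p_vecs = vectors[0], vectors[1:]
    scores = [cosine(q_vec, p) for p in p_vecs]
    # Highest score first; sorted() is stable, so ties keep their input order.
    order = sorted(range(len(paragraphs)), key=lambda i: scores[i], reverse=True)
    return [(paragraphs[i], scores[i]) for i in order[:k]]
```

Embedding the question in the same batch as the paragraphs keeps them in the same vector space and lets the model process them in one call.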

In this part, I fine-tuned a BERT model for the reading comprehension task, specifically the popular cl-tohoku/bert-base-japanese model on Hugging Face. The main challenge in fine-tuning this model for QA is that it lacks a fast tokenizer (as noted on other websites as well), and helpers such as `char_to_token` in transformers are only available with fast tokenizers. I therefore wrote several key functions, such as char_to_token, myself and used them to label the start and end tokens of each answer. The corresponding code is in the Fine_tuning directory. For fine-tuning, the number of epochs was set to 15, the batch size to 8, and the sequence length to 512. The train split of JaQuAD was used for training and the eval split for testing. After fine-tuning, I evaluated several checkpoints saved during training and picked the best two or three. The best exact match (EM) score is about 0.35, and the best F1 score is about 0.545.
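Because cl-tohoku/bert-base-japanese only has a slow (Python) tokenizer, the character-to-token mapping has to be rebuilt by hand. The sketch below shows one hypothetical way to do it; it is not the code in the Fine_tuning directory. It assumes each WordPiece token, with its "##" prefix removed, appears verbatim and in order in the context; tokens that cannot be matched (such as [UNK]) get no span. The returned indices are relative to the context tokens, so the hypothetical `offset` parameter should be set to the position of the first context token in the model input (after [CLS], the question, and [SEP]).

```python
def token_char_spans(text, tokens):
    """Map each token to its (start, end) character span in text, or None."""
    spans = []
    cursor = 0
    for tok in tokens:
        piece = tok[2:] if tok.startswith("##") else tok  # strip WordPiece prefix
        start = text.find(piece, cursor) if piece else -1
        if start == -1:  # e.g. [UNK]: no verbatim match in the text
            spans.append(None)
            continue
        spans.append((start, start + len(piece)))
        cursor = start + len(piece)  # search for the next token after this one
    return spans


def char_to_token(spans, char_idx):
    """Index of the token whose span covers char_idx, or None."""
    for i, span in enumerate(spans):
        if span is not None and span[0] <= char_idx < span[1]:
            return i
    return None


def label_answer(context, context_tokens, answer_start, answer_text, offset=0):
    """Start/end token labels for an answer given by its character position."""
    spans = token_char_spans(context, context_tokens)
    start = char_to_token(spans, answer_start)
    end = char_to_token(spans, answer_start + len(answer_text) - 1)
    if start is None or end is None:
        return None  # answer could not be aligned (e.g. [UNK] or truncation)
    return start + offset, end + offset
```

For instance, with the context "東京は日本の首都です。" tokenized as `["東京", "は", "日本", "の", "首", "##都", "です", "。"]`, the answer "首都" at character 6 is labeled with start token 4 and end token 5.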

Several possible improvements could be pursued in future work:

- JaQuAD dataset: According to Table 1 of the paper, JaQuAD is relatively small compared with most existing QA datasets in other languages. Expanding the dataset is therefore desirable, and people are encouraged to release new Japanese QA datasets built on new formats and other data sources. As a Japanese NLP enthusiast, I am glad to see people dedicate themselves to Japanese NLP, since Japanese NLP resources, though growing, are still scarce compared with English.

- Improvement on the document retriever: As mentioned above, several tools, especially dense passage retrieval, were considered for extracting similar contexts but not used. Although TF-IDF was sufficient and achieved high accuracy in this project, dense passage retrieval and other neural retrieval methods could be considered for future work on larger datasets.

- Improvement on the paragraph retriever: It is also worth considering whether this part can be improved with other tools. For the QA task, after extracting the documents similar to a question, we can evaluate the document retriever's accuracy against the labels; but how should we extract similar paragraphs, and evaluate their accuracy, without any labels? As mentioned above, the paragraph retriever uses a sentence transformer to embed the question and the paragraphs and then computes their similarity. However, state-of-the-art sentence transformers, and even existing "paragraph transformers", seem to lose accuracy (at least on this Japanese task) when comparing a short question with long paragraphs. In short, given a long paragraph that contains tokens identical to those in the question and a short paragraph that contains only semantically similar tokens, the sentence transformer usually prefers the short one, since sequence length strongly affects the resulting embedding.

- Training: A larger batch size may improve fine-tuning of the bert-base-japanese model.

Thanks for reading! For any discussion or feedback, feel free to contact me at blaze7451@gmail.com.