PyTorch implementation of the paper:

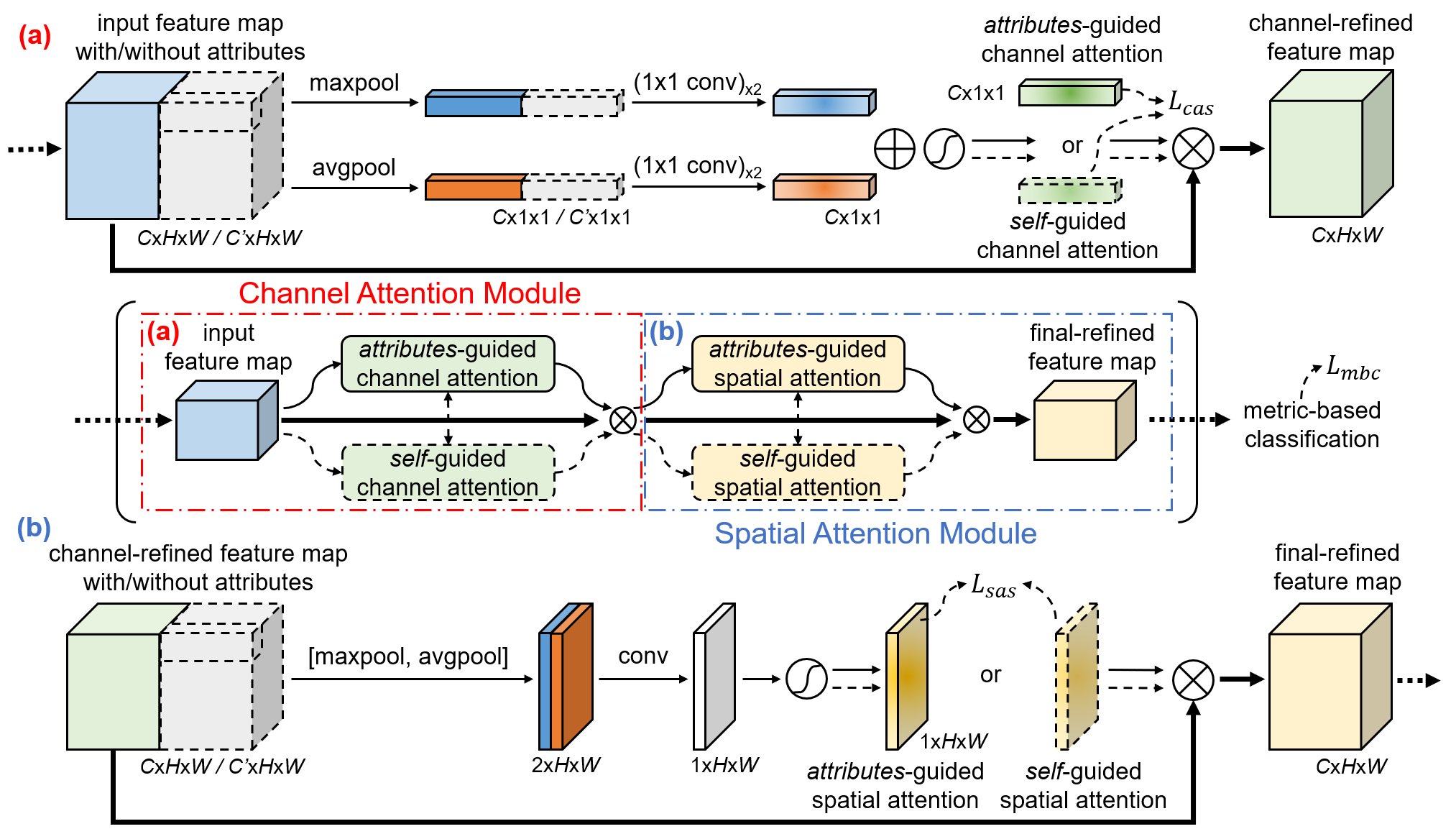

- Title: Attributes-Guided and Pure-Visual Attention Alignment for Few-Shot Recognition

- Authors: Siteng Huang, Min Zhang, Yachen Kang, Donglin Wang

- Conference: Proceedings of the 35th AAAI Conference on Artificial Intelligence (AAAI 2021)

- More details: [arXiv] | [homepage]

The code runs correctly with:

- Python 3.6

- PyTorch 1.2

- Torchvision 0.4
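To compare your environment with these versions, you can use a small standalone script like the one below. It is a hypothetical helper and is not part of the AGAM repository. It compares only the major.minor part of each version, which assumes that patch releases do not matter.

```python
import importlib
import platform

# Versions listed in this README as known to work (major.minor only).
TESTED = {"python": "3.6", "torch": "1.2", "torchvision": "0.4"}


def major_minor(version: str) -> str:
    """Reduce a version string such as '1.2.0+cu92' to '1.2'."""
    core = version.split("+")[0]  # drop local build tags like '+cu92'
    return ".".join(core.split(".")[:2])


def installed_versions() -> dict:
    """Collect installed versions; None marks a package that cannot be imported."""
    found = {"python": platform.python_version()}
    for name in ("torch", "torchvision"):
        try:
            found[name] = importlib.import_module(name).__version__
        except ImportError:
            found[name] = None
    return found


def report(found: dict) -> list:
    """Return one human-readable line per tested component."""
    lines = []
    for name, expected in TESTED.items():
        version = found.get(name)
        if version is None:
            lines.append(f"{name}: not installed (tested with {expected})")
        elif major_minor(version) == expected:
            lines.append(f"{name}: {version} (matches tested {expected})")
        else:
            lines.append(f"{name}: {version} (differs from tested {expected})")
    return lines


if __name__ == "__main__":
    for line in report(installed_versions()):
        print(line)
```

A mismatch does not necessarily mean the code will fail. It only means the combination differs from the one reported above.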

```bash
# clone project
git clone https://github.com/bighuang624/AGAM.git
cd AGAM/models/agam_protonet

# download data and run on multiple GPUs with special settings
python train.py --train-data [train_data] --test-data [test_data] --backbone [backbone] --num-shots [num_shots] --train-tasks [train_tasks] --semantic-type [semantic_type] --multi-gpu --download
```
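You can also fill the bracketed placeholders in the template command above from Python, for example to prepare a sweep over backbones and shot numbers. The following sketch only assembles argument lists from the flags shown in this README. The function name `build_train_command` and the value grid are illustrative assumptions. The `--train-tasks` value is copied from the CUB example and is not a recommended setting for every run.

```python
import shlex
from itertools import product


def build_train_command(train_data, test_data, backbone, num_shots,
                        train_tasks, semantic_type, multi_gpu=False,
                        download=False):
    """Hypothetical helper: return a train.py argument list.

    Flag names are copied from the train.py commands in this README.
    """
    cmd = ["python", "train.py",
           "--train-data", train_data,
           "--test-data", test_data,
           "--backbone", backbone,
           "--num-shots", str(num_shots),
           "--train-tasks", str(train_tasks),
           "--semantic-type", semantic_type]
    if multi_gpu:
        cmd.append("--multi-gpu")
    if download:
        cmd.append("--download")
    return cmd


if __name__ == "__main__":
    # Illustrative grid: both backbones from the examples, 1 and 5 shots, on CUB.
    for backbone, shots in product(["conv4", "resnet12"], [1, 5]):
        cmd = build_train_command("cub", "cub", backbone, shots,
                                  50000, "class_attributes")
        print(" ".join(shlex.quote(part) for part in cmd))
```

To launch a run instead of printing it, pass the list to `subprocess.run(cmd, check=True)` from inside `AGAM/models/agam_protonet`. Keeping the command as a list avoids shell-quoting issues.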

```bash
# Example: run on CUB dataset, Conv-4 backbone, 1 shot, single GPU
python train.py --train-data cub --test-data cub --backbone conv4 --num-shots 1 --train-tasks 50000 --semantic-type class_attributes

# Example: run on SUN dataset, ResNet-12 backbone, 5 shot, multiple GPUs
python train.py --train-data sun --test-data sun --backbone resnet12 --num-shots 5 --train-tasks 40000 --semantic-type image_attributes --multi-gpu
```

Update: You can now reproduce the main results on the CUB dataset using the trained model weights downloaded from here. The reproduced results may differ slightly from the reported ones, because the random sampling of episodes depends on the random seed. After downloading the weights, you can use the command:

```bash
python eval.py --train-data cub --test-data cub --backbone [backbone] --num-shots 5 --semantic-type class_attributes --test-only --model-path [model_path]
```

You can download the datasets automatically by adding `--download` when running the program. If automatic downloading fails, for example because of a poor network connection, you can download the datasets manually with the following steps:

CUB:

- Create the directory `AGAM/datasets/cub`;
- Download `CUB_200_2011.tgz` from here, and put the archive into `AGAM/datasets/cub`;
- Run the program with `--download`.

Update: Running with `--download` converts the CUB dataset into hdf5 format, so we now release the generated hdf5 and json files for your convenience. You can download these files from here and put them into `AGAM/datasets/cub`. After that, `--download` is no longer needed when running the program.
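The sketch below performs the directory step for CUB and reports whether `--download` is still needed. The directory and the archive name `CUB_200_2011.tgz` come from this README. The exact names of the released hdf5 and json files are not listed here, so the sketch assumes that any `*.hdf5` (or `*.h5`) file together with any `*.json` file means the preprocessed data is in place. `prepare_cub_dir` is a hypothetical helper name.

```python
from pathlib import Path

CUB_DIR = Path("AGAM/datasets/cub")
CUB_ARCHIVE = "CUB_200_2011.tgz"


def prepare_cub_dir(root=CUB_DIR):
    """Create `root` if needed and summarize which CUB inputs it contains."""
    root = Path(root)
    root.mkdir(parents=True, exist_ok=True)  # step 1: create the directory
    has_archive = (root / CUB_ARCHIVE).is_file()
    # Assumption: the released preprocessed files use these extensions.
    has_hdf5 = any(root.glob("*.hdf5")) or any(root.glob("*.h5"))
    has_json = any(root.glob("*.json"))
    preprocessed = has_hdf5 and has_json
    return {
        "archive": has_archive,
        "preprocessed": preprocessed,
        # --download is only needed when the hdf5/json files are absent.
        "needs_download_flag": not preprocessed,
    }


if __name__ == "__main__":
    print(prepare_cub_dir())
```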

SUN:

- Create the directory `AGAM/datasets/sun`;
- Download the archive of images from here, and put the archive into `AGAM/datasets/sun`;
- Download the archive of attributes from here, and put the archive into `AGAM/datasets/sun`;
- Run the program with `--download`.
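The SUN steps can be checked in a similar way before you run with `--download`. This README does not give the archive file names, so this hypothetical `prepare_sun_dir` helper only creates the directory and counts the regular files it contains. It assumes that two files (one image archive and one attribute archive) mean both downloads are in place.

```python
from pathlib import Path

SUN_DIR = Path("AGAM/datasets/sun")
EXPECTED_ARCHIVES = 2  # assumption: one image archive + one attribute archive


def prepare_sun_dir(root=SUN_DIR):
    """Create `root` if needed; return (sorted file names, looks_complete)."""
    root = Path(root)
    root.mkdir(parents=True, exist_ok=True)
    files = sorted(p.name for p in root.iterdir() if p.is_file())
    return files, len(files) >= EXPECTED_ARCHIVES


if __name__ == "__main__":
    files, ok = prepare_sun_dir()
    print("files:", files)
    print("both archives present" if ok else "archives still missing")
```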

If our code is helpful for your research, please cite our paper:

```bibtex
@inproceedings{Huang2021AGAM,
  author = {Siteng Huang and Min Zhang and Yachen Kang and Donglin Wang},
  title = {Attributes-Guided and Pure-Visual Attention Alignment for Few-Shot Recognition},
  booktitle = {Proceedings of the 35th AAAI Conference on Artificial Intelligence (AAAI 2021)},
  month = {February},
  year = {2021}
}
```

Our code references the following projects: