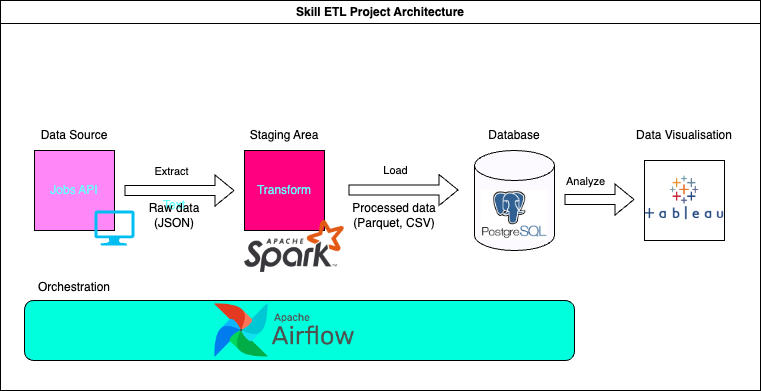

ETL Data pipeline project developed to process online job posts using Airflow, Spark, Postgres and Tableau.

Job Posts is fetched from Google Jobs API based on job role and the data for each job role is stored as individual JSON file in raw folder.

JSON files for each job role is read using PySpark and the data is processed to fetch required data columns in required format. The cleaned data is then stored in parquet and CSV format in the processed folder.

The CSV files are loaded into PostgreSQL database using Airflow copy_expert operation using Postgres Hook.

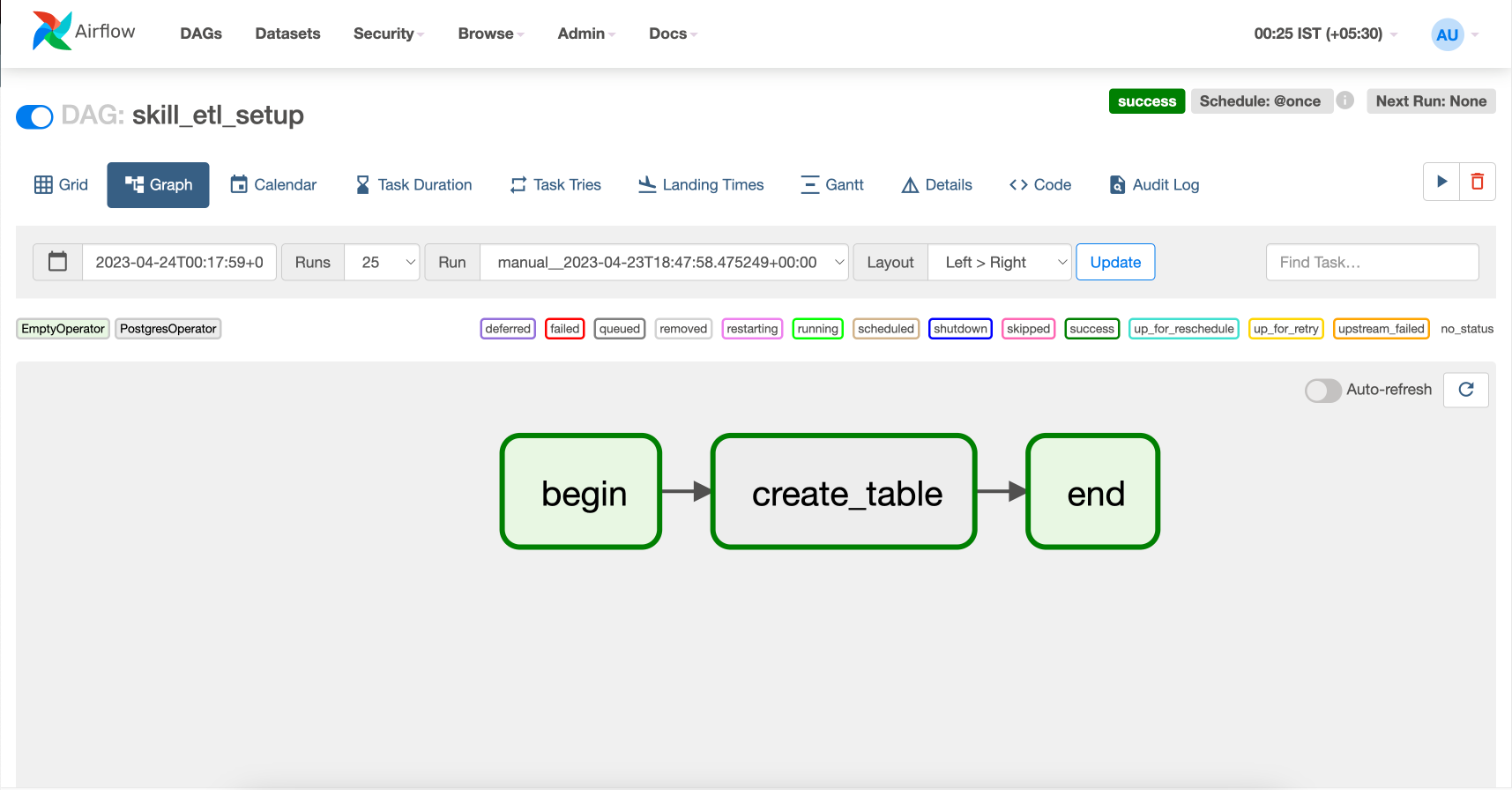

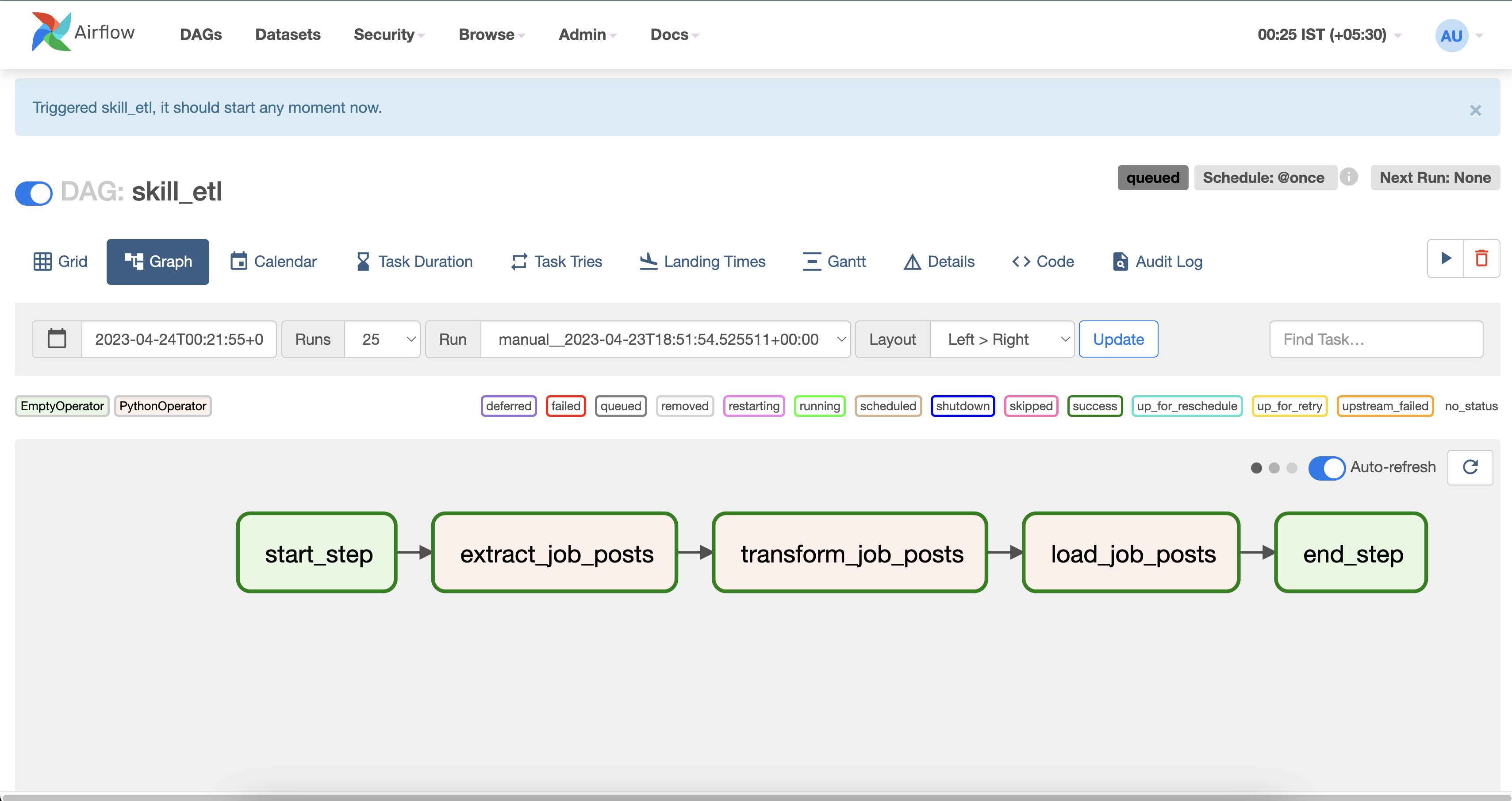

The project uses the following two DAGs (Directed Acyclic Graph)

Setup pipelines runs the SQL script to create destination table in postgres db if it does not exist.

This pipeline extract the Extract, Transform and Load tasks to process the data from API ingestion layer to data storage layer.

A tableau dashboard is created in order to visualise the insights from the data stored in PostgreSQL. A PL/SQL function is created to convert the postgres table to csv which is then converted to excel file. This excel file is used as the source for Tableau visualisation. It is stored in reporting folder.

Link to Dashboard - https://public.tableau.com/app/profile/antony.prince.j/viz/skill_etl/Dashboard1?publish=yes

The Config file in the project under config/ is used to configure the job roles to be queried and the path in which each stage of the pipeline should store the data during the Airflow DAG execution.

{

"job_roles" :

[

"data%20engineer%20india",

"backend%developer%20india",

"blockchain%developer%20india",

"data%scientist%20india",

"fullstack%developer%20india"

],

"project_path": "/Users/antonyprincej/airflow/dags/skill_graph/",

"raw" : {

"folder" : "raw",

"type" : "json"

},

"processed" : {

"folder" : "processed",

"type" : ["parquet","csv"]

},

"destination" : {

"type" : "sql"

}

}