Implementation of a mini-project that demonstrates the use of projective geometry in augmented reality applications. The project consists of a camera calibration script, `opencv-calibrate.py`, and a virtual cube reprojection script that goes by the flashy name `virtual-reality.py`. The camera calibration script is based on the OpenCV camera calibration tutorial.

Projective geometry is the mathematical framework that lets us model how a 3D scene is captured in a 2D image. Within this framework, taking a photo with a camera can be modeled as a sequence of linear transformations applied to spatial coordinates expressed in a special vector representation called homogeneous coordinates. That is,

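$$
s \begin{bmatrix} u \\ v \\ 1 \end{bmatrix} = K \begin{bmatrix} R & \mathbf{t} \end{bmatrix} \begin{bmatrix} X \\ Y \\ Z \\ 1 \end{bmatrix}
$$

describes how any 3D point or object point $(X, Y, Z)$ is mapped to a 2D image point $(u, v)$, where $K$ is the $3 \times 3$ camera matrix (intrinsic parameters), $R$ and $\mathbf{t}$ are the rotation and translation that describe the camera pose (extrinsic parameters), and $s$ is an arbitrary scale factor.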
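As a rough numerical illustration of this pinhole model, the sketch below projects a single object point with NumPy. The intrinsic values (an 800-pixel focal length and a principal point at (320, 240)) and the pose are made up for the example, lens distortion is ignored, and `project_point` is a hypothetical helper, not part of the project's scripts.

```python
import numpy as np

def project_point(K, R, t, X):
    """Project one object point X = (X, Y, Z) to pixel coordinates (u, v).

    Pinhole model without lens distortion: s * [u, v, 1]^T = K [R | t] [X, Y, Z, 1]^T.
    """
    X_h = np.append(np.asarray(X, dtype=float), 1.0)  # homogeneous 3D point (4,)
    Rt = np.hstack([R, np.reshape(t, (3, 1))])         # 3x4 extrinsic matrix [R | t]
    x_h = K @ Rt @ X_h                                  # homogeneous image point (s*u, s*v, s)
    return x_h[:2] / x_h[2]                             # divide by s to obtain pixels

# Hypothetical intrinsics: 800 px focal length, principal point at (320, 240)
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)                  # camera axes aligned with the object frame
t = np.array([0.0, 0.0, 5.0])  # object origin 5 units in front of the camera

uv = project_point(K, R, t, [1.0, 0.5, 0.0])
print(uv)  # → [480. 320.]
```

With the identity rotation and the object 5 units in front of the camera, the point (1, 0.5, 0) lands at pixel (480, 320): the division by the third homogeneous coordinate is what turns the linear map into a perspective projection.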

In a nutshell, camera calibration refers to any algorithm that computes or estimates the camera matrix.

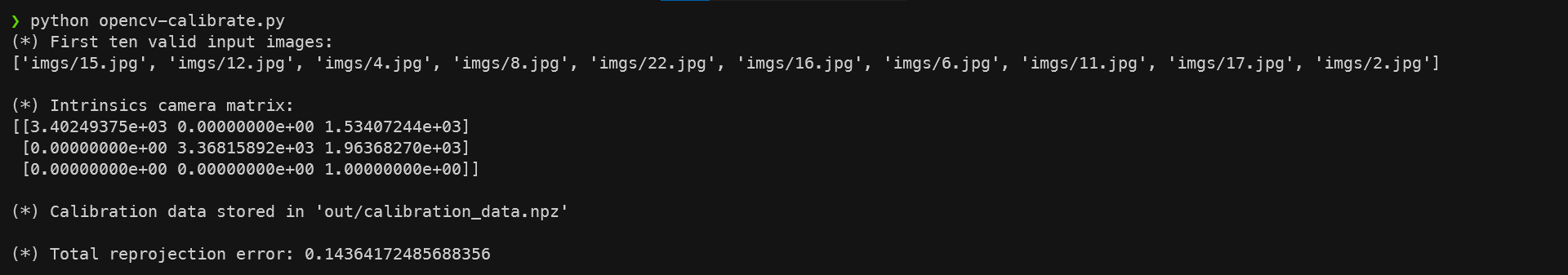

We use OpenCV functionalities to compute the camera matrix from the images in the `imgs/` folder. To perform camera calibration, simply run `python opencv-calibrate.py`. The script uses the first 10 valid images to run the calibration algorithm.

As the script filters the input images, it saves each one, with its detected object points drawn on it, to the `out/points/` folder. Once it finishes filtering and plotting points, it performs camera calibration, displays the camera matrix, stores the calibration data in `out/calibration_data.npz` and computes the average reprojection error in pixels.
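The source does not say exactly how the average reprojection error is defined. One common definition, sketched below, is the mean Euclidean distance between each detected point and the same object point reprojected with the estimated parameters (for instance via `cv2.projectPoints`). The snippet also shows a round trip through an `.npz` file with `np.savez` and `np.load`; the key names `camera_matrix` and `dist_coeffs` are hypothetical and may not match those in `out/calibration_data.npz`.

```python
import os
import tempfile
import numpy as np

def mean_reprojection_error(detected, reprojected):
    """Mean Euclidean distance, in pixels, between detected and reprojected points."""
    detected = np.asarray(detected, dtype=float).reshape(-1, 2)
    reprojected = np.asarray(reprojected, dtype=float).reshape(-1, 2)
    return float(np.linalg.norm(detected - reprojected, axis=1).mean())

# Toy data: the first point is off by (3, 4) px, i.e. 5 px; the second is exact
detected = [[100.0, 200.0], [150.0, 250.0]]
reprojected = [[103.0, 204.0], [150.0, 250.0]]
err = mean_reprojection_error(detected, reprojected)
print(err)  # → 2.5

# Round trip through an .npz archive (hypothetical key names and values)
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
dist = np.zeros(5)
path = os.path.join(tempfile.gettempdir(), "calibration_data.npz")
np.savez(path, camera_matrix=K, dist_coeffs=dist)
with np.load(path) as data:
    K_loaded = data["camera_matrix"]  # arrays are read into memory before closing
print(np.array_equal(K, K_loaded))  # → True
```

Note that the first value returned by `cv2.calibrateCamera` is a root-mean-square error, which weights large residuals more heavily than this mean distance, so the two numbers are generally not identical.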

Note that camera calibration also yields extrinsic parameters (the camera pose) for every valid view. Now that we have both an intrinsic and an extrinsic model for each valid frame, it is time to use this information to create something cool!
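If the calibration script follows the OpenCV tutorial, `cv2.calibrateCamera` returns the pose of each view as a rotation vector (axis-angle form) plus a translation vector. `cv2.Rodrigues` converts the former into a rotation matrix; the NumPy sketch below re-implements that conversion with Rodrigues' rotation formula, for illustration only, and stacks the result with the translation into the 3×4 extrinsic matrix [R | t]. The helper names are hypothetical.

```python
import numpy as np

def rodrigues(rvec):
    """Convert an axis-angle rotation vector into a 3x3 rotation matrix."""
    rvec = np.asarray(rvec, dtype=float).reshape(3)
    theta = np.linalg.norm(rvec)          # rotation angle in radians
    if theta < 1e-12:
        return np.eye(3)                  # no rotation
    k = rvec / theta                      # unit rotation axis
    Kx = np.array([[0.0, -k[2], k[1]],    # cross-product (skew-symmetric) matrix of k
                   [k[2], 0.0, -k[0]],
                   [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * Kx + (1.0 - np.cos(theta)) * (Kx @ Kx)

def extrinsic_matrix(rvec, tvec):
    """Stack R and t into the 3x4 extrinsic matrix [R | t] for one view."""
    R = rodrigues(rvec)
    return np.hstack([R, np.asarray(tvec, dtype=float).reshape(3, 1)])

# Example pose: 90 degrees about the z axis, object 5 units in front of the camera
Rt = extrinsic_matrix([0.0, 0.0, np.pi / 2], [0.0, 0.0, 5.0])
print(np.round(Rt, 6))
```

Each calibrated view thus carries its own [R | t], while the camera matrix is shared by all views taken with the same camera.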

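Now that we have our previously found camera matrix and the camera pose of each valid view, we can describe a virtual cube in the object coordinate frame and project it onto each of those images.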

To project our virtual cube, just run `python virtual-reality.py` and check the `out/projected/` folder, where you should find a set of images with the virtual cube drawn on them.
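The source does not show how `virtual-reality.py` defines or draws the cube, so here is a minimal sketch under stated assumptions. The cube has one corner at the object-frame origin and extends along -Z; with a pose like the one below (camera on the -Z side of the Z = 0 plane), -Z points toward the camera, so the cube rises out of the plane. Each vertex is projected with the camera matrix and a view's pose, ignoring lens distortion, and the 12 edges are returned as pixel segments. All names and numbers are hypothetical.

```python
import numpy as np

def cube_vertices(size=1.0):
    """8 cube vertices: bottom face on Z = 0, top face at Z = -size (assumed orientation)."""
    s = size
    return np.array([[0, 0, 0], [s, 0, 0], [s, s, 0], [0, s, 0],
                     [0, 0, -s], [s, 0, -s], [s, s, -s], [0, s, -s]], dtype=float)

# Pairs of vertex indices forming the 12 cube edges
CUBE_EDGES = [(0, 1), (1, 2), (2, 3), (3, 0),   # bottom face
              (4, 5), (5, 6), (6, 7), (7, 4),   # top face
              (0, 4), (1, 5), (2, 6), (3, 7)]   # vertical edges

def project_points(K, R, t, X):
    """Project Nx3 object points to Nx2 pixels with s * x = K (R X + t)."""
    cam = X @ R.T + t              # object frame -> camera frame
    uvw = cam @ K.T                # camera frame -> homogeneous pixel coordinates
    return uvw[:, :2] / uvw[:, 2:3]

# Hypothetical intrinsics and pose of one view
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
R, t = np.eye(3), np.array([0.0, 0.0, 10.0])

pixels = project_points(K, R, t, cube_vertices(2.0))
segments = [(pixels[i], pixels[j]) for i, j in CUBE_EDGES]
print(pixels.round(1))
```

To draw the cube on a photo, each segment's endpoints can be rounded to integer tuples and passed to `cv2.line(image, pt1, pt2, color, thickness)`. If lens distortion should be taken into account, `cv2.projectPoints` can replace `project_points`, since it applies the distortion coefficients.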

Pretty cool, isn't it? Now you have a small glimpse of how augmented reality applications can be created. Of course, this is a simple case where we conveniently chose our object and our set of images to make a small demonstration. In practice, you can add more complex, textured objects, as long as you have a way to describe them geometrically in the object coordinate frame.

Another important caveat is that we conveniently used our calibrated views as the target images, since we already knew the camera pose for those frames. In more complex scenarios, you will likely need to localize your camera, that is, estimate its pose in each new frame, before you can correctly project your virtual objects.