Note: You need Anaconda installed to follow the steps below.

- Clone the repo:

      git clone https://github.com/Rohith04MVK/Fashion-MNIST

- Create a conda environment:

      conda create -n fashion_mnist python=3.8.5

- Activate the environment:

      conda activate fashion_mnist

- Install the requirements:

      pip install -r requirements.txt

- Run the main file:

      python makePredictions.py

- Add your own images to the images folder

- Replace the file names on line 16 of the predictions file with your own file names

Example: `images = np.array([get_image("something.png"), get_image("any.png")])`

A neural network is essentially a network of mathematical equations. It takes one or more input variables and, by passing them through a network of equations, produces one or more output variables. You can also say that a neural network takes in a vector of inputs and returns a vector of outputs.

In a neural network, there is an input layer, one or more hidden layers, and an output layer. The input layer consists of one or more feature variables (also called input variables or independent variables), denoted x1, x2, …, xn. Each hidden layer consists of one or more hidden nodes, also called hidden units. A node is simply one of the circles in the diagram above. Similarly, the output layer consists of one or more output units.
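The layer structure described above can be sketched in a few lines of plain Python. This is only an illustration, not the model used in this repo: the function names (`dense`, `forward`), the layer sizes, and all weight values are made up for the example, and a sigmoid is assumed for the hidden layer.

```python
import math

def dense(inputs, weights, biases):
    """One fully connected layer: each unit is a weighted sum of the inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def sigmoid(values):
    """Element-wise logistic function, used here as the hidden-layer activation."""
    return [1.0 / (1.0 + math.exp(-v)) for v in values]

def forward(x, hidden_w, hidden_b, out_w, out_b):
    """Input vector -> hidden layer (with activation) -> output vector."""
    hidden = sigmoid(dense(x, hidden_w, hidden_b))
    return dense(hidden, out_w, out_b)

# Hypothetical weights: 3 inputs (x1, x2, x3), 2 hidden units, 1 output unit.
hidden_w = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]]
hidden_b = [0.0, 0.1]
out_w = [[1.0, -1.0]]
out_b = [0.2]

y = forward([1.0, 2.0, 3.0], hidden_w, hidden_b, out_w, out_b)
print(y)  # a vector with one output value
```

Training a network means adjusting the weights and biases so that the outputs match the expected labels; the sketch only shows the forward computation.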

A convolutional neural network (CNN) is a type of neural network that uses a mathematical operation called convolution. According to Wikipedia, convolution is a mathematical operation on two functions that produces a third function expressing how the shape of one is modified by the other. CNNs use convolution instead of general matrix multiplication in at least one of their layers.
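To make the operation concrete, here is a minimal sketch of the sliding-window computation a convolutional layer performs on a 2D image. It assumes no padding and a stride of 1, and, like most deep learning libraries, it does not flip the kernel (strictly speaking this is cross-correlation). The function name `conv2d`, the toy image, and the kernel are made up for the example.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image and sum the element-wise products
    at each position (no padding, stride 1, kernel not flipped)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

# Toy 3x4 image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hypothetical 2x2 kernel that responds to a dark-to-bright vertical edge.
kernel = [[-1, 1],
          [-1, 1]]

feature_map = conv2d(image, kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0]]
```

The output is largest exactly where the edge sits. In a CNN the kernel values are not chosen by hand like this; they are learned during training.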

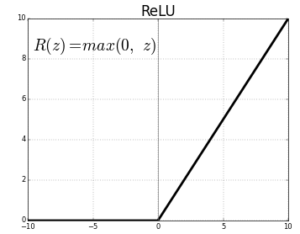

Traditionally, non-linear activation functions such as the sigmoid (logistic) function and the hyperbolic tangent have been used in neural networks to compute the activation value of each neuron. More recently, the ReLU function has often been used instead, in both traditional and deep neural networks. One reason for replacing sigmoid is that ReLU can speed up the training of deep neural networks compared to traditional activation functions: the derivative of ReLU is exactly 1 for positive input. Because that derivative is a constant, the network does not need to spend additional time computing it when calculating error terms during the training phase.
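The difference between the two derivatives is easy to see in code. The sketch below is only an illustration with made-up function names; it compares ReLU and sigmoid and their derivatives for a few inputs.

```python
import math

def relu(x):
    """ReLU: passes positive values through, clamps negatives to 0."""
    return max(0.0, x)

def relu_grad(x):
    """Derivative of ReLU: 1 for positive input, 0 otherwise."""
    return 1.0 if x > 0 else 0.0

def sigmoid(x):
    """Logistic function, squashes any input into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_grad(x):
    """Derivative of sigmoid, s(x) * (1 - s(x)); it needs an exponential
    and is never larger than 0.25."""
    s = sigmoid(x)
    return s * (1.0 - s)

for x in (-2.0, 0.5, 10.0):
    print(x, relu(x), relu_grad(x), sigmoid_grad(x))
```

For a large positive input such as 10, the ReLU derivative is still 1, while the sigmoid derivative has shrunk to a value close to 0.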

The softmax function is used as the activation function in the output layer of neural network models that predict a multinomial probability distribution. That is, softmax is used as the activation function for multi-class classification problems where class membership is required on more than two class labels.
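As a rough illustration, softmax can be written in a few lines of plain Python. The function below is a sketch, not the implementation used by this repo's model, and the three scores are made-up values; subtracting the maximum before exponentiating is a common trick to avoid overflow and does not change the result.

```python
import math

def softmax(scores):
    """Turn a list of raw scores into probabilities that sum to 1."""
    m = max(scores)  # shift by the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw outputs for three classes.
probs = softmax([2.0, 1.0, 0.1])
predicted = probs.index(max(probs))  # index of the most likely class

print(probs)
print(predicted)  # 0
```

The class with the highest score always gets the highest probability, so the predicted label is simply the index of the largest entry.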