The source code for our paper "Exploring Dual-task Correlation for Pose Guided Person Image Generation", Pengze Zhang, Lingxiao Yang, Jianhuang Lai, and Xiaohua Xie, CVPR 2022. Video: [Chinese] [English]

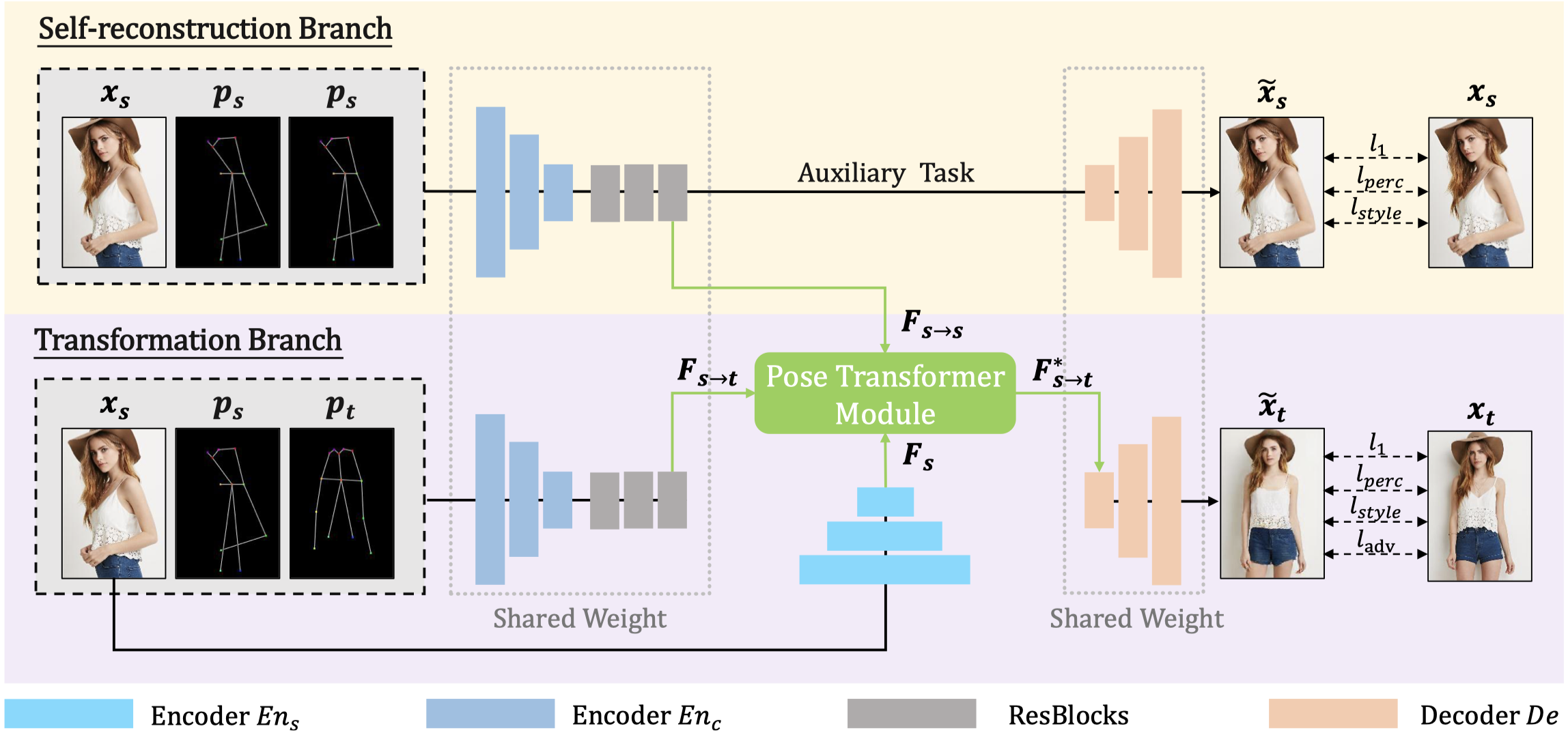

Pose Guided Person Image Generation (PGPIG) is the task of transforming a person image from the source pose to a given target pose. Most existing methods focus only on the ill-posed source-to-target task and fail to capture reasonable texture mapping. To address this problem, we propose a novel Dual-task Pose Transformer Network (DPTN), which introduces an auxiliary task (i.e., the source-to-source task) and exploits the dual-task correlation to improve the performance of PGPIG. The DPTN has a Siamese structure, containing a source-to-source self-reconstruction branch and a transformation branch for source-to-target generation. Because the two branches share part of their weights, the knowledge learned by the source-to-source task can effectively assist the source-to-target learning. Furthermore, we bridge the two branches with a proposed Pose Transformer Module (PTM) to adaptively explore the correlation between features from the dual tasks. Such correlation can establish a fine-grained mapping of all the pixels between the sources and the targets, and promote the source texture transmission to enhance the details of the generated target images. Extensive experiments show that our DPTN outperforms state-of-the-art methods in terms of both PSNR and LPIPS. In addition, our DPTN contains only 9.79 million parameters, significantly fewer than other approaches.
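To make the idea of "correlation between features from the dual tasks" more concrete, here is a minimal pure-Python sketch of scaled dot-product cross-attention, a generic way to let each target-branch pixel gather texture from correlated source-branch pixels. It is not the PTM implementation from this repository; the function names, the choice of queries/keys/values, and the toy numbers are all assumptions made for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Scaled dot-product attention (generic, not the repository's PTM).

    queries: list of d-dim vectors, e.g. source-to-target features per pixel
    keys:    list of d-dim vectors, e.g. source-to-source features per pixel
    values:  list of vectors, one per key, e.g. source texture features
    """
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # Correlation of this query pixel with every key pixel.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys
        ]
        weights = softmax(scores)
        # Weighted sum of the value vectors using the correlation weights.
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
    return outputs


# Toy example: two "pixels" with 2-dim features (hypothetical numbers).
target_feats = [[1.0, 0.0], [0.0, 1.0]]
source_feats = [[10.0, 0.0], [0.0, 10.0]]
source_texture = [[1.0, 2.0], [3.0, 4.0]]
out = cross_attention(target_feats, source_feats, source_texture)
```

In this toy case each target pixel correlates strongly with exactly one source pixel, so its output is dominated by that pixel's texture vector.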

- Python 3.7.9

- PyTorch 1.7.1

- torchvision 0.8.2

- CUDA 11.1

- NVIDIA A100 40GB PCIe

Following PATN, the dataset split files and extracted keypoints files can be obtained as follows:

DeepFashion

- Download the in-shop clothes retrieval benchmark of the DeepFashion dataset, and put it under the ./dataset/fashion directory.

- Download the train/test pairs and train/test keypoint annotations from Google Drive, including fasion-resize-pairs-train.csv, fasion-resize-pairs-test.csv, fasion-resize-annotation-train.csv, fasion-resize-annotation-test.csv, train.lst, and test.lst, and put them under the ./dataset/fashion directory.

- Split the raw images into the training set (./dataset/fashion/train) and test set (./dataset/fashion/test):

python data/generate_fashion_datasets.py

Market1501

- Download the Market1501 dataset from here. Rename bounding_box_train and bounding_box_test to train and test, and put them under the ./dataset/market directory.

- Download the train/test keypoint annotations from Google Drive, including market-pairs-train.csv, market-pairs-test.csv, market-annotation-train.csv, and market-annotation-test.csv. Put these files under the ./dataset/market directory.
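Before training, it can help to confirm that the layout described above is in place. The following is a small sketch rather than part of the repository: `missing_entries` and `EXPECTED` are hypothetical names, and the `-test` annotation file names are assumed to mirror the `-train` ones.

```python
from pathlib import Path

# Entries expected under ./dataset/<name>, taken from the setup steps above.
# The "-annotation-test.csv" names are an assumption (mirroring "-train").
EXPECTED = {
    "fashion": [
        "fasion-resize-pairs-train.csv",
        "fasion-resize-pairs-test.csv",
        "fasion-resize-annotation-train.csv",
        "fasion-resize-annotation-test.csv",
        "train.lst",
        "test.lst",
        "train",
        "test",
    ],
    "market": [
        "market-pairs-train.csv",
        "market-pairs-test.csv",
        "market-annotation-train.csv",
        "market-annotation-test.csv",
        "train",
        "test",
    ],
}


def missing_entries(dataset_root, dataset_name):
    """Return the expected files/folders that are absent for one dataset."""
    root = Path(dataset_root) / dataset_name
    return [name for name in EXPECTED[dataset_name] if not (root / name).exists()]


def report(dataset_root="./dataset"):
    """Print a short status line per dataset."""
    for name in EXPECTED:
        missing = missing_entries(dataset_root, name)
        status = "ok" if not missing else "missing: " + ", ".join(missing)
        print(f"{name}: {status}")
```

Calling `report()` from the project root prints one line per dataset, listing anything that still needs to be downloaded or generated.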

DeepFashion

python train.py --name=DPTN_fashion --model=DPTN --dataset_mode=fashion --dataroot=./dataset/fashion --batchSize 32 --gpu_id=0

Market1501

python train.py --name=DPTN_market --model=DPTN --dataset_mode=market --dataroot=./dataset/market --dis_layers=3 --lambda_g=5 --lambda_rec 2 --t_s_ratio=0.8 --save_latest_freq=10400 --batchSize 32 --gpu_id=0

You can directly download our test results from Google Drive: Deepfashion, Market1501.
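The two training commands above share most options; the Market1501 run only adds a few overrides. As a rough sketch (not part of the repository), the helper below rebuilds both command lines from one shared dictionary so the differences are easy to see. The names `COMMON`, `OVERRIDES`, and `train_argv` are hypothetical, and the sketch assumes the option parser accepts the `--key=value` form for every flag.

```python
import shlex

# Options shared by both runs, copied from the commands above.
COMMON = {"model": "DPTN", "batchSize": 32, "gpu_id": 0}

# Extra options that only the Market1501 command sets.
OVERRIDES = {
    "fashion": {},
    "market": {
        "dis_layers": 3,
        "lambda_g": 5,
        "lambda_rec": 2,
        "t_s_ratio": 0.8,
        "save_latest_freq": 10400,
    },
}


def train_argv(dataset):
    """Build the train.py argument list for 'fashion' or 'market'."""
    options = {
        "name": f"DPTN_{dataset}",
        "dataset_mode": dataset,
        "dataroot": f"./dataset/{dataset}",
    }
    options.update(COMMON)
    options.update(OVERRIDES[dataset])
    return ["python", "train.py"] + [f"--{k}={v}" for k, v in options.items()]


def as_shell(argv):
    """Render an argument list as a copy-pasteable shell command."""
    return " ".join(shlex.quote(arg) for arg in argv)


fashion_cmd = as_shell(train_argv("fashion"))
market_cmd = as_shell(train_argv("market"))
```

Printing `fashion_cmd` and `market_cmd` gives the two command lines; passing the list from `train_argv` to `subprocess.run` would launch the run directly.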

DeepFashion

python test.py --name=DPTN_fashion --model=DPTN --dataset_mode=fashion --dataroot=./dataset/fashion --which_epoch latest --results_dir ./results/DPTN_fashion --batchSize 1 --gpu_id=0

Market1501

python test.py --name=DPTN_market --model=DPTN --dataset_mode=market --dataroot=./dataset/market --which_epoch latest --results_dir=./results/DPTN_market --batchSize 1 --gpu_id=0

We adopt SSIM, PSNR, FID, LPIPS, and a person re-identification (re-id) system for evaluation. For the LPIPS score, please clone the official PerceptualSimilarity repository and put the PerceptualSimilarity folder into the metrics folder.
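For readers unfamiliar with PSNR, the sketch below shows the standard definition, 10 * log10(MAX^2 / MSE), on flat lists of pixel values. It is only an illustration under the assumption of 8-bit images (MAX = 255) and is not the implementation used by the metrics module of this repository; the function name `psnr` is hypothetical.

```python
import math


def psnr(reference, generated, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel lists."""
    if len(reference) != len(generated):
        raise ValueError("inputs must have the same number of pixels")
    # Mean squared error over all pixels.
    mse = sum((r - g) ** 2 for r, g in zip(reference, generated)) / len(reference)
    if mse == 0:
        # Identical images: PSNR is unbounded.
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)


# Hypothetical 4-pixel example; a higher PSNR means a closer reconstruction.
ground_truth = [52, 55, 61, 59]
close_guess = [52, 54, 61, 60]
far_guess = [10, 200, 5, 120]
better = psnr(ground_truth, close_guess) > psnr(ground_truth, far_guess)
```

Higher PSNR is better, while for LPIPS and FID lower values indicate closer agreement with the ground truth.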

- For SSIM, PSNR, FID and LPIPS:

DeepFashion

python -m metrics.metrics --gt_path=./dataset/fashion/test --distorated_path=./results/DPTN_fashion --fid_real_path=./dataset/fashion/train --name=./fashion

Market1501

python -m metrics.metrics --gt_path=./dataset/market/test --distorated_path=./results/DPTN_market --fid_real_path=./dataset/market/train --name=./market --market

- For person re-id system:

Clone the fast-reid code into this project (./fast-reid-master). Move the config and loader of the DeepFashion dataset to ./fast-reid-master/configs/Fashion/bagtricks_R50.yml and ./fast-reid-master/fastreid/data/datasets/fashion.py, respectively. Download the pre-trained network and put it under the ./fast-reid-master/logs/Fashion/bagtricks_R50-ibn/ directory. Then launch:

python ./tools/train_net.py --config-file ./configs/Fashion/bagtricks_R50.yml --eval-only MODEL.WEIGHTS ./logs/Fashion/bagtricks_R50-ibn/model_final.pth MODEL.DEVICE "cuda:0"

Our pre-trained models and logs can be downloaded from Google Drive: Deepfashion[log], Market1501[log].

@InProceedings{Zhang_2022_CVPR,

author = {Zhang, Pengze and Yang, Lingxiao and Lai, Jian-Huang and Xie, Xiaohua},

title = {Exploring Dual-Task Correlation for Pose Guided Person Image Generation},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2022},

pages = {7713-7722}

}

We build our project based on pix2pix. Some dataset preprocessing methods are derived from PATN.