This repository contains the code for our paper "Daedalus: Breaking Non-Maximum Suppression in Object Detection via Adversarial Examples".

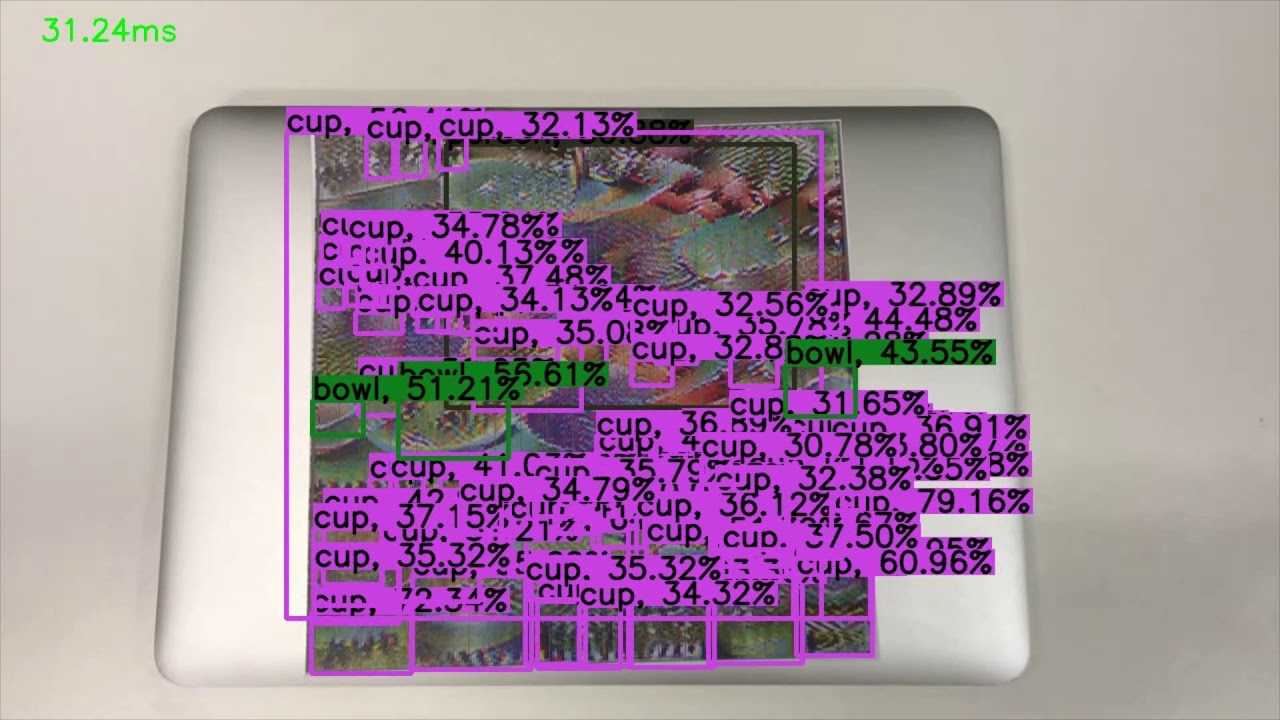

We propose an attack that breaks non-maximum suppression (NMS) in object detection. The strength of the attack can be tuned, and the object category to attack can be specified. As a consequence of the attack, the detection model outputs extremely dense results, because redundant detection boxes are no longer filtered out by NMS.
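For readers unfamiliar with NMS, the following is a minimal NumPy sketch of the standard greedy algorithm. It is not the code used by YOLO-v3, RetinaNet, or this repository; the function names `iou` and `nms` and the 0.5 threshold are assumptions chosen for illustration. It shows the general condition under which NMS stops filtering: boxes whose mutual overlap stays below the IoU threshold are never suppressed, however many of them there are.

```python
import numpy as np

def iou(box, boxes):
    """IoU between one box and an array of boxes, all as [x1, y1, x2, y2]."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)

def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the best-scoring box, drop boxes that overlap it, repeat."""
    order = np.argsort(scores)[::-1]  # indices sorted by descending score
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        if rest.size == 0:
            break
        overlaps = iou(boxes[best], boxes[rest])
        order = rest[overlaps <= iou_threshold]  # survivors go to the next round
    return keep

scores = np.array([0.9, 0.8, 0.7])

# Three near-identical boxes around one object: NMS collapses them to one.
overlapping = np.array([[10, 10, 100, 100],
                        [12, 12, 102, 102],
                        [8, 8, 98, 98]], dtype=float)

# Three small boxes that do not overlap: NMS keeps all of them.
disjoint = np.array([[10, 10, 20, 20],
                     [40, 40, 50, 50],
                     [70, 70, 80, 80]], dtype=float)

print(len(nms(overlapping, scores)))  # 1
print(len(nms(disjoint, scores)))     # 3
```

In the second case every box survives, which is the kind of dense output described above.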

Some results are displayed here:

Adversarial examples made by our L2 attack. The first row contains original images. The third row contains our low-confidence (0.3) adversarial examples. The fifth row contains our high-confidence (0.7) examples. The detection results from YOLO-v3 are in the rows below them. The confidence controls the density of the redundant detection boxes in the detection results.

Launching real-world attacks via a Daedalus poster

We instantiated the Daedalus perturbation as a physical poster. You can watch the demo of the attack on YouTube:

Running the attack against YOLO-v3:

- Download yolo.h5 and put it into '../model';

- Put original images into '../Datasets/COCO/val2017/';

- Run l2_yolov3.py.

Running the attack against RetinaNet:

- Install keras-retinanet;

- Download resnet50_coco_best_v2.1.0.h5 and put it into '../model';

- Put original images into '../Datasets/COCO/val2017/';

- Run l2_retinanet.py.

Running ensemble attack to craft robust adversarial examples:

Run l2_ensemble.py after completing the setup steps above for both the YOLO-v3 and RetinaNet attacks.
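Before launching the ensemble attack, it may help to confirm that the files from both setups are in place. The sketch below is a hypothetical helper, not part of this repository; the name `missing_prerequisites` is an assumption. The paths are the ones listed in the setup steps, resolved relative to the directory that holds the attack scripts. It does not check whether keras-retinanet is installed.

```python
from pathlib import Path

# Paths from the setup steps, relative to the directory with the attack scripts.
# Each entry is (relative path, whether it should be a directory).
REQUIRED = {
    "YOLO-v3 weights": (Path("../model/yolo.h5"), False),
    "RetinaNet weights": (Path("../model/resnet50_coco_best_v2.1.0.h5"), False),
    "COCO val2017 images": (Path("../Datasets/COCO/val2017"), True),
}

def missing_prerequisites(base=Path(".")):
    """Return the labels of required files or folders that are absent or empty."""
    missing = []
    for label, (relative, is_directory) in REQUIRED.items():
        target = Path(base) / relative
        if is_directory:
            # The image folder must exist and contain at least one entry.
            present = target.is_dir() and any(target.iterdir())
        else:
            present = target.is_file()
        if not present:
            missing.append(label)
    return missing

if __name__ == "__main__":
    problems = missing_prerequisites()
    if problems:
        print("Missing before running l2_ensemble.py:", ", ".join(problems))
    else:
        print("All prerequisites found.")
```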

Every attack lets you specify the object categories to target. Crafted adversarial examples are stored as 416×416 .png files in '../adv_examples/...'. The examples can be tested on the official darknet and retinanet implementations.
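Before feeding the crafted examples to another detector, a quick check that each file has the expected 416×416 size can catch a broken run. The following is a minimal sketch using only the Python standard library; the helper names `png_size` and `wrong_sized_examples` are assumptions, and the output directory is left as a parameter because its exact sub-folder depends on the attack that was run.

```python
import struct
from pathlib import Path

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

def png_size(path):
    """Return (width, height) read from the IHDR chunk of a PNG file."""
    with open(path, "rb") as handle:
        header = handle.read(24)
    # Layout: 8-byte signature, 4-byte chunk length, b"IHDR", width, height.
    if len(header) < 24 or header[:8] != PNG_SIGNATURE or header[12:16] != b"IHDR":
        raise ValueError(f"{path} is not a valid PNG file")
    width, height = struct.unpack(">II", header[16:24])
    return width, height

def wrong_sized_examples(directory, expected=(416, 416)):
    """List .png files under `directory` whose size differs from `expected`."""
    return [path for path in sorted(Path(directory).rglob("*.png"))
            if png_size(path) != expected]
```

Calling `wrong_sized_examples` on the folder that holds the crafted examples returns an empty list when every file is 416×416.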

Cite this work:

    @article{wang2021daedalus,
      title={Daedalus: Breaking nonmaximum suppression in object detection via adversarial examples},
      author={Wang, Derui and Li, Chaoran and Wen, Sheng and Han, Qing-Long and Nepal, Surya and Zhang, Xiangyu and Xiang, Yang},
      journal={IEEE Transactions on Cybernetics},
      volume={52},
      number={8},
      pages={7427--7440},
      year={2021},
      publisher={IEEE}
    }