- This repository was made by modifying Parallel WaveGAN.

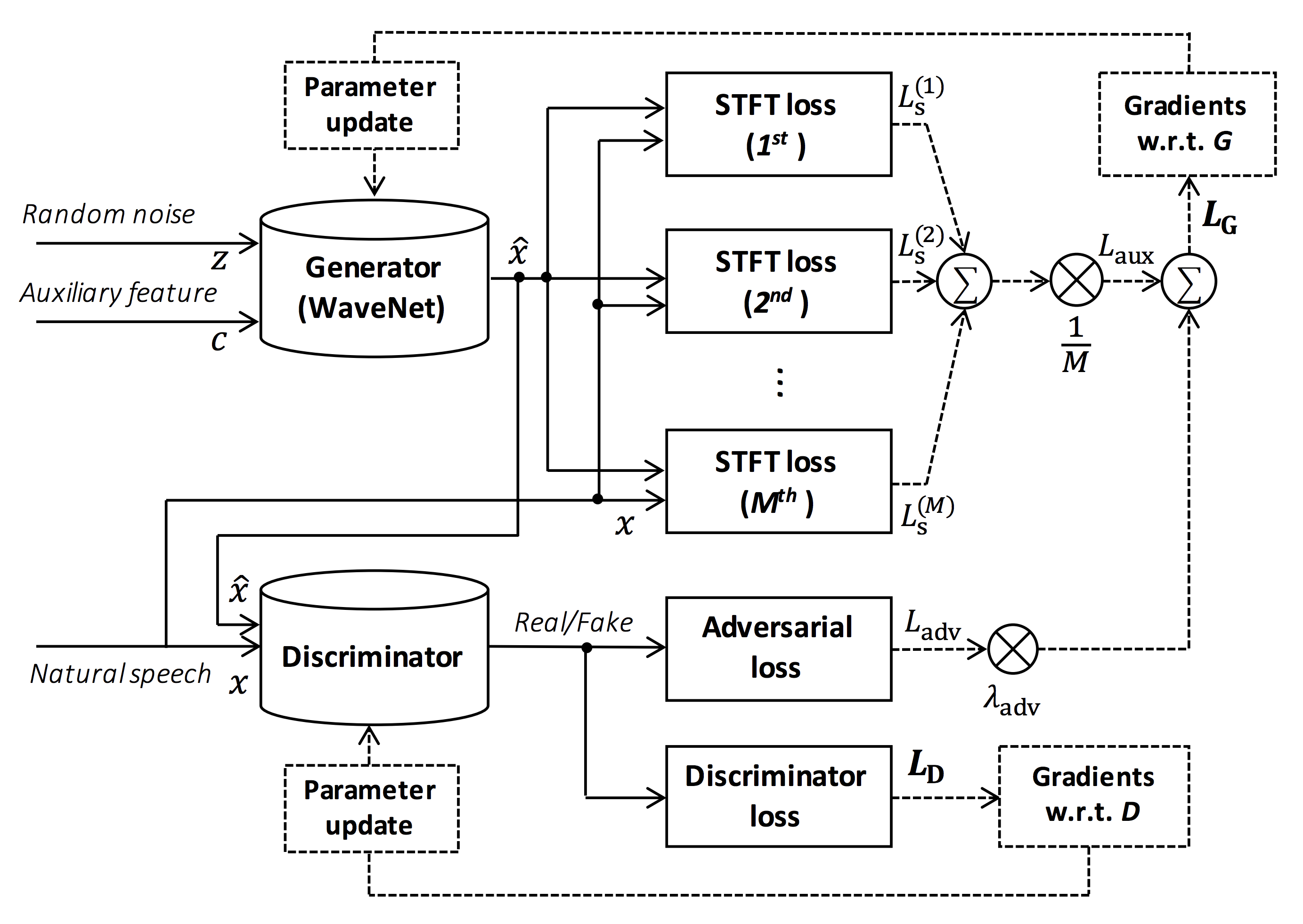

Source of the figure: https://arxiv.org/pdf/1910.11480.pdf

This repository is tested on Ubuntu 18.04 with a Titan V GPU.

- Python 3.6+

- CUDA 10.0

- cuDNN 7+

- NCCL 2+ (for distributed multi-gpu training)

- libsndfile (you can install via `sudo apt install libsndfile-dev` in ubuntu)

- jq (you can install via `sudo apt install jq` in ubuntu)

- sox (you can install via `sudo apt install sox` in ubuntu)
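Before installing, it can help to confirm that the command-line tools listed above are on the PATH. The following is a minimal sketch: `missing_tools` is a hypothetical helper name that is not part of this repository, and it only checks executables (libsndfile is a shared library, so it is not covered here).

```shell
# Hypothetical helper: print the name of every given tool that is not on PATH.
missing_tools() {
    local tool
    for tool in "$@"; do
        # `command -v` succeeds only if the tool can be resolved.
        command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
    done
}

# Prints nothing when all three tools are available.
missing_tools jq sox python3
```

Any name printed by the last line still needs to be installed, for example with the `apt` commands above.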

Other CUDA versions should work but have not been explicitly tested.

All of the code is tested on PyTorch 1.0.1, 1.1, 1.2, 1.3.1, 1.4, and 1.5.1.

PyTorch 1.6 works, but there are some issues in CPU mode (see #198).
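To check whether a given PyTorch version is among the tested versions listed above, something like the following sketch could be used. The function name `is_tested_torch_version` is hypothetical, and the normalization (dropping a `+cuXXX` build suffix and a trailing `.0`, so that `1.1.0` compares equal to `1.1`) is an assumption about how version strings are reported.

```shell
# Versions listed as tested in this README.
TESTED_TORCH_VERSIONS="1.0.1 1.1 1.2 1.3.1 1.4 1.5.1"

# Hypothetical helper: succeed if the given version string is in the tested list.
is_tested_torch_version() {
    local version tested
    # Drop a local build suffix such as "+cu100".
    version="${1%%+*}"
    # Treat "1.1.0" as "1.1" so it matches the list above.
    case "$version" in
        *.*.0) version="${version%.0}" ;;
    esac
    for tested in $TESTED_TORCH_VERSIONS; do
        if [ "$version" = "$tested" ]; then
            return 0
        fi
    done
    return 1
}
```

With PyTorch installed, the version string can be obtained via `python3 -c 'import torch; print(torch.__version__)'` and passed to the function.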

You can select the installation method from two alternatives.

$ git clone https://github.com/kan-bayashi/ParallelWaveGAN.git

$ cd ParallelWaveGAN

$ pip install -e .

# If you want to use distributed training, please install

# apex manually by following https://github.com/NVIDIA/apex

$ ...

# If you use docker and get an error like AttributeError: module 'enum' has no attribute 'IntFlag'

$ pip3 uninstall -y enum34

Note that your CUDA version must exactly match the version used for the PyTorch binary in order to install apex.

To install PyTorch compiled with a different CUDA version, see tools/Makefile.

$ git clone https://github.com/kan-bayashi/ParallelWaveGAN.git

$ cd ParallelWaveGAN/tools

$ make

# If you want to use distributed training, please run the following

# command to install apex.

$ make apex

# Arrange your files like this

    ParallelWaveGAN
      ㄴ egs
        ㄴ kss
          ㄴ voc1
            ㄴ downloads
              ㄴ kss
                ㄴ wavs
                  ㄴ 1_0000.wav
                  ㄴ 1_0001.wav
                  ...

# Create a wavs folder and put all of the KSS wav files, which are originally split across folders 1, 2, 3, and 4, into it.

Note that we specify the CUDA version used to compile the PyTorch wheel.

If you want to use a different CUDA version, please check tools/Makefile and change the PyTorch wheel to be installed.
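The flat `wavs` folder described above (all KSS wav files from folders 1, 2, 3, and 4 gathered in one place) could be prepared with a small script along these lines. This is only a sketch: `flatten_kss_wavs` is a hypothetical helper that is not part of this repository, and it assumes the extracted KSS data sits in a directory that directly contains the folders `1` to `4`.

```shell
# Hypothetical helper: copy every .wav file under the numbered KSS folders
# into a single flat destination directory.
flatten_kss_wavs() {
    local src_dir dst_dir sub
    src_dir="$1"   # assumed: directory that contains the folders 1, 2, 3, 4
    dst_dir="$2"   # e.g. egs/kss/voc1/downloads/kss/wavs
    mkdir -p "$dst_dir"
    for sub in 1 2 3 4; do
        # Skip folders that are not present instead of failing.
        [ -d "$src_dir/$sub" ] || continue
        find "$src_dir/$sub" -type f -name '*.wav' -exec cp {} "$dst_dir/" \;
    done
}
```

From the repository root it would be called as `flatten_kss_wavs <path>/<to>/kss egs/kss/voc1/downloads/kss/wavs`. The files are copied rather than moved, so the original download stays intact.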

This repository provides Kaldi-style recipes, in the same way as ESPnet.

Currently, the following recipes are supported.

To run the recipe, please follow the instructions below.

# Let us move on to the recipe directory

$ cd egs/kss/voc1

# You can select the stage to start and stop

$ ./run.sh --stage 0 --stop_stage 3

# If you want to specify the gpu

$ CUDA_VISIBLE_DEVICES=1 ./run.sh --stage 2

# If you want to resume training from 10000 steps checkpoint

$ ./run.sh --stage 2 --resume <path>/<to>/checkpoint-10000steps.pkl

See more info about the recipes in this README.
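When resuming, one usually wants the most recent checkpoint. The sketch below picks the file with the largest step count from a directory, assuming checkpoints follow the `checkpoint-<N>steps.pkl` naming shown in the resume example above. `latest_checkpoint` is a hypothetical helper, not something provided by the recipe.

```shell
# Hypothetical helper: print the checkpoint-<N>steps.pkl file in directory "$1"
# that has the largest N; return non-zero if none is found.
latest_checkpoint() {
    local f name steps best_steps best_file
    best_steps=-1
    best_file=""
    for f in "$1"/checkpoint-*steps.pkl; do
        [ -e "$f" ] || continue            # glob matched nothing
        name="${f##*/}"                    # strip the directory part
        steps="${name#checkpoint-}"
        steps="${steps%steps.pkl}"
        case "$steps" in
            ''|*[!0-9]*) continue ;;       # ignore names without a numeric step count
        esac
        if [ "$steps" -gt "$best_steps" ]; then
            best_steps="$steps"
            best_file="$f"
        fi
    done
    if [ -n "$best_file" ]; then
        printf '%s\n' "$best_file"
    else
        return 1
    fi
}
```

The result can then be passed to the recipe, for example `./run.sh --stage 2 --resume "$(latest_checkpoint <path>/<to>)"`. Comparing step counts numerically avoids the pitfall of lexical sorting, where `checkpoint-9000steps.pkl` would sort after `checkpoint-10000steps.pkl`.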

- Parallel WaveGAN

- r9y9/wavenet_vocoder

- LiyuanLucasLiu/RAdam

- MelGAN

- descriptinc/melgan-neurips

- Multi-band MelGAN

The author would like to thank Ryuichi Yamamoto (@r9y9) for his great repository, paper, and valuable discussions.

Tomoki Hayashi (@kan-bayashi)

E-mail: hayashi.tomoki<at>g.sp.m.is.nagoya-u.ac.jp