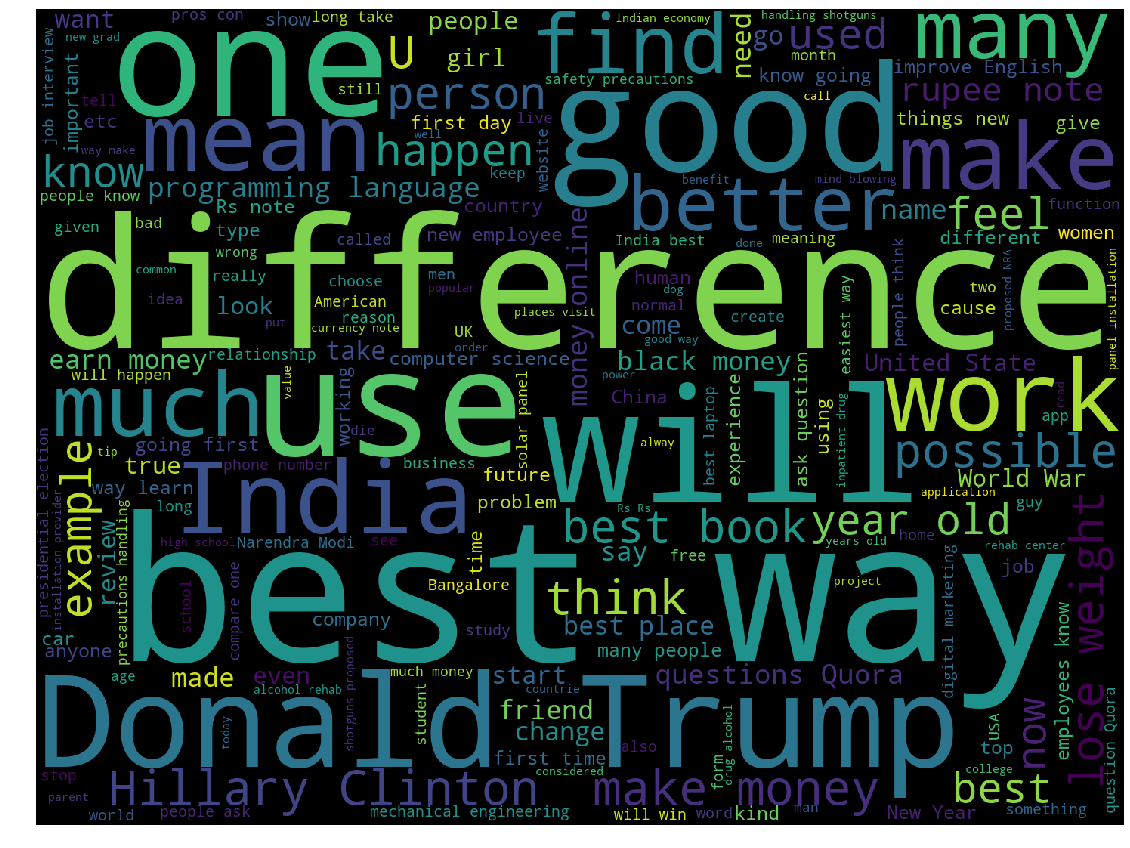

This competition is sponsored by Quora. The objective is to predict whether a question asked on Quora is sincere or not. This is a kernels-only competition with a two-hour runtime constraint.

An insincere question is defined as a question intended to make a statement rather than look for helpful answers. Some characteristics that can signify that a question is insincere:

- has a non-neutral tone

- is disparaging or inflammatory

- isn't grounded in reality

- uses sexual content

Submissions are evaluated on the F1 score between the predicted and the observed targets.
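To make the metric concrete, here is a minimal pure-Python sketch of the F1 score for binary labels. The helper `best_threshold` is my own hypothetical addition: it assumes the model outputs probabilities and that the decision threshold is tuned on validation data, which is a common practice for F1-scored problems but is not part of the competition definition. The candidate grid (0.10 to 0.90) is likewise an assumption.

```python
def f1_score(y_true, y_pred):
    """F1 = harmonic mean of precision and recall for 0/1 labels."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp == 0:
        # No true positives: precision and recall are both zero (or undefined).
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def best_threshold(y_true, probs, candidates=None):
    """Hypothetical helper: pick the cutoff that maximizes F1 on held-out data."""
    if candidates is None:
        # Assumed search grid: 0.10, 0.11, ..., 0.90
        candidates = [i / 100 for i in range(10, 91)]
    best_t, best_f1 = candidates[0], -1.0
    for t in candidates:
        preds = [1 if p >= t else 0 for p in probs]
        score = f1_score(y_true, preds)
        if score > best_f1:  # keep the first threshold that reaches the best score
            best_t, best_f1 = t, score
    return best_t, best_f1
```

Because insincere questions are the minority class, the default cutoff of 0.5 is not necessarily the one that maximizes F1, so a search like this is usually run on out-of-fold predictions before submitting.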

I follow a standard workflow for model development: start with a simple linear model, then add complexity as needed. Eventually, I deploy neural network models with an ensemble technique for the final submission. The steps in my model development were:

- Establish a strong baseline with the hybrid "NB-SVM" model (link to model V0)

- Try the tree-based model LightGBM (link to model V1)

- Try a blending model: "NB-SVM" + LightGBM (link to the blending model V11)

- Establish a baseline for the neural network model (link to model V2)

  - 1st layer: embedding layer without pretrained weights

  - 2nd layer: spatial dropout

  - 3rd layer: bidirectional LSTM

  - 4th layer: global max pooling 1D

  - 5th layer: output dense layer

- Try neural network models with pretrained embedding weights. I used a neural network architecture very similar to the one above. The only changes are 1) adding text cleaning and 2) using pretrained word embedding weights.

  - Neural network with GloVe word embeddings (link to model V30)

  - Neural network with Paragram word embeddings (link to model V31)

  - Neural network with FastText word embeddings (link to model V32)

- Try LSTM with attention and GloVe word embeddings (link to model V40)

- Use both LSTM with attention and a Capsule Neural Network (CapsNet) (link to model V5)
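The five-layer neural network baseline (model V2) listed above can be sketched roughly as follows. This is an illustration only, not the actual kernel code: it assumes Keras (via TensorFlow) as the framework, and the function name `build_baseline` and every hyperparameter (`vocab_size`, `max_len`, `embed_dim`, `lstm_units`, `dropout`) are placeholder values I chose for the example.

```python
from tensorflow.keras import layers, models


def build_baseline(vocab_size=50000, max_len=70, embed_dim=300,
                   lstm_units=64, dropout=0.2):
    """Hypothetical sketch of the V2 baseline; all sizes are assumed values."""
    # Input: a padded sequence of integer word indices
    inputs = layers.Input(shape=(max_len,), dtype="int32")
    # 1st layer: embedding trained from scratch (no pretrained weights)
    x = layers.Embedding(vocab_size, embed_dim)(inputs)
    # 2nd layer: spatial dropout drops whole embedding channels
    x = layers.SpatialDropout1D(dropout)(x)
    # 3rd layer: bidirectional LSTM returning the full sequence
    x = layers.Bidirectional(layers.LSTM(lstm_units, return_sequences=True))(x)
    # 4th layer: global max pooling over the time dimension
    x = layers.GlobalMaxPooling1D()(x)
    # 5th layer: single sigmoid unit giving P(insincere)
    outputs = layers.Dense(1, activation="sigmoid")(x)

    model = models.Model(inputs, outputs)
    model.compile(loss="binary_crossentropy", optimizer="adam")
    return model
```

For the pretrained variants (V30 to V32), the same skeleton would apply with the embedding layer initialized from a GloVe, Paragram, or FastText matrix instead of random weights.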

| model | public score | public leaderboard rank |
|---|---|---|
| model V0 | 0.641 | 1600th (top 66%) |
| model V30 | 0.683 | 1075th (top 40%) |
| model V40 | 0.690 | 700th (top 28%) |
| model V5 | 0.697 | 91st (top 4%) |

https://www.kaggle.com/fizzbuzz/beginner-s-guide-to-capsule-networks

https://www.kaggle.com/ashishpatel26/nlp-text-analytics-solution-quora

https://www.kaggle.com/gmhost/gru-capsule

https://www.kaggle.com/larryfreeman/toxic-comments-code-for-alexander-s-9872-model

https://www.kaggle.com/shujian/single-rnn-with-5-folds-snapshot-ensemble

https://www.kaggle.com/thebrownviking20/analyzing-quora-for-the-insinceres

https://www.kaggle.com/mjbahmani/a-data-science-framework-for-quora

https://www.kaggle.com/christofhenkel/how-to-preprocessing-when-using-embeddings

https://www.kaggle.com/sudalairajkumar/a-look-at-different-embeddings