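- If training results are poor, tune the learning rate and batch size first, because these two hyperparameters largely determine how well training converges. Next, try turning off image augmentation (especially on small datasets), since some augmentation methods can corrupt the data, for example: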

How do you remove augmentation? For example, in the efficientnetv2-b0 config file, `train_pipeline` can be changed to the following:

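```python
train_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(
        type='RandomResizedCrop',
        size=192,
        efficientnet_style=True,
        interpolation='bicubic'),
    # img_norm_cfg is defined elsewhere in the same config file
    dict(type='Normalize', **img_norm_cfg),
    dict(type='ImageToTensor', keys=['img']),
    dict(type='ToTensor', keys=['gt_label']),
    dict(type='Collect', keys=['img', 'gt_label'])
]
```

If your dataset has already been resized to the input shape the network expects, the resizing step (`RandomResizedCrop` above) can be removed as well.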
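If you want to switch augmentation off without hand-editing every step, a small helper can filter the pipeline list by transform `type`. This is a minimal sketch, not part of Awesome-Backbones: `strip_augmentations` and `AUGMENTATION_TYPES` are hypothetical, and the transform names in that set are assumed to match the mmcls-style transforms used by the configs, so check them against your own config file.

```python
from typing import Dict, Iterable, List

# Hypothetical set of augmentation transform names (assumed mmcls-style);
# edit it to match the transforms that actually appear in your config.
AUGMENTATION_TYPES = frozenset({
    "RandomFlip",
    "AutoAugment",
    "RandAugment",
    "ColorJitter",
    "RandomErasing",
    "Lighting",
})


def strip_augmentations(pipeline: Iterable[Dict],
                        drop: Iterable[str] = AUGMENTATION_TYPES) -> List[Dict]:
    """Return a new list without the steps whose 'type' is in `drop`.

    The input pipeline is not modified.
    """
    drop = set(drop)
    return [step for step in pipeline if step.get("type") not in drop]
```

For example, `train_pipeline = strip_augmentations(train_pipeline)` placed at the end of a config would drop any random flips or auto-augment policies; if the images are already at the network's input size, passing `AUGMENTATION_TYPES | {"RandomResizedCrop"}` as `drop` removes the resizing step too.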

2023.12.02

-

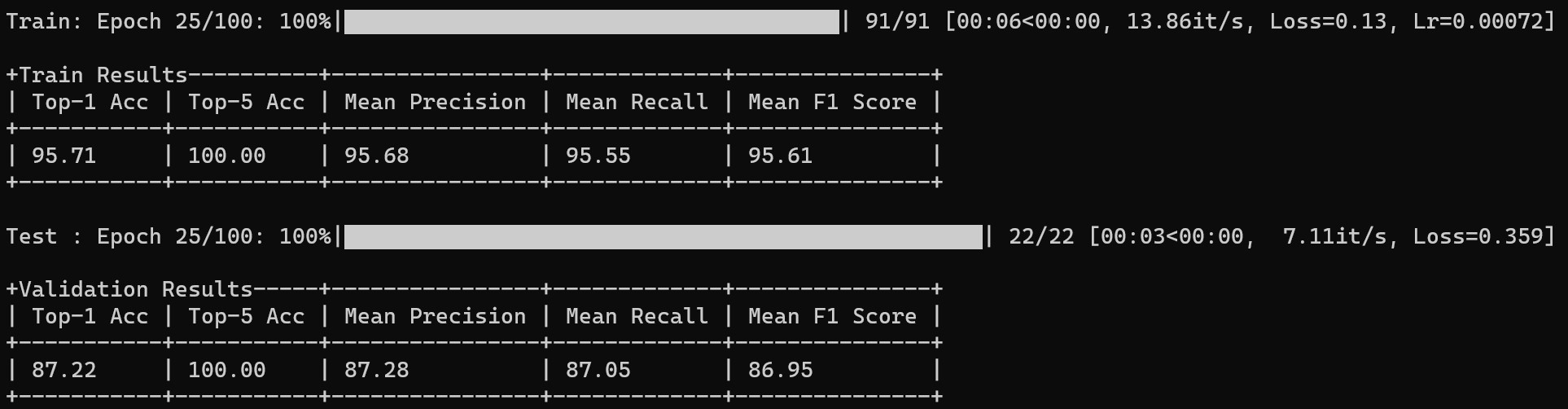

新增Issue中多人提及的输出Train Acc与Val loss

metrics_outputs.csv保存每周期train_loss, train_acc, train_precision, train_recall, train_f1-score, val_loss, val_acc, val_precision, val_recall, val_f1-score方便各位绘图- 终端由原先仅输出Val相关metrics升级为Train与Val都输出
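Since `metrics_outputs.csv` is meant for plotting, here is a minimal sketch of reading it back. It assumes the file has a single header row whose column names are the metric names listed above, with one row per epoch; `load_metrics` and `plot_metrics` are hypothetical helpers, and plotting additionally assumes matplotlib is installed.

```python
import csv
from typing import Dict, List, Sequence


def load_metrics(path: str) -> Dict[str, List[float]]:
    """Read the CSV into {column name: [value for each epoch]}.

    Assumes a header row (train_loss, train_acc, ..., val_f1-score).
    Cells that cannot be parsed as floats are skipped.
    """
    columns: Dict[str, List[float]] = {}
    with open(path, newline="") as f:
        for row in csv.DictReader(f):
            for name, cell in row.items():
                if name is None:
                    continue  # extra cells that have no header
                try:
                    value = float(cell)
                except (TypeError, ValueError):
                    continue
                columns.setdefault(name.strip(), []).append(value)
    return columns


def plot_metrics(columns: Dict[str, List[float]],
                 names: Sequence[str], out_path: str) -> None:
    """Plot the selected columns against the epoch number and save to a file."""
    import matplotlib.pyplot as plt  # optional dependency, imported lazily

    fig, ax = plt.subplots()
    for name in names:
        values = columns[name]
        ax.plot(range(1, len(values) + 1), values, label=name)
    ax.set_xlabel("epoch")
    ax.legend()
    fig.savefig(out_path)
    plt.close(fig)
```

For example, `plot_metrics(load_metrics("metrics_outputs.csv"), ["train_loss", "val_loss"], "loss.png")` would draw both loss curves, assuming the file sits in the working directory.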

2023.08.05

- Added TinyViT (pretrained weights do not match), DeiT3, EdgeNeXt and RevVisionTransformer

2023.03.07

- Added MobileViT, DaViT, RepLKNet, BEiT, EVA, MixMIM and EfficientNetV2

2022.11.20

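- Added an option to choose whether the test set is used as the validation set. If it is not, a validation set is split off from the training set according to `ratio`, by randomly picking one fold of the training set (similar to k-fold but not the same; a small modification turns it into k-fold). See the Training tutorial for details.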
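The fold-based validation split can be pictured with a small sketch. It is an assumption about the mechanics, not the repository's code: `split_by_random_fold` is a hypothetical helper that cuts the training list into roughly `1 / ratio` contiguous folds and holds out one of them, chosen at random unless `fold_idx` is given. Because the folds are contiguous, shuffle the samples first if they are ordered by class.

```python
import random
from typing import List, Optional, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_by_random_fold(samples: Sequence[T], ratio: float,
                         seed: Optional[int] = None,
                         fold_idx: Optional[int] = None) -> Tuple[List[T], List[T]]:
    """Hypothetical sketch: hold out one fold of about ratio * len(samples) items.

    Returns (train, val). The last fold also takes any leftover samples.
    """
    if not 0.0 < ratio < 1.0:
        raise ValueError("ratio must be in (0, 1)")
    num_folds = max(2, round(1 / ratio))
    fold_size = len(samples) // num_folds
    if fold_idx is None:
        # Pick the held-out fold at random, as the changelog entry describes.
        fold_idx = random.Random(seed).randrange(num_folds)
    if not 0 <= fold_idx < num_folds:
        raise ValueError("fold_idx out of range")
    start = fold_idx * fold_size
    end = len(samples) if fold_idx == num_folds - 1 else start + fold_size
    val = list(samples[start:end])
    train = list(samples[:start]) + list(samples[end:])
    return train, val
```

Calling it once for every fold index and training on each resulting split is the small change that turns this into ordinary k-fold cross-validation.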

2022.11.06

- Added the HorNet, EfficientFormer, Swin Transformer V2 and MViT models

- PyTorch 1.7.1+

- Python 3.6+
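A quick way to confirm that an environment meets these minimums is sketched below. `parse_version` and `check_environment` are hypothetical helpers written for this note; `parse_version` keeps only the leading numeric `major.minor.patch` part, so local tags such as `+cu110` are ignored. The code avoids f-strings so it still parses on interpreters older than the minimum it checks for.

```python
import sys
from typing import Tuple

MIN_PYTHON = (3, 6)
MIN_TORCH = (1, 7, 1)


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn e.g. '1.7.1+cu110' into (1, 7, 1); non-numeric suffixes are dropped."""
    core = version.split("+")[0]
    parts = []
    for piece in core.split(".")[:3]:
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        parts.append(int(digits) if digits else 0)
    return tuple(parts)


def check_environment() -> None:
    """Raise RuntimeError if Python or PyTorch is older than the stated minimum."""
    if sys.version_info[:2] < MIN_PYTHON:
        raise RuntimeError("Python 3.6+ required, found {}".format(sys.version.split()[0]))
    try:
        import torch
    except ImportError as exc:
        raise RuntimeError("PyTorch is not installed") from exc
    if parse_version(torch.__version__) < MIN_TORCH:
        raise RuntimeError("PyTorch 1.7.1+ required, found {}".format(torch.__version__))
```

Calling `check_environment()` before training raises a `RuntimeError` naming the first requirement that is not met.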

| Dataset | Video tutorial | AI technology discussion group |
|---|---|---|
| Flower dataset (extraction code: 0zat) | Click here | Group 1: 78174903, Group 3: 584723646 |

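- Complete the setup by following the environment setup guide
- Download the MobileNetV3-Small weights into `datas/`
- From a terminal in the Awesome-Backbones folder, run:

```shell
python tools/single_test.py datas/cat-dog.png models/mobilenet/mobilenet_v3_small.py --classes-map datas/imageNet1kAnnotation.txt
```

- LeNet5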

- AlexNet

- VGG

- DenseNet

- ResNet

- Wide-ResNet

- ResNeXt

- SEResNet

- SEResNeXt

- RegNet

- MobileNetV2

- MobileNetV3

- ShuffleNetV1

- ShuffleNetV2

- EfficientNet

- RepVGG

- Res2Net

- ConvNeXt

- HRNet

- ConvMixer

- CSPNet

- Swin-Transformer

- Vision-Transformer

- Transformer-in-Transformer

- MLP-Mixer

- DeiT

- Conformer

- T2T-ViT

- Twins

- PoolFormer

- VAN

- HorNet

- EfficientFormer

- Swin Transformer V2

- MViT V2

- MobileViT

- DaViT

- RepLKNet

- BEiT

- EVA

- MixMIM

- EfficientNetV2

```bibtex
@misc{2020mmclassification,
    title={OpenMMLab's Image Classification Toolbox and Benchmark},
    author={MMClassification Contributors},
    howpublished = {\url{https://github.com/open-mmlab/mmclassification}},
    year={2020}
}
```