3DV 2022

[Project Page] • [Paper] • [Dataset] • [Video] • [Poster]

- Create a virtual environment via `conda`. This environment was built on an RTX 3090 and can be modified manually.

conda env create -f environment.yml

conda activate panopticnerf

- We evaluate our model on KITTI-360. The structure of the test dataset is shown below. You can download it from here and then put it into `$ROOT`. In the `datasets`, we additionally provide some test files on different scenes.

├── KITTI-360
  ├── 2013_05_28_drive_0000_sync
    ├── image_00
    ├── image_01
  ├── bbx_intersection
    ├── *_00.npz
    ├── *_01.npz
  ├── calibration
    ├── calib_cam_to_pose.txt
    ├── perspective.txt
  ├── data_3d_bboxes
  ├── data_poses
    ├── cam0_to_world.txt
    ├── poses.txt
  ├── pspnet
  ├── sgm
  ├── visible_id

| File | Description |
| --- | --- |
| `image_00/01` | stereo RGB images |
| `pspnet` | 2D pseudo ground truth |
| `sgm` | weak stereo depth supervision |
| `visible_id` | per-frame bounding primitive IDs |
| `data_poses` | system poses in a global Euclidean coordinate system |
| `calibration` | extrinsics and intrinsics of the perspective cameras |
| `bbx_intersection` | ray-mesh intersections, containing depths between hit points and the camera origin, semantic label IDs and bounding primitive IDs |
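Before running the later steps, it can help to confirm that the dataset was unpacked as expected. The following is a minimal sketch, not part of the released code: `missing_entries` and `EXPECTED_ENTRIES` are hypothetical names, and the nesting of the entries under the `KITTI-360` folder is an assumption based on the listing above.

```python
from pathlib import Path

# Entries copied from the dataset listing; their nesting is an assumption.
EXPECTED_ENTRIES = [
    "2013_05_28_drive_0000_sync/image_00",
    "2013_05_28_drive_0000_sync/image_01",
    "bbx_intersection",
    "calibration/calib_cam_to_pose.txt",
    "calibration/perspective.txt",
    "data_3d_bboxes",
    "data_poses/cam0_to_world.txt",
    "data_poses/poses.txt",
    "pspnet",
    "sgm",
    "visible_id",
]


def missing_entries(kitti_root):
    """Return the expected files or folders that do not exist under kitti_root."""
    root = Path(kitti_root)
    return [entry for entry in EXPECTED_ENTRIES if not (root / entry).exists()]
```

Calling `missing_entries("/path/to/KITTI-360")` returns an empty list when every entry is present. Note that `bbx_intersection` is only populated after the ray-mesh intersection step has been run.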

- Generate ray-mesh intersections (`bbx_intersection/*.npz`). The red dots and blue dots indicate where the rays hit into and out of the meshes, respectively. For the given test scene, `START=3353`, `NUM=64`.

For `image_00`:

python mesh_intersection.py intersection_start_frame ${START} intersection_frames ${NUM} use_stereo False

For `image_01`:

python mesh_intersection.py intersection_start_frame ${START} intersection_frames ${NUM} use_stereo True
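To illustrate what an entry depth and an exit depth along a ray look like, here is a simplified sketch that intersects one ray with an axis-aligned box using the slab method. It is an illustration only and not the logic of `mesh_intersection.py`: the real bounding primitives are meshes, and `ray_aabb_depths` is a hypothetical helper.

```python
import numpy as np


def ray_aabb_depths(origin, direction, box_min, box_max, eps=1e-12):
    """Return (t_near, t_far) along the ray, or None if the ray misses the box.

    If `direction` has unit length, the returned values are distances from
    the camera origin to the entry and exit points.
    """
    origin = np.asarray(origin, dtype=float)
    direction = np.asarray(direction, dtype=float)
    box_min = np.asarray(box_min, dtype=float)
    box_max = np.asarray(box_max, dtype=float)

    t_near, t_far = 0.0, np.inf  # starting at 0 discards hits behind the camera
    for axis in range(3):
        if abs(direction[axis]) < eps:
            # Ray is parallel to this slab: it must start between the planes.
            if not (box_min[axis] <= origin[axis] <= box_max[axis]):
                return None
            continue
        t0 = (box_min[axis] - origin[axis]) / direction[axis]
        t1 = (box_max[axis] - origin[axis]) / direction[axis]
        t_near = max(t_near, min(t0, t1))
        t_far = min(t_far, max(t0, t1))
    if t_near > t_far:
        return None
    return t_near, t_far
```

For a ray from the origin along +z and a box spanning z in [2, 4], the function returns an entry depth of 2 and an exit depth of 4.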

- Evaluate the origin of a scene (`center_pose`) and the distance from the origin to the furthest bounding primitive (`dist_min`). Then modify the `.yaml` file accordingly.

python recenter_pose.py recenter_start_frame ${START} recenter_frames ${NUM}
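The two quantities can be pictured with a short sketch. It assumes, purely for illustration, that the scene origin is the mean camera position over the selected frames; `recenter_pose.py` may define it differently. `scene_center_and_extent` is a hypothetical helper.

```python
import numpy as np


def scene_center_and_extent(cam_positions, primitive_vertices):
    """Return (center, furthest_distance).

    cam_positions:      (N, 3) camera centers in world coordinates.
    primitive_vertices: (M, 3) bounding-primitive vertices in the same frame.
    Assumption: the scene origin is taken as the mean camera position.
    """
    cam_positions = np.asarray(cam_positions, dtype=float)
    primitive_vertices = np.asarray(primitive_vertices, dtype=float)
    center = cam_positions.mean(axis=0)
    # Distance from the origin to the furthest primitive vertex.
    furthest = np.linalg.norm(primitive_vertices - center, axis=1).max()
    return center, float(furthest)
```

The returned pair plays the role of `center_pose` and `dist_min` in the configuration file.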

- We provide the training code. Replace `resume False` with `resume True` to load the pretrained model.

python train_net.py --cfg_file configs/panopticnerf_test.yaml pretrain nerf gpus '1,' use_stereo True use_pspnet True use_depth True pseudo_filter True weight_th 0.05 resume False

- Render the semantic map, panoptic map and depth map in a single forward pass, which takes around 16 s per frame on a single 3090 GPU. To reduce inference time, maximize GPU memory utilization by increasing the chunk size. Replace `use_stereo False` with `use_stereo True` to render the right views.

python run.py --type visualize --cfg_file configs/panopticnerf_test.yaml use_stereo False
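The effect of the chunk size can be sketched as follows: rays are rendered in slices, so a larger chunk means fewer forward calls at the cost of more memory per call. This is a generic illustration, not the repository's renderer; `render_in_chunks`, `render_fn` and `chunk_size` are hypothetical names.

```python
import numpy as np


def render_in_chunks(render_fn, rays, chunk_size):
    """Apply render_fn to rays in slices of chunk_size and concatenate the results.

    Assumes at least one ray and that render_fn returns one output row per ray.
    """
    outputs = [
        render_fn(rays[start:start + chunk_size])
        for start in range(0, len(rays), chunk_size)
    ]
    return np.concatenate(outputs, axis=0)
```

The output is identical for any chunk size; only the number of calls to `render_fn` and the peak memory per call change.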

- Visualize novel view appearance & label synthesis. Before rendering, select a frame and generate the corresponding ray-mesh intersections with respect to its novel spiral poses, e.g. `SPIRAL_FRAME=3400`, `NUM=32`.

python mesh_intersection_spiral_trajectory.py intersection_spiral_frame ${SPIRAL_FRAME} intersection_frames ${NUM} use_stereo False

Then render the results of the spiral trajectory. Feel free to modify the code to render from arbitrary poses.

python run.py --type visualize --cfg_file configs/panopticnerf_test_spiral.yaml spiral_frame ${SPIRAL_FRAME} spiral_frame_num ${NUM} use_stereo False
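For readers who want to render from their own poses, the sketch below shows one simple way to build a spiral of camera poses around a reference frame. It is an assumption-laden illustration and not the trajectory used by `mesh_intersection_spiral_trajectory.py`: `spiral_poses`, `radius` and `num_turns` are hypothetical, and the camera rotation is kept fixed.

```python
import numpy as np


def spiral_poses(c2w, num_frames, radius=0.5, num_turns=2):
    """Return (num_frames, 4, 4) camera-to-world poses on an outward spiral.

    c2w is the 4x4 camera-to-world matrix of the selected frame. The camera
    center moves in the camera's x-y plane while the radius grows linearly
    up to `radius`; the orientation is left unchanged.
    """
    c2w = np.asarray(c2w, dtype=float)
    poses = []
    for i in range(num_frames):
        theta = 2.0 * np.pi * num_turns * i / num_frames
        r = radius * (i + 1) / num_frames
        offset_cam = np.array([r * np.cos(theta), r * np.sin(theta), 0.0])
        pose = c2w.copy()
        # Express the camera-frame offset in world coordinates.
        pose[:3, 3] = c2w[:3, 3] + c2w[:3, :3] @ offset_cam
        poses.append(pose)
    return np.stack(poses)
```

With `num_frames=32`, the result has shape `(32, 4, 4)`. Ray-mesh intersections would still need to be generated for any new poses before rendering.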

├── KITTI-360
  ├── gt_2d_semantics
  ├── gt_2d_panoptics
  ├── lidar_depth

- Download the released pretrained model and put it at `$ROOT/data/trained_model/panopticnerf/panopticnerf_test/latest.pth`.

- We provide some semantic & panoptic GTs and LiDAR point clouds for evaluation. The details of the evaluation metrics can be found in the paper.

- Eval mean intersection-over-union (mIoU)

python run.py --type eval_miou --cfg_file configs/panopticnerf_test.yaml use_stereo False
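As a reminder of what the metric measures, here is a minimal mIoU sketch built on a confusion matrix. It is not the code behind `--type eval_miou`: `mean_iou` is a hypothetical helper, the ignore label of 255 is an assumption, and predictions are assumed to lie in `[0, num_classes)`.

```python
import numpy as np


def mean_iou(pred, gt, num_classes, ignore_label=255):
    """Mean IoU over the classes that appear in the prediction or ground truth."""
    pred = np.asarray(pred).ravel().astype(np.int64)
    gt = np.asarray(gt).ravel().astype(np.int64)
    valid = gt != ignore_label  # assumption: 255 marks unlabeled pixels
    # Confusion matrix: rows are ground-truth classes, columns are predictions.
    conf = np.bincount(
        gt[valid] * num_classes + pred[valid], minlength=num_classes ** 2
    ).reshape(num_classes, num_classes)
    intersection = np.diag(conf)
    union = conf.sum(axis=0) + conf.sum(axis=1) - intersection
    present = union > 0
    return float((intersection[present] / union[present]).mean())
```

Classes that occur in neither the prediction nor the ground truth are excluded from the mean, so they do not pull the score toward zero.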

- Eval panoptic quality (PQ)

sh eval_pq_test.sh
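The definition of panoptic quality can be sketched in a few lines: segments are matched when their IoU exceeds 0.5, and PQ is the sum of matched IoUs divided by TP + 0.5 FP + 0.5 FN. The version below is a simplified, class-agnostic illustration and not what `eval_pq_test.sh` runs; `panoptic_quality` is a hypothetical helper and `void_id=0` is an assumption.

```python
import numpy as np


def panoptic_quality(pred_ids, gt_ids, void_id=0):
    """Class-agnostic PQ for two segment-ID maps of the same shape."""
    pred_ids = np.asarray(pred_ids)
    gt_ids = np.asarray(gt_ids)
    pred_segments = [i for i in np.unique(pred_ids) if i != void_id]
    gt_segments = [i for i in np.unique(gt_ids) if i != void_id]

    iou_sum, tp = 0.0, 0
    matched_pred = set()
    for g in gt_segments:
        g_mask = gt_ids == g
        for p in pred_segments:
            if p in matched_pred:
                continue
            p_mask = pred_ids == p
            inter = np.logical_and(g_mask, p_mask).sum()
            union = np.logical_or(g_mask, p_mask).sum()
            iou = inter / union
            if iou > 0.5:  # an IoU above 0.5 makes the match unique
                iou_sum += iou
                tp += 1
                matched_pred.add(p)
                break
    fp = len(pred_segments) - tp
    fn = len(gt_segments) - tp
    denom = tp + 0.5 * fp + 0.5 * fn
    return float(iou_sum / denom) if denom > 0 else 0.0
```

A full evaluation additionally requires matched segments to share a semantic class and averages PQ over classes.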

- Eval depth with 0-100m LiDAR point clouds, where the far depth can be adjusted to evaluate the closer scene.

python run.py --type eval_depth --cfg_file configs/panopticnerf_test.yaml use_stereo False max_depth 100.
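The role of `max_depth` can be illustrated with a small sketch that restricts the error computation to LiDAR points within the chosen range. RMSE is used here as one common depth metric; the exact metrics are those described in the paper. `depth_rmse` is a hypothetical helper, and storing pixels without a LiDAR return as 0 is an assumption.

```python
import numpy as np


def depth_rmse(pred_depth, lidar_depth, max_depth=100.0):
    """RMSE over pixels with a LiDAR depth in (0, max_depth]."""
    pred = np.asarray(pred_depth, dtype=float)
    gt = np.asarray(lidar_depth, dtype=float)
    # Assumption: pixels without a LiDAR return are stored as 0.
    valid = (gt > 0) & (gt <= max_depth)
    if not valid.any():
        return float("nan")
    return float(np.sqrt(np.mean((pred[valid] - gt[valid]) ** 2)))
```

Lowering `max_depth` drops distant points from the mask, which is how the evaluation can be focused on the closer part of the scene.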

- Eval Multi-view Consistency (MC)

python eval_consistency.py --cfg_file configs/panopticnerf_test.yaml use_stereo False consistency_thres 0.1

@inproceedings{fu2022panoptic,

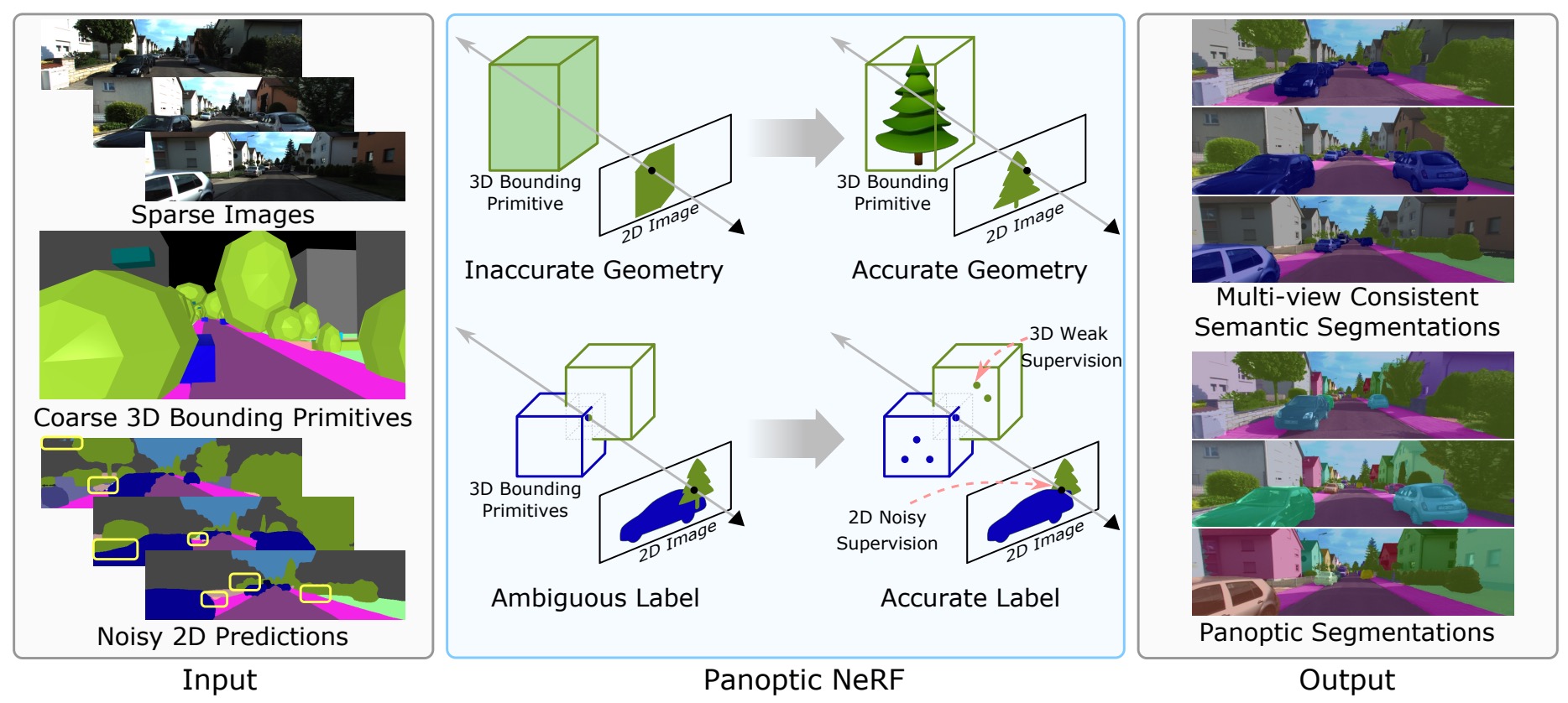

title={Panoptic NeRF: 3D-to-2D Label Transfer for Panoptic Urban Scene Segmentation},

author={Fu, Xiao and Zhang, Shangzhan and Chen, Tianrun and Lu, Yichong and Zhu, Lanyun and Zhou, Xiaowei and Geiger, Andreas and Liao, Yiyi},

booktitle = {International Conference on 3D Vision (3DV)},

year = {2022}

}Copyright © 2022, Zhejiang University. All rights reserved. We favor any positive inquiry, please contact lemonaddie0909@zju.edu.cn.