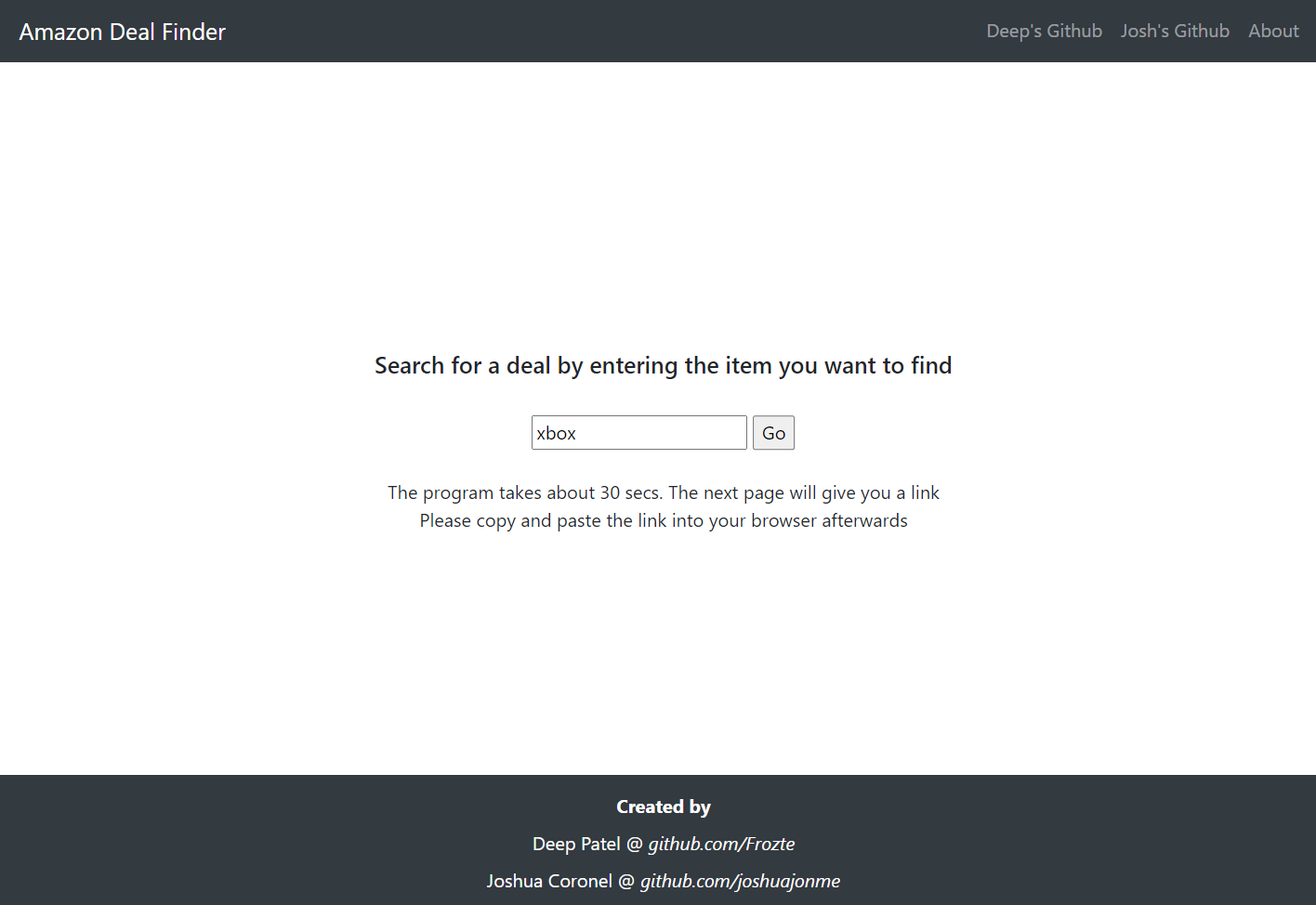

A Flask-enabled web scraping service that finds the best product deals on Amazon.

Visit the link 🔗HERE to try out the scraper for yourself. Please be patient: in its current state, the process takes a little while to run.

The output is returned in JSON format. You can then click the URL, or copy and paste it into your browser.

    {
      "Discount": 40.00800160032007,
      "Name": "Just Dance 2021 Xbox Series X|S, Xbox One",
      "Previous price": 49.99,
      "Price": 29.99,
      "Prime product": true,
      "URL": "https://www.amazon.com/Just-Dance-2021-Xbox-One/dp/B08GQW447N/ref=sr_1_16?dchild=1&keywords=xbox&qid=1606806822&sr=8-16"
    }
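The `Discount` field in the sample above appears to be the percentage saved relative to `Previous price`. The short sketch below parses that sample and recomputes the value. The function name `discount_percent` is hypothetical and the formula is an assumption inferred from the sample numbers, not taken from the project's source.

```python
import json

# Sample response copied from the output shown above.
SAMPLE_RESPONSE = """
{
  "Discount": 40.00800160032007,
  "Name": "Just Dance 2021 Xbox Series X|S, Xbox One",
  "Previous price": 49.99,
  "Price": 29.99,
  "Prime product": true,
  "URL": "https://www.amazon.com/Just-Dance-2021-Xbox-One/dp/B08GQW447N/ref=sr_1_16?dchild=1&keywords=xbox&qid=1606806822&sr=8-16"
}
"""


def discount_percent(previous_price, price):
    """Percentage saved relative to the previous price (assumed formula)."""
    return (previous_price - price) / previous_price * 100


deal = json.loads(SAMPLE_RESPONSE)
recomputed = discount_percent(deal["Previous price"], deal["Price"])

# Compare the recomputed value with the one reported by the service.
print(f'{deal["Name"]}: {recomputed:.2f}% off (reported: {deal["Discount"]:.2f}%)')
```

Note that the JSON `true` is parsed into the Python boolean `True`, so `deal["Prime product"]` can be used directly in a condition.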

You will need to download a chromedriver.exe that is compatible with your version of Google Chrome. To check your Chrome version, navigate to 'Settings > About Chrome' and note the version number. Then proceed to this link and download the driver that matches your version. Take note of the file path of this driver for the steps in the Usage section.

Other prerequisites include:

- Flask >= 1.1.2
- Selenium >= 3.141
- Requests >= 2.25

For local usage, open the price_scraper.py file and comment/uncomment the following lines. The code can be found on lines 34, 42, and 43.

34: `#options.binary_location = os.environ.get("GOOGLE_CHROME_BIN")`

42: `#driver = webdriver.Chrome(executable_path=os.environ.get("CHROMEDRIVER_PATH"), options=options)`

43: `driver = webdriver.Chrome("chromedriver.exe", options=options)`

The path to "chromedriver.exe" must also be changed to match the location of the driver on your machine.
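As an alternative to commenting and uncommenting lines by hand, the choice between the deployed and local settings could be made at runtime. The following is only a sketch, not the project's code: the helper names `resolve_driver_settings` and `build_driver` are hypothetical, and it assumes the Selenium 3.x call style used in the lines above (newer Selenium releases pass the driver path differently).

```python
import os


def resolve_driver_settings(env=os.environ, local_driver_path="chromedriver.exe"):
    """Return (chrome_binary, driver_path).

    If both environment variables used by the deployed version are set,
    use them; otherwise fall back to a local chromedriver path.
    """
    chrome_bin = env.get("GOOGLE_CHROME_BIN")
    driver_path = env.get("CHROMEDRIVER_PATH")
    if chrome_bin and driver_path:
        return chrome_bin, driver_path
    return None, local_driver_path


def build_driver(env=os.environ, local_driver_path="chromedriver.exe"):
    """Create a Chrome driver (assumes the Selenium 3.x API)."""
    # Imported lazily so the settings helper works without Selenium installed.
    from selenium import webdriver

    options = webdriver.ChromeOptions()
    chrome_bin, driver_path = resolve_driver_settings(env, local_driver_path)
    if chrome_bin:
        options.binary_location = chrome_bin
    return webdriver.Chrome(executable_path=driver_path, options=options)
```

With this approach, a local run only needs `local_driver_path` to point at your downloaded driver, and the deployed run picks up its settings from the environment.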

After completing the above, run the code with `python app.py`.

While the code works properly, we would like to make the process run faster. The current deployment scrapes 1 page, which we wish to increase to 5. However, we are limited to 500 MB and about 30 seconds of runtime before Heroku times out with its infamous H12 error. The current code runs in about 20 seconds on average. We are already looking for ways to improve this. If you wish to contribute, please create a pull request and we will add you as a contributor.
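One possible direction for contributors, offered only as a sketch and not as the project's current behavior: scrape as many pages as fit within a time budget and return partial results before the roughly 30-second limit is reached. The names `scrape_within_budget` and `scrape_page` are hypothetical, and the 25-second default budget is an assumed safety margin.

```python
import time


def scrape_within_budget(scrape_page, max_pages=5, budget_seconds=25.0,
                         clock=time.monotonic):
    """Call scrape_page(page) for pages 1..max_pages while time allows.

    scrape_page is a hypothetical callable returning a list of deals.
    A new page is started only if the slowest page seen so far would
    still fit inside the remaining budget.
    """
    start = clock()
    results = []
    slowest_page = 0.0
    for page in range(1, max_pages + 1):
        elapsed = clock() - start
        if elapsed + slowest_page > budget_seconds:
            break  # stop early and return what we already have
        page_start = clock()
        results.extend(scrape_page(page))
        slowest_page = max(slowest_page, clock() - page_start)
    return results
```

This does not make any single page faster; it only trades completeness for a lower risk of hitting the timeout.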

👤 Deep Patel

- Website: www.mrdeeppatel.com

- Github: @Frozte

- LinkedIn: @Deep Patel

👤 Joshua Coronel

- Github: @joshuajonme

- LinkedIn: @Joshua Coronel

Initial code was forked from KalleHallden

Give a ⭐️ if this project helped you!

Copyright © 2020 Deep Patel & @Joshua Coronel.

This project is MIT licensed.

This README was generated with readme-md-generator