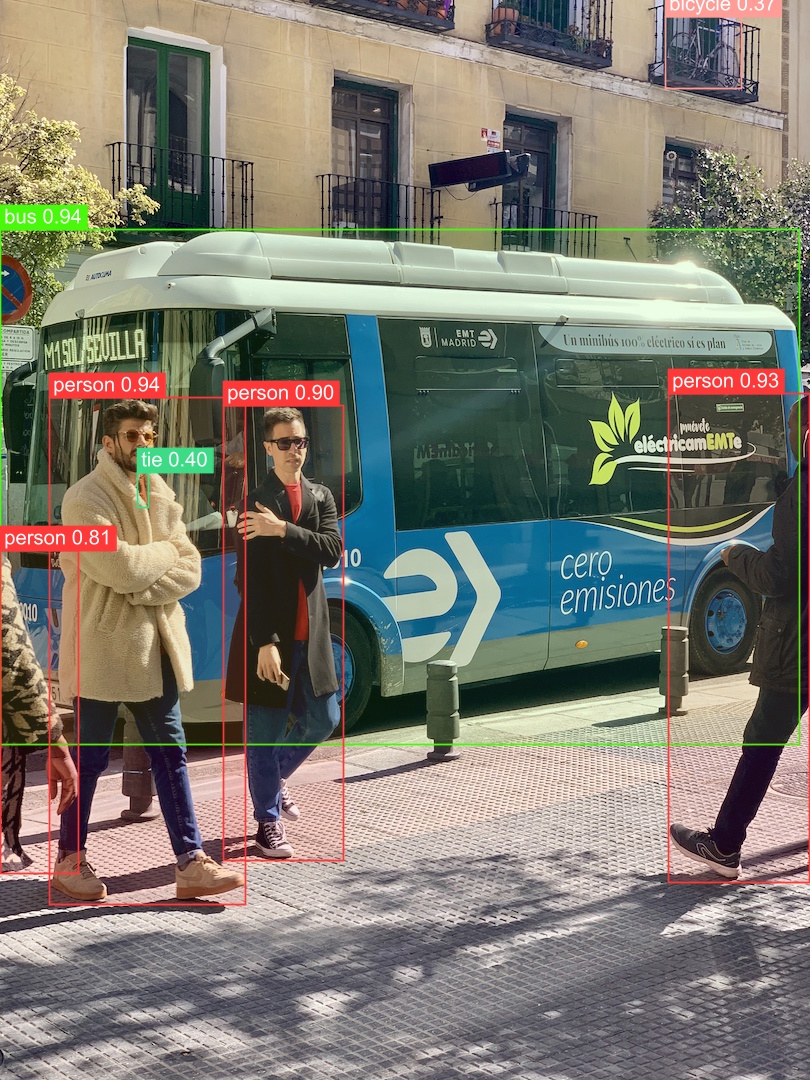

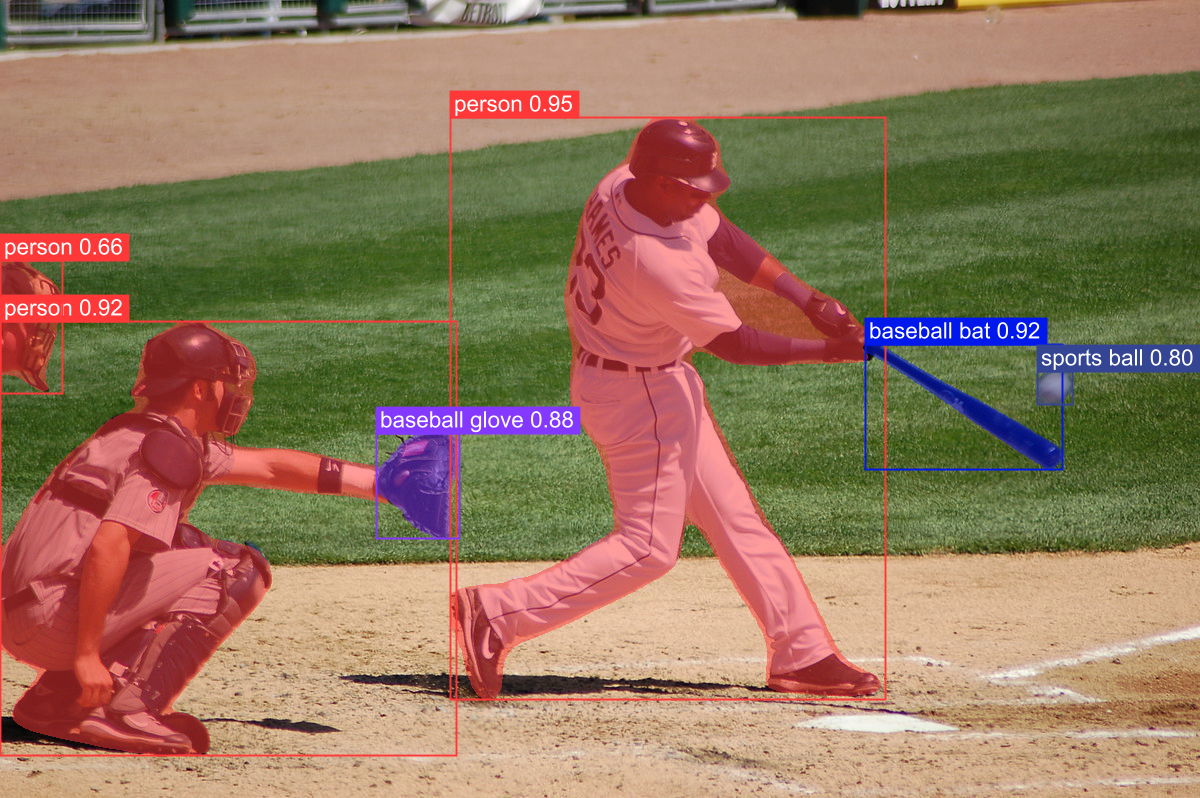

Use YOLOv8 in real time for object detection, instance segmentation, pose estimation, and image classification via ONNX Runtime.

The YoloV8 project is available as two NuGet packages: YoloV8 and YoloV8.Gpu. To run on the CPU, add the YoloV8 package reference to your project (it references the Microsoft.ML.OnnxRuntime package):

```shell
dotnet add package YoloV8
```

To run on the GPU, add the YoloV8.Gpu package reference instead (it references the Microsoft.ML.OnnxRuntime.Gpu package):

```shell
dotnet add package YoloV8.Gpu
```

Run this Python code to export the model in ONNX format:

```python
from ultralytics import YOLO

# Load a model
model = YOLO('path/to/best')

# Export the model to ONNX format
model.export(format='onnx')
```

Load the exported model in C# and run detection:

```csharp
using Compunet.YoloV8;
using SixLabors.ImageSharp;

using var predictor = YoloV8Predictor.Create("path/to/model");

// Synchronous detection
var result = predictor.Detect("path/to/image");

// Or, asynchronously (use one of the two, not both):
// var result = await predictor.DetectAsync("path/to/image");

Console.WriteLine(result);
```

You can plot the results onto the input image to preview the model's predictions. This code demonstrates how to run a prediction, plot the results, and save them to a file:

```csharp
using Compunet.YoloV8;
using Compunet.YoloV8.Plotting;
using SixLabors.ImageSharp;

// Load the input image and create the predictor
using var image = Image.Load("path/to/image");
using var predictor = YoloV8Predictor.Create("path/to/model");

// Run pose estimation, then draw the results onto a copy of the image
var result = await predictor.PoseAsync(image);
using var plotted = await result.PlotImageAsync(image);

plotted.Save("./pose_demo.jpg");
```

You can also predict and save to file in one operation:

```csharp
using Compunet.YoloV8;
using Compunet.YoloV8.Plotting;
using SixLabors.ImageSharp;

using var predictor = YoloV8Predictor.Create("path/to/model");

// Await the call so the file is written before the predictor is disposed
await predictor.PredictAndSaveAsync("path/to/image");
```

AGPL-3.0 License