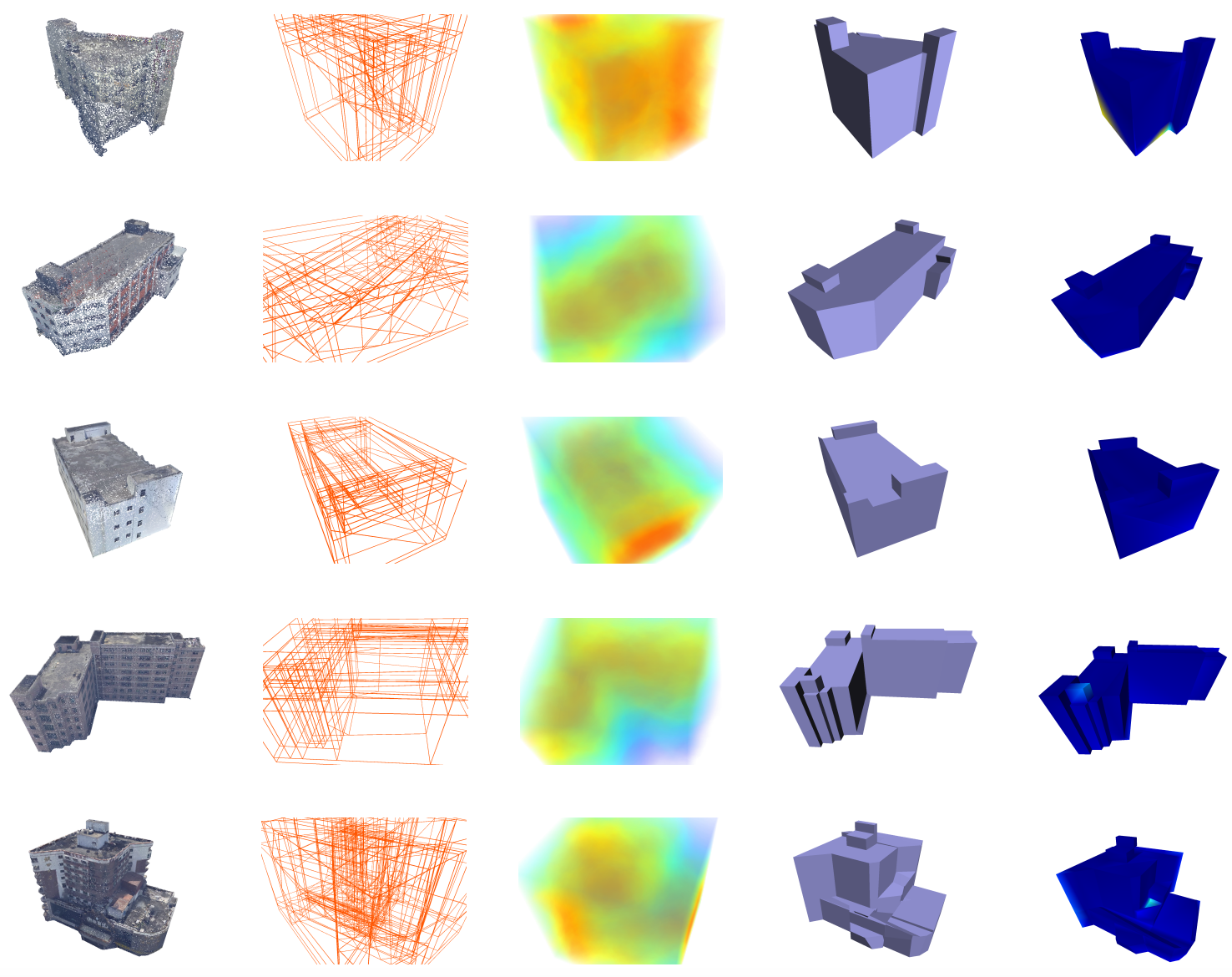

Points2Poly is an implementation of the paper Reconstructing Compact Building Models from Point Clouds Using Deep Implicit Fields, which incorporates learnable implicit surface representation into explicitly constructed geometry.

Due to clutter concerns, the core module is separately maintained in the abspy repository (also available as a PyPI package), while this repository acts as a wrapper with additional sources and instructions in particular for building reconstruction.

The prerequisites are two-fold: one from abspy with functionalities on vertex group, cell complex, and adjacency graph; the other one from points2surf that facilitates occupancy estimation.

Clone this repository with submodules:

git clone --recurse-submodules https://github.com/chenzhaiyu/points2poly

In case you already cloned the repository but forgot --recurse-submodules:

git submodule update --init

Follow the instructions to install abspy with its dependencies; abspy itself can be installed via PyPI:

# local version (stable)

pip install ./abspy

# PyPI version (latest)

pip install abspy

Install the dependencies for points2surf:

pip install -r points2surf/requirements.txt

For training, make sure CUDA is available and enabled.

Navigate to points2surf/README.md for more details on its requirements.
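One quick way to check the CUDA requirement before training is sketched below. It assumes the PyTorch backend that points2surf relies on; the helper name `cuda_status` is hypothetical and not part of this repository.

```python
import importlib.util


def cuda_status() -> str:
    """Return a short, human-readable summary of CUDA availability."""
    # Avoid a hard failure when PyTorch has not been installed yet.
    if importlib.util.find_spec("torch") is None:
        return "PyTorch is not installed"

    import torch

    if not torch.cuda.is_available():
        return "PyTorch is installed, but CUDA is not available"
    # Report the first visible GPU by name.
    return f"CUDA is available: {torch.cuda.get_device_name(0)}"


if __name__ == "__main__":
    print(cuda_status())
```

If the check reports that CUDA is not available, the installed PyTorch build may be CPU-only or the GPU driver may be missing.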

In addition, install dependencies for logging:

pip install -r requirements.txt

Download a mini dataset of 6 buildings from the Helsinki 3D city models, and a pre-trained full-view model:

python download.py dataset_name='helsinki_mini' model_name='helsinki_fullview'

Run reconstruction on the mini dataset:

python reconstruct.py dataset_name='helsinki_mini' model_name='helsinki_fullview'

Evaluate the reconstruction results by Hausdorff distance:

python evaluate.py dataset_name='helsinki_mini'

The reconstructed building models and statistics can be found under ./outputs/helsinki_mini/reconstructed.
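For readers unfamiliar with the metric, the symmetric Hausdorff distance between two point sets can be sketched as below. This is a brute-force illustration on point samples, not necessarily how evaluate.py computes it; the function names are hypothetical.

```python
import math
from typing import Sequence

Point = Sequence[float]


def directed_hausdorff(source: Sequence[Point], target: Sequence[Point]) -> float:
    """Largest distance from any source point to its nearest target point."""
    return max(min(math.dist(p, q) for q in target) for p in source)


def hausdorff(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Symmetric Hausdorff distance: the worse of the two directed distances."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))


if __name__ == "__main__":
    reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    reconstructed = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 2.0, 0.0)]
    # The extra point (1, 2, 0) lies 2 units from its nearest reference point.
    print(hausdorff(reference, reconstructed))  # 2.0
```

The brute-force search costs O(nm) distance evaluations; for dense samples a spatial index such as a k-d tree would be the usual choice.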

Download the Helsinki dataset from OneDrive, including meshes, point clouds, and queries with distances.

- Convert point clouds into NumPy binary files (.npy). Place point cloud files (e.g., .ply, .obj, .stl and .off) under ./datasets/{dataset_name}/00_base_pc, then run points2surf/make_pc_dataset.py, or do the conversion manually.

- Extract planar primitives from point clouds with Mapple. In Mapple, use Point Cloud - RANSAC primitive extraction to extract planar primitives, then save the vertex group files (.vg or .bvg) into ./datasets/{dataset_name}/06_vertex_group.

- Run reconstruction the same way as in the demo. Note, however, that you might need to retrain a model that conforms to your data's characteristics.
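The manual conversion mentioned in the first step could look roughly like the sketch below, which reads the vertices of an ASCII .off file and saves them as an (N, 3) array. The helper name, the float32 dtype, and the output path are assumptions for illustration; check points2surf/make_pc_dataset.py for the naming and layout that the pipeline actually expects.

```python
from pathlib import Path

import numpy as np


def off_vertices_to_npy(off_path: Path, npy_path: Path) -> np.ndarray:
    """Read the vertices of an ASCII OFF file and save them as an (N, 3) array."""
    # Keep only meaningful lines: drop blanks and '#' comments.
    lines = [
        line.strip()
        for line in off_path.read_text().splitlines()
        if line.strip() and not line.lstrip().startswith("#")
    ]
    if not lines or not lines[0].startswith("OFF"):
        raise ValueError(f"{off_path} does not look like an OFF file")

    # The element counts may follow 'OFF' on the same line or sit on the next one.
    header_rest = lines[0][3:].split()
    if header_rest:
        counts, body = header_rest, lines[1:]
    else:
        counts, body = lines[1].split(), lines[2:]
    n_vertices = int(counts[0])

    # Only the first three columns (x, y, z) of each vertex row are kept.
    points = np.array(
        [[float(v) for v in row.split()[:3]] for row in body[:n_vertices]],
        dtype=np.float32,  # assumed dtype, not confirmed by the repository
    )
    if points.shape != (n_vertices, 3):
        raise ValueError("unexpected vertex block in OFF file")

    np.save(npy_path, points)
    return points
```

Faces are ignored here, so a mesh file is treated as a bare set of vertices; sampling points on the surface would need a mesh library instead.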

Prepare meshes and place them under datasets/{dataset_name}, mimicking the structure of the provided data. Refer to these instructions for creating training data through BlenSor simulation.

- Separate abspy/points2surf from points2poly wrappers

- Config with hydra

- Short tutorial on how to get started

- Host generated data

The implementation of Points2Poly has greatly benefited from Points2Surf. In addition, the implementation of the abspy submodule is backed by great open-source libraries including SageMath, NetworkX, and Easy3D.

If you use Points2Poly in a scientific work, please consider citing the paper:

@article{chen2022points2poly,
  title = {Reconstructing compact building models from point clouds using deep implicit fields},
  journal = {ISPRS Journal of Photogrammetry and Remote Sensing},
  volume = {194},
  pages = {58-73},
  year = {2022},
  issn = {0924-2716},
  doi = {https://doi.org/10.1016/j.isprsjprs.2022.09.017},
  url = {https://www.sciencedirect.com/science/article/pii/S0924271622002611},
  author = {Zhaiyu Chen and Hugo Ledoux and Seyran Khademi and Liangliang Nan}
}