Restricted Boltzmann Machines as a Keras Layer

Boltzmann machines are unsupervised, energy-based probabilistic models (also called generative models). This means that they associate an energy with each configuration of the variables that one wants to model.

Intuitively, learning in these models corresponds to assigning lower energies to more likely configurations.
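To make the link between energy and probability concrete, here is a minimal sketch of how an energy-based model turns energies into probabilities, assuming the usual Boltzmann form p = exp(-E) / Z. The function name `boltzmann_probabilities` and the three energies are made up for illustration; they are not taken from the original code.

```python
import numpy as np

def boltzmann_probabilities(energies):
    """Turn a vector of energies into probabilities p = exp(-E) / Z."""
    energies = np.asarray(energies, dtype=float)
    # Subtracting the minimum energy keeps exp() numerically stable
    # and does not change the normalized result.
    unnormalized = np.exp(-(energies - energies.min()))
    return unnormalized / unnormalized.sum()

# Hypothetical energies for three configurations of a toy model.
energies = [0.5, 2.0, 4.0]
probs = boltzmann_probabilities(energies)
print(probs)  # the lowest-energy configuration gets the highest probability
```

Lowering the energy of a configuration during training therefore raises its probability relative to all the others.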

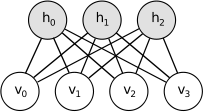

The units of the model are split into two groups: visible units, denoted by v, and hidden units, denoted by h.

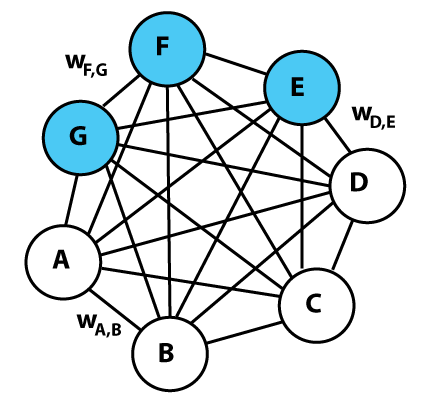

A general Boltzmann Machine is shown in the accompanying figure.

In fact, general Boltzmann Machines are so complicated to train that they have yet to prove practically useful, so we have to restrict them in some way. Restricted Boltzmann Machines fulfill this role.

They are Boltzmann Machines with the restriction that there are no direct connections between visible units or between hidden units.
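The practical payoff of this restriction can be sketched in a few lines of NumPy. The snippet below assumes the standard binary RBM energy E(v, h) = -b·v - c·h - vᵀWh, with W of shape (visible, hidden), visible biases b, and hidden biases c. All names and sizes here are illustrative assumptions, not the Keras layer from the original code. Because there are no hidden-to-hidden connections, the hidden units are conditionally independent given v, so all of their activation probabilities come out of a single matrix product.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def rbm_energy(v, h, W, b, c):
    """E(v, h) = -b.v - c.h - v^T W h for binary vectors v and h."""
    return -(b @ v) - (c @ h) - (v @ W @ h)

def hidden_probabilities(v, W, c):
    """p(h_j = 1 | v) for every hidden unit j, computed at once."""
    return sigmoid(c + v @ W)

def visible_probabilities(h, W, b):
    """p(v_i = 1 | h) for every visible unit i, computed at once."""
    return sigmoid(b + W @ h)

# Toy sizes and random parameters, chosen only for illustration.
rng = np.random.default_rng(0)
n_visible, n_hidden = 6, 3
W = rng.normal(scale=0.1, size=(n_visible, n_hidden))
b = np.zeros(n_visible)
c = np.zeros(n_hidden)

v = rng.integers(0, 2, size=n_visible).astype(float)
p_h = hidden_probabilities(v, W, c)
h = (rng.random(n_hidden) < p_h).astype(float)  # sample h given v
p_v = visible_probabilities(h, W, b)            # one half-step back to v

print(rbm_energy(v, h, W, b, c), p_h, p_v)
```

Alternating these two conditionals is the Gibbs sampling step that contrastive-divergence-style training typically relies on.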

The code was implemented in Python 3 and has the following dependencies:

- TensorFlow

- Keras

- NumPy

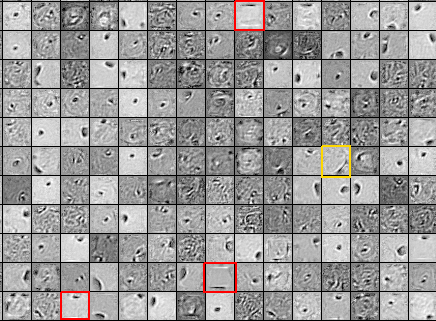

One way to evaluate the RBM is visually, by showing the W parameters as images.

If the training is successful, the weights should contain useful information for modeling the digits in the MNIST dataset.
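One possible way to build such a picture is to reshape each column of W into a 28×28 tile and paste the tiles into a grid. The sketch below assumes W has shape (784, n_hidden); the helper `weights_to_grid` is hypothetical, and the random matrix only stands in for trained weights.

```python
import numpy as np

def weights_to_grid(W, image_shape=(28, 28), n_cols=10):
    """Arrange each column of W as an image tile inside one large 2-D array."""
    n_visible, n_hidden = W.shape
    height, width = image_shape
    assert n_visible == height * width, "W rows must match the image size"

    n_rows = int(np.ceil(n_hidden / n_cols))
    grid = np.zeros((n_rows * height, n_cols * width))

    for j in range(n_hidden):
        tile = W[:, j].reshape(height, width)
        # Rescale each tile to [0, 1] so the most negative weights
        # appear darkest and the most positive ones appear brightest.
        span = tile.max() - tile.min()
        tile = (tile - tile.min()) / span if span > 0 else np.zeros_like(tile)
        row, col = divmod(j, n_cols)
        grid[row * height:(row + 1) * height,
             col * width:(col + 1) * width] = tile
    return grid

# Random stand-in for trained weights: 784 visible units, 25 hidden units.
W = np.random.default_rng(0).normal(size=(784, 25))
grid = weights_to_grid(W, n_cols=5)
print(grid.shape)  # (140, 140)
```

The resulting array can be displayed with any image viewer, for example `plt.imshow(grid, cmap="gray")` if matplotlib is available (it is not among the dependencies listed for the original code).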

Not all of the weights shown are easy to interpret. Note how the weights highlighted in red contain black lines at the top or bottom.

Black pixels correspond to negative values in W and can be interpreted as a filter that blocks the passage of information.

These black lines thus capture the fact that the digits do not extend past a certain height in the image.

Thus, the RBM assigns low probability to visible states with positive pixels above or below those lines.
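This effect can be checked on a toy example using the free energy of a binary RBM, F(v) = -b·v - Σ_j log(1 + exp(c_j + v·W[:, j])), for which p(v) is proportional to exp(-F(v)). The four-pixel model below is entirely hypothetical: pixel 0 is given negative weights to every hidden unit, playing the role of one of the black lines.

```python
import numpy as np

def free_energy(v, W, b, c):
    """F(v) = -b.v - sum_j softplus(c_j + v.W[:, j]); p(v) ~ exp(-F(v))."""
    # np.logaddexp(0, x) computes log(1 + exp(x)) in a stable way.
    return -(b @ v) - np.sum(np.logaddexp(0.0, c + v @ W))

# Hypothetical toy model: 4 visible pixels, 3 hidden units.
n_visible, n_hidden = 4, 3
W = np.full((n_visible, n_hidden), 0.5)
W[0, :] = -2.0  # pixel 0 has negative weights, like a "black line"
b = np.zeros(n_visible)
c = np.zeros(n_hidden)

v_off = np.array([0.0, 1.0, 1.0, 0.0])  # pixel 0 switched off
v_on = np.array([1.0, 1.0, 1.0, 0.0])   # same image with pixel 0 switched on

print(free_energy(v_off, W, b, c), free_energy(v_on, W, b, c))
```

Switching on the negatively weighted pixel raises the free energy, so the model assigns that image a lower probability than the same image with the pixel off.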

The filter highlighted in yellow is probably useful for detecting slanted strokes on the right, such as the one in a "7".