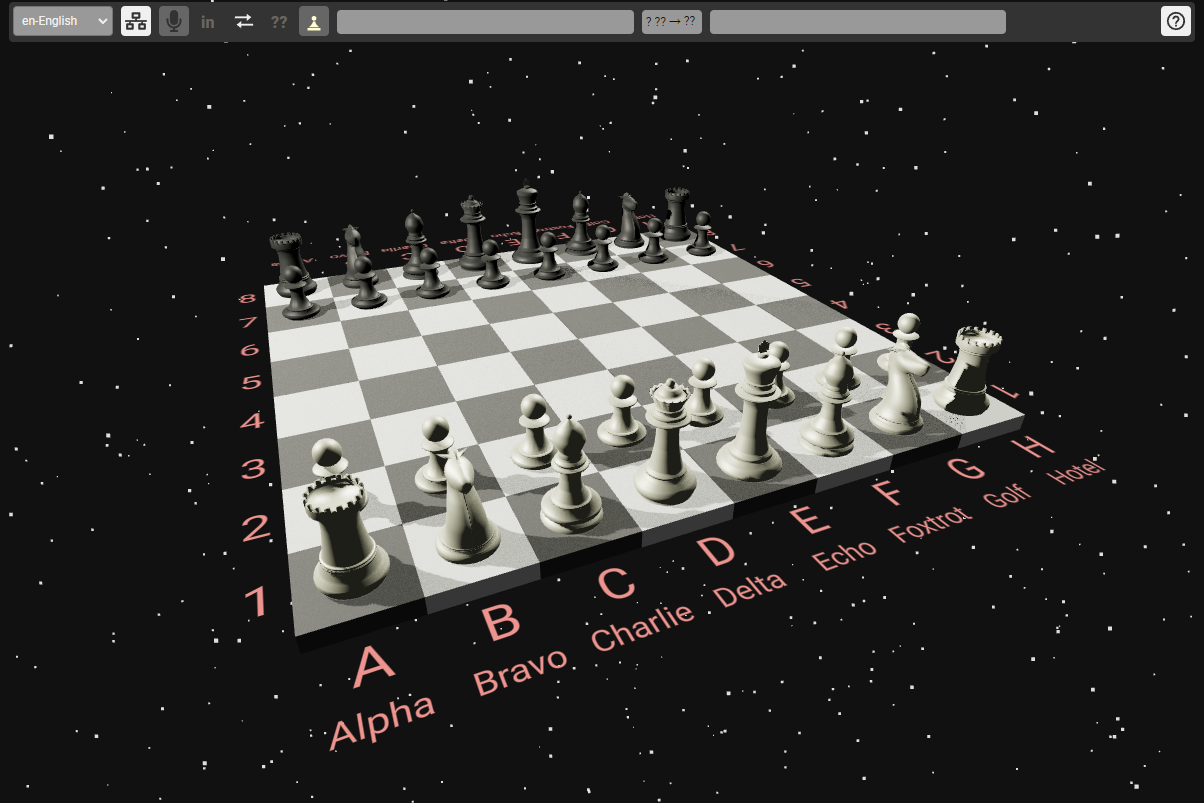

A multilingual, voice-driven 3D chess game for learning and teaching voice AI with Coqui STT and limited-vocabulary language models.

Please note: this repo is not production-ready. As of April 1, 2022, it is somewhere between alpha and beta.

Current capabilities:

- Single-user server for Speech-to-Text (STT) inference in Node.js (it runs on a single-core free node, and a server pool is also supported)

- 3D frontend (voice-only, multilingual interface with a few buttons and output areas)

- Currently supported languages: de (German), en (English), tr (Turkish)

- You play against yourself :)

We have opened a test site, backed by a server pool, for our tests.

The repository contains:

- acoustic-model-creation: Example model-creation notebook (TODO)

- language-model-creation: All files for creating your domain-specific language model

- voice-chess-react: Frontend, a React & three.js implementation

- voice-chess-server: Server, a simple single-connection Node.js implementation

TODO

- Creation of new acoustic and language models in your language

- Better chess-related wording for existing languages, if needed

- Translation of resource files (messages.json)

- Testing; ideas and feedback in issues; commits and PRs

To add support for a new language:

- Learn the chess terminology in your language (if you don't know it already, Wikipedia and YouTube help).

- Examine the existing sentences and code in the chess sentence generators.

- Copy an appropriate sentence generator, rename it to your language code, and translate/adapt it.

- Find a compatible Coqui STT acoustic model (.tflite file) or train one on Mozilla Common Voice datasets.

- Translate the resource files (messages.json).

- Test your results locally on your forked server/client and improve your models if needed.

- Make a pull request (PR) that adds your acoustic model (.tflite) and language model (.scorer) to the voice-chess-server/voice directory, and the generated JSON language file and translated messages.json files to voice-chess-react/locale.

If you cannot do some of these, please open an issue so we can help.
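The sentence generators mentioned in the steps above produce the closed set of sentences that a limited-vocabulary language model should recognize, presumably as the text corpus fed to KenLM. The following is a minimal TypeScript sketch of that idea, not the repo's actual generator. It assumes English wording, the template `move <piece> from <column> <row> to <column> <row>`, NATO names for columns and number words for rows; the names `generateSentences` and `spokenCells` are hypothetical.

```typescript
// Hypothetical English sentence generator (a sketch, not the repo's generator).
// It enumerates every "move <piece> from <cell> to <cell>" sentence so that the
// resulting corpus covers the whole limited vocabulary.

const PIECES: readonly string[] = ["king", "queen", "rook", "bishop", "knight", "pawn"];

// NATO phonetic names for columns a-h (used instead of single letters).
const COLUMNS: readonly string[] = [
  "alpha", "bravo", "charlie", "delta", "echo", "foxtrot", "golf", "hotel",
];

// Spoken row numbers 1-8 (assumption: rows are transcribed as number words).
const ROWS: readonly string[] = ["one", "two", "three", "four", "five", "six", "seven", "eight"];

// All 64 spoken cell names, e.g. "echo two".
function spokenCells(): string[] {
  const cells: string[] = [];
  for (const col of COLUMNS) {
    for (const row of ROWS) {
      cells.push(`${col} ${row}`);
    }
  }
  return cells;
}

// One sentence per (piece, from, to) combination where from and to differ.
function generateSentences(): string[] {
  const cells = spokenCells();
  const sentences: string[] = [];
  for (const piece of PIECES) {
    for (const from of cells) {
      for (const to of cells) {
        if (from === to) continue; // a piece cannot move to its own square
        sentences.push(`move ${piece} from ${from} to ${to}`);
      }
    }
  }
  return sentences;
}

const corpus = generateSentences();
console.log(corpus.length); // 6 * 64 * 63 = 24192
console.log(corpus[0]);     // move king from alpha one to alpha two
```

Joining the array with newlines gives one sentence per line, which is the plain-text form KenLM's `lmplz` reads. A real generator could restrict each piece to squares it can actually reach, or add other wordings; this sketch does neither.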

Detailed information can be found here.

Technologies used:

- VOICE: Coqui STT, Coqui example, KenLM, Mozilla Common Voice datasets.

- 3D UI: three.js & react-three-fiber (with drei and zustand)

- CHESS: chess.js for game state and move validation (no AI or GUI).

The client and server voice-related code is adapted from the Coqui example web_microphone_websocket.

The first version of the project was created during coqui.ai's "Hack the Planet" hackathon at Mozilla Festival 2022, held 8-15 March.

The main idea was to implement a speech-enabled application in one week. A group of participants voted to implement a voice-controlled game (tic-tac-toe), but the idea grew into a multilingual, voice-driven 3D chess game. A team was formed, and implementing a chess application became the goal. Team members were BÖ, JF, KM, and MK.

At the beginning, this was a two-part application:

- The server is a Node.js application that performs the actual STT.

- The client is a React app that records spoken sentences, sends them to the server for transcription via socket.io, validates the resulting move with the help of chess.js, and shows it in the browser with three.js.
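The client-side validation step can be sketched as follows. This is a minimal TypeScript illustration, not the project's code. It assumes the transcript has already been turned into a piece letter and two squares, and that the legal moves come from chess.js `moves({ verbose: true })`, whose move objects carry `from`, `to`, a one-letter lowercase `piece` and a `san` string. A hand-written list stands in for chess.js so the snippet runs on its own; `VerboseMove`, `ParsedMove` and `findLegalMove` are hypothetical names.

```typescript
// Minimal shape of a chess.js verbose move (only the fields used here).
interface VerboseMove {
  from: string;  // e.g. "g1"
  to: string;    // e.g. "f3"
  piece: string; // "p" | "n" | "b" | "r" | "q" | "k"
  san: string;   // e.g. "Nf3"
}

// A move as understood from the user's sentence (hypothetical shape).
interface ParsedMove {
  piece: string;
  from: string;
  to: string;
}

// Return the matching legal move, or undefined if the spoken move is illegal.
function findLegalMove(parsed: ParsedMove, legal: readonly VerboseMove[]): VerboseMove | undefined {
  return legal.find(
    (m) => m.piece === parsed.piece && m.from === parsed.from && m.to === parsed.to,
  );
}

// Stand-in for `new Chess().moves({ verbose: true })` (a few opening moves only).
const legalMoves: VerboseMove[] = [
  { from: "g1", to: "f3", piece: "n", san: "Nf3" },
  { from: "g1", to: "h3", piece: "n", san: "Nh3" },
  { from: "e2", to: "e4", piece: "p", san: "e4" },
];

const ok = findLegalMove({ piece: "n", from: "g1", to: "f3" }, legalMoves);
const bad = findLegalMove({ piece: "b", from: "c1", to: "f4" }, legalMoves);
console.log(ok?.san); // Nf3
console.log(bad);     // undefined
```

If a match is found, its SAN string (or the `{ from, to }` pair) could be passed to chess.js `move()` to update the game; otherwise the client can ask the user to repeat the command.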

Due to the limited timeframe and individual time constraints, the group also kept its expectations limited:

- The UI is minimal but working; for example, there is no manual play and there are no enhanced UI features.

- Only a sample of languages was selected, but more languages can be added.

- There are many command formats for chess. To simplify the workflow, this version requires the user to use a single format:

  "Move <piece> from <fromCell> to <targetCell>". Here "piece" is the name of a chess piece, such as King or Bishop, and each "cell" is a board coordinate given as column and row (columns: A-H, rows: 1-8).

- After several trials with English and Turkish, we found that recognition of single letters is not robust enough (nearly impossible), so we used the NATO phonetic alphabet for columns: Alpha, Bravo, ... Hotel. Apart from the NATO names, all other wording was translated into the respective languages.

- Include support for the following languages: German, English, French, Hindi, Russian, and Turkish.
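The fixed command format and the NATO column names make the transcript straightforward to parse. Below is a minimal TypeScript sketch for English wording only, assuming the STT output is a string of words and that rows are transcribed as number words ("one" to "eight"); `parseCommand`, `toSquare`, `ChessCommand` and the lookup tables are hypothetical, not taken from the repo. It maps, for example, "move knight from golf one to foxtrot three" to the chess.js-style piece letter `n` and the squares `g1` and `f3`.

```typescript
// Hypothetical English vocabulary tables (other languages would swap the words).
const PIECE_CODES = new Map<string, string>([
  ["king", "k"], ["queen", "q"], ["rook", "r"],
  ["bishop", "b"], ["knight", "n"], ["pawn", "p"],
]);

// NATO phonetic names -> board columns a-h.
const NATO_COLUMNS = new Map<string, string>([
  ["alpha", "a"], ["bravo", "b"], ["charlie", "c"], ["delta", "d"],
  ["echo", "e"], ["foxtrot", "f"], ["golf", "g"], ["hotel", "h"],
]);

// Spoken row numbers -> board rows 1-8 (assumes number words, not digits).
const ROW_WORDS = new Map<string, string>([
  ["one", "1"], ["two", "2"], ["three", "3"], ["four", "4"],
  ["five", "5"], ["six", "6"], ["seven", "7"], ["eight", "8"],
]);

interface ChessCommand {
  piece: string; // chess.js-style piece letter, e.g. "n"
  from: string;  // source square, e.g. "g1"
  to: string;    // target square, e.g. "f3"
}

// Turn a spoken column + row pair into a square such as "g1", or null.
function toSquare(col: string, row: string): string | null {
  const c = NATO_COLUMNS.get(col);
  const r = ROW_WORDS.get(row);
  return c !== undefined && r !== undefined ? c + r : null;
}

// Parse "move <piece> from <column> <row> to <column> <row>"; null if malformed.
function parseCommand(transcript: string): ChessCommand | null {
  const words = transcript.trim().toLowerCase().split(/\s+/);
  if (words.length !== 8) return null;
  const [verb, pieceWord, fromWord, fromCol, fromRow, toWord, toCol, toRow] = words;
  if (verb !== "move" || fromWord !== "from" || toWord !== "to") return null;
  const piece = PIECE_CODES.get(pieceWord);
  const from = toSquare(fromCol, fromRow);
  const to = toSquare(toCol, toRow);
  if (piece === undefined || from === null || to === null) return null;
  return { piece, from, to };
}

console.log(JSON.stringify(parseCommand("move knight from golf one to foxtrot three")));
// {"piece":"n","from":"g1","to":"f3"}
console.log(parseCommand("move knight to f3")); // null
```

Using `Map` rather than a plain object keeps words such as "constructor" from accidentally matching inherited object properties. Rejecting anything that does not match the single format mirrors the restriction described above.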

At the end of the hackathon, semi-working software was presented for English and Turkish.

You can watch the initial project presentation video here.

As promised at the end of the presentation, we continue to develop the project and have made it open source here.