
Add more pretrain model #16

Comments

|

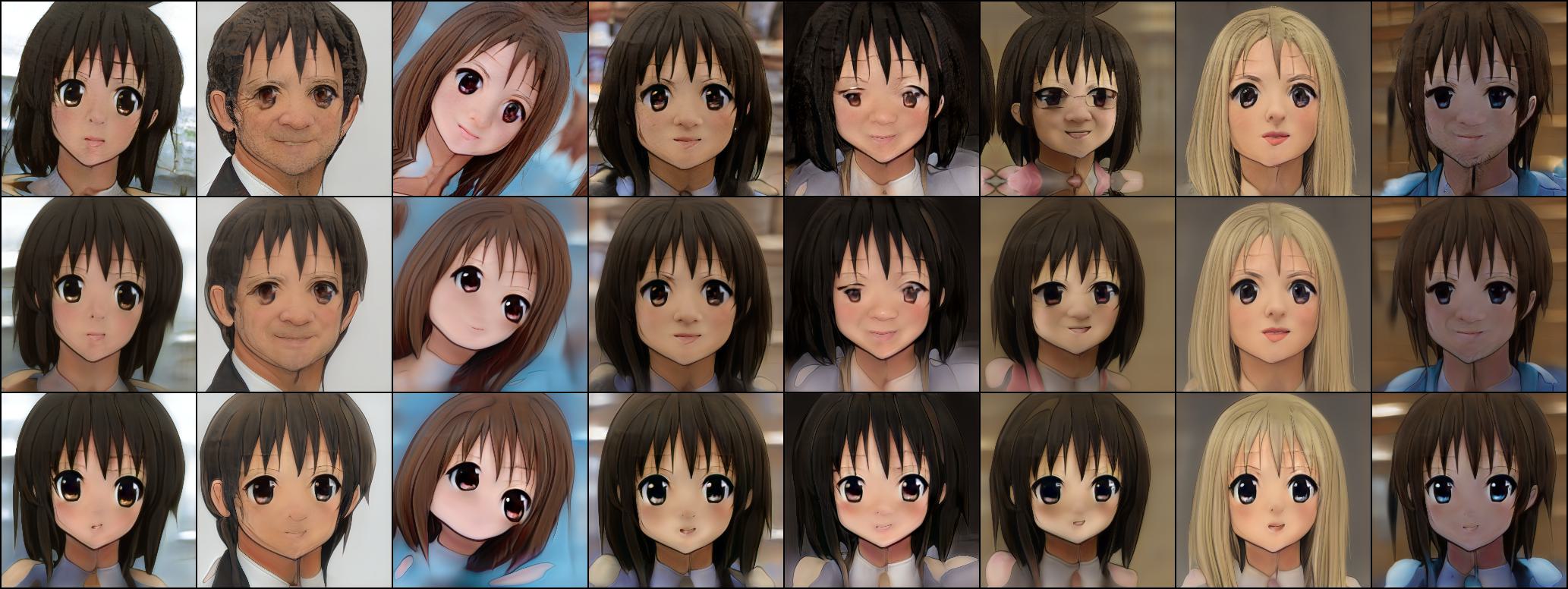

We tried the anime style, but the results were not satisfactory. |

|

Thank you for your explanation. Your model is very interesting, and I still hope to run some interesting experiments with these models if possible. Could you tell me how to use that repo's (I mean DualStyleGAN's) models in VToonify's code? They seem to be trained from the same network. |

|

You only need to train the corresponding encoder to match the DualStyleGAN, using the two commands described here: https://github.com/williamyang1991/VToonify#train-vtoonify-d |

|

OK, I wonder how long the training will take. |

|

THX! |

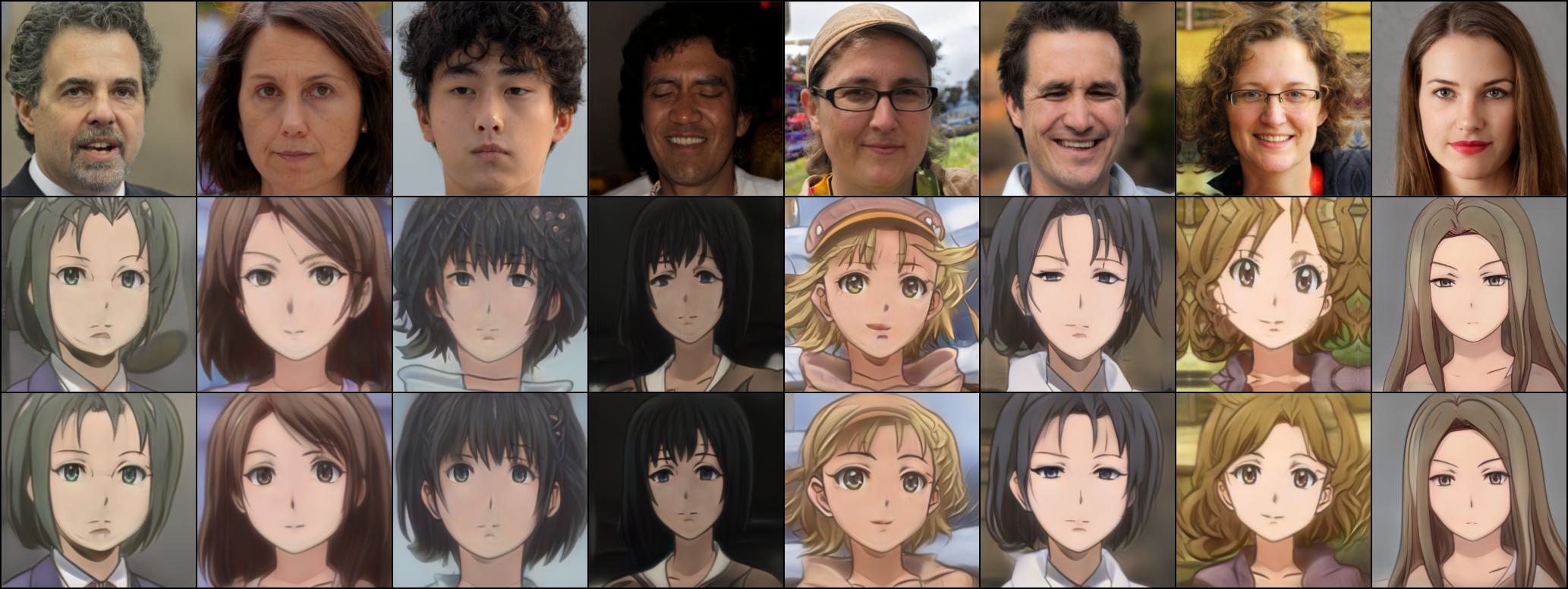

Thanks for your detailed reply. I trained the anime model based on the DualStyleGAN checkpoint (generator.pt from https://drive.google.com/drive/folders/1YvFj33Bfum4YuBeqNNCYLfiBrD4tpzg7), but the output does not look like the anime style. Would you please share VToonify's anime checkpoint? |

|

My checkpoint is trained with color transfer. You need not specify |

|

I see. You can specify the or change Lines 245 to 250 in db57c27

to (remove the and args.fix_style)

|

In this case, you need to specify So the style options are You can tune the style_degree to find the best results. |
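
A minimal sketch of how the `style_degree` tuning mentioned above could be organized as a sweep. It assumes `style_degree` ranges from 0 to 1, which this thread does not state. `degree_grid`, `sweep_style_degree`, and `fake_render` are hypothetical helpers written for illustration and are not part of VToonify; `fake_render` only stands in for whatever call actually produces a stylized image.

```python
from typing import Callable, Dict, List


def degree_grid(start: float = 0.0, stop: float = 1.0, steps: int = 5) -> List[float]:
    """Return evenly spaced style_degree candidates, including both ends."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    step = (stop - start) / (steps - 1)
    return [round(start + i * step, 4) for i in range(steps)]


def sweep_style_degree(
    render: Callable[[float], str], degrees: List[float]
) -> Dict[float, str]:
    """Call render once per candidate degree and collect the output paths."""
    return {degree: render(degree) for degree in degrees}


def fake_render(style_degree: float) -> str:
    # Hypothetical stand-in: a real version would run the model with this
    # style_degree and save the image; here we only build a file name.
    return f"output_degree_{style_degree:.2f}.jpg"


# One output name per candidate, ready for side-by-side visual comparison.
results = sweep_style_degree(fake_render, degree_grid())
for degree, path in results.items():
    print(degree, path)
```

Comparing the saved outputs side by side is then a matter of visual judgment; the sketch only keeps the candidate values and file names organized.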

|

I think your results look good! |

|

How can I create my own style to use with this? |

Good job! I see that another repo (https://github.com/williamyang1991/DualStyleGAN) has many other style models. Can you integrate them into this repo? I have downloaded the models, but I can't use them in VToonify's code.
