
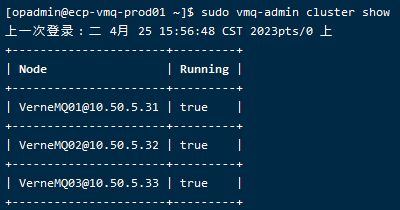

[Bug]: After VerneMQ starts the cluster, CPU and memory usage is abnormal #2146

Comments

Can you specify what you mean by "after VerneMQ starts the cluster"? Starting from 3 empty clustered nodes, can you tell me what you actually test to arrive at the described behaviour? 👉 Thank you for supporting VerneMQ: https://github.com/sponsors/vernemq

I mean that after all nodes of the VerneMQ cluster are started, CPU usage soars. When only one node is started, CPU usage is normal.

We also modified redis.lua:

Re "a few days ago": did you do anything specific to the cluster then, like making one or more nodes leave and rejoin the cluster?

Yes. This is not on Kubernetes; it runs on three virtual machines.

Wouldn't a process only be killed/OOMed with high RAM, not high CPU?

OK. I wonder what happened during the VM takedown. Was the disk wiped? I don't know why you see high CPU before the crash; you might want to look at that separately. But it must somehow be load-related (that is, related to the input we give the system).

Potential fix: #2162

@GoneGo1ng what is your status on this? Can you possibly test the 1.13.0 release?

@ioolkos Thanks, I will test.

Hi, I can confirm the issue still occurs on VerneMQ 1.13.0.

Environment

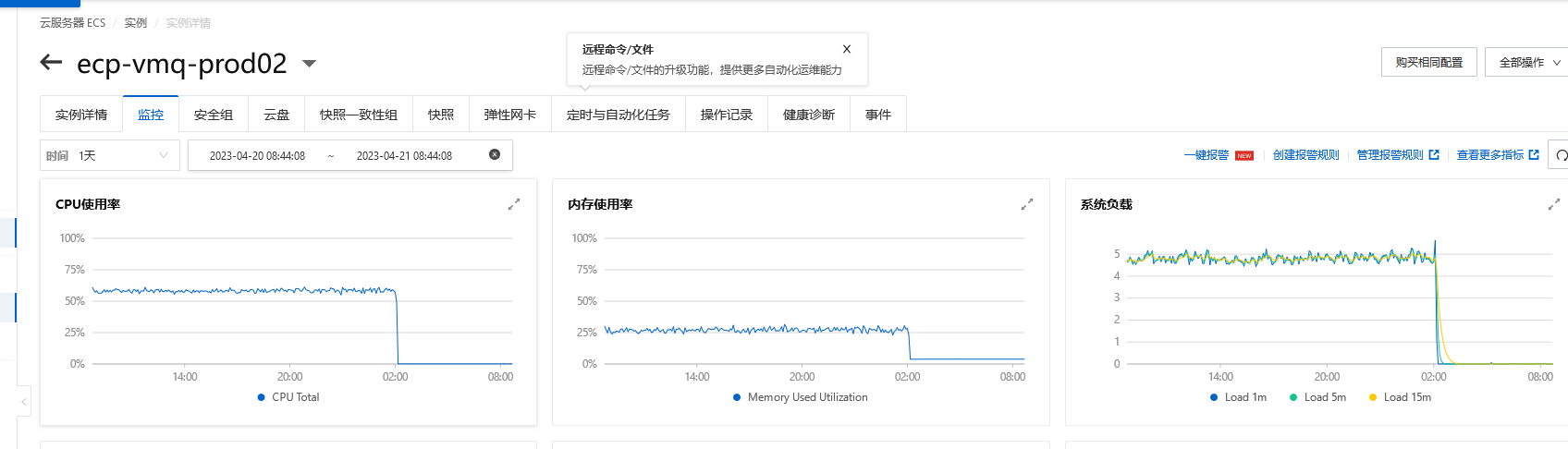

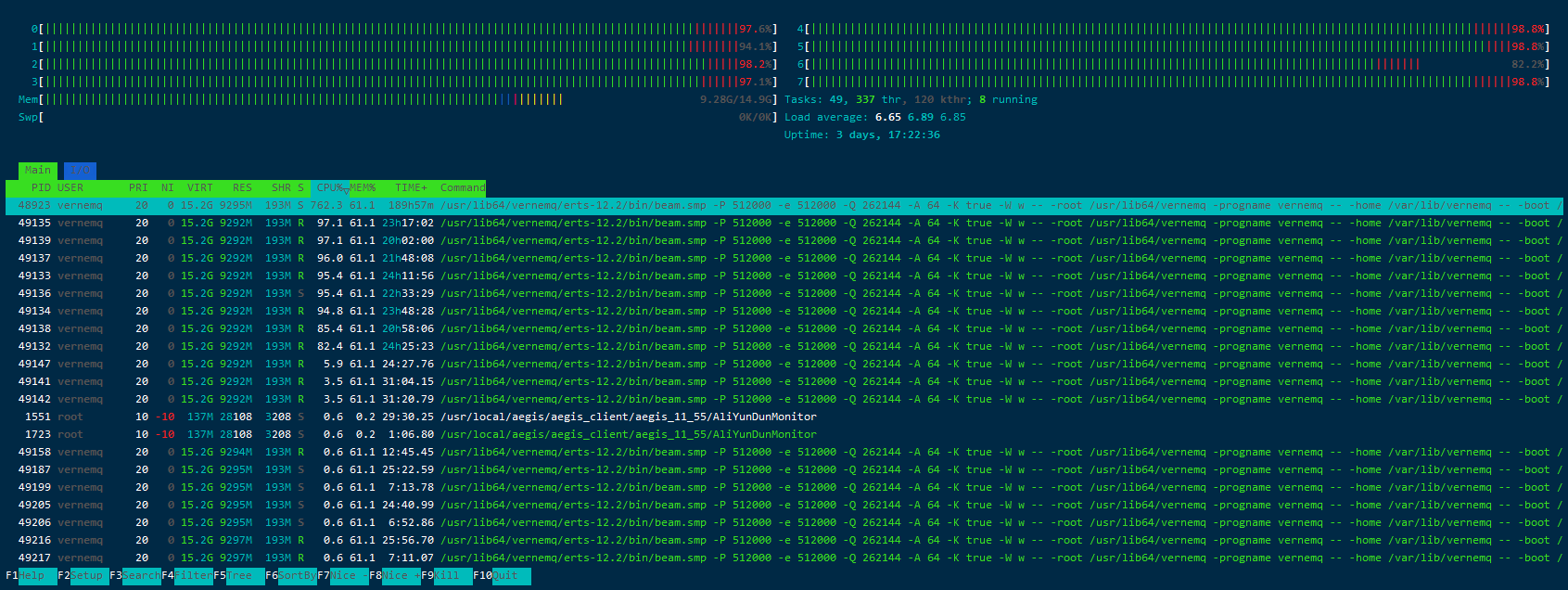

While verifying the VerneMQ cluster, we found that even with no clients connected, VerneMQ's CPU and memory usage remains high.

Current Behavior

VerneMQ's CPU and memory usage is very high. The logs suggest that some data is being synchronized between nodes, but we cannot tell what data it is.

Expected behaviour

VerneMQ's CPU and memory usage should not be this high when no clients are connected.
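To put concrete numbers on "high CPU and memory usage with no clients connected", a minimal Python sketch like the one below could sample a node's CPU time and resident memory from `/proc`. It assumes a Linux host and assumes the VerneMQ Erlang VM appears as a process named `beam.smp`; the helper names (`cpu_ticks`, `rss_kb`, `find_pids`, `sample`) are hypothetical and not part of VerneMQ.

```python
import os
import time


def cpu_ticks(pid: int) -> int:
    """Return utime + stime (in clock ticks) for a process, from /proc/<pid>/stat."""
    with open(f"/proc/{pid}/stat") as f:
        stat = f.read()
    # The command name (field 2) may contain spaces, so split after the last ')'.
    # fields[0] is then field 3 (state), so utime (field 14) is fields[11]
    # and stime (field 15) is fields[12].
    fields = stat[stat.rindex(")") + 2:].split()
    return int(fields[11]) + int(fields[12])


def rss_kb(pid: int) -> int:
    """Return the resident set size in kB from the VmRSS line of /proc/<pid>/status."""
    with open(f"/proc/{pid}/status") as f:
        for line in f:
            if line.startswith("VmRSS:"):
                return int(line.split()[1])
    return 0  # kernel threads have no VmRSS line


def find_pids(name: str = "beam.smp") -> list[int]:
    """Return PIDs whose /proc/<pid>/comm equals `name` (assumed Erlang VM process name)."""
    pids = []
    for entry in os.listdir("/proc"):
        if not entry.isdigit():
            continue
        try:
            with open(f"/proc/{entry}/comm") as f:
                if f.read().strip() == name:
                    pids.append(int(entry))
        except OSError:
            continue  # process exited while we were scanning
    return pids


def sample(pid: int, interval: float = 1.0) -> tuple[float, int]:
    """Return (CPU % over `interval` seconds, RSS in kB) for one process.

    CPU % can exceed 100 on multi-core hosts, because time from all
    scheduler threads of the process is summed.
    """
    hz = os.sysconf("SC_CLK_TCK")
    start_ticks, start_time = cpu_ticks(pid), time.monotonic()
    time.sleep(interval)
    used = cpu_ticks(pid) - start_ticks
    elapsed = time.monotonic() - start_time
    return 100.0 * used / hz / elapsed, rss_kb(pid)


if __name__ == "__main__":
    for pid in find_pids():
        cpu, rss = sample(pid)
        print(f"pid={pid} cpu={cpu:.1f}% rss={rss / 1024:.1f} MiB")
```

Running it on each node once with a single node up and again after all three nodes have joined would give comparable figures for the single-node versus full-cluster difference reported in this thread.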

Configuration, logs, error output, etc.

Configuration:

    accept_eula = yes
    allow_anonymous = off
    allow_register_during_netsplit = on
    allow_publish_during_netsplit = on
    allow_subscribe_during_netsplit = on
    allow_unsubscribe_during_netsplit = on
    allow_multiple_sessions = off
    coordinate_registrations = on
    max_inflight_messages = 20
    max_online_messages = 1000
    max_offline_messages = 1000
    max_message_size = 0
    upgrade_outgoing_qos = off
    listener.max_connections = 10000
    listener.nr_of_acceptors = 10
    listener.tcp.default = 10.50.5.31:1883
    listener.vmq.clustering = 10.50.5.31:44053
    listener.http.default = 10.50.5.31:8888
    systree_enabled = on
    systree_interval = 20000
    graphite_enabled = off
    graphite_host = localhost
    graphite_port = 2003
    graphite_interval = 20000
    shared_subscription_policy = prefer_local
    plugins.vmq_passwd = on
    plugins.vmq_acl = off
    plugins.vmq_diversity = on
    plugins.vmq_webhooks = off
    plugins.vmq_bridge = off
    topic_max_depth = 20
    metadata_plugin = vmq_swc
    vmq_acl.acl_file = /etc/vernemq/vmq.acl
    vmq_acl.acl_reload_interval = 10
    vmq_passwd.password_file = /etc/vernemq/vmq.passwd
    vmq_passwd.password_reload_interval = 10
    vmq_diversity.script_dir = /usr/share/vernemq/lua
    vmq_diversity.auth_postgres.enabled = off
    vmq_diversity.postgres.ssl = off
    vmq_diversity.postgres.password_hash_method = crypt
    vmq_diversity.auth_cockroachdb.enabled = off
    vmq_diversity.cockroachdb.ssl = on
    vmq_diversity.cockroachdb.password_hash_method = bcrypt
    vmq_diversity.auth_mysql.enabled = off
    vmq_diversity.mysql.password_hash_method = password
    vmq_diversity.auth_mongodb.enabled = off
    vmq_diversity.mongodb.ssl = off
    vmq_diversity.auth_redis.enabled = on
    vmq_diversity.redis.host = xxxxxx
    vmq_diversity.redis.port = 6379
    vmq_diversity.redis.password = xxxxxx
    vmq_diversity.redis.database = 0
    retry_interval = 60
    vmq_bcrypt.pool_size = 1
    vmq_bcrypt.nif_pool_size = 4
    vmq_bcrypt.nif_pool_max_overflow = 10
    vmq_bcrypt.default_log_rounds = 12
    vmq_bcrypt.mechanism = port
    log.console = file
    log.console.level = info
    log.console.file = /var/log/vernemq/console.log
    log.error.file = /var/log/vernemq/error.log
    log.syslog = off
    log.crash = on
    log.crash.file = /var/log/vernemq/crash.log
    log.crash.maximum_message_size = 64KB
    log.crash.size = 10MB
    log.crash.rotation = $D0
    log.crash.rotation.keep = 5
    nodename = VerneMQ01@10.50.5.31
    distributed_cookie = vmq
    erlang.async_threads = 64
    erlang.max_ports = 262144
    leveldb.maximum_memory.percent = 70
    include conf.d/*.conf

Console:

Error:
