scene coordinate system #388

Comments

Your bounding box, or how you specify the bounding box, must be wrong.

The bounding box is not mine; it is actually from vision.middlebury.edu. The reason I bring this up is that I sent my result to Middlebury for evaluation, and they said my points are not in the correct coordinate system. They asked me to run a sanity check using their bounding box, and the image above is the result.

Are you running SfM on the Middlebury datasets? This will create an arbitrary scene coordinate system that has nothing to do with the one Middlebury expects. If you want to do this properly, this is what you have to do:

Oh OK, it's weird and I don't see the logic behind this XD. I will do what you said, but step 2 is going to be hard -__-

Last question and I will go to sleep x'): if I run sfmrecon with these 2 args = true

will it work?

No, because you'll also have to fix the extrinsics, and fixing the extrinsics is not supported by the nature of the incremental SfM approach. What's weird about these steps? Step 1 should be fairly self-explanatory: you have to use the Middlebury-provided parameters, as these "ground truth" camera parameters are better than what any SfM can produce. Step 2 is required because our MVS requires SfM points to work. If you have an MVS algorithm that doesn't require SfM points, you can probably omit this step.
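For anyone who wants to look at the Middlebury-provided parameters directly, here is a minimal sketch of a reader. It assumes the layout described in the Middlebury multi-view README: a first line with the image count, then one line per image with the file name, 9 values of K, 9 values of R and 3 values of t, where the projection matrix is K [R | t]. The function name `parse_par_text` and the sample values are made up for illustration; this is not MVE code.

```python
import numpy as np

def parse_par_text(text):
    """Parse Middlebury-style camera parameters (assumed layout, see lead-in)."""
    rows = [ln.split() for ln in text.strip().splitlines() if ln.strip()]
    count = int(rows[0][0])
    cameras = {}
    for fields in rows[1:1 + count]:
        name = fields[0]
        values = np.array([float(v) for v in fields[1:22]])
        K = values[0:9].reshape(3, 3)    # intrinsics, row-major
        R = values[9:18].reshape(3, 3)   # world-to-camera rotation
        t = values[18:21]                # world-to-camera translation
        cameras[name] = {
            "K": K,
            "R": R,
            "t": t,
            "P": K @ np.hstack([R, t[:, None]]),  # 3x4 projection matrix
            "center": -R.T @ t,                   # camera position in world space
        }
    return cameras

# Hypothetical single-camera sample, not taken from a real dataset
sample = """1
img0001.png 1000 0 320 0 1000 240 0 0 1 1 0 0 0 1 0 0 0 1 0 0 2
"""
cams = parse_par_text(sample)
print(cams["img0001.png"]["center"])
```

The camera centers computed this way live in the coordinate system that the Middlebury bounding box is defined in, which is why reconstructing from these parameters avoids the mismatch.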

Thank you for all the answers you provided. They are really helpful.

The SfM reconstruction has a 7-dimensional ambiguity: position (3), orientation (3) and scale (1) with respect to the original real-world object. This ambiguity is resolved in the first SfM steps by making some arbitrary decisions. So the reconstruction is not "way off", just different with respect to this ambiguity.
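To make the 7 degrees of freedom concrete: two reconstructions of the same object differ by a similarity transform `y = s * R @ x + t`. Below is a minimal sketch that estimates `s`, `R` and `t` from corresponding 3D points (for example, camera centers known in both coordinate systems) using the closed-form least-squares solution by Umeyama. The function names are my own and this is not part of MVE; it only illustrates the ambiguity.

```python
import numpy as np

def estimate_similarity(src, dst):
    """Least-squares s, R, t such that dst ≈ s * R @ src + t (Umeyama)."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - mu_src, dst - mu_dst
    cov = dst_c.T @ src_c / len(src)          # 3x3 cross-covariance
    U, D, Vt = np.linalg.svd(cov)
    S = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        S[2, 2] = -1.0                        # avoid returning a reflection
    R = U @ S @ Vt
    var_src = (src_c ** 2).sum() / len(src)
    s = np.trace(np.diag(D) @ S) / var_src
    t = mu_dst - s * R @ mu_src
    return s, R, t

def apply_similarity(points, s, R, t):
    """Map an (N, 3) array of points through y = s * R @ x + t."""
    return s * (np.asarray(points, dtype=float) @ R.T) + t
```

At least three non-collinear correspondences are needed. With noisy SfM cameras the result is only a best fit, so this does not replace reconstructing with the ground-truth parameters.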

I added a simple tool that does what you want. Please read this page.

Thank you so much.

Hello,

Is there an easy way built into MVE to transform the point cloud from scene2pset into the scene coordinate system?

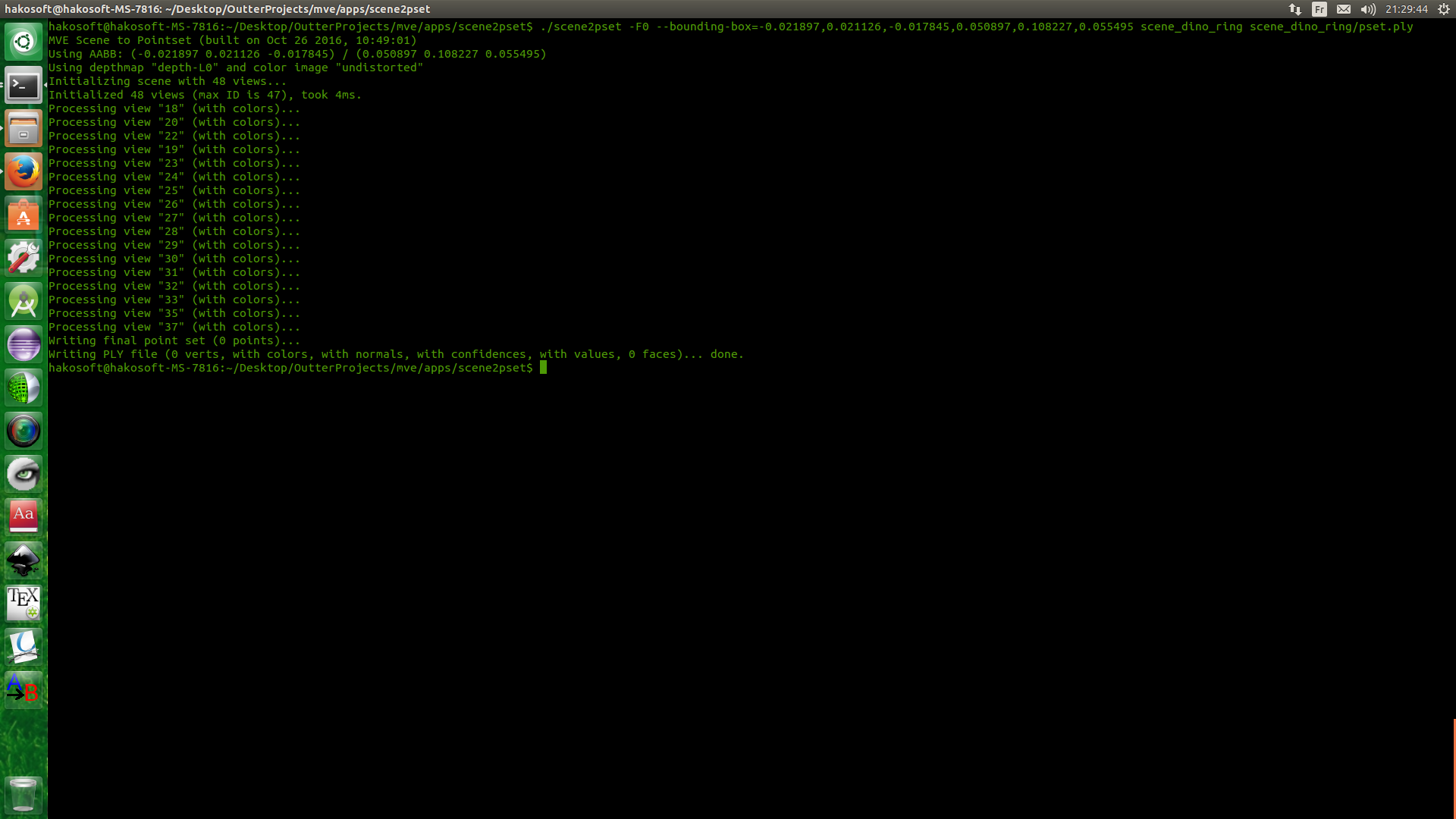

I'm developing a depth map reconstruction algorithm and feed these maps to MVE to create a point cloud. I used the dino dataset for testing. The reconstruction is fine, but it's not in the scene coordinate system. If I use the bounding box argument with scene2pset, I get an empty PLY file; otherwise I get my points, which means my cloud is not in the scene coordinate system. How do I fix this?

Note: the same thing happens even if I use dmrecon.

thank you
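A quick way to confirm the symptom described above (an empty result when a bounding box is applied) is to compare the extent of the exported cloud with the box. This is a minimal sketch, assuming the points are already loaded into an `(N, 3)` NumPy array; the function name and the numbers are hypothetical and do not come from the dino dataset.

```python
import numpy as np

def bounding_box_report(points, bb_min, bb_max):
    """Compare a point cloud's extent with an axis-aligned bounding box."""
    points = np.asarray(points, dtype=float)
    bb_min = np.asarray(bb_min, dtype=float)
    bb_max = np.asarray(bb_max, dtype=float)
    # A point is inside only if all three coordinates lie within the box.
    inside = np.all((points >= bb_min) & (points <= bb_max), axis=1)
    return {
        "cloud_min": points.min(axis=0),
        "cloud_max": points.max(axis=0),
        "num_inside": int(inside.sum()),
        "fraction_inside": float(inside.mean()),
    }

# Hypothetical values for illustration only
points = np.array([
    [0.0, 0.0, 0.0],
    [0.5, 0.5, 0.5],
    [10.0, 10.0, 10.0],
    [-3.0, 0.2, 0.1],
])
report = bounding_box_report(points, bb_min=[-1, -1, -1], bb_max=[1, 1, 1])
print(report["num_inside"], report["fraction_inside"])
```

If `num_inside` is zero while the cloud itself looks fine, the cloud and the box are most likely in different coordinate systems rather than the reconstruction being wrong.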
