This issue was moved to a discussion.


On pipeline params, constructors and column conditions: A.k.a, can I pass parameters to ApplyToRows? #56


Hey @lim-0,

That's a good question, and I think I actually have some good answers for it! :)

Recall that if you think of pipelines like fittable ML models (and you should; in many cases it is useful to think of them as part of the ML model you use), hyperparameters represent choices you make between families or sub-families of models, or, in our case, transformations. In contrast, parameters capture the specific way in which a model, or a transformation, is fit to a specific dataset, usually what we call a training set. They are then fixed and used when the model/transformation is applied to another dataset (be it validation, test, or future data to transform or produce predictions for).

A small caveat is that when I think of something as pipeline parameters, I'm thinking about some quantity or value that is determined when the pipeline (or pipeline stage) is applied to a dataframe for the first time (or is explicitly fitted u…

In this case, it seems like you want to build a pipeline stage that halves the values of certain columns. Let's go through several scenarios, and you can use the one that works for you.

1. Pre-determined set of columns

If you always know exactly which columns those are beforehand, down to their exact labels, when constructing the pipeline, I don't think they should be parameters of the function. They should be hardcoded, for example, in the following way:

```python
import pandas as pd
import pdpipe as pdp

_COLUMNS_TO_HALVE = ['years', 'revenue']


def halfer(row):
    # build one new "<label>/2" entry for each pre-determined column
    new = {
        f'{lbl}/2': row[lbl] / 2
        for lbl in _COLUMNS_TO_HALVE
    }
    return pd.Series(new)


# the new columns are inserted right after the 'years' column
COL_HALVER = pdp.ApplyToRows(halfer, follow_column='years')
```

So here we've used a dict comprehension to create a new halved column for each column in a list of pre-determined columns. This stage will always operate on the same set of columns, regardless of the input dataframe (and it will fail if any of them is missing from it). I've also put everything in the global scope of the imaginary Python script we're writing. In a notebook it would probably look the same, possibly minus the all-caps naming that signals global variables.

2. Columns are known at pipeline creation time

If this is not set in stone, but is always known at pipeline creation time (though it may change between different uses of the same pipeline, or perhaps pipeline "template"), then I'd say you need a constructor function that builds the pipeline stage when the pipeline is created, which probably means you want a pipeline constructor function too. Then:

```python
from functools import partial
from typing import Callable, List

import pandas as pd
import pdpipe as pdp


def _halve_columns(row, columns_to_halve):
    new = {
        f'{lbl}/2': row[lbl] / 2
        for lbl in columns_to_halve
    }
    return pd.Series(new)


def _halfer_constructor(columns_to_halve: List[object]) -> Callable:
    # binding a module-level named function with functools.partial (rather than
    # returning a lambda or a nested function) keeps the resulting pipeline stage,
    # and thus the whole pipeline, picklable with the standard pickle module
    return partial(_halve_columns, columns_to_halve=columns_to_halve)


def pipeline_constructor(
    columns_to_drop: List[object],
    columns_to_half: List[object],
) -> pdp.PdPipeline:
    """Constructs my pandas dataframe-processing pipeline, according to some input arguments.

    Parameters
    ----------
    columns_to_drop : list of objects
        A list of the labels of the columns to drop.
        Any Python object that can be used as a pandas label can be included in the list.
    columns_to_half : list of objects
        A list of the labels of the columns to halve.
        For each such column, an additional new column, containing its halved values, is generated.
        Each new column has the label "x/2", where "x" is the label of the corresponding original column.
        Any Python object that can be used as a pandas label can be included in the list.

    Returns
    -------
    pipeline : pdpipe.PdPipeline
        The resulting pipeline constructed by this constructor.
    """
    return pdp.PdPipeline([
        pdp.ColDrop(columns_to_drop),
        pdp.ApplyToRows(
            func=_halfer_constructor(columns_to_half),
            # assumes the input dataframe contains a 'years' column
            follow_column='years',
        ),
    ])
```

3. Columns are determined on pipeline fit

In this scenario, you don't know the exact labels of the columns you want to halve beforehand, but I'm assuming you know something about them. Perhaps you want to halve all float-valued columns, or all columns with labels starting with the word "revenue", etc. Luckily, I'll just provide you with an example use case, but to do that we will have to switch to a slightly more powerful and specific pipeline stage, the …

If, for example, we want to generate new, half-value columns for each column with …

```python
import numpy as np
import pdpipe as pdp

float_col_halver = pdp.MapColVals(
    # np.float was removed in NumPy 1.24; np.float64 is the equivalent dtype
    columns=pdp.cq.OfDtypes(np.float64),
    value_map=lambda x: x / 2,
    drop=False,
    suffix='_half',
)
```

This neat little pipeline stage will, when a dataframe is first passed through it, build a list of all columns of …

The cool thing is that once it has been applied to a dataframe (say, your training set), it will remember the list of columns it "chose" by the criteria you fed it, and will apply the transformation only to that same list of columns on any future dataframe, even if it has additional float columns. This property is invaluable in ML scenarios, where you need to generate a fixed schema for the model that follows. You can't just halve a new column at inference time because something changed in the input data (you actually have to discard it).

Now, if you instead want to halve all columns with …
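To make the fit-once behaviour concrete, here is a rough, pandas-free sketch of the idea. It is not how pdpipe implements it: a toy "table" is just a dict mapping column labels to lists of values, and `FittableColumnHalver` and `is_float_column` are hypothetical names made up for this illustration. The first call plays the role of fitting; every later call reuses the remembered columns.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical toy stand-in for a dataframe: column label -> list of values.
Table = Dict[str, List[object]]


def is_float_column(values: List[object]) -> bool:
    # hypothetical criterion: every value in the column is a float
    return bool(values) and all(isinstance(v, float) for v in values)


class FittableColumnHalver:
    """Halves columns matching a criterion, choosing them on the first call only."""

    def __init__(self, criterion: Callable[[List[object]], bool], suffix: str = '_half'):
        self.criterion = criterion
        self.suffix = suffix
        self.fitted_columns: Optional[List[str]] = None

    def __call__(self, table: Table) -> Table:
        if self.fitted_columns is None:
            # "fit": remember which columns matched on the first table seen
            self.fitted_columns = [
                label for label, values in table.items() if self.criterion(values)
            ]
        result = dict(table)
        for label in self.fitted_columns:
            # "transform": add a halved copy of each remembered column only
            result[label + self.suffix] = [v / 2 for v in table[label]]
        return result


halver = FittableColumnHalver(is_float_column)
train = {'price': [2.0, 4.0], 'count': [1, 2]}
test = {'price': [6.0], 'count': [3], 'score': [0.5]}
print(halver(train))  # {'price': [2.0, 4.0], 'count': [1, 2], 'price_half': [1.0, 2.0]}
print(halver(test))   # {'price': [6.0], 'count': [3], 'score': [0.5], 'price_half': [3.0]}
```

Note that `score` is a float column that only shows up after the first call, so it is left untouched, mirroring the fixed-schema argument above.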

Some notes:

4. Columns are determined on each application

OK, say all of that sounds great, but you're not in the specific fit-vs-transform scenario that is common in ML. You just want to build a pipeline that includes a stage that halves all revenue columns in an input dataframe, and you don't care if it's a different list every time. No problem. Column qualifiers have the …

```python
import numpy as np
import pdpipe as pdp

float_col_halver = pdp.MapColVals(
    # fittable=False: the dtype criterion is re-evaluated on every application
    columns=pdp.cq.OfDtypes(np.float64, fittable=False),
    value_map=lambda x: x / 2,
    drop=False,
    suffix='_half',
)
```

That's it! I hope this helps. Feel free to keep asking stuff and pointing me in the right direction if this doesn't solve your problem. I love it when people use my code! :)
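For contrast with the `fittable=False` variant in scenario 4, the same toy, pandas-free setup (again hypothetical names, not pdpipe code) can simply re-evaluate the criterion on every call instead of remembering it, so a float column that appears later does get halved:

```python
from typing import Callable, Dict, List

# Hypothetical toy stand-in for a dataframe: column label -> list of values.
Table = Dict[str, List[object]]


def is_float_column(values: List[object]) -> bool:
    # hypothetical criterion: every value in the column is a float
    return bool(values) and all(isinstance(v, float) for v in values)


def halve_matching_columns(
    table: Table,
    criterion: Callable[[List[object]], bool],
    suffix: str = '_half',
) -> Table:
    """Halves every column matching `criterion`, re-evaluating it on each call."""
    result = dict(table)
    # no state is kept: the matching columns are recomputed for every table
    for label, values in table.items():
        if criterion(values):
            result[label + suffix] = [v / 2 for v in values]
    return result


first = halve_matching_columns({'price': [2.0], 'count': [1]}, is_float_column)
second = halve_matching_columns({'price': [6.0], 'score': [0.5]}, is_float_column)
print(first)   # {'price': [2.0], 'count': [1], 'price_half': [1.0]}
print(second)  # {'price': [6.0], 'score': [0.5], 'price_half': [3.0], 'score_half': [0.25]}
```

The trade-off is the one described above: the output schema now depends on each input, which is fine for ad-hoc processing but not for feeding a trained model.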


@shaypal5 I get it, thank you very much!


I saw an example like this:

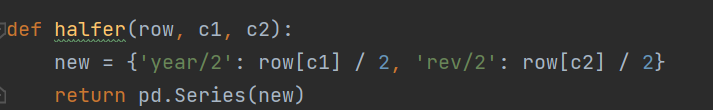

If my function looks like this:

how can I make it take effect?
