May 2020

tl;dr: Improves on SENet by adding a spatial attention module on top of channel attention.

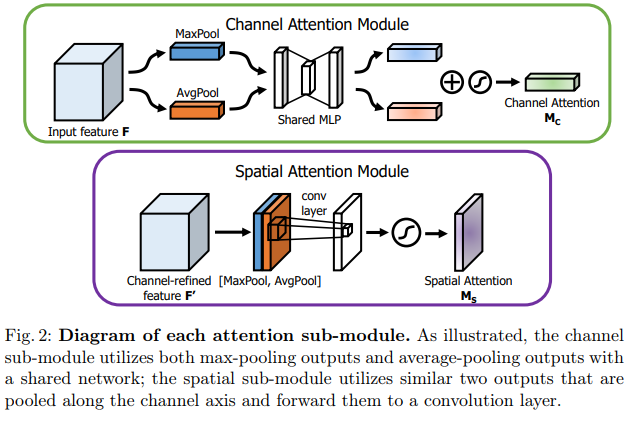

The channel attention module is very similar to SENet's but more concise. The spatial attention module takes the mean and the max across channels, concatenates the two resulting maps, and blends them with a convolution into a single spatial attention map.
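A minimal NumPy sketch of the spatial attention map described above. The function and argument names (`spatial_attention`, `kernel`) are hypothetical, the explicit loop stands in for a learned convolution layer, and the kernel size is left to the caller. It is meant to show the data flow, not to be an efficient implementation.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def spatial_attention(x, kernel):
    """Sketch of a spatial attention map.

    x:      feature map of shape (C, H, W)
    kernel: conv weights of shape (2, k, k) with odd k (assumed learned elsewhere)
    Returns an attention map of shape (1, H, W) with values in (0, 1).
    """
    # Pool across the channel axis, then concatenate the two maps: (2, H, W)
    pooled = np.stack([x.mean(axis=0), x.max(axis=0)])

    # "Same" zero padding so the output keeps the H x W resolution
    k = kernel.shape[-1]
    p = k // 2
    padded = np.pad(pooled, ((0, 0), (p, p), (p, p)))

    # Blend the two pooled maps with one k x k filter (cross-correlation)
    _, H, W = pooled.shape
    logits = np.empty((H, W))
    for i in range(H):
        for j in range(W):
            logits[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)

    return sigmoid(logits)[None]
```

The resulting map is multiplied with the feature map, broadcasting over channels: `x * spatial_attention(x, kernel)`.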

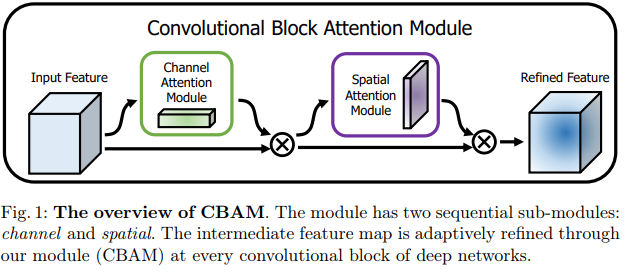

The two attention modules are then applied sequentially to each feature map, channel attention first and spatial attention second.
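The sequential application can be sketched as two broadcasted multiplications. The gates below are parameter-free stand-ins chosen for brevity (assumptions, not the learned modules), and the channel-then-spatial order is also assumed here. The point is only the ordering and the shapes.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def channel_gate(x):
    # Stand-in channel attention: one weight per channel, shape (C, 1, 1)
    return sigmoid(x.mean(axis=(1, 2)))[:, None, None]

def spatial_gate(x):
    # Stand-in spatial attention: one weight per location, shape (1, H, W)
    return sigmoid(x.mean(axis=0) + x.max(axis=0))[None]

def refine(x):
    """Apply channel attention, then spatial attention, to x of shape (C, H, W)."""
    x = x * channel_gate(x)   # broadcasts over H and W
    x = x * spatial_gate(x)   # computed on the channel-refined features, broadcasts over C
    return x
```

Because the second gate is computed from the output of the first, the two steps do not commute in general.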

YOLOv4 modifies the spatial attention module into a point-wise operation.
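One way to read "point-wise" is that the pooling across channels is dropped, so every element of the feature map gets its own attention weight. The sketch below assumes a 1x1 convolution that keeps the channel count, followed by a sigmoid. The kernel size, the names `weight` and `bias`, and the function itself are illustrative assumptions, not taken from the YOLOv4 code.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def pointwise_attention(x, weight, bias):
    """Sketch of a point-wise attention gate.

    x:      feature map of shape (C, H, W)
    weight: assumed 1x1 conv weights of shape (C, C)
    bias:   assumed conv bias of shape (C,)
    Returns the gated feature map, same shape as x.
    """
    # A 1x1 convolution is a per-pixel linear map over channels
    logits = np.einsum("oc,chw->ohw", weight, x) + bias[:, None, None]
    # One attention weight per element, no pooling over channels or space
    return x * sigmoid(logits)
```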
