put file failed behind a proxy #7939

Comments

|

Not 100% sure what your setup is, but the GET is actually done by the pachd server, so these environment variables need to be set on the server. The helm value |

|

It works now. I solved the problem by adding "local" to the noProxy variable. Thanks again! @jrockway |
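One plausible reading of why adding "local" helped: many proxy-aware HTTP clients treat each noProxy entry as a domain suffix, so "local" would cover every in-cluster name ending in `.local`, such as `minio.minio.svc.cluster.local`. The sketch below only illustrates that matching rule. The function name `bypasses_proxy` is hypothetical, and whether pachd's HTTP client follows exactly this rule is an assumption, not something confirmed in this thread.

```python
def bypasses_proxy(host: str, no_proxy: str) -> bool:
    """Illustrative suffix matching: an entry matches itself and its subdomains.

    This is a sketch of a common NO_PROXY convention, not pachd's actual code.
    """
    host = host.lower().rstrip(".")
    for entry in no_proxy.split(","):
        # Normalise the entry; a leading dot (".local") is treated like "local".
        entry = entry.strip().lower().lstrip(".")
        if not entry:
            continue
        if host == entry or host.endswith("." + entry):
            return True
    return False


minio_host = "minio.minio.svc.cluster.local"
print(bypasses_proxy(minio_host, "localhost,127.0.0.1"))        # no entry covers it
print(bypasses_proxy(minio_host, "localhost,127.0.0.1,local"))  # "local" covers it
```

Under this rule "localhost" does not match a host ending in `.local`, because the match is made on whole domain labels, not on arbitrary substrings.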

|

That makes sense. We should document what "noproxy" needs to be (I think that our etcd connection attempts to respect the HTTP_PROXY environment variable, which is probably not what most people want here). I'll open an (internal) issue for that. |

|

Sorry, wrong again. # SPDX-FileCopyrightText: Pachyderm, Inc. <info@pachyderm.com>

# SPDX-License-Identifier: Apache-2.0

deployTarget: "MINIO"

global:

# Sets the HTTP/S proxy server address for console, pachd, and enteprise server

proxy: "http://123.103.74.231:20172"

# If proxy is set, this allows you to set a comma-separated list of destinations that bypass the proxy

noProxy: "10.1.0.0/16,10.152.183.0/24,localhost,jinniuai,127.0.0.1,172.16.0.0/15,192.168.0.0/16,jinniuai.internal,hub,jinniuai.com,local,postgresql,minio,postgres-0,pachd,etcd-0,etcd,console,pachd-peer,postgres-headless,etcd-headless,pg-bouncer"

pachd:

storage:

backend: "MINIO"

minio:

bucket: "pachyderm"

endpoint: "minio.minio.svc.cluster.local:9000"

id: "rootuser"

secret: "rootpass123"

secure: "false"

etcd:

storageClass: openebs-hostpath

size: 10Gi

postgresql:

persistence:

storageClass: openebs-hostpath

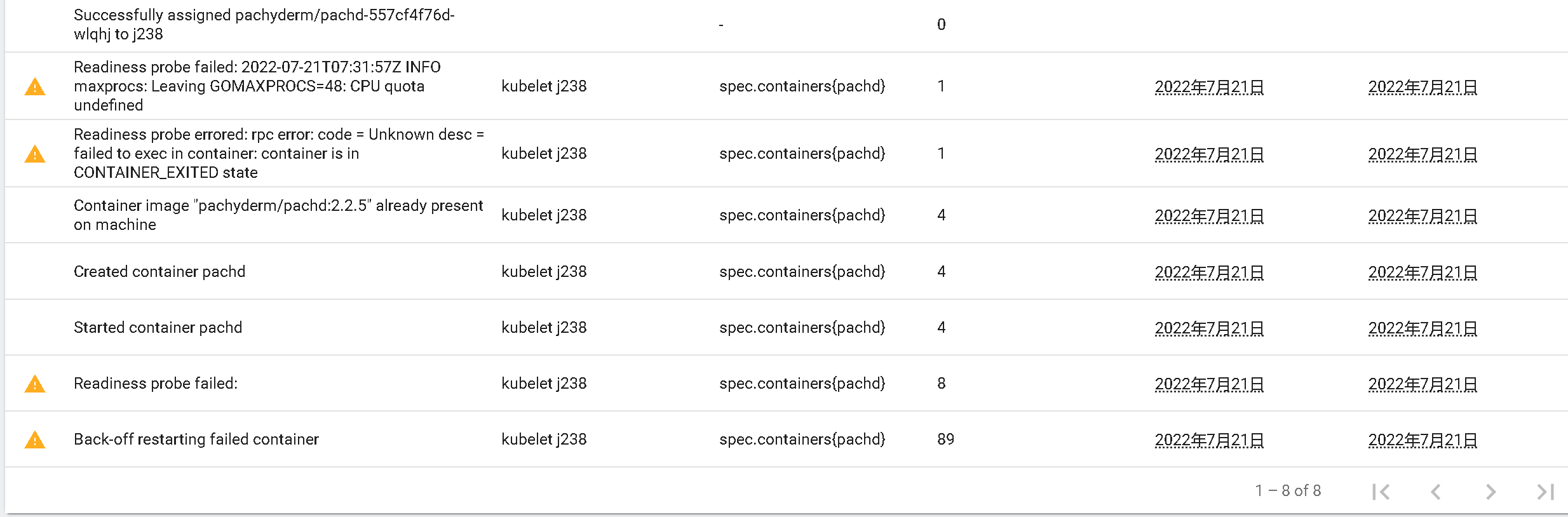

size: 10GiMy installation command is as follow: microk8s helm3 upgrade --install pachd -f pachyderm-enterprise-member-values.yaml pach/pachyderm --set pachd.enterpriseLicenseKey=$(cat pachyderm-enterprise-license.txt) --namespace pachyderm --create-namespace --set console.enabled=trueThe error in the log is as follows: I've tried to add many values to the noProxy variable with no luck. Any idea? |

|

It looks like the grpc library does an exact comparison on the host being connected to, without the port. Since PACHD_ADDRESS is

If that doesn't work, you can do some more extreme debugging of console:

    - name: GRPC_TRACE
      value: proxy
    - name: GRPC_VERBOSITY
      value: DEBUG

That should print something like:

For reference, I got a working console with these values:

    deployTarget: "LOCAL"
    global:
      proxy: "http://127.0.0.1"
      noProxy: "pachd-peer.default.svc.cluster.local"

127.0.0.1 is not a real proxy, but it does cause failures, which was all I was after. |
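To make the exact-comparison behaviour described above concrete, here is a minimal sketch of such a check. It is an illustration of the rule as stated in this comment, not the grpc library's code. The helper names `strip_port` and `bypasses_proxy_exact` and the port number `1234` are hypothetical.

```python
def strip_port(address: str) -> str:
    """Drop a trailing ':<digits>' port, if any (IPv6 literals are not handled)."""
    host, sep, port = address.rpartition(":")
    return host if sep and port.isdigit() else address


def bypasses_proxy_exact(address: str, no_proxy: str) -> bool:
    """Bypass the proxy only when the bare host equals a noProxy entry exactly."""
    entries = [entry.strip() for entry in no_proxy.split(",")]
    return strip_port(address) in entries


# Hypothetical address; the port is a placeholder for illustration only.
address = "pachd-peer.default.svc.cluster.local:1234"

print(bypasses_proxy_exact(address, "local,pachd-peer"))
print(bypasses_proxy_exact(address, "pachd-peer.default.svc.cluster.local"))
```

With exact matching, short entries such as "local" or "pachd-peer" do not cover the fully qualified service name, which is consistent with the full name being needed in the working values above.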

|

It works! |

|

Does anyone know how to fix it? Thanks |

|

I don't see anything here that obviously looks like console crashing. There are some analytics requests that appear blocked because of the proxying rules; that shouldn't be fatal or anything. (They can be turned off entirely though.) If console does go away, you will have to restart the port forwarding, that's just how port-forwarding is. An alternative is to configure the services to listen on a NodePort (if local) or a LoadBalancer (if remote). Depending on your local dev environment, one might be easier than the other. With a LoadBalancer service on minikube running on OS X or Windows, The 2.3 alphas (soon to be marked as stable) make it very easy to serve everything on a port with |

What happened?:

pachctl put file images_clever-stegodon@master:birthday-cake.jpg -f https://i.imgur.com/FOO9q43.jpg

Get "https://i.imgur.com/FOO9q43.jpg": dial tcp 103.230.123.190:443: i/o timeout

What you expected to happen?:

I have set the HTTP_PROXY and HTTPS_PROXY environment variables correctly.

How to reproduce it (as minimally and precisely as possible)?:

rerun the command

Anything else we need to know?:

How to set a proxy for pachctl?

Environment?:

kubectl version):

pachctl version):

helm get values pachyderm):