About your dataset #2


Hi @IVparagigm,

The batch_size is preserved.


1) In Videodataset.py, in the _make_dataset() method of class Videodataset. 2) Yes. This is my first time using GitHub, so my comment formatting went wrong. This is my test code: Thank you very much if you could help me.


1) Yes, what I mentioned is in this file. 2)

Hi, because I'm a beginner and could not obtain the Kinetics dataset, I can only read your code. So I have some questions:

(1) In videodataset.py, class videodataset returns a clip and a target during training. I notice that the length of the clip equals the length of frame_indices, which is 10, but in your paper you select 32 frames as input. Could you tell me where the 32 frames are selected?

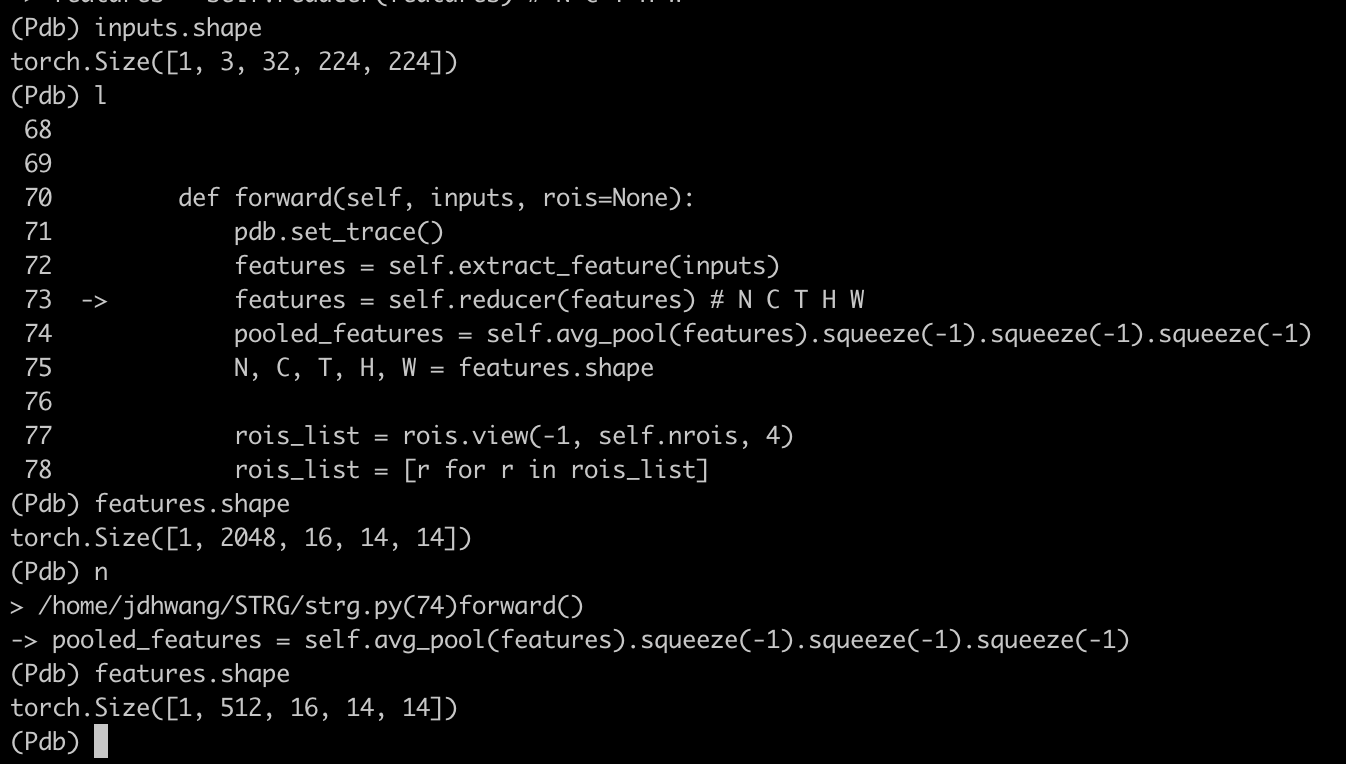

(2) About strg.py: I tested another input size, 1x3x32x224x224 (batch size 1, depth 32), but the batch size of the output of the extractor and reducer is 2. Does that mean I must process all my input with batch_size = 4? Could I use other input sizes?
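To make question (1) concrete, here is a minimal sketch of what selecting 32 frames from a video could look like. It is only an illustration under my own assumptions, not the repository's code: the helper name sample_frame_indices and its parameters are hypothetical, and uniform spacing is just one possible sampling strategy.

```python
def sample_frame_indices(num_frames, clip_len=32):
    """Pick clip_len frame indices spread evenly over a video.

    Hypothetical helper for illustration only; the repository may
    select frames in a different way.
    """
    if num_frames <= 0 or clip_len <= 0:
        raise ValueError("num_frames and clip_len must be positive")
    # Evenly spaced positions. Videos shorter than clip_len
    # repeat some frames instead of failing.
    return [(i * num_frames) // clip_len for i in range(clip_len)]


indices = sample_frame_indices(300)
print(len(indices))             # 32
print(indices[0], indices[-1])  # 0 290
```

With a sampler like this, a video with only 10 frames would still give a clip of length 32, because indices are repeated.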

These questions are basic, but they have been bothering me for several days. I would be very grateful if you could help me.

Thanks.
