
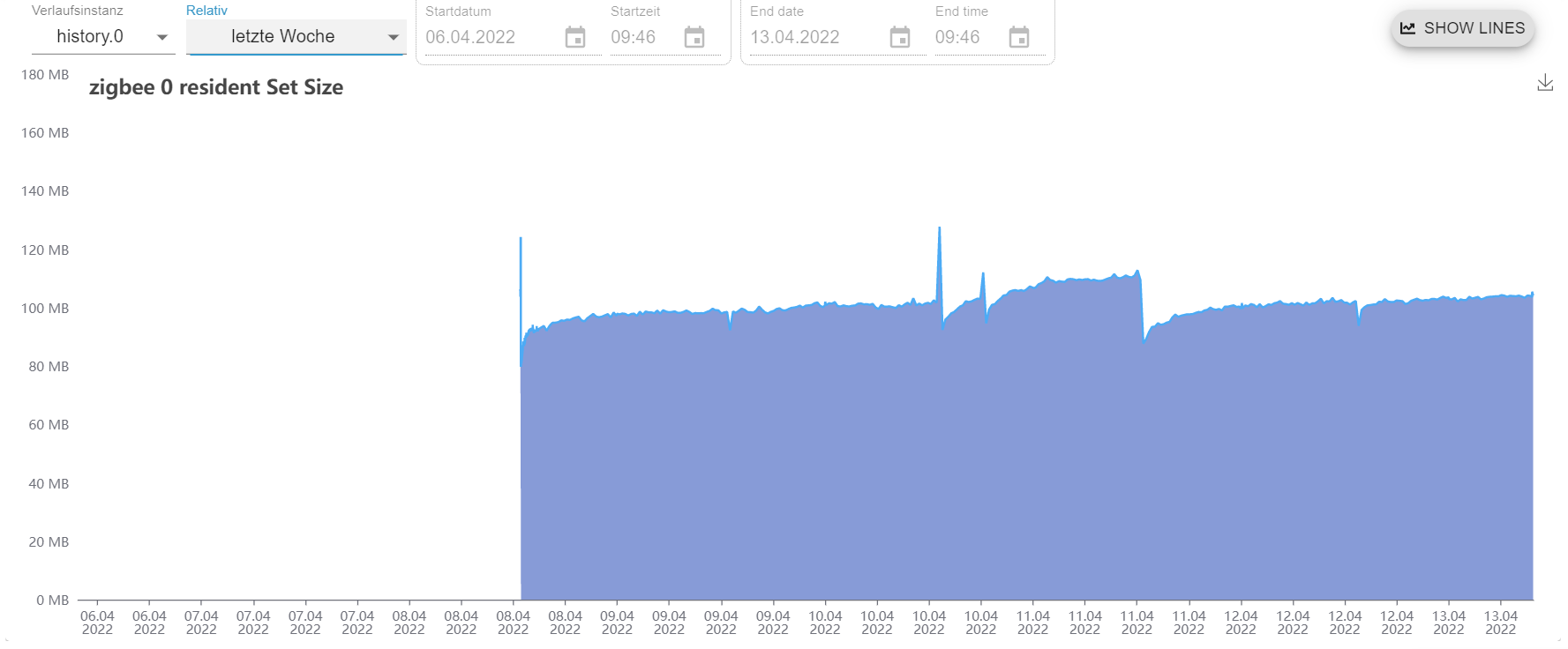

Memory leak on 4.0.x (at least 4.0.21) at adapter level when many logs are forwarded to Seq (ZigBee with Debug) #1888

Comments

Which db type?

And does the chart mean that you switched off debug logging, or Seq logging altogether?

Objects type: jsonl

Currently Seq is still enabled, but debug logging on the ZigBee adapter is switched off.

So basically it happens when a lot of logs go through Seq, correct?

@PeterVoronov What's the Seq config? Can you please copy that part out of iobroker-data/iobroker.json? Especially interesting is the "Loglevel" setting there: is it empty or set to something?

As I wrote previously, it happened in two situations:

And here is the Seq config:

@PeterVoronov Would you be up for a bit of testing? ;-) We do a deepClone of the log object in https://github.com/ioBroker/ioBroker.js-controller/blob/master/packages/common/src/lib/common/logger.ts#L96 (but in general, for all log levels). One idea would be to adjust the generated JS file on your system to set "ioInfo = null" after the super call. The file should be in @iobroker/js-controller-common.

I'm ready. Only one thing: I don't fully understand what I have to do...

And? What do I have to do?

Ok, then let's go :-)

Ok, I did it and will monitor. But I'm not sure whether it will help, because the original /@iobroker/js-controller-common/lib/common/logger.js contains this:

Thank you, yes, I missed that you also need to adjust this. Good catch. I fear that the "clone" might be the issue...

Has anything changed in this behavior with controller v5, or is it still occurring?

Not tested yet, but I still have the Seq Docker image on the same machine; I will check soon.

I faced an issue with the ZigBee adapter: it was periodically restarted due to "JavaScript heap out of memory". Initially I suspected a problem inside the adapter, but after studying the situation over a long period I found the root cause outside the adapter: the issue occurred only when log forwarding to Seq was enabled and the log level was set to Debug, either on the adapter or at the Seq level.

So it looks like, under massive log generation, some memory is not freed, and once used memory grows up to the threshold, the adapter is restarted.
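To watch this growth from inside a Node.js process rather than with `top`, one option is to sample the V8 heap periodically. The following is a minimal sketch, assuming Node.js with `@types/node`; it is not part of ioBroker or js-controller, and the names `heapUsagePercent`, `sampleHeap` and `startHeapMonitor` are hypothetical. It compares `process.memoryUsage().heapUsed` with `heap_size_limit` from `v8.getHeapStatistics()`, which is roughly the ceiling whose exhaustion ends in "JavaScript heap out of memory".

```typescript
import { getHeapStatistics } from "node:v8";

// Hypothetical helper: share of the V8 heap limit currently in use, in percent.
function heapUsagePercent(heapUsed: number, heapLimit: number): number {
  if (heapLimit <= 0) {
    throw new RangeError("heapLimit must be positive");
  }
  return (heapUsed / heapLimit) * 100;
}

interface HeapSample {
  usedMiB: number;
  limitMiB: number;
  percent: number;
}

// Hypothetical helper: one snapshot of this process's heap usage.
function sampleHeap(): HeapSample {
  const used = process.memoryUsage().heapUsed;
  const limit = getHeapStatistics().heap_size_limit;
  const toMiB = (bytes: number): number => bytes / (1024 * 1024);
  return {
    usedMiB: toMiB(used),
    limitMiB: toMiB(limit),
    percent: heapUsagePercent(used, limit),
  };
}

// Hypothetical helper: logs one sample every intervalMs until the returned timer is cleared.
function startHeapMonitor(intervalMs: number): NodeJS.Timeout {
  const timer = setInterval(() => {
    const s = sampleHeap();
    console.log(
      `heap used ${s.usedMiB.toFixed(1)} MiB of ${s.limitMiB.toFixed(1)} MiB (${s.percent.toFixed(1)} %)`,
    );
  }, intervalMs);
  // Do not keep the process alive only because the monitor is running.
  timer.unref();
  return timer;
}
```

Calling `startHeapMonitor(60_000)` in the process under test prints one line per minute, and `clearInterval` on the returned timer stops it. A percentage that keeps rising while Seq forwarding and debug logging are both enabled, and stays flat when either is off, would be consistent with the behavior described above.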

top - 16:07:28 up 15 days, 21:17, 1 user, load average: 0.77, 0.85, 0.76

Tasks: 253 total, 5 running, 248 sleeping, 0 stopped, 0 zombie

%Cpu(s): 3.6 us, 1.5 sy, 0.0 ni, 94.7 id, 0.1 wa, 0.0 hi, 0.1 si, 0.0 st

MiB Mem : 7876.6 total, 855.8 free, 5919.8 used, 1100.9 buff/cache

MiB Swap: 7628.0 total, 5092.7 free, 2535.3 used. 1580.3 avail Mem
