Very poor performance in Firestore reads due to proto-plus sequence unmarshaling #224

Labels

P2

A nice-to-fix bug

priority: p2

Moderately-important priority. Fix may not be included in next release.

🚨

This issue needs some love.

type: bug

Error or flaw in code with unintended results or allowing sub-optimal usage patterns.

Environment details

Problem

We have been using Firestore with the python admin SDK for some time now, and in some cases the latency in fetching data was nowhere near the expected latency. For example, reading 500 (very small) documents from a collection could take up to 30

seconds depending on which fields the documents had. After some experiments, we found out this poor performance directly related with the presence of empty arrays in the documents.

Seemingly related issues

Steps to reproduce

Leading to the following result:

Issue analysis

To demonstrate the issue with empty arrays, we created a collection containing 500 documents each having a single field as follows:

We then fetched the data with the following code:

This required 9.76 seconds. By profiling the python code we understood that this issue was related to an extremely high

number of calls (15,295,500 times) to the

deepcopyfunction of the Python standard library, as it can be seenin the following profiling output:

The call graph showed us that a lot of calls to the

deepcopyfunction were caused by the_pb_typefunction defined in the RepeatedComposite class (contained in "proto.marshal.collections.repeated") used to infer the protocol buffer type for a sequence. The reason for this is the following snippet taken from the "_pb_type" function:proto.marshal.collections.repeated:RepeatedComposite._pb_typewhich performs a

deepcopyof theself.pbelement just to add an element in order to infer the type of the sequence.Proposed solution

By replacing the above snippet with the following snippet we can bypass the

deepcopy:That is, we get the type of the protocol buffer from the protected attributes. With this change, fetching the 500 documents took 0.24 seconds compared to 9.76 seconds with the original snippet. In fact, if we profile the code with the newly introduced change, we see that the number of calls to the

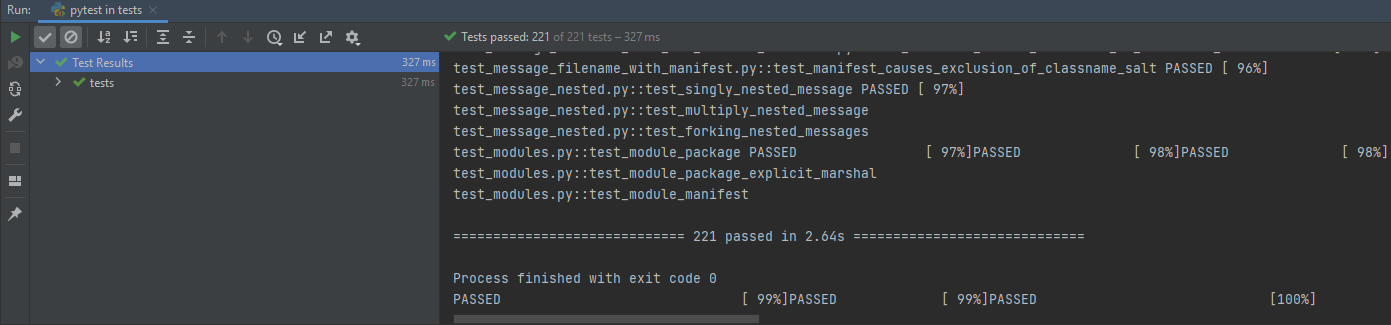

deepcopyfunction went from 15,295,500 to 1,500.Tests

All tests pass after the proposed solution was implemented.

Next steps

As soon as the maintainers have validated (or improved upon) our proposed solution, we can readily submit a PR to address this issue.

@zoncrd @lucastanzani

The text was updated successfully, but these errors were encountered: