no module named 'torch._C' #42

Seems like you do not have

Thanks @DerekChia! @ChloeJKim, I agree this looks like a PyTorch issue, unfortunately. If you're totally stuck, let me know and I can try to help debug. Closing for now.

Seems like this is really an issue with PyTorch. I did a quick search and found this (pytorch/pytorch#574). Perhaps you can uninstall and install torch again?
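Before reinstalling, it can help to confirm that the failure comes from the PyTorch install itself rather than from the DyGIE code. The following is a minimal sketch using only the standard library; `try_import` is a hypothetical helper written for this illustration, not part of PyTorch or DyGIE.

```python
import importlib


def try_import(module_name):
    """Return (True, None) if the module imports, else (False, error text)."""
    try:
        importlib.import_module(module_name)
        return True, None
    except ImportError as err:
        # ModuleNotFoundError is a subclass of ImportError, so it lands here too.
        return False, f"{type(err).__name__}: {err}"


if __name__ == "__main__":
    # torch._C is PyTorch's compiled extension module. If `torch` itself
    # cannot be imported, the second check fails for the same reason.
    for name in ("torch", "torch._C"):
        ok, error = try_import(name)
        print(f"{name}: {'ok' if ok else error}")
```

Run it inside the same Conda environment you use for `allennlp`. If either line prints an error there, uninstalling and reinstalling torch in that environment is the natural next step.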

@ChloeJKim let me know if this issue resolves things for you.

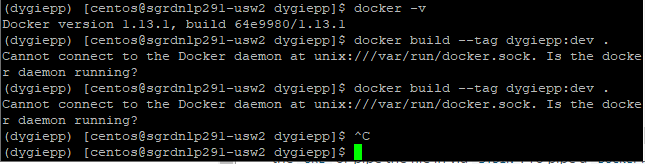

Docker was added by a contributor, @GillesJ; unfortunately, I don't have the bandwidth to offer support for it. Let's try to get it working without Docker. Are you interested in training your own model, or in making predictions on an existing dataset? Also, can you confirm that you created a clean Conda environment and followed the instructions exactly as in the

In response to this question: I want to first make predictions on existing datasets (what you have for GENIA) to see the results, and possibly predict on my own datasets in the future. Do you know how long prediction takes on the processed GENIA data? Thank you!

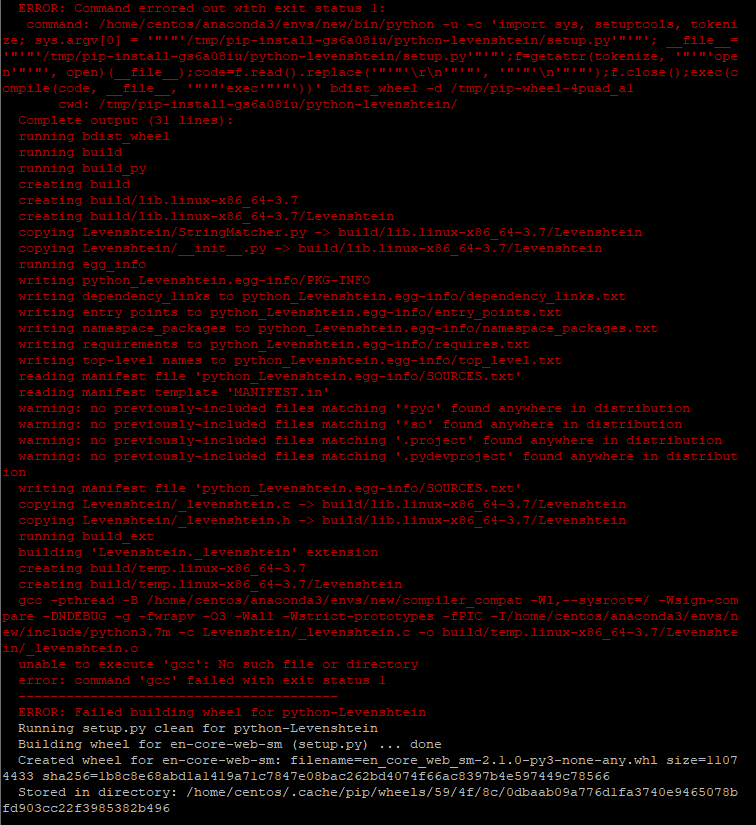

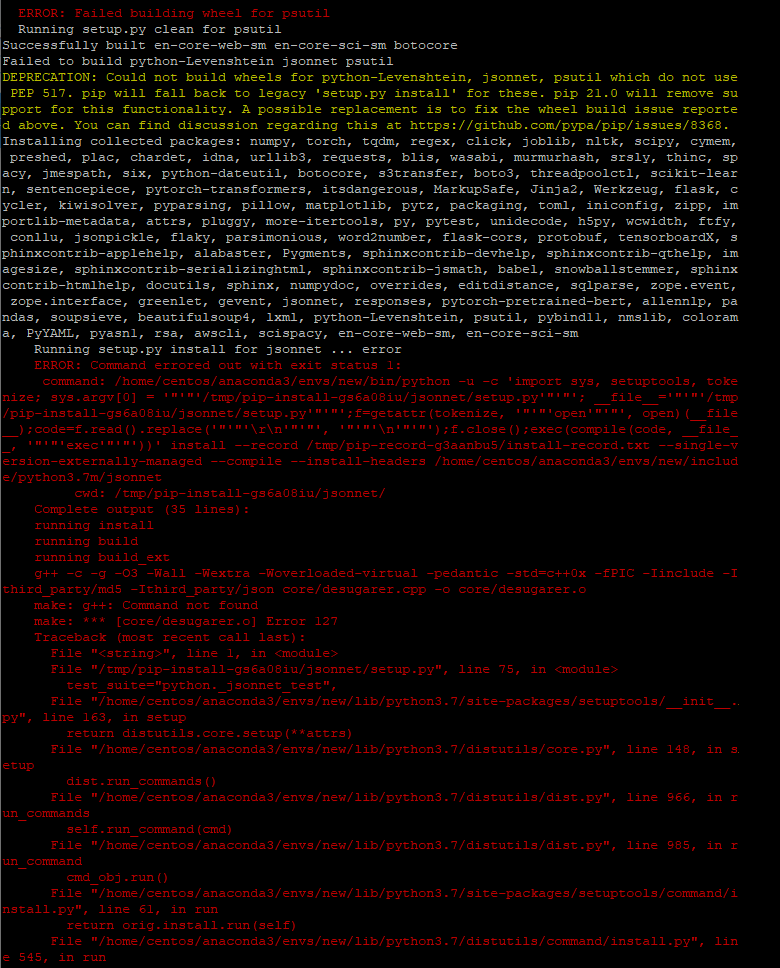

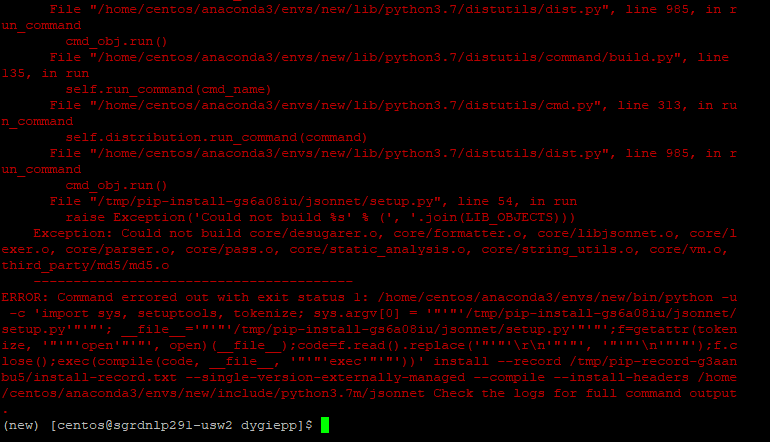

I'm looking through the install logs and it looks like there are some issues getting some of the dependencies installed. A few quick observations:

Unfortunately, I can't offer in-depth support when it comes to dealing with dependencies. If my observations don't help, I think your best bet is to create GitHub issues in the relevant repositories (e.g. python-Levenshtein). Once you get the dependencies installed, I can help with anything in the DyGIE code. On making predictions: it's pretty quick. Predicting on GENIA takes a couple of minutes.

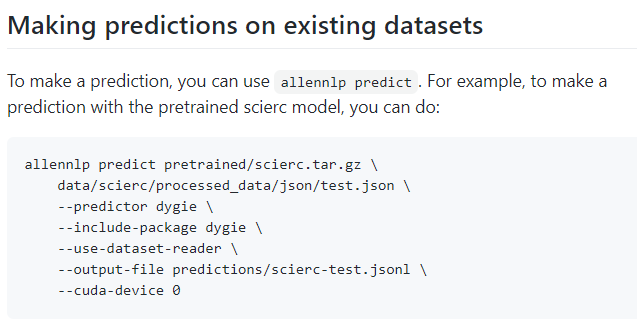

Thank you guys, I installed PyTorch again and it fixed the problem.

allennlp predict scripts/pretrained/genia-lightweight.tar.gz \ scripts/processed_data/json-coref-ident-only/test.json \ --predictor dygie \ --include-package dygie \ --use-dataset-reader \ --output-file scripts/predictions/genia-test.jsonl \ --cuda-device 0

On top of this, what would the output of prediction on the GENIA data look like? Thank you!

I think you're getting an error because your backslashes aren't followed by newlines: https://superuser.com/questions/794963/in-a-linux-shell-why-does-backslash-newline-not-introduce-whitespace. Either preserve the newlines from the example in the README, or remove the backslashes in your command. The output will be formatted as described here https://github.com/dwadden/dygiepp/blob/master/doc/data.md. Predicted fields will have the word predicted prepended, for instance predicted_ner.
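To see why the backslashes matter, here is a rough sketch that approximates POSIX shell word splitting with Python's `shlex` module. It rests on a few assumptions: `shell_words` is a hypothetical helper, the file names are shortened placeholders, and because `shlex` does not implement line continuation, the backslash-newline pairs are removed by hand first, as a shell would do.

```python
import shlex


def shell_words(command):
    """Approximate how a POSIX shell splits a command into arguments."""
    # A shell deletes backslash-newline pairs (line continuation) before
    # splitting into words; shlex does not, so do that step explicitly.
    joined = command.replace("\\\n", "")
    return shlex.split(joined)


# Backslashes followed by newlines, as in the README example.
multi_line = "allennlp predict model.tar.gz \\\n  test.json \\\n  --predictor dygie"

# The same command pasted onto one line with the backslashes left in.
one_line = "allennlp predict model.tar.gz \\ test.json \\ --predictor dygie"

print(shell_words(multi_line))
print(shell_words(one_line))
```

In the one-line version, each backslash escapes the space after it, so that space becomes part of the next argument: the program receives `' test.json'` and `' --predictor'` with a leading space, which no longer match the intended file name or option.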

Thank you @dwadden. After getting rid of the backslashes, I got the following attribute error: 2020-09-18 11:24:40,258 - INFO - pytorch_pretrained_bert.modeling - Better speed can be achieved with apex installed from https://www.github.com/nvidia/apex . Any idea on this?

I see that for SciERC, it shows relations like "mayor of" and "elected in".

What relation types are available for the GENIA dataset?

Thank you,

On Thu, Sep 17, 2020 at 10:32 PM David Wadden ***@***.***> wrote:

I think you're getting an error because your backslashes aren't followed by newlines: https://superuser.com/questions/794963/in-a-linux-shell-why-does-backslash-newline-not-introduce-whitespace. Either preserve the newlines from the example in the README, or remove the backslashes in your command.

The output will be formatted as described here https://github.com/dwadden/dygiepp/blob/master/doc/data.md. Predicted fields will have the word predicted prepended, for instance predicted_ner.


It looks like you don't have SciBERT installed and placed in the correct folder. Please follow the instructions in the README to download SciBERT. GENIA only has named entities, not relations. I don't remember the schema offhand, but once you've processed the data you can examine the named entity labels to determine this.
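One way to examine the labels is to count them across the processed file. The sketch below assumes the layout described in doc/data.md: one JSON document per line, with an `ner` field holding, for each sentence, a list of `[start, end, label]` spans. `count_ner_labels` is a hypothetical helper, and the toy document and its labels are invented for illustration; they are not the GENIA schema.

```python
import json
from collections import Counter


def count_ner_labels(lines, field="ner"):
    """Count entity labels across documents given as JSON lines."""
    counts = Counter()
    for line in lines:
        if not line.strip():
            continue  # skip blank lines
        doc = json.loads(line)
        # Assumed layout: doc[field] is a list with one entry per sentence,
        # and each entry is a list of spans whose third item is the label.
        for sentence_spans in doc.get(field, []):
            for span in sentence_spans:
                counts[span[2]] += 1
    return counts


# Toy input with made-up labels, standing in for a processed data file.
sample = [json.dumps({
    "doc_key": "toy",
    "sentences": [["A", "short", "example", "sentence"]],
    "ner": [[[0, 0, "LABEL_A"], [2, 3, "LABEL_B"]]],
})]

print(count_ner_labels(sample))
```

For a real file, pass the open file object instead of `sample`, for example `count_ner_labels(open(path))` with your own path. Passing `field="predicted_ner"` should do the same for a predictions file, given that predicted fields carry the predicted prefix.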

I just finalized a fairly substantial code upgrade. You no longer have to download SciBERT to get things working. The new docs should provide enough info to get predictions working.

Closing this for lack of activity. Feel free to reopen if you're still having problems.

Hi

Thank you for your amazing work and for publishing the code!

While replicating your work on making predictions on the existing dataset, I encountered the following error. Can you please help me out?

allennlp predict ./scripts/pretrained/genia-lightweight.tar.gz \ ./scripts/processed_data/json-coref-ident-only/test.json \ --predictor dygie \ --include-package dygie \ --use-dataset-reader \ --output-file predictions/genia-test.jsonl \ --cuda-device 0

Thank you!
