
Problem description:

I'm wondering what exactly the parameter `thin` means. I find that the result differs for different values of `thin`.

So I'm confused about what value I should set for the parameter `thin`: `flat_samples = sampler.get_chain(discard=100, thin=?, flat=True)`?

Let steps = 5000, walkers = 32, discard = 100, and let `flat_samples = sampler.get_chain(discard=100, flat=True, thin=i)`.

If I denote the size of `flat_samples` when thin = i as 'flat_samples | thin=i', I found:

flat_samples | thin=1 = (5000-100)*32 = 156800

flat_samples | thin=i = [(5000-100)/i] * 32, where [ ] means rounding down to an integer
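The size relation above can be checked without running a sampler. The following is a minimal sketch that uses a zero-filled NumPy array as a stand-in for the stored chain; the helper name `thinned_flat_size` is hypothetical, and the slicing used to mimic `discard` and `thin` is an assumption about how the chain is subsampled, not a quote of emcee's code.

```python
import numpy as np


def thinned_flat_size(nsteps, nwalkers, discard, thin):
    """Rows expected in the flattened chain after discarding and thinning."""
    return ((nsteps - discard) // thin) * nwalkers


# Stand-in for a stored chain with shape (nsteps, nwalkers, ndim).
nsteps, nwalkers, ndim, discard = 5000, 32, 3, 100
chain = np.zeros((nsteps, nwalkers, ndim))

for thin in (1, 2, 15):
    # Assumed subsampling: drop `discard` steps, then keep every `thin`-th step.
    kept = chain[discard + thin - 1 :: thin]
    flat = kept.reshape(-1, ndim)
    assert flat.shape[0] == thinned_flat_size(nsteps, nwalkers, discard, thin)
    print(thin, flat.shape)
```

Thinning therefore only changes how many of the already computed steps are returned; it does not rerun or alter the sampling itself.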

But I still do not understand the meaning of the parameter 'thin'.

Why should one thin a relatively large sample down to a smaller one?

Code to show my question:

    import emcee
    import matplotlib.pyplot as plt
    import numpy as np

    np.random.seed(123)

    # Choose the "true" parameters.
    m_true = -0.9594
    b_true = 4.294
    f_true = 0.534

    # Generate some synthetic data from the model.
    N = 50
    x = np.sort(10 * np.random.rand(N))
    yerr = 0.1 + 0.5 * np.random.rand(N)
    y = m_true * x + b_true
    y += np.abs(f_true * y) * np.random.randn(N)
    y += yerr * np.random.randn(N)


    def log_likelihood(theta, x, y, yerr):
        m, b, log_f = theta
        model = m * x + b
        sigma2 = yerr**2 + model**2 * np.exp(2 * log_f)
        return -0.5 * np.sum((y - model) ** 2 / sigma2 + np.log(sigma2))


    def log_prior(theta):
        m, b, log_f = theta
        if -5.0 < m < 0.5 and 0.0 < b < 10.0 and -10.0 < log_f < 1.0:
            return 0.0
        return -np.inf


    def log_probability(theta, x, y, yerr):
        lp = log_prior(theta)
        if not np.isfinite(lp):
            return -np.inf
        return lp + log_likelihood(theta, x, y, yerr)


    # Start the walkers in a small ball around x0.
    x0 = np.array([-1.00300851, 4.52831429, -0.79044033])
    pos = x0 + 1e-4 * np.random.randn(32, 3)
    nwalkers, ndim = pos.shape

    sampler = emcee.EnsembleSampler(nwalkers, ndim, log_probability, args=(x, y, yerr))
    sampler.run_mcmc(pos, 5000, progress=True)

    flat_samples = sampler.get_chain(discard=100, thin=15, flat=True)
    print(flat_samples.shape)

    # Record the number of flattened samples for thin = 1, ..., 15.
    x = np.zeros(15)
    y = np.zeros(15)
    for i in range(1, 16):
        flat_samples = sampler.get_chain(discard=100, flat=True, thin=i)
        x[i - 1] = i
        y[i - 1] = len(flat_samples)

    fig, ax = plt.subplots()
    ax.semilogy(x, y, '.-')
    plt.xlabel("thin")
    plt.ylabel(r"$\log_{10}$ len(flat_samples)", fontsize=16)
    plt.savefig("thin.png")
    plt.show()

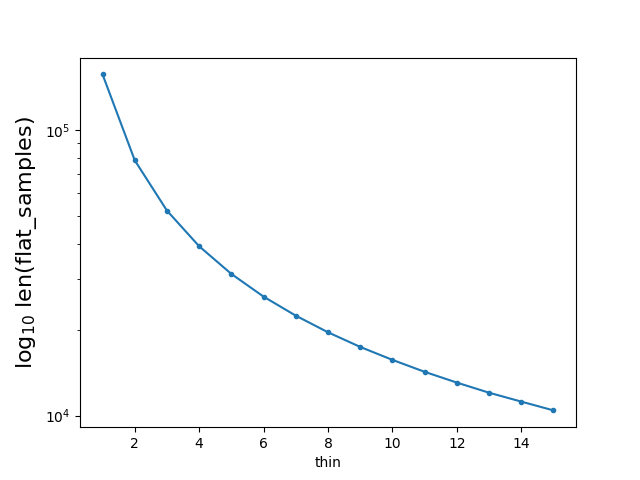

By running the above code, I got a figure showing the relation between the value of thin and len(flat_samples):

The samples in an MCMC chain are not independent (see https://emcee.readthedocs.io/en/stable/tutorials/autocorr/ for example), so it can be redundant to store many non-independent samples. `thin=n` takes the chain and returns every nth sample, and you could set n to something like the integrated autocorrelation time without loss of statistical power. You will find that you get marginally different results because MCMC is a stochastic process, so what you're seeing is the Monte Carlo error.
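To illustrate why neighbouring samples are redundant, here is a small sketch that uses a toy AR(1) process as a stand-in for one walker's trace of a single parameter. The AR(1) model, the helper names `ar1_chain` and `lag1_autocorr`, and the choice `rho = 0.9` are all assumptions made for illustration; a real emcee chain will have a different correlation structure.

```python
import numpy as np


def ar1_chain(n, rho, rng):
    """Toy correlated chain x[t] = rho * x[t-1] + noise, unit stationary variance."""
    noise = rng.standard_normal(n) * np.sqrt(1.0 - rho**2)
    x = np.empty(n)
    x[0] = rng.standard_normal()
    for t in range(1, n):
        x[t] = rho * x[t - 1] + noise[t]
    return x


def lag1_autocorr(x):
    """Sample correlation between neighbouring entries of x."""
    x = x - x.mean()
    return np.dot(x[:-1], x[1:]) / np.dot(x, x)


rng = np.random.default_rng(0)
rho = 0.9
chain = ar1_chain(100_000, rho, rng)

# For an AR(1) process, the integrated autocorrelation time of x
# is (1 + rho) / (1 - rho), which is about 19 for rho = 0.9.
tau = (1 + rho) / (1 - rho)
thin = int(round(tau))
thinned = chain[thin - 1 :: thin]

r_full = lag1_autocorr(chain)
r_thin = lag1_autocorr(thinned)
print(f"tau = {tau:.1f}, thin = {thin}")
print(f"full chain:    {len(chain)} samples, lag-1 autocorrelation {r_full:.2f}")
print(f"thinned chain: {len(thinned)} samples, lag-1 autocorrelation {r_thin:.2f}")
```

In this toy setting the full chain has strongly correlated neighbours, while the thinned chain is far shorter and its neighbours are only weakly correlated. For a real run, `sampler.get_autocorr_time()` gives emcee's own per-parameter estimate of the autocorrelation time.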

No, that's not necessarily right and there's never going to be any "best" thin value. That can be a fine choice in some cases, but remember that the integrated autocorrelation time depends on the target integral so you might be throwing away information for some targets if you use that. I normally don't use the thin parameter, unless I'm storing a large number of chains and want to save on hard drive space.
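The dependence on the target integral can be seen in a toy example. The sketch below again assumes a Gaussian AR(1) process as a stand-in for a chain and uses a crude fixed-window estimator (the hypothetical helper `integrated_time_fixed_window`, not emcee's estimator) to compare the autocorrelation time for estimating the mean of `x` with that for the mean of `x**2`.

```python
import numpy as np


def ar1_chain(n, rho, rng):
    """Toy correlated chain x[t] = rho * x[t-1] + noise, unit stationary variance."""
    noise = rng.standard_normal(n) * np.sqrt(1.0 - rho**2)
    x = np.empty(n)
    x[0] = rng.standard_normal()
    for t in range(1, n):
        x[t] = rho * x[t - 1] + noise[t]
    return x


def integrated_time_fixed_window(f, window=100):
    """Crude estimate tau = 1 + 2 * sum_{k=1}^{window} rho_k for the series f."""
    f = f - f.mean()
    norm = np.dot(f, f)
    rho_k = [np.dot(f[:-k], f[k:]) / norm for k in range(1, window + 1)]
    return 1.0 + 2.0 * np.sum(rho_k)


rng = np.random.default_rng(1)
rho = 0.9
x = ar1_chain(200_000, rho, rng)

# Theory for a Gaussian AR(1) process:
#   target x    -> tau = (1 + rho) / (1 - rho)       (about 19)
#   target x**2 -> tau = (1 + rho**2) / (1 - rho**2) (about 9.5)
tau_x = integrated_time_fixed_window(x)
tau_x2 = integrated_time_fixed_window(x**2)
print(f"tau for mean of x:    {tau_x:.1f}")
print(f"tau for mean of x**2: {tau_x2:.1f}")
```

Here the same chain decorrelates roughly twice as fast for `x**2` as for `x`, so thinning by the autocorrelation time of `x` would discard samples that still carry information about the mean of `x**2`.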

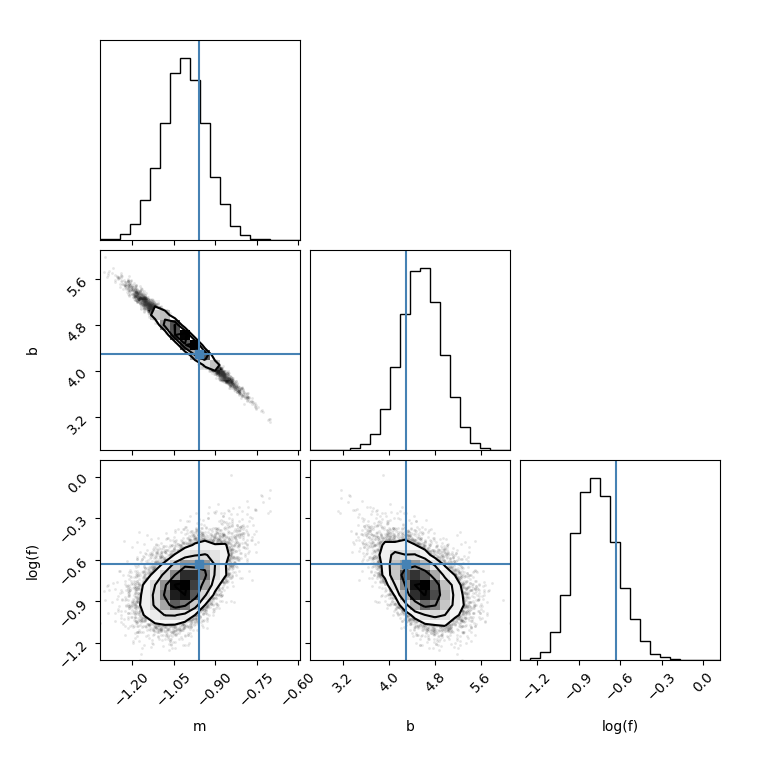

Then I made a corner plot with `thin=1`.

I made another corner plot with `thin=15` and found that the figure is different:

`flat_samples = sampler.get_chain(discard=100, thin=15, flat=True)`

When I then ran the following code, I found that the results also differ for different values of `thin`.
