AttributeError 'list' object has no attribute 'decode' with redis backend #4363

Comments

|

This seems like a race condition in the Redis connection pool from concurrent worker operations. Which worker pool type are you using? I think that if you use the prefork pool you will not run into this issue. Let me know what the outcome is, if you try it. |

|

Hey @georgepsarakis, thanks for your response, apparently it seems we are running on when checked output when starting celery (which runs under systemd) i got this output: some other info:

let me know if i can add anything else, would like to help as much as possible to resolve that :) thanks! |

|

Another thing (even though I'm not sure if it could be related): sometimes we get this exception (same setup as above). We tried to update py-redis (we will see, but it's just a minor one). Any hint is very much appreciated :) |

|

@Twista can you try a patch on the Redis backend? If you can add here the following code: I hope that this will force the client cached property to generate a new Redis client after each worker fork. Let me know if this has any result. |
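
The patch itself is not preserved in this thread. As a rough illustration of the idea described above (dropping a cached client after a fork so each child process builds its own), here is a minimal sketch. It is not Celery's code: `ForkAwareClientHolder` and `factory` are hypothetical names, and `factory` stands in for whatever constructs the Redis client.

```python
import os


class ForkAwareClientHolder:
    """Lazily builds a client and forgets it in forked child processes.

    Hypothetical helper for illustration only; not part of Celery or redis-py.
    """

    def __init__(self, factory):
        # `factory` is an assumed zero-argument callable that returns a client.
        self._factory = factory
        self._client = None
        # os.register_at_fork is available on POSIX with Python 3.7+.
        # Note: registering a bound method keeps this holder alive for the
        # lifetime of the process.
        if hasattr(os, "register_at_fork"):
            os.register_at_fork(after_in_child=self.reset)

    @property
    def client(self):
        # Create the client on first access and cache it afterwards.
        if self._client is None:
            self._client = self._factory()
        return self._client

    def reset(self):
        # Drop the inherited client so the child opens its own connections.
        self._client = None
```

With this shape, a child process that touches `holder.client` after the fork gets a fresh client instead of sharing sockets with its parent.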

|

Hey, sorry for the late response. Just a follow-up: we just patched it and will see if it helps. Hopefully soon :) Thanks for the help :) |

|

Let us know your feedback. |

|

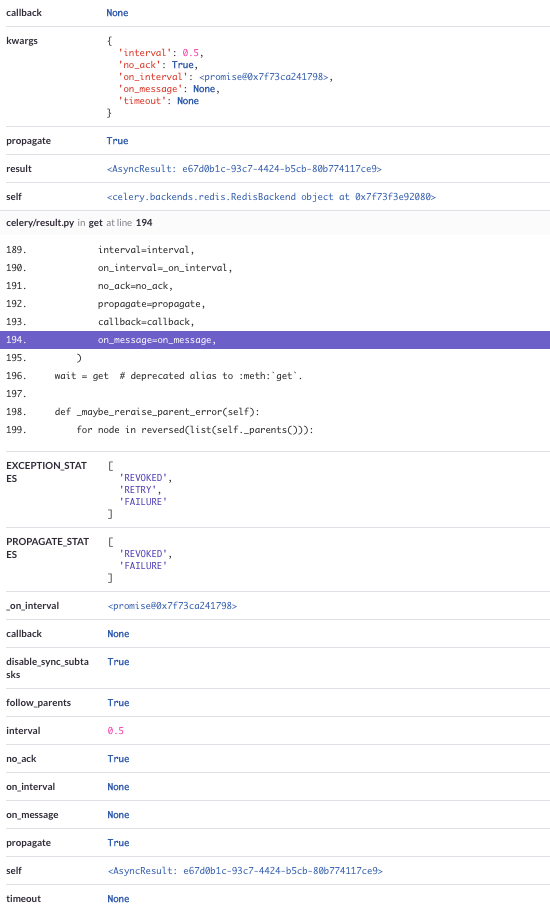

Hi, I'm experiencing pretty much the same issue (using celery/celery/backends/redis.py Line 197 in 2547666

|

|

@georgepsarakis unfortunately the patch didn't help. :-( Please let me know if you want some logs or other patches tested. |

|

@wimby thanks a lot for the feedback. Can you please tell me what options are you using for starting the worker? |

|

Hey @georgepsarakis, that's the whole command we use to start celery |

|

I faced the same issue when I decided to try the redis broker; rolled back to rabbit for now. |

|

I'm seeing this as well. Celery 4.2.0, kombu 4.2.1, redis 2.10.6. Using redis for both the broker and the results. Redis traffic goes between servers over an encrypted stunnel connection over ports. Running into it in a Django 1.11 running on mod_wsgi (2 processes, 15 threads each), and it's not just limited to the above exception. I can't copy-paste full stack traces. The application loads up a web page with a bunch of ajax requests (90ish per page load), each of which runs a background task via celery. The tasks complete successfully, but getting the results back is difficult. When submitting the tasks, I've gotten When getting the results back, I've gotten I'm switching back to doing bulkier tasks, which should at least minimize this happening. However, I've seen cases where protocol issues will still happen when things have a minimal load. I wouldn't be surprised if this was being caused by underlying networking issues. I am adding additional retry logic as well for submitting requests. |
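
The retry logic mentioned above is not shown in the thread. One possible shape for it is sketched below, assuming a generic wrapper with exponential backoff. `call_with_retry` and its parameters are hypothetical names, and the exception types worth retrying are an assumption that depends on what the client actually raises.

```python
import time


def call_with_retry(func, *args, retries=3, base_delay=0.1,
                    retry_on=(ConnectionError,), sleep=time.sleep, **kwargs):
    """Call func, retrying on the given exceptions with exponential backoff.

    Hypothetical helper: `retries` is the number of extra attempts after the
    first call, and `sleep` is injectable so the delays can be observed.
    """
    for attempt in range(retries + 1):
        try:
            return func(*args, **kwargs)
        except retry_on:
            # Out of attempts: surface the last error to the caller.
            if attempt == retries:
                raise
            # Wait base_delay, then 2x, 4x, ... before the next attempt.
            sleep(base_delay * (2 ** attempt))
```

A submission could then be wrapped as `call_with_retry(some_task.apply_async, args=(1, 2))`, where `some_task` is a placeholder for a Celery task. Retrying only hides the symptom: it does not remove whatever is corrupting the responses.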

|

@deterb if you set The fix to respect |

|

@asgoel The whole point is to get the results for the bulk of the requests (it's not so much running in the background for them, but running heavy calculations on a server built for it instead of the web server). I will add the ignore result for the others though and see if that helps. |

|

@deterb this sounds like an issue with non thread-safe operations. Is it possible to try not to use multithreading? |

|

@georgepsarakis I agree that it sounds like a multithreading issue. I'll try configuring mod_wsgi to run with 15 processes, 1 thread per process and see if I still see that behavior. I'm not doing any additional threading or multiprocessing outside of mod_wsgi. I saw similar behavior (though with less frequency) running with Django's runserver. The only interfacing with redis clients in the web application is through Celery, namely the submitting of tasks and retrieval of their results. redis-py claims to be thread safe. I'll try to do more testing tomorrow, and see if I can recreate outside of Django and without the stunnel proxy. |

|

@georgepsarakis I didn't get a chance to try today, but I believe #4670 (namely the shared |

|

Hey guys, I was facing these errors a lot. Nevertheless they were still occurring from time to time. Therefore I decided to change the result backend to PostgreSQL and then the errors disappeared completely. |

|

An interesting thing: we started experiencing this issue right after deploying a new release that

So, it's interesting to know this may be something that depends on the python version. Hoping it may help tracking the problem down. (For the rest, we're using celery 4.2.1 and redis 2.10.6) Also, the problematic task is launched by Celery beat, and we've had the same problem in a task launched by another task |

|

Thanks for the feedback everyone. Just to clarify a few things:

I am not aware if the corresponding operations can be performed upon Django startup, perhaps this callback could help; calling |

|

not sure but it is planned for 4.5. |

|

FWIW, even with the code snippet above, we still see periodic Protocol Errors, though less frequently: The all-zeros response is the most common one, though I just saw a I don't discount the possibility that this could also just be a glitch in Redis and nothing to do with Celery though. It's kind of hard to tell. This is using Celery 4.3.0, Kombu 4.6.6, and Redis 3.3.11 |

|

I have started getting this error frequently after implementing an async call. A Flask app calls Celery. It works sometimes, and at other times I get the \x00 result: More weird errors:

And: Python 3.7, Celery 4.4.1, Redis 3.4.1. |

|

could any of you try this patch #5145? |

I wouldn't mind trying it, but I note there's a great deal of contention on the approach in that patch, builds with that patch are failing, and it's been sitting there for 17 months. It kind of looks like the idea has been abandoned. |

|

Give it a try first and, if you don't mind, review the PR and prepare a failing test for it. |

|

FWIW, confirmed today this is still an issue with Celery 4.4.2, Kombu 4.6.8, Redis 3.4.1. |

|

Considering how old of an issue this is, is there any hint of what causes it? I don't think my project has seen it [much] recently, but nonetheless, at least a couple projects continue to see this bug occur. |

|

@jheld Comments from #4363 (comment) fit with what I saw last time I poked at it - namely the result consumer initializes a PubSub which ends up getting shared across threads and that PubSubs are not thread safe. My workaround was ignoring the results and saving/watching the results independently from my Django app.
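
To make the thread-safety concern above concrete: one common way to avoid sharing a non-thread-safe object is to keep one instance per thread. The sketch below is an illustration under that assumption, not how Celery's result consumer works. `PerThreadClient` and `factory` are hypothetical names, and `factory` stands in for whatever builds the client or PubSub object.

```python
import threading


class PerThreadClient:
    """Hands out one client per thread instead of sharing a single instance.

    Hypothetical helper for illustration only.
    """

    def __init__(self, factory):
        # `factory` is an assumed zero-argument callable returning a client.
        self._factory = factory
        # threading.local gives every thread its own attribute namespace.
        self._local = threading.local()

    def get(self):
        client = getattr(self._local, "client", None)
        if client is None:
            # First access from this thread: build and remember a client.
            client = self._factory()
            self._local.client = client
        return client
```

Each thread that calls `get()` sees only its own client, so two threads never read from the same connection at the same time.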

|

|

Earlier in the issue, somebody mentioned this started happening after they upgraded Django:

We just upgraded from 1.11 to 2.2, and we've started seeing it. I can't imagine why, but thought I'd echo the above. |

|

I just checked this redis/redis-py#612 (comment) but not fully sure though |

|

We just upgraded from Python 2 to Python 3, including upgrading Celery from v3 to v4, and I started to get this error in the workers of one particular queue. Celery 4.4.6. We have 2 celery workers started with this command: Here is the stack trace: |

|

this should have been fixed in 5.1.x versions |

|

I'm seeing this issue on Celery 5.2.1, should I open a new issue or can we discuss here? |

Not sure if it's a py-redis issue (redis/redis-py#612 (comment)) or a Celery one, but we have to investigate. I am busy with other backlogs now. Could you try to dig deeper and check the related merged PRs? |

|

From that link it does seem like this is a gevent issue and not celery |

Checklist

celery -A proj report in the issue.

redis-server version, both 2.x and 3.x

Steps to reproduce

Hello, I'm not sure what can cause the problems, and I already tried to find a similar solution, but no luck so far. Therefore I'm opening an issue here; hopefully it helps.

The issue is described there as well (not by me): redis/redis-py#612

So far, the experience is that it happens in both cases, where the backend is and isn't involved (meaning just when calling apply_async(...)).

Exception when calling apply_async():

Exception when calling .get() (this one also has int instead of list):

Hope it helps

Expected behavior

To not throw the error.

Actual behavior

AttributeError: 'list' object has no attribute 'decode'

Thanks!
