Celery Beat Periodic Task Duplicated Incessantly #4041

Comments

|

This may be the same timezone issue that was fixed with this Pull Request. Could you try with the current |

|

I have this exact bug on the latest master version (as of today). I have just forked celery to create an example project with django-celery-beat that replicates this issue: marco-silva0000@4c99c06. I am not sure what the core reason for this problem is, but from what I've tested so far, the is_due calculation has a correctly calculated last_run_at, but self.remaining_estimate(last_run_at) returns a negative value because of timezone differences, in my case -1 day, 23:45:24.982145. This repeats forever and will launch tasks forever. This is on the latest pip version; on the latest master version there is still a calculation bug, as it schedules a task configured for 10m at 1h+10m because of timezone differences. |
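For anyone trying to picture the symptom, here is a minimal standard-library sketch. It is not Celery's actual `is_due` or `remaining_estimate` code; the helper names, the fixed UTC offsets and the 10-minute interval are assumptions chosen for illustration. It shows that the quoted value is how Python prints a small negative timedelta, and how comparing local wall-clock time against UTC wall-clock time can produce either a permanently negative estimate or an extra hour of delay, depending on the sign of the offset.

```python
from datetime import datetime, timedelta, timezone

# The value quoted in the comment is about -14m35s in Python's normalized form.
quoted = timedelta(seconds=-875, microseconds=-17855)
print(quoted)  # -1 day, 23:45:24.982145


def remaining_aware(last_run_at, now, interval):
    """Correct: both datetimes are timezone-aware, so subtraction compares instants."""
    return (last_run_at + interval) - now


def remaining_mixed(last_run_at, now, interval):
    """Buggy on purpose: local wall-clock time is compared with UTC wall-clock time."""
    last_wall = last_run_at.replace(tzinfo=None)
    now_wall = now.astimezone(timezone.utc).replace(tzinfo=None)
    return (last_wall + interval) - now_wall


interval = timedelta(minutes=10)

# Hypothetical zone behind UTC: the estimate stays negative, so the task looks due on every tick.
west = timezone(timedelta(hours=-4))
now_west = datetime(2017, 5, 20, 9, 0, tzinfo=west)
print(remaining_aware(now_west, now_west, interval))  # 0:10:00
print(remaining_mixed(now_west, now_west, interval))  # -1 day, 20:10:00

# Hypothetical zone ahead of UTC: the task is delayed by the offset instead.
east = timezone(timedelta(hours=1))
now_east = datetime(2017, 5, 20, 9, 0, tzinfo=east)
print(remaining_mixed(now_east, now_east, interval))  # 1:10:00
```

A scheduler that treats any estimate at or below zero as "due" would fire the first case on every tick, even immediately after a run.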

|

I also encountered this bug. It is caused by re-applying an offset to a datetime that is already offset and timezone-aware, and it is fixed in celery/django-celery-beat@2312ab5. Cherry-picking that commit onto master fixes it. |
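To illustrate what re-offsetting an already aware datetime means, here is a small sketch using only the standard library. It is not the code from the referenced commit; both helper names and the fixed UTC-4 zone are made up for the example.

```python
from datetime import datetime, timedelta, timezone

LOCAL = timezone(timedelta(hours=-4))  # assumed fixed offset, for illustration only


def to_local_buggy(dt, tz):
    """Treats every input as UTC, even one that already carries its own offset."""
    return dt.replace(tzinfo=timezone.utc).astimezone(tz)


def to_local_safe(dt, tz):
    """Only naive inputs are assumed to be UTC; aware inputs are converted as-is."""
    if dt.tzinfo is None:
        dt = dt.replace(tzinfo=timezone.utc)
    return dt.astimezone(tz)


aware = datetime(2017, 8, 9, 0, 11, tzinfo=LOCAL)

print(to_local_safe(aware, LOCAL))   # 2017-08-09 00:11:00-04:00
print(to_local_buggy(aware, LOCAL))  # 2017-08-08 20:11:00-04:00
print(aware - to_local_buggy(aware, LOCAL))  # 4:00:00
```

The buggy helper moves the instant by the full UTC offset, which is the kind of skew that can make a schedule look overdue or delayed.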

|

I will test this tomorrow to confirm the fix in my setup

|

|

I'm having this issue, using celery If I let settings as below, many tasks are generated each second. Even more, Only solution for now is to set Tried Returning to version Example: Return from |

|

try master branch |

|

Hi @auvipy . Thank you for the return, I've already tried and got the following error: Installed today using Blaming that line returned that a long time ago, it used to be: Would it be solved just by moving line 532 after line 535 ? |

|

This seems to be caused by https://github.com/celery/celery/pull/4381/files . Very weird though, since the signature of |

|

Confirming that this reproduces in Celery 4.1.0, but inconsistently and not for all scheduled tasks. A task was listed as due multiple times per second and was therefore launched about 32,000 times before it was caught. The actual schedule is set for daily at midnight UTC, and the problem started occurring at 16:20 UTC. Several other schedules have not exhibited this behavior yet, and since restarting we have not observed it again (although it's only been 24 hours). We do not set either flag.

python 2.7.12 |

|

Hello! We recently swapped out APScheduler for Celery (v.4.1.0) in Security Monkey, and we are seeing duplicate tasks being scheduled as well. We use celery beat to add tasks that many worker instances will then execute. Exactly one celery beat instance is running at any time. Our Based on my code, it's not clear to me how tasks are getting duplicated. Also, when reviewing our ElastiCache memory usage, whenever we re-run the celery beat instance the available memory clears up, which indicates to me that the purging is working. Does Celery have the concept of coalescing? Effectively, there should be no more than 1 of the same task scheduled and running at any given time. I'm still new to using Celery, so it's entirely possible that I made some mistakes, but would greatly appreciate the help. Running python 2.7.14, celery 4.1.0, and celery[redis] 4.1.0 as well. |
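On the coalescing question: nothing in this thread confirms a built-in Celery feature for it, but one application-level pattern is a short-lived "set if absent" lock taken before enqueuing. The sketch below is only an illustration. `FakeStore` and `enqueue_once` are hypothetical names, and the in-memory store merely imitates the `set(name, value, nx=True, ex=...)` call shape that redis-py exposes; a real deployment would pass an actual Redis client instead.

```python
import time


class FakeStore:
    """In-memory stand-in for a key-value store with set-if-absent and expiry."""

    def __init__(self):
        self._data = {}

    def set(self, key, value, nx=False, ex=None):
        now = time.monotonic()
        expires_at = self._data.get(key, (None, 0.0))[1]
        if nx and key in self._data and expires_at > now:
            return None  # key is still held, so the caller must not proceed
        ttl = ex if ex is not None else float("inf")
        self._data[key] = (value, now + ttl)
        return True


def enqueue_once(store, task_name, enqueue, ttl_seconds=60):
    """Enqueue task_name only if no unexpired lock exists for it."""
    if store.set("beat-lock:" + task_name, "1", nx=True, ex=ttl_seconds):
        enqueue(task_name)
        return True
    return False


store = FakeStore()
sent = []
print(enqueue_once(store, "send_emails", sent.append))  # True
print(enqueue_once(store, "send_emails", sent.append))  # False
print(sent)  # ['send_emails']
```

This does not fix the scheduling bug itself; it only limits the damage if beat misfires, and the TTL has to be shorter than the real schedule interval.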

|

Update: it looks like we fixed our issue by specifying |

|

Turns out I spoke too soon. Things were looking better, but we have again noticed more duplicates. It seems to run well for a while before adding in duplicates. @zpritcha have you noticed anything else? |

|

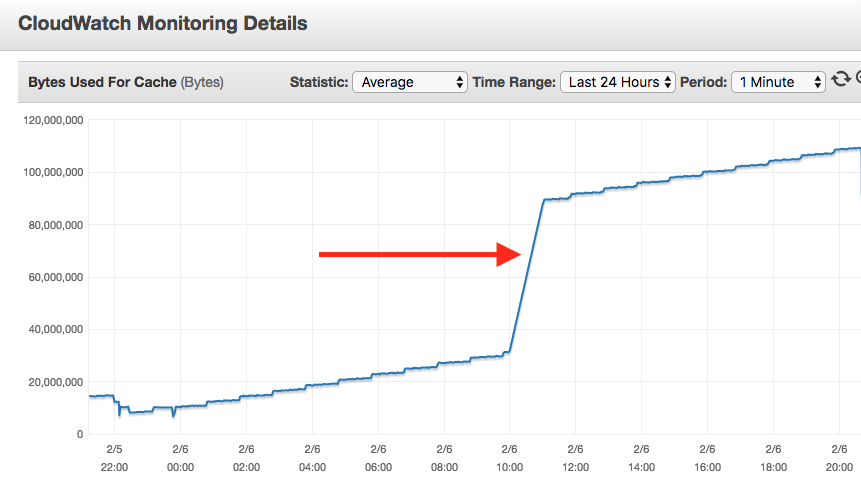

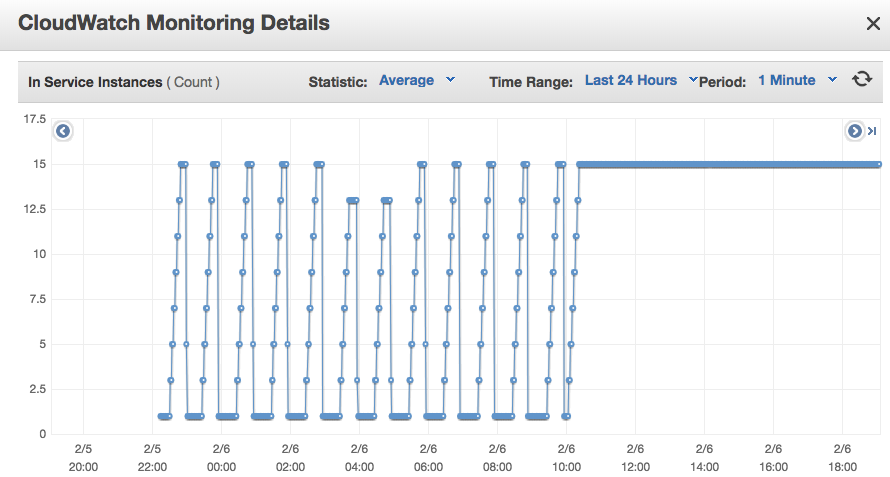

@thedrow : This graph showcases our ElastiCache usage. (with 4.1.0 -- just updated the scheduler with the latest master so will see how that goes 🤞 ) Around the 12 hour mark is where things get a little crazy. We also noticed an increase over time in Redis usage so there might be some leakage. I'm more than happy to help debug, so please let me know if you want more fancy graphs. EDIT: It looks like this corresponds with |

|

@thedrow To avoid waiting -- I set the clock to run in the next 15 minutes or so. Going to monitor it now and see if we see a huge spike (with latest master). |

|

I'm seeing the same issue - the tasks that are supposed to be scheduled for 10AM UTC via crontab are re-scheduled 56 times, leading to an abundance of tasks in the queue. The AWS autoscaling group I have configured to handle the task queue handled the non-crontab tasks 12-13 times (running once per hour or so) until the 10AM scheduled crontab executed which caused those duplicate tasks to be added to the queue. The screenshot below is from the auto scaling group showing it growing in size then shrinking when the queue size drops -- the constant spike is when the queue shot up to over 100k tasks. |

|

OK so it seems that there are two separate issues here. One with master that we have to resolve before the release and another one which we can resolve later. |

|

@thedrow Pardon my ignorance of Celery. What impact will that have on the application making use of Celery? Will this raise exceptions or otherwise disrupt the beat scheduler and workers? |

|

@mikegrima If we uncovered a bug in our scheduler I'd rather error than produce the behavior the OP is describing. The beat scheduler will be disrupted but the workers will continue to work. |

|

@mikegrima Then that's a different problem. Please search our issues and if you don't find one that matches your problem, open a new issue. |

|

is this a release blocker for 4.2? |

|

@auvipy I consider it so. It makes the scheduler unusable given that it randomly goes off the hook and launches thousands of jobs when it shouldn't. We've turned it off in our production environment and are working around it, but we'd rather use it. |

|

Does anyone have a minimal repro of the problem? Did this break recently, or has it been an issue for a while (i.e. since 4.0)? |

|

I am having the same issue: a scheduled task is duplicated thousands of times. Any suggestions for a quick workaround, such as downgrading some libraries? |

|

Just some random trial and error: I am using celery 4.1, django 1.11.7, django-celery-beat 1.1.0, and postgresql as the db. After changing to USE_TZ=True, the scheduler seems to work correctly. Hope this adds some value to the discussion. |

|

As far as I'm concerned this is a regression and I can't release without a fix or without reverting the offending PR :( |

|

I believe this might be a duplicate of #4184, and is fixed in master? It looks similar. |

|

@dwrpayne I believe gabriellima mentioned on Jan 23 they tried master and it still has the issue. I'll try master again in a test env and see what we find. |

|

Anyone arriving at this issue should just know that changing the timezone setting back to enable_utc = True seems to solve the issue (stop the bleeding). |

|

@dwrpayne thanks for the info, will be testing master...I thought we tried the enable_utc = True to no avail but will followup |

|

Master no longer contains the regression described by @gabriellima in #4041 (comment) |

|

will reopen if it's not fixed by 4.2rc2+ |

|

Thanks for your advice @liutuo; after changing to USE_TZ=True the scheduler is working again.

Now my project works again, but the following error appears in the log after executing any PeriodicTask:

This doesn't affect execution, but if anyone knows why it happens, I would appreciate your help! |

|

@aarondiazr This looks out of the scope of Celery and is related to your deployment. Also, we can't tell what resolved your issue. Does upgrading to Celery 4.2 alone resolve this issue? |

|

I have the same issue: a periodic task with an interval of one minute is constantly being started by beat.

In Django's settings.py I have only this timezone configuration:

USE_TZ is False by default. The same configuration works fine on Windows.

|

|

After debugging, my problem turns out to be due to the different approaches Django and Celery take when dealing with naive times. Apparently this needs to be fixed in django-celery-beat; I have created an issue with some details: celery/django-celery-beat#211 |

Celery 4.0.0

Python 3.5.2

(rest of report output below)

I recently pushed celery beat to production (I had been using celery without beat with no issues for several months). It had 1 task to run several times daily, but it duplicated the task many times per second, gradually overwhelming the capacity of my small elasticache instance and resulting in OOM error.

I was searching for similar errors online and found the following:

#943 (comment)

While not exactly the same, changing timezone back to default settings seemed to solve the issue for now. To be clear, previously I had:

enable_utc = False

timezone = 'America/New_York'

I changed these to:

enable_utc = True

and it seemed to solve the problem (for now).
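One side effect of this workaround is worth keeping in mind: with enable_utc = True and no timezone set, Celery's timezone defaults to UTC, so the crontab hours should be read as UTC rather than New York time. A rough sketch of shifting the hours by hand follows. The function name is made up for illustration, a fixed UTC-4 offset (EDT, matching the May dates in the log) is assumed, and DST changes are ignored.

```python
def shift_crontab_hours(local_hours, utc_offset_hours):
    """Map wall-clock hours in a fixed-offset zone to the equivalent UTC hours."""
    return [(hour - utc_offset_hours) % 24 for hour in local_hours]


# Hours from the beat_schedule in the report, taken as New York wall-clock time.
local_hours = [9, 12, 15, 18, 21]
utc_hours = shift_crontab_hours(local_hours, utc_offset_hours=-4)

print(",".join(str(hour) for hour in utc_hours))  # 13,16,19,22,1
```

Note that the last entry wraps past midnight, so any day-of-week restriction would need to shift with it.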

** celery -A [proj] report:

software -> celery:4.0.0 (latentcall) kombu:4.0.0 py:3.5.2

billiard:3.5.0.2 redis:2.10.5

platform -> system:Linux arch:64bit, ELF imp:CPython

loader -> celery.loaders.app.AppLoader

settings -> transport:redis results:redis://[elasticache]

result_persistent: False

enable_utc: False

result_serializer: 'json'

include: [tasks -- redacted]

result_backend: 'redis://[elasticache]

task_create_missing_queues: True

task_acks_late: False

timezone: 'America/New_York'

broker_url: '[elasticache]'

task_serializer: 'json'

crontab: <class 'celery.schedules.crontab'>

broker_transport_options: {

'visibility_timeout': 600}

task_time_limit: 200

task_always_eager: False

task_queues:

(<unbound Queue default -> <unbound Exchange default(direct)> -> default>,

<unbound Queue render -> <unbound Exchange media(direct)> -> media.render>,

<unbound Queue emails -> <unbound Exchange media(direct)> -> media.emails>,

<unbound Queue other -> <unbound Exchange media(direct)> -> media.other>)

beat_schedule: {

'lookup-emails-to-send': { 'args': [],

'schedule': <crontab: 0 9,12,15,18,21 * * * (m/h/d/dM/MY)>,

'task': 'send_emails'}}

accept_content: ['json']

task_default_exchange_type: 'direct'

task_routes:

('worker.routes.AppRouter',)

worker_prefetch_multiplier: 1

BROKER_URL: [elasticache]

** Worker error message after OOM:

Traceback (most recent call last):

File "python3.5/site-packages/celery/beat.py", line 299, in apply_async

**entry.options)

File "python3.5/site-packages/celery/app/task.py", line 536, in apply_async

**options

File "python3.5/site-packages/celery/app/base.py", line 717, in send_task

amqp.send_task_message(P, name, message, **options)

File "python3.5/site-packages/celery/app/amqp.py", line 554, in send_task_message

**properties

File "python3.5/site-packages/kombu/messaging.py", line 178, in publish

exchange_name, declare,

File "python3.5/site-packages/kombu/connection.py", line 527, in _ensured

errback and errback(exc, 0)

File "python3.5/contextlib.py", line 77, in __exit__

self.gen.throw(type, value, traceback)

File "python3.5/site-packages/kombu/connection.py", line 419, in _reraise_as_library_errors

sys.exc_info()[2])

File "python3.5/site-packages/vine/five.py", line 175, in reraise

raise value.with_traceback(tb)

File "python3.5/site-packages/kombu/connection.py", line 414, in _reraise_as_library_errors

yield

File "python3.5/site-packages/kombu/connection.py", line 494, in _ensured

return fun(*args, **kwargs)

File "python3.5/site-packages/kombu/messaging.py", line 200, in _publish

mandatory=mandatory, immediate=immediate,

File "python3.5/site-packages/kombu/transport/virtual/base.py", line 608, in basic_publish

return self._put(routing_key, message, **kwargs)

File "python3.5/site-packages/kombu/transport/redis.py", line 766, in _put

client.lpush(self._q_for_pri(queue, pri), dumps(message))

File "python3.5/site-packages/redis/client.py", line 1227, in lpush

return self.execute_command('LPUSH', name, *values)

File "python3.5/site-packages/redis/client.py", line 573, in execute_command

return self.parse_response(connection, command_name, **options)

File "python3.5/site-packages/redis/client.py", line 585, in parse_response

response = connection.read_response()

File "python3.5/site-packages/redis/connection.py", line 582, in read_response

raise response

kombu.exceptions.OperationalError: OOM command not allowed when used memory > 'maxmemory'.

** Example log showing rapidly duplicated beat tasks:

[2017-05-20 09:00:00,096: INFO/PoolWorker-1] Task send_emails[1d24250f-c254-4fd8-8ffc-cfb17b98a392] succeeded in 0.01704882364720106s: True

[2017-05-20 09:00:00,098: INFO/MainProcess] Received task: send_emails[e968cc65-0924-4d31-b2d7-9a3e3f5aefda]

[2017-05-20 09:00:00,115: INFO/PoolWorker-1] Task send_emails[e968cc65-0823-4d31-b2d7-9a3e3f5aefda] succeeded in 0.015948095358908176s: True

[2017-05-20 09:00:00,117: INFO/MainProcess] Received task: send_emails[2fb9f7ac-5f6a-42e1-813e-25ea4023dc81]

[2017-05-20 09:00:00,136: INFO/PoolWorker-1] Task send_emails[2fb9f7ac-5f6a-42e1-813e-25ea4023dc81] succeeded in 0.018534105271100998s: True

[2017-05-20 09:00:00,140: INFO/MainProcess] Received task: send_emails[59493c0b-1858-497f-a0dc-a4c8e4ba3a63]

[2017-05-20 09:00:00,161: INFO/PoolWorker-1] Task send_emails[59493c0b-1858-497f-a0dc-a4c8e4ba3a63] succeeded in 0.020539570599794388s: True

[2017-05-20 09:00:00,164: INFO/MainProcess] Received task: send_emails[3aac612f-75dd-4530-9b55-1e288bec8db4]

[2017-05-20 09:00:00,205: INFO/PoolWorker-1] Task send_emails[3aac612f-75dd-4530-9b55-1e288bec8db4] succeeded in 0.04063933063298464s: True

[2017-05-20 09:00:00,208: INFO/MainProcess] Received task: send_emails[7edf60cb-d1c4-4ae5-af10-03414da672fa]
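To spot this kind of burst without reading the log by eye, a short script can count "Received task" lines per second and per task name. This is a sketch that assumes the worker log format shown above; the regular expression and the function names are illustrative, not part of Celery.

```python
import re
from collections import Counter

# Matches worker lines such as:
# [2017-05-20 09:00:00,098: INFO/MainProcess] Received task: send_emails[<uuid>]
RECEIVED = re.compile(
    r"\[(?P<second>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}),\d+: INFO/MainProcess\] "
    r"Received task: (?P<task>[^\[]+)\[(?P<id>[0-9a-f-]+)\]"
)


def received_per_second(lines):
    """Count received tasks, keyed by (timestamp truncated to the second, task name)."""
    counts = Counter()
    for line in lines:
        match = RECEIVED.search(line)
        if match:
            counts[(match.group("second"), match.group("task"))] += 1
    return counts


def bursts(lines, threshold=2):
    """Return only the (second, task) pairs seen at least `threshold` times."""
    return {key: n for key, n in received_per_second(lines).items() if n >= threshold}


sample = [
    "[2017-05-20 09:00:00,098: INFO/MainProcess] Received task: send_emails[e968cc65-0924-4d31-b2d7-9a3e3f5aefda]",
    "[2017-05-20 09:00:00,117: INFO/MainProcess] Received task: send_emails[2fb9f7ac-5f6a-42e1-813e-25ea4023dc81]",
    "[2017-05-20 09:00:00,136: INFO/PoolWorker-1] Task send_emails[2fb9f7ac-5f6a-42e1-813e-25ea4023dc81] succeeded in 0.018534105271100998s: True",
    "[2017-05-20 09:00:00,140: INFO/MainProcess] Received task: send_emails[59493c0b-1858-497f-a0dc-a4c8e4ba3a63]",
]

print(bursts(sample))  # {('2017-05-20 09:00:00', 'send_emails'): 3}
```

For a task scheduled a few times a day, any count above one in the same second is a sign that beat is re-sending it.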
