ci: Future of CI after Cirrus pricing change #1392

Comments

There is one aarch64 runner. (It is required because GitHub doesn't offer aarch64 Linux boxes, and Google Cloud doesn't offer an aarch64 CPU that can run armhf 32-bit binaries.)

Ok, then it probably makes sense to do what I suggested in #1153, namely move ARM tasks to Linux, and reduce the number of our macOS tasks.

Sounds interesting. I wonder how (and if) Docker images can be cached, along with ccache, etc.

Yeah, we'll need to see. And I agree that "in the short run it seems easier to stick to Cirrus for now, because the diff is a lot smaller (just replace `container:` in the yml with `persistent_worker:`, etc)" (bitcoin/bitcoin#28098 (comment)). We should probably do this first, and then see if we're interested in moving to GitHub Actions fully. Edit: I updated the roadmap above.

For such a case, it is good to see some progress in #1274 :)

See #1396.

There are open PRs for all of the mentioned items. It would be more productive if we prioritised them somehow, so that we spend our time until Sept. 1st more effectively.

I'd say the Windows/macOS ones are probably easier, since they don't require write permission and don't have to deal with Docker image caching.

Yes, we should in principle proceed in the order of the list above. But it doesn't need to be very strict. For example, if it turns out that #1396 is ready by Sep 1st, we can skip "Move Linux tasks to the Bitcoin Core persistent workers".

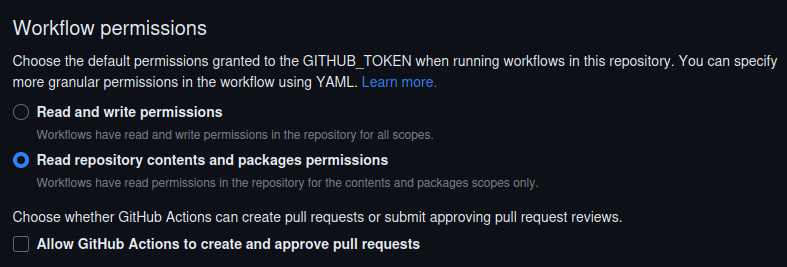

a2f7ccd ci: Run "Windows (VS 2022)" job on GitHub Actions (Hennadii Stepanov)

Pull request description:

This PR solves one item in #1392.

In response to upcoming [limiting free usage of Cirrus CI](https://cirrus-ci.org/blog/2023/07/17/limiting-free-usage-of-cirrus-ci/), suggesting to move (partially?) CI tasks/jobs from Cirrus CI to [GitHub Actions](https://docs.github.com/actions) (GHA).

Here is example from my personal repo: https://github.com/hebasto/secp256k1/actions/runs/5806269046.

For security concerns, see:
- bitcoin/bitcoin#28098 (comment)
- bitcoin/bitcoin#28098 (comment)

I'm suggesting the repository "Actions permissions" as follows:

---

See build logs in my personal repo: https://github.com/hebasto/secp256k1/actions/runs/5692587475.

ACKs for top commit:
real-or-random: utACK a2f7ccd

Tree-SHA512: b6329a29391146e3cdee9a56f6151b6672aa45837dfaacb708ba4209719801ed029a6928d638d314b71c7533d927d771b3eca4b9e740cfcf580a40ba07970ae4

It seems reasonable to split this task into two, depending on the underlying architecture:

@hebasto Hm, we currently don't have native Linux arm64 jobs, so we can't "move" them over. We could add some (see #1163 and #1394 (comment)). I tend to think that it is also acceptable to wait for github/roadmap#528, which is currently planned for the end of the year. Then we could move macOS back to ARM. Until that happens, perhaps we can at least add QEMU jobs that run the ctimetests on MSan (clang-only).

Note to self: We need

(The real tests fail with MSan enabled on QEMU. I think this is because the stack will explode.)

I updated the list above with optional items.

qemu-arm is a bit slower than native aarch64. You can use the already existing persistent worker, if you want: (Currently not set up for this repo, but it should be sometime this week.)

Sure, that's an easy option. I just think we're currently playing around with the idea to move everything to GHA, if it's feasible for this repo. |

…ons job

e10878f ci, gha: Drop `driver-opts.network` input for `setup-buildx-action` (Hennadii Stepanov)
4ad4914 ci, gha: Add `retry_builder` Docker image builder (Hennadii Stepanov)
6617a62 ci: Remove "x86_64: Linux (Debian stable)" task from Cirrus CI (Hennadii Stepanov)
03c9e65 ci, gha: Add "x86_64: Linux (Debian stable)" GitHub Actions job (Hennadii Stepanov)
ad3e65d ci: Remove GCC build files and sage to reduce size of Docker image (Tim Ruffing)

Pull request description:

Solves one item in #1392 partially.

ACKs for top commit:
real-or-random: ACK e10878f

Tree-SHA512: 1e685b1a6a41b4be97b9b5bb0fe546c3f1f7daac9374146ca05ab29803d5945a038294ce3ab77489bd971ffce9789ece722e0e0f268b6a7e6483a3aa782d532d

…tHub Actions

fc3dea2 ci: Move "ppc64le: Linux..." from Cirrus to GitHub Actions (Hennadii Stepanov)
7782dc8 ci: Move "ARM64: Linux..." from Cirrus to GitHub Actions (Hennadii Stepanov)
0a16de6 ci: Move "ARM32: Linux..." from Cirrus to GitHub Actions (Hennadii Stepanov)
ea33914 ci: Move "s390x (big-endian): Linux..." from Cirrus to GitHub Actions (Hennadii Stepanov)
880be8a ci: Move "i686: Linux (Debian stable)" from Cirrus to GiHub Actions (Hennadii Stepanov)

Pull request description:

Move more non-x86_64 tasks from Cirrus CI to GitHub Actions.

Solves one item in #1392 partially.

ACKs for top commit:
real-or-random: ACK fc3dea2 but still waiting for Cirrus

Tree-SHA512: 9a910b3ee500aa34fc4db827f8b2a50bcfb637a9e59f4ad32545634772b397ce80b31a18723f4605dc42aa19a5632292943102099f7720f87de1da454da068b0

While it worked on macOS Catalina back in the day, it seems a couple of suppressions for

Branch (POC): https://github.com/hebasto/secp256k1/tree/230824-valgrind

Oh, thanks for checking. Have you tried the supplied suppression file (https://github.com/LouisBrunner/valgrind-macos/blob/main/darwin19.supp)? If it doesn't solve the problem, we could try to upstream the additional suppressions, see also LouisBrunner/valgrind-macos#15.

Yes, I have. It does not change the outcome.

UPD: I used https://github.com/LouisBrunner/valgrind-macos/blob/main/darwin22.supp as we run Ventura.

Do you think maintaining the suppressions is a problem? I don't think it's a big deal.

Okay, sure, I got confused and looked at the wrong file.

You mean, in this repository?

Yes... I don't think it will be a lot of work, but I guess we should still submit it upstream first. If they merge it quickly, then it's easiest for us. I can take care of it if you don't have the bandwidth.

FWIW, it works with no additional suppressions on

It would be nice because I have no x86_64 macOS Ventura available.

Oh ok, should we then just use this for now?

I don't have any macOS machine available. ;)

See LouisBrunner/valgrind-macos#96 as a first step.

c223d7e ci: Switch macOS from Ventura to Monterey and add Valgrind (Hennadii Stepanov)

Pull request description:

This PR switches the macOS native job from Ventura to Monterey, which allows to support Valgrind.

Both runners--`macos-12` and `macos-13`--have the same clang compilers installed:
- https://github.com/actions/runner-images/blob/main/images/macos/macos-12-Readme.md
- https://github.com/actions/runner-images/blob/main/images/macos/macos-13-Readme.md

But Valgrind works fine on macOS Monterey, but not on Ventura. See: #1392 (comment).

The Homebrew's Valgrind package is cached once it has been built (as it was before #1152). Therefore, the `actions/cache@*` action is needed to be added to the list of the allowed actions.

#1412 (comment):
> By the way, this solves #1151.

ACKs for top commit:
real-or-random: ACK c223d7e I tested that a cttest failure makes CI fail: https://github.com/real-or-random/secp256k1/actions/runs/6010365844

Tree-SHA512: 5e72d89fd4d82acbda8adeda7106db0dad85162cca03abe8eae9a40393997ba36a84ad7b12c4b32aec5e9230f275738ef12169994cd530952e2b0b963449b231

2635068 ci/gha: Let MSan continue checking after errors in all jobs (Tim Ruffing)
e78c7b6 ci/Dockerfile: Reduce size of Docker image further (Tim Ruffing)
2f0d3bb ci/Dockerfile: Warn if `ulimit -n` is too high when running Docker (Tim Ruffing)
4b8a647 ci/gha: Add ARM64 QEMU jobs for clang and clang-snapshot (Tim Ruffing)
6ebe7d2 ci/Dockerfile: Always use versioned clang packages (Tim Ruffing)

Pull request description:

Solves one item in #1392.

This PR also has a few tweaks to the Dockerfile, see individual commits.

---

I'll follow up soon with a PR for ARM64/gcc. This will rely on Cirrus CI.

ACKs for top commit:
hebasto: ACK 2635068.

Tree-SHA512: d290bdd8e8e2a2a2b6ccb1b25ecdc9662c51dab745068a98044b9abed75232d13cb9d2ddc2c63c908dcff6a12317f0c7a35db3288c57bc3b814793f7fce059fd
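The `ulimit -n` commit above only says that a warning is printed when the open-file limit is too high. As a rough illustration of that kind of check (not the code from the PR), here is a minimal Python sketch. It assumes a hypothetical threshold of 65536; the value actually used by the secp256k1 CI is not stated in this thread.

```python
import resource
import sys

# Hypothetical threshold; the real value used by the secp256k1 CI is not stated here.
MAX_NOFILE = 65536


def nofile_too_high(soft_limit: int, threshold: int = MAX_NOFILE) -> bool:
    """Return True if an open-file soft limit exceeds the threshold."""
    # RLIM_INFINITY ("unlimited") is above any finite threshold.
    if soft_limit == resource.RLIM_INFINITY:
        return True
    return soft_limit > threshold


def warn_if_nofile_too_high() -> bool:
    """Print a warning to stderr if `ulimit -n` is too high; return whether it was."""
    soft, _hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    too_high = nofile_too_high(soft)
    if too_high:
        print(f"warning: ulimit -n is {soft}, which exceeds {MAX_NOFILE}", file=sys.stderr)
    return too_high


if __name__ == "__main__":
    warn_if_nofile_too_high()
```

The check is kept separate from the `getrlimit` call so that the comparison logic can be tested without depending on the limits of the machine it runs on.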

Hm, it appears that Cirrus' "Dockerfile as a CI environment" feature won't work with persistent workers (see #1418). Now that I think about it, that's somewhat expected (e.g., where should the built images be pushed?). Alternatives:

I think we should do one of the last two?

A persistent worker will persist the Docker image itself after the first run on the hardware. I think all you need to do is call

Alternatively, it may be possible to find a sponsor to cover the cost (if it is not too high) on Cirrus directly, while native arm64 isn't available on GHA. I can look at the LLVM issue next week, if time permits.

Thanks for chiming in. Wouldn't we also need to make sure that images get pruned from time to time? Or does podman handle this automatically?

I assume the first step performs the caching automatically, rebuilding layers only as necessary? Sorry, I'm not familiar with podman; I have only used Docker so far.
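The "rebuild layers only as necessary" behaviour asked about here can be illustrated with a simplified model of a Docker-style layer cache (podman's builder is assumed to work the same way in principle): each layer's cache key is derived from its parent's key and its own instruction, so changing one instruction invalidates that layer and every layer after it. This is only a sketch under that assumption. Real builders also hash the contents of files used by `COPY`/`ADD`, and they key `RUN` steps on the command string, not on what the command downloads. The Dockerfile instructions below are hypothetical.

```python
import hashlib


def layer_keys(instructions: list[str]) -> list[str]:
    """Chain each instruction onto its parent's key, like a simplified layer cache."""
    keys = []
    parent = ""
    for instruction in instructions:
        parent = hashlib.sha256(f"{parent}\n{instruction}".encode()).hexdigest()
        keys.append(parent)
    return keys


def layers_to_rebuild(cached: list[str], new: list[str]) -> list[int]:
    """Indices of layers in `new` whose keys are not in the cache built from `cached`."""
    cached_keys = set(layer_keys(cached))
    return [i for i, key in enumerate(layer_keys(new)) if key not in cached_keys]


# Hypothetical Dockerfile, reduced to its instructions.
old = [
    "FROM debian:stable-slim",
    "RUN apt-get update && apt-get install -y clang",
    "RUN apt-get install -y valgrind",
]
new = list(old)
new[1] = "RUN apt-get update && apt-get install -y clang gcc"
print(layers_to_rebuild(old, new))  # [1, 2]
```

Because the keys are chained, only the changed layer and everything after it are rebuilt, which is also why frequently changing steps are usually placed near the end of a Dockerfile.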

Right, yeah, I'm just not sure if I want to spend time on this.

Ok sure, but I recommend not spending too much time on it. It also won't help with GCC (I added a note above).

Yeah, you can also run See:

Yes, it is the same. You should be able to use

If you mean reaching out to a sponsor, I am happy to do so if there is a cost estimate.

Okay, then I think this approach is probably simpler than I expected. I'm not sure if I have the time this week, but I'll look into that soon. (Or @hebasto, if you want to give it a try, feel free to go ahead, of course. My plan was to simply "abuse" the existing Dockerfile to avoid maintaining a second one, at the cost of a somewhat larger image. The existing file should build fine, except that Debian won't let you install an arm64 cross-compiler on arm64. So we'd need to add some check to skip these packages when we're on arm64, see https://github.com/bitcoin-core/secp256k1/pull/1163/files#diff-751ef1d9fd31c5787e12221f590262dcf7d96cfb166d456e06bd0ccab115b60d .)
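The check described above (skip the arm64 cross-compiler packages when the image itself is built on arm64) boils down to filtering the package list by host architecture. A minimal Python sketch follows. It assumes a hypothetical, abbreviated mapping of Debian cross-toolchain packages, not the actual list in the secp256k1 Dockerfile. In a Dockerfile, the host architecture would typically come from `dpkg --print-architecture`, with the same filtering done in shell.

```python
# Hypothetical, abbreviated mapping from Debian architecture names to the
# cross-toolchain packages targeting them (illustrative, not the real Dockerfile).
CROSS_PACKAGES = {
    "arm64": ["gcc-aarch64-linux-gnu", "libc6-dev-arm64-cross"],
    "armhf": ["gcc-arm-linux-gnueabihf", "libc6-dev-armhf-cross"],
    "s390x": ["gcc-s390x-linux-gnu", "libc6-dev-s390x-cross"],
}


def cross_packages_for_host(host_arch: str) -> list[str]:
    """Return the cross packages to install, skipping those that target the host."""
    packages = []
    for target_arch, names in CROSS_PACKAGES.items():
        if target_arch == host_arch:
            # The thread only mentions arm64; this sketch assumes the same rule
            # (no cross-compiler for the native architecture) for every host.
            continue
        packages.extend(names)
    return packages


print(cross_packages_for_host("arm64"))
```

On an amd64 host, nothing is skipped, so the same Dockerfile logic keeps working unchanged for the existing x86_64 jobs.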

Okay, thanks, but let's first try docker/podman then.

Anything left to be done here?

The migration is done, but there are still a few unticked checkboxes. (And I've just added two.) None of them are crucial, but I plan to work on them soon, so I'd like to keep this open for now. We could also close this issue here and add a new tracking issue, or open separate issues for the remaining items, if people think that makes tracking easier.

"With today’s launch, our macOS larger runners will be priced at $0.16/minute for XL and $0.12/minute for large."

This is a price decrease for private repos, and GHA remains free for public repos. |

Are large runners available for public repos?

Ha, okay, you're right. No, "larger runners" are always billed per minute, i.e., they're not free for public repos. And it seems that they're not planning to provide M1 "standard runners". At least github/roadmap#528 (comment) has been closed now. That means we should stick to the Cirrus runners for ARM.
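Since larger runners are billed per minute even for public repos, the prices quoted above translate directly into a monthly cost. A small worked example, assuming a purely hypothetical 10,000 macOS minutes per month (this thread gives no actual usage figures for the secp256k1 CI):

```python
# Per-minute prices for macOS larger runners as quoted above, in US cents,
# so that the arithmetic stays exact.
PRICE_CENTS_PER_MINUTE = {"xl": 16, "large": 12}


def monthly_cost_usd(runner: str, minutes_per_month: int) -> float:
    """Cost in USD for the given runner size and number of billed minutes."""
    return PRICE_CENTS_PER_MINUTE[runner] * minutes_per_month / 100


# Hypothetical usage figure, not taken from the secp256k1 CI.
minutes = 10_000
print(monthly_cost_usd("xl", minutes))     # 1600.0
print(monthly_cost_usd("large", minutes))  # 1200.0
```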

That has changed now: https://github.blog/changelog/2024-01-30-github-actions-introducing-the-new-m1-macos-runner-available-to-open-source/

Roadmap (keeping this up to date):

I think the natural way forward for us is:

Possible follow-ups:

Consider using artifacts to move the Docker image from the Docker build job to the actual CI job (for Linux tasks). (Not worth the hassle; the current approach seems to work well.)

This should be a cleaner solution, but it adds some complexity. It's also worth checking if this avoids network issues. In terms of delay, this adds about 12 min of uploading time to the Docker build job, but avoids about 1 min of delay in the actual CI jobs compared to the current solution, which relies purely on the GHA cache (ci, gha: Add Windows jobs based on Linux image #1398). So this would speed up CI only if we could avoid re-uploading existing artifacts, e.g., by having another digest file that just stores the SHA256 and re-uploading only if the SHA256 does not match. But all of this is probably not worth the complexity if the current approach with the cache turns out to be good enough.
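The digest-file idea above (store the SHA256 next to the artifact and re-upload only when it changes) can be sketched in a few lines of Python. This is a minimal sketch, assuming the image is available as a tarball and that the digest file is carried along with the artifact; both file names are hypothetical, and the actual upload step is left out. One caveat: a `docker save` tarball may not be byte-for-byte reproducible across rebuilds, so hashing the image ID (e.g. from `docker image inspect --format '{{.Id}}' IMAGE`) may be more robust in practice.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hex SHA256 of a file, read in chunks so it is never loaded into memory at once."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def needs_upload(image_tar: Path, digest_file: Path) -> bool:
    """True unless the stored digest matches the current image tarball."""
    if not digest_file.exists():
        return True
    return digest_file.read_text().strip() != sha256_of(image_tar)


def record_digest(image_tar: Path, digest_file: Path) -> None:
    """Store the digest of the image tarball, e.g. after a successful upload."""
    digest_file.write_text(sha256_of(image_tar) + "\n")
```

A build job would call `needs_upload` before uploading and `record_digest` afterwards, so an unchanged image costs only one hash computation instead of the full upload time.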

`git` `safe.directory` stuff to `run-in-docker-action` (ci: Make repetitive command the default one #1411)

Other related PRs:

`github.ref` at a time #1403

Corresponding Bitcoin Core issue: bitcoin/bitcoin#28098

Cirrus CI will cap the community cluster; see cirrus-ci.org/blog/2023/07/17/limiting-free-usage-of-cirrus-ci. As with Core, the pricing model makes it totally unreasonable to pay for compute credits (several thousand USD per month).

The plan in Bitcoin Core is to move native Windows and macOS tasks to GitHub Actions, and to move Linux tasks to persistent workers (i.e., self-hosted). If I read the Bitcoin Core IRC meeting notes correctly, @MarcoFalke said these workers will also be available for libsecp256k1.

But the devil is in the details:

For macOS, we also need to take #1153 into account. It seems that GitHub-hosted macOS runners are on x86_64. The good news is that Valgrind should work again then, but the (very) bad news is that this will reduce our number of native ARM tasks to zero. We still have some QEMU tasks, but we can't even run the Valgrind ctimetests on them (maybe this would now work with MSan?!) @MarcoFalke Are the self-hosted runners only x86_64?

For Linux tasks, the meeting notes say that the main reason for using persistent workers is that some tasks require a very specific environment (e.g., the USDT ASan job). I don't think we have such requirements, so I tend to think that moving everything to GitHub Actions is a bit cleaner for us. With a persistent worker, Cirrus CI acts only as a "coordination layer" between the worker and GitHub anyway. Yet another way is to use the self-hosted runners with GitHub Actions; see my comment bitcoin/bitcoin#28098 (comment).
