Patching on AutoMLSearch tests failing #1682

Comments

@bchen1116 If you add a @patch('evalml.pipelines.BinaryClassificationPipeline.score', return_value={"Log Loss Binary": 0.2})

@freddyaboulton is this something we need to add to all of the


I guess I hesitate to call this a bug because it doesn't change the behavior of automl in such a way that it causes the test to pass when it should fail or vice versa. The underlying issue is that if you don't provide a return_value, a Which uses the Maybe if we change the way the logging is called, we can avoid the ugly log but I worry that would complicate the code for little benefit. What do you think?
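For anyone landing here later, a minimal sketch of the `return_value` point, using only `unittest.mock`. The `Pipeline` class below is a hypothetical stand-in, not evalml's `BinaryClassificationPipeline`, and the sketch does not reproduce the exact log message from the tests. It only shows that a patched method with no `return_value` hands back a `MagicMock` rather than a dict of scores.

```python
from unittest.mock import MagicMock, patch


class Pipeline:
    """Hypothetical stand-in for a pipeline class (not the evalml API)."""

    def score(self, X, y, objectives):
        return {"Log Loss Binary": 0.5}


# Patched without return_value: calling the mock returns another MagicMock.
with patch.object(Pipeline, "score"):
    result_without = Pipeline().score(None, None, ["Log Loss Binary"])

# Patched with return_value: calling the mock returns the dict we supplied.
with patch.object(Pipeline, "score", return_value={"Log Loss Binary": 0.2}):
    result_with = Pipeline().score(None, None, ["Log Loss Binary"])

print(type(result_without).__name__)
print(result_with)
```

Any code that later treats the first result as a real score (for example when formatting it for a log line) is working with a mock object, which is presumably where the odd output comes from.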


Ah I see, that makes sense. I was unsure where that message was coming from. I think the behavior is fine then, since it doesn't seem to be detrimental to the behavior of AutoML. Thanks for the help, I'll close this out!

Patching pipelines

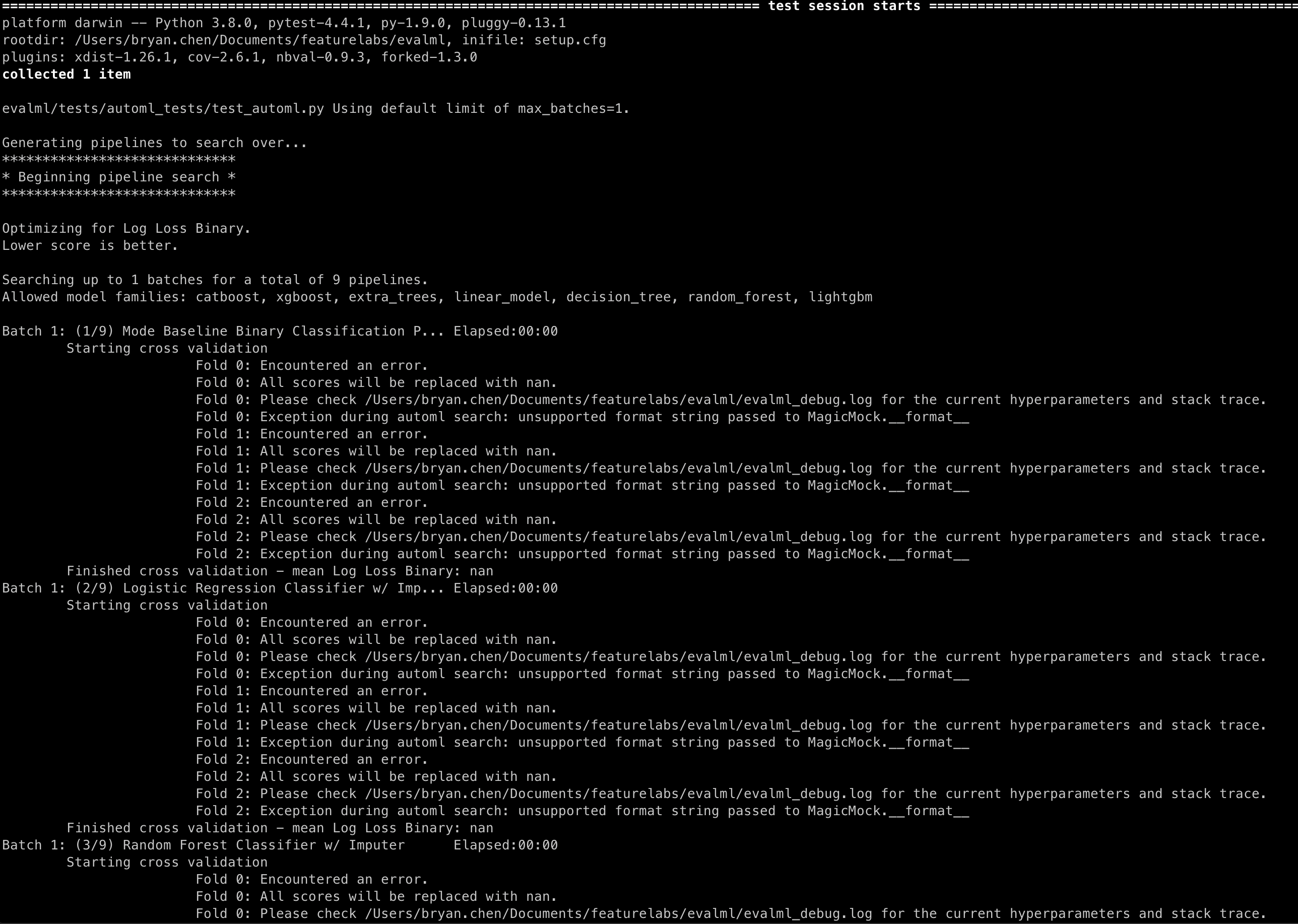

.score()and.fit()methods are causing the following messages in AutoMLSearch tests:Repro

checkout main
