Data leak using Ray Core and Ray Workflows #3

Comments


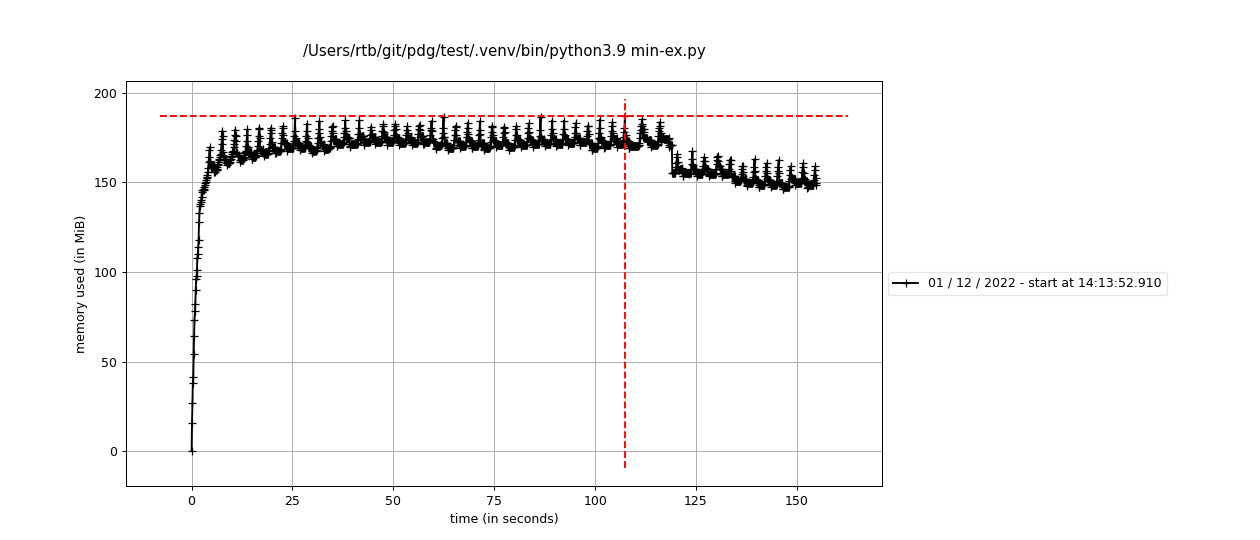

In the Raster class, to calculate pixel values based on the area of a pixel that a polygon covers, we use the geopandas overlay method to "slice" each polygon with the grid lines (see lines 678-681). I think that the overlay method could be producing a memory leak somehow. I made a minimal example where we create some row polygons, then create some column polygons, then overlay the two to create a grid. When we repeat this process many times, the memory usage starts to climb even though we never assign the output (the grid GeoDataFrame) to a variable. Here is the plot of memory usage over 200 runs:

This was created using the following Python script:

```python
from memory_profiler import profile
import geopandas as gpd
from shapely.geometry import box

# Number of row & column polygons to make. Will result in n x n cells after
# the overlay operation. The larger the number, the slower & more memory
# intensive the operation.
n = 100

# Number of times to repeat the same overlay process.
runs = 200

# Create n vertical rectangles (columns in a grid)
column_polygons = gpd.GeoDataFrame(
    geometry=[box(i, 0, i + 1, n) for i in range(n)])

# Create n horizontal rectangles (rows in a grid)
row_polygons = gpd.GeoDataFrame(
    geometry=[box(0, i, n, i + 1) for i in range(n)])


@profile
def suspected_leak():
    for i in range(0, runs):
        print(f'Run {i+1} of {runs}')
        # Overlay the horizontal rectangles on the vertical rectangles,
        # resulting in n x n polygons (cells in a grid). The result is
        # deliberately discarded.
        column_polygons.overlay(row_polygons)


if __name__ == '__main__':
    suspected_leak()
```

To run the above code:

Here is a plot from a slightly different version where we create a 500x500 grid (very slow) 10 times. The blue brackets indicate where the overlay operations start and stop:

I also tried tracking memory usage with pympler, but I'm having a harder time interpreting the output. For example, the following script ...

```python
from pympler import tracker
import geopandas as gpd
from shapely.geometry import box

n = 200
runs = 5

column_polygons = gpd.GeoDataFrame(
    geometry=[box(i, 0, i + 1, n) for i in range(n)])

row_polygons = gpd.GeoDataFrame(
    geometry=[box(0, i, n, i + 1) for i in range(n)])


def suspected_leak():
    for i in range(0, runs):
        print(f'Run {i+1} of {runs}')
        column_polygons.overlay(row_polygons)


# Print a summary of objects created since the tracker was set up,
# once before and once after the overlay operations.
tr = tracker.SummaryTracker()
tr.print_diff()
print('\n👆 START of overlay operation\n\n')
suspected_leak()
tr.print_diff()
print('\n👆 END of overlay operation')
```

... gives the following output:

The only potentially related issue I could find from geopandas is geopandas/geopandas#955, though this is about spatial joins (
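Since the pympler summaries are hard to interpret, a simpler check on the Python side is to compare `tracemalloc` totals before and after the repeated calls. The following is only a minimal sketch, not what was run for this issue: `python_heap_growth`, `leaky`, and `clean` are hypothetical names, and `leaky` is a deliberately leaking stand-in for the overlay call. Note that `tracemalloc` only sees allocations made through Python's allocator, so a leak inside a C/C++ library such as GEOS would not necessarily show up here.

```python
import tracemalloc


def python_heap_growth(func, runs):
    """Return the net bytes of Python-level allocations retained after `runs` calls."""
    tracemalloc.start()
    func()  # warm-up call so one-time setup is not counted as growth
    before, _peak = tracemalloc.get_traced_memory()
    for _ in range(runs):
        func()
    after, _peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return after - before


# Hypothetical stand-ins for the operation under test.
_retained = []


def leaky():
    # Keeps a reference to every buffer, so memory grows with each call.
    _retained.append(bytearray(10_000))


def clean():
    # The buffer is discarded immediately, like the unassigned overlay result.
    bytearray(10_000)
```

If the growth for the real operation stays near zero while the process memory still climbs, that points toward native (non-Python) allocations rather than lingering Python objects.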


Update!

TLDR

Installing the newest

The long version

After a few dead ends (running

Turns out, that just calling

Minimal example

```python
from pympler import tracker
import geopandas as gpd
from shapely.geometry import box

tr = tracker.SummaryTracker()


def suspected_leak(n, runs):
    gdf = gpd.GeoDataFrame(
        geometry=[box(i, 0, i + 1, n) for i in range(n)])
    for i in range(0, runs):
        print(f'Run {i+1} of {runs}')
        # Buffer every geometry by 0 and discard the result.
        gdf.geometry.buffer(0)


def main():
    n = 200
    runs = 20000
    print('\n👇 START of buffer operation\n\n')
    tr.print_diff()
    suspected_leak(n, runs)
    tr.print_diff()
    print('\n👆 END of buffer operation')


main()
```

Looking further into the

With these new library updates, repeating the

Apparently, PyGEOS and Shapely both call the C++ library GEOS; however, PyGEOS was considered the newer, faster method until December 2021, when the two libraries merged. Shapely 2.0 will eventually be released as the result of this merge.

Next steps
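Because the behaviour here depended on which library versions were installed, one low-cost step is to record those versions alongside each profiling run. This is a small sketch using only the standard library; `installed_versions` is a hypothetical helper name, and the default package list is an assumption based on the libraries mentioned in this thread.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages=("geopandas", "shapely", "pygeos")):
    """Map each package name to its installed version, or None if not installed."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    for name, ver in installed_versions().items():
        print(f"{name}: {ver or 'not installed'}")
```

Logging this output next to each memory plot makes it easier to tell whether a change in the curve comes from a code change or from a library upgrade.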


The memory leak mystery continues! I tested rasterizing 250,000 staged files on Delta, on a single node, using the Ray workflow with the updated Shapely and PyGEOS libraries. With


I believe I found the source of the memory leak for real this time: every time a tile was rasterized, I was opening a rasterio in-memory file without closing it... The issue seems obvious in retrospect... 🙃 This is fixed in PermafrostDiscoveryGateway/viz-raster@c56a8bc, but I'm going to do a larger run on Delta before merging to be certain that this resolves the leak.
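To illustrate the pattern (not the actual change in the commit above), here is a sketch that uses the standard library's `io.BytesIO` as a stand-in for the rasterio in-memory file. The function names are hypothetical. The same idea should carry over to rasterio, where `rasterio.io.MemoryFile` can be used in a `with` block so that it is closed when the block exits; that this is the class involved in viz-raster is an assumption.

```python
import io


def write_tile_leaky(pixels):
    """Open an in-memory file for a tile and never close it (the bug pattern)."""
    memfile = io.BytesIO()
    memfile.write(pixels)
    return memfile  # still open; repeated per tile, these buffers pile up


def write_tile_closed(pixels):
    """Same work, but the in-memory file is closed as soon as the block exits."""
    with io.BytesIO() as memfile:
        memfile.write(pixels)
        data = memfile.getvalue()
    # memfile is returned only so the caller can inspect its `closed` flag.
    return data, memfile
```

With the context manager, each tile's buffer is released when the `with` block ends, instead of lingering for as long as something still references the open file object.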


We are having trouble accessing Delta resources, and unfortunately I could not get a run going on datateam that used anywhere near 100% memory usage. However, here is a little test that demonstrates why I believe that the issue we initially saw is fixed. Given the following script run with

```python
# test.py
from pdgraster import RasterTiler


def measure_memory(n):
    tiler = RasterTiler({})
    ex_path = 'staged/WorldCRS84Quad/13/1402/902.gpkg'
    # Rasterize the same staged tile n times; range(1, n + 1) so that the
    # final message reads "Job n of n".
    for i in range(1, n + 1):
        print(f'Job {i} of {n}')
        tiler.rasterize_vector(ex_path)


if __name__ == '__main__':
    measure_memory(50)
```

... Here is the memory profile plot using the main branch version of viz-raster:

... and here it is using the bug-mem-leak branch version of viz-raster with the new fix:

I am going to merge the fix into main and consider this issue resolved, but we can re-open if needed.
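For anyone repeating this kind of before/after comparison without a plotting tool, the process-level peak memory can also be sampled after each job using the standard library. This is only a sketch under a few assumptions: `peak_rss_per_job` is a hypothetical helper, the `resource` module is Unix-only, and `ru_maxrss` is a high-water mark (reported in kilobytes on Linux), so the series can only stay flat or rise.

```python
import resource


def peak_rss_per_job(job, n):
    """Run `job` n times and record the process's peak RSS after each run."""
    peaks = []
    for _ in range(n):
        job()
        # ru_maxrss is the peak resident set size of this process so far.
        peaks.append(resource.getrusage(resource.RUSAGE_SELF).ru_maxrss)
    return peaks
```

A series that keeps rising from job to job is consistent with a leak, while one that levels off after the first few jobs suggests memory is being reused. Unlike a Python-only tracker, this also reflects memory held by native libraries.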

Data leak

While executing the PDG workflow with Ray, @KastanDay is consistently experiencing a data leak. The memory usage on NCSA's Delta server increases as the process runs, resulting in a crash. @robyngit suggested the issue might be in the rasterization step (vector -> raster).

Debugging approaches by Kastan and Robyn:

- `remote()` function
- `rasterize_vectors()` without using ray
- `resolution_level=3` (also tried 4, 5, 6)