Deep Neural Networks and Partial Differential Equations: Approximation Theory and Structural Properties, Philipp Petersen (University of Oxford)

https://memento.epfl.ch/event/a-theoretical-analysis-of-machine-learning-and-par/

- http://at.yorku.ca/c/b/p/g/30.htm

- https://mat.univie.ac.at/~grohs/

- https://skymind.ai/ebook/Skymind_The_Math_Behind_Neural_Networks.pdf

- http://cmsa.fas.harvard.edu/geometric-analysis-ai/

- https://github.com/markovmodel/deeptime

- https://omar-florez.github.io/scratch_mlp/

- https://joanbruna.github.io/MathsDL-spring19/

- https://github.com/isikdogan/deep_learning_tutorials

- https://www.brown.edu/research/projects/crunch/machine-learning-x-seminars

- Deep Learning: Theory & Practice

- https://www.math.ias.edu/wtdl

- https://www.ml.tu-berlin.de/menue/mitglieder/klaus-robert_mueller/

- https://www-m15.ma.tum.de/Allgemeines/MathFounNN

- https://www.math.purdue.edu/~buzzard/MA598-Spring2019/index.shtml

- http://mathematics-in-europe.eu/?p=801

- Discrete Mathematics of Neural Networks: Selected Topics

- https://cims.nyu.edu/~bruna/

- https://www.pims.math.ca/scientific-event/190722-pcssdlcm

- Deep Learning for Image Analysis (EMBL course)

- http://voigtlaender.xyz/

- http://www.mit.edu/~9.520/fall19/

- Applied functional analysis (angewandte Funktionalanalysis)

- https://www.math.ucla.edu/applied/cam

- Numerical Methods for Deep Learning

- http://www.mathcs.emory.edu/~lruthot/

- Automatic Differentiation of Parallelised Convolutional Neural Networks - Lessons from Adjoint PDE Solvers

- https://raoyongming.github.io/

- https://dionisos.wp.imt.fr/

- https://ir.library.louisville.edu/cgi/viewcontent.cgi?article=2227&context=etd

- https://papers.nips.cc/paper/6857-nonlinear-random-matrix-theory-for-deep-learning.pdf

- https://web.stanford.edu/~yplu/DynamicOCNN.pdf

- https://zhuanlan.zhihu.com/p/71747175

- https://web.stanford.edu/~yplu/

- https://web.stanford.edu/~yplu/project.html

- A Flexible Optimal Control Framework for Efficient Training of Deep Neural Networks

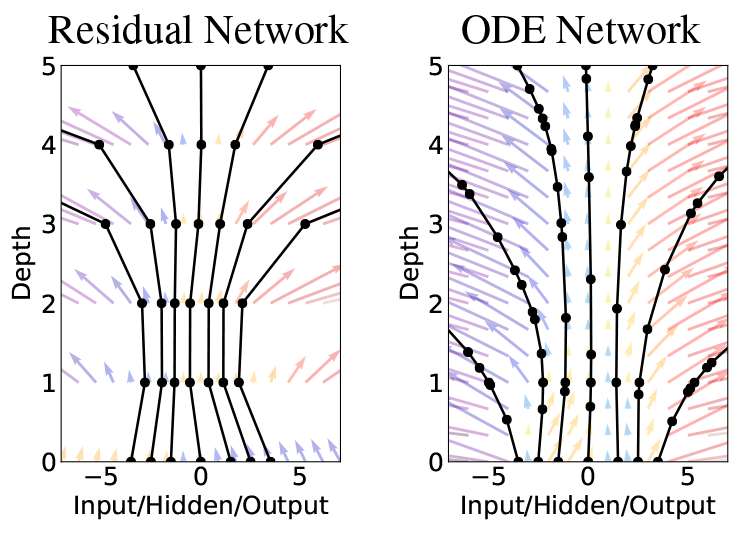

- Neural Networks as Ordinary Differential Equations

- Bridging Deep Neural Networks and Differential Equations for Image Analysis and Beyond

- Deep learning for universal linear embeddings of nonlinear dynamics

- Exact solutions to the nonlinear dynamics of learning in deep linear neural networks

- Neural Ordinary Differential Equations

- NeuPDE: Neural Network Based Ordinary and Partial Differential Equations for Modeling Time-Dependent Data

- Neural Ordinary Differential Equations and Adversarial Attacks

- Neural Dynamics and Computation Lab

- https://deeplearning-math.github.io/

- https://arxiv.org/abs/1901.02220

- http://helper.ipam.ucla.edu/publications/dlt2018/dlt2018_14936.pdf

- https://www.math.tu-berlin.de/fileadmin/i26_fg-kutyniok/Petersen/DGD_Approximation_Theory.pdf

- https://arxiv.org/abs/1804.04272

- https://deepai.org/machine-learning/researcher/weinan-e

- https://deepxde.readthedocs.io/en/latest/

- https://github.com/IBM/pde-deep-learning

- https://github.com/ZichaoLong/PDE-Net

- https://github.com/amkatrutsa/DeepPDE

- https://github.com/maziarraissi/DeepHPMs

- https://maziarraissi.github.io/DeepHPMs/

- DGM: A deep learning algorithm for solving partial differential equations

- A Theoretical Analysis of Deep Neural Networks and Parametric PDEs

- NeuralNetDiffEq.jl: A Neural Network solver for ODEs

- https://arxiv.org/abs/1806.07366

- https://rkevingibson.github.io/blog/neural-networks-as-ordinary-differential-equations/
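Several of the ODE-related entries in this list (for example "Neural Ordinary Differential Equations") build on one observation. A residual block x ← x + h·f(x) is exactly one explicit (forward) Euler step for dx/dt = f(x). Stacking more blocks with a smaller step h = T/depth therefore approximates the ODE flow at time T.

The sketch below is a minimal illustration, not taken from any of the linked works, and it makes three assumptions:

- the state is a scalar;
- the "layer" f(x) = a·x is a fixed linear map rather than a trained network, so the exact solution x0·exp(a·T) is known;
- the helper names `residual_forward` and `euler_errors` are hypothetical.

```python
import math
from typing import Callable, List


def residual_forward(x0: float, f: Callable[[float], float], depth: int, T: float = 1.0) -> float:
    """Apply `depth` residual blocks x <- x + h * f(x) with step h = T / depth.

    Each block is one explicit (forward) Euler step for dx/dt = f(x).
    """
    h = T / depth
    x = x0
    for _ in range(depth):
        x = x + h * f(x)
    return x


def euler_errors(a: float, x0: float, depths: List[int], T: float = 1.0) -> List[float]:
    """Error of the residual stack against the exact ODE solution, per depth.

    Assumption: f(x) = a * x stands in for a learned layer, so that
    dx/dt = a * x has the closed-form solution x(T) = x0 * exp(a * T).
    """
    exact = x0 * math.exp(a * T)
    return [abs(residual_forward(x0, lambda x: a * x, n, T) - exact) for n in depths]


if __name__ == "__main__":
    depths = [1, 10, 100, 1000]
    for n, err in zip(depths, euler_errors(-2.0, 1.0, depths)):
        print(f"depth={n:5d}  |x_N - x(T)| = {err:.2e}")
```

With a = -2, the error shrinks roughly in proportion to 1/depth as blocks are added. This is the first-order convergence expected of forward Euler, and it is the sense in which a very deep residual network can be read as a discretised ODE.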